1. Overview

This blog is for system administrators and application developers who want to learn how to deploy and manage Red Hat OpenShift Container Storage 4 (OCS). It shows how to use OpenShift Container Platform (OCP) 4.2.14+ and the OCS operator to deploy Ceph and the Multi-Cloud Object Gateway as a persistent storage solution for OCP workloads. If you do not have an OpenShift test cluster, you can deploy OpenShift 4 from the OpenShift 4 Deployment page by following the instructions for AWS Installer-Provisioned Infrastructure (IPI).

> Note: If you are deploying OpenShift 4 on VMware or other User-Provisioned Infrastructure (UPI), skip the steps that create machines and machinesets, because these resources are not currently available for UPI. To complete all of the instructions in this lab on UPI (that is, not on AWS), you need to pre-provision six OCP worker nodes. To deploy OCS, OCP worker nodes need a minimum of 16 CPUs and 64 GB of memory available.

1.1. In this blog you will learn how to:

- Configure and deploy containerized Ceph and NooBaa

- Validate deployment of containerized Ceph Nautilus and NooBaa

- Deploy the Rook toolbox to run Ceph and RADOS commands

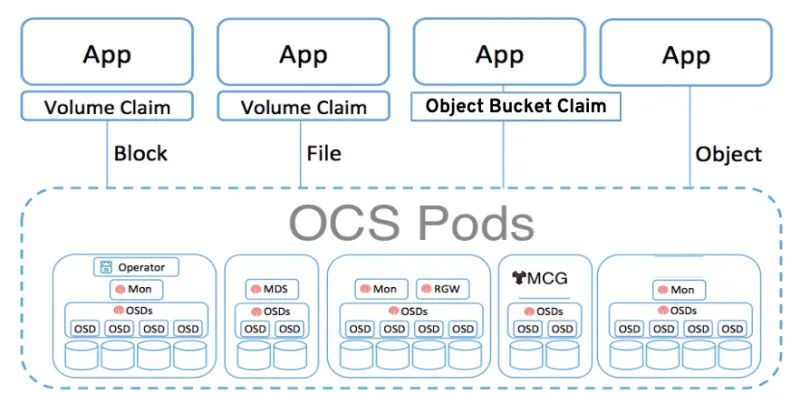

Figure 1. OpenShift Container Storage components

2. Deploy your storage backend using the OCS operator

2.1. Scale OCP cluster and add 3 new nodes

In this section, you first validate that the OCP environment has 3 master nodes and 2 or 3 worker nodes, and then increase the cluster size by adding 3 more worker nodes for OCS resources. The NAMEs of your OCP nodes will differ from those shown below.

oc get nodes

Example output:

NAME STATUS ROLES AGE VERSION

ip-10-0-135-157.ec2.internal Ready worker 38m v1.14.6+c07e432da

ip-10-0-138-253.ec2.internal Ready master 42m v1.14.6+c07e432da

ip-10-0-157-189.ec2.internal Ready master 42m v1.14.6+c07e432da

ip-10-0-159-240.ec2.internal Ready worker 38m v1.14.6+c07e432da

ip-10-0-164-70.ec2.internal Ready master 42m v1.14.6+c07e432da

Now you are going to add 3 more OCP compute nodes to the cluster using machinesets.

oc get machinesets -n openshift-machine-api

This shows the existing machinesets that created the worker nodes already in the cluster. There is one machineset per AWS availability zone (us-east-1a through us-east-1f in this example); only those with a DESIRED count greater than 0 have created workers. The NAMEs of your machinesets will differ from those shown below.

NAME DESIRED CURRENT READY AVAILABLE AGE

cluster-ocs-79cf-lj8wq-worker-us-east-1a 1 1 1 1 43m

cluster-ocs-79cf-lj8wq-worker-us-east-1b 1 1 1 1 43m

cluster-ocs-79cf-lj8wq-worker-us-east-1c 0 0 43m

cluster-ocs-79cf-lj8wq-worker-us-east-1d 0 0 43m

cluster-ocs-79cf-lj8wq-worker-us-east-1e 0 0 43m

cluster-ocs-79cf-lj8wq-worker-us-east-1f 0 0 43m

> Warning: Make sure you run the next step to find and use your CLUSTERID.

CLUSTERID=$(oc get machineset -n openshift-machine-api -o jsonpath='{.items[0].metadata.labels.machine\.openshift\.io/cluster-api-cluster}')

echo $CLUSTERID

curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/ocp4ocs4/cluster-workerocs.yaml | sed "s/CLUSTERID/$CLUSTERID/g" | oc apply -f -
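The pipeline above rewrites every literal CLUSTERID in the downloaded template before applying it, so an empty $CLUSTERID would silently produce malformed machineset names. The minimal guard sketched below assumes the template uses the bare placeholder CLUSTERID, exactly as the sed expression implies; it refuses to render when the ID is empty and lets you preview the YAML before applying it. render_with_clusterid is a hypothetical helper name, not part of oc or the ocs-training repository.

```shell
# Hypothetical helper: substitute the cluster ID into a template read from stdin.
# Fails (and prints nothing) when the ID is empty, instead of emitting broken names.
render_with_clusterid() {
  local cluster_id="$1"
  if [ -z "$cluster_id" ]; then
    echo "error: CLUSTERID is empty; re-run the oc get machineset command" >&2
    return 1
  fi
  # Same substitution as the lab pipeline: replace every occurrence of CLUSTERID.
  sed "s/CLUSTERID/${cluster_id}/g"
}

# Usage (requires a logged-in oc session): preview first, then apply.
#   URL=https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/ocp4ocs4/cluster-workerocs.yaml
#   curl -s "$URL" | render_with_clusterid "$CLUSTERID" | less
#   curl -s "$URL" | render_with_clusterid "$CLUSTERID" | oc apply -f -
```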

Check that the new machines were created.

oc get machines -n openshift-machine-api

They may stay in the pending state for some time, so repeat the command above until they reach the running STATE. The NAMEs of your machines will differ from those shown below.

NAME STATE TYPE REGION ZONE AGE

cluster-ocs-79cf-lj8wq-master-0 running m4.xlarge us-east-1 us-east-1a 54m

cluster-ocs-79cf-lj8wq-master-1 running m4.xlarge us-east-1 us-east-1b 54m

cluster-ocs-79cf-lj8wq-master-2 running m4.xlarge us-east-1 us-east-1c 54m

cluster-ocs-79cf-lj8wq-worker-us-east-1a-xscbs running m4.4xlarge us-east-1 us-east-1a 54m

cluster-ocs-79cf-lj8wq-worker-us-east-1b-qcmrl running m4.4xlarge us-east-1 us-east-1b 54m

cluster-ocs-79cf-lj8wq-workerocs-us-east-1a-xmd9q running m4.4xlarge us-east-1 us-east-1a 46s

cluster-ocs-79cf-lj8wq-workerocs-us-east-1b-jh6k4 running m4.4xlarge us-east-1 us-east-1b 46s

cluster-ocs-79cf-lj8wq-workerocs-us-east-1c-649kq running m4.4xlarge us-east-1 us-east-1c 45s

The workerocs machines also use the AWS EC2 instance type m4.4xlarge, which follows the recommended instance sizing for OCS: 16 vCPUs and 64 GB of memory.
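Rather than re-running the command by hand, the "wait until running" check can be scripted. The sketch below assumes the column layout shown above (NAME STATE TYPE ...) read with --no-headers; newer oc releases may print a PHASE column with values such as Running instead, which the case-insensitive comparison also accepts. workerocs_machines_running is a hypothetical helper.

```shell
# Hypothetical helper: succeed only when every workerocs machine is running.
# Reads `oc get machines --no-headers` output on stdin; field 2 is STATE.
workerocs_machines_running() {
  awk '
    $1 ~ /-workerocs-/ {
      total++
      if (tolower($2) == "running") up++
    }
    END {
      printf "%d/%d workerocs machines running\n", up, total
      if (total > 0 && up == total) exit 0
      exit 1
    }'
}

# Usage:
#   oc get machines -n openshift-machine-api --no-headers | workerocs_machines_running
```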

Next, check whether the new machines have been added to the OCP cluster.

watch oc get machinesets -n openshift-machine-api

This step can take more than 5 minutes. Before you proceed, the output must look like the example below: every new workerocs machineset must show an integer (in this case 1) in the READY and AVAILABLE columns. The NAMEs of your machinesets will differ from those shown below.

NAME DESIRED CURRENT READY AVAILABLE AGE

cluster-ocs-79cf-lj8wq-worker-us-east-1a 1 1 1 1 62m

cluster-ocs-79cf-lj8wq-worker-us-east-1b 1 1 1 1 62m

cluster-ocs-79cf-lj8wq-worker-us-east-1c 0 0 62m

cluster-ocs-79cf-lj8wq-worker-us-east-1d 0 0 62m

cluster-ocs-79cf-lj8wq-worker-us-east-1e 0 0 62m

cluster-ocs-79cf-lj8wq-worker-us-east-1f 0 0 62m

cluster-ocs-79cf-lj8wq-workerocs-us-east-1a 1 1 1 1 8m26s

cluster-ocs-79cf-lj8wq-workerocs-us-east-1b 1 1 1 1 8m26s

cluster-ocs-79cf-lj8wq-workerocs-us-east-1c 1 1 1 1 8m25s

You can exit by pressing Ctrl+C.
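The readiness rule described above (an integer in READY and AVAILABLE for every workerocs row) can also be checked non-interactively. In this sketch, which assumes the column order shown above, a row only counts as ready when all six columns are present and READY and AVAILABLE equal DESIRED; rows that are not ready print empty columns and therefore fewer fields. workerocs_machinesets_ready is a hypothetical helper.

```shell
# Hypothetical helper: check `oc get machinesets --no-headers` output on stdin.
# Columns: NAME DESIRED CURRENT READY AVAILABLE AGE
workerocs_machinesets_ready() {
  awk '
    $1 ~ /-workerocs-/ {
      total++
      if (NF == 6 && $2 > 0 && $4 == $2 && $5 == $2) ready++
    }
    END {
      printf "%d/%d workerocs machinesets ready\n", ready, total
      if (total > 0 && ready == total) exit 0
      exit 1
    }'
}

# Usage: poll every 30 seconds until all workerocs machinesets are ready.
#   until oc get machinesets -n openshift-machine-api --no-headers | workerocs_machinesets_ready; do
#     sleep 30
#   done
```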

Now check that you have 3 new OCP worker nodes. The NAMEs of your OCP nodes will differ from those shown below.

oc get nodes -l node-role.kubernetes.io/worker

Example output:

NAME STATUS ROLES AGE VERSION

ip-10-0-131-236.ec2.internal Ready worker 4m32s v1.14.6+c07e432da

ip-10-0-135-157.ec2.internal Ready worker 60m v1.14.6+c07e432da

ip-10-0-145-58.ec2.internal Ready worker 4m28s v1.14.6+c07e432da

ip-10-0-159-240.ec2.internal Ready worker 60m v1.14.6+c07e432da

ip-10-0-164-216.ec2.internal Ready worker 4m35s v1.14.6+c07e432da
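Counting the Ready workers gives a quick pass/fail for this step: in this example you should see 5 (the 2 original workers plus the 3 new OCS workers). The sketch assumes the NAME STATUS ROLES AGE VERSION layout shown above and matches STATUS exactly, so cordoned nodes (Ready,SchedulingDisabled) are not counted. count_ready_workers is a hypothetical helper.

```shell
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready".
count_ready_workers() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage (expect 5 in this lab):
#   oc get nodes -l node-role.kubernetes.io/worker --no-headers | count_ready_workers
```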

2.2. Installing the OCS operator

In this section you will use three of the OCP 4 worker nodes to deploy OCS 4 with the OCS Operator from OperatorHub. The following will be installed:

- Groups and sources for the OCS operators

- An OCS subscription

- All OCS resources (Operators, Ceph pods, NooBaa pods, StorageClasses)

Start by creating the openshift-storage namespace.

oc create namespace openshift-storage

You must add the monitoring label to this namespace. It is required to get Prometheus metrics and alerts for the OCP storage dashboards. To label the openshift-storage namespace, use the following command:

oc label namespace openshift-storage "openshift.io/cluster-monitoring=true"
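If you prefer a declarative setup that can be re-applied, the two commands above can be expressed as a single Namespace manifest carrying the same label. This is a sketch, not a file from the OCS deliverables; ocs_namespace_manifest is a hypothetical helper that only prints the YAML.

```shell
# Hypothetical helper: print a v1 Namespace equivalent to
# `oc create namespace openshift-storage` plus the cluster-monitoring label.
ocs_namespace_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-storage
  labels:
    openshift.io/cluster-monitoring: "true"
EOF
}

# Usage (can be re-run; apply updates the existing namespace):
#   ocs_namespace_manifest | oc apply -f -
```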

> Note: The manifest file below will not be needed once OCS 4 is released.

To apply this manifest, execute the following:

oc apply -f https://gist.githubusercontent.com/netzzer/207e00a1cbc86006652a100d28be9987/raw/ea441f97a1bfdf476d756bf986b8038ceb086076/deploy-with-olm.yaml

Now switch over to your OpenShift Web Console. To find its URL, run the command below to get the OCP 4 console route, then open that URL in a browser tab. Log in to the OCP 4 console with the same admin username and password you used to log in with the oc client.

oc get -n openshift-console route console

Once you are logged in, navigate to the OperatorHub menu.

Figure 2. OCP OperatorHub

Now type container storage in the Filter by keyword… box.

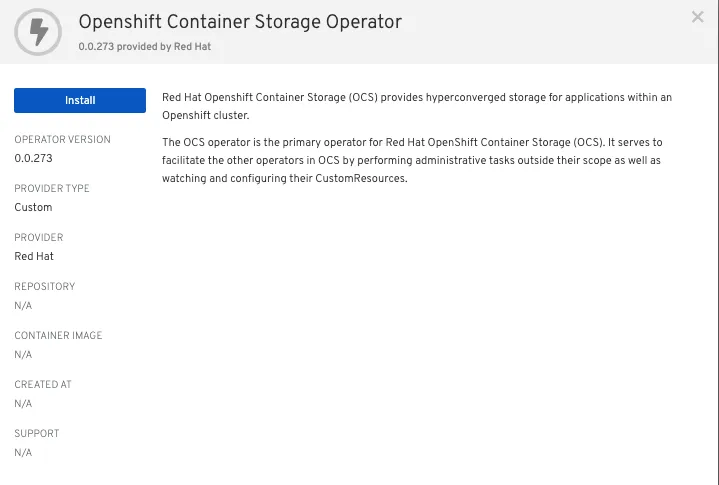

Figure 3. OCP OperatorHub filter on OpenShift Container Storage Operator

Select OpenShift Container Storage Operator and then select Install.

Figure 4. OCP OperatorHub Install OpenShift Container Storage

On the next screen, make sure the settings match the figure. Change the installation mode to A specific namespace on the cluster and choose the openshift-storage namespace. Click Subscribe.

Figure 5. OCP Subscribe to OpenShift Container Storage

Now you can go back to your terminal window to check the progress of the installation.

watch oc -n openshift-storage get csv

Example output:

NAME DISPLAY VERSION REPLACES PHASE

ocs-operator.v0.0.284 OpenShift Container Storage 0.0.284 Succeeded

You can exit by pressing Ctrl+C.

The resource name csv is short for clusterserviceversions.operators.coreos.com.

> Caution: Please wait until the operator PHASE changes to Succeeded. This indicates that the operator was installed successfully. Reaching this state can take several minutes.
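Instead of watching the output, you can poll the PHASE field until it reaches Succeeded. The sketch reads each CSV's status.phase through oc's JSONPath output and wraps the check in a generic retry loop. wait_until and csv_succeeded are hypothetical helper names, and the 15-minute timeout in the usage line is an arbitrary choice.

```shell
# Hypothetical helper: retry a command every <interval> seconds until it
# succeeds, giving up after roughly <timeout> seconds.
wait_until() {
  local timeout_s="$1" interval_s="$2" waited=0
  shift 2
  until "$@"; do
    if [ "$waited" -ge "$timeout_s" ]; then
      echo "timed out after ${timeout_s}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval_s"
    waited=$((waited + interval_s))
  done
}

# Hypothetical helper: true when every CSV in openshift-storage reports Succeeded.
csv_succeeded() {
  local phases p
  phases=$(oc -n openshift-storage get csv -o jsonpath='{.items[*].status.phase}') || return 1
  [ -n "$phases" ] || return 1   # no CSVs yet
  for p in $phases; do           # unquoted on purpose: one word per CSV
    [ "$p" = "Succeeded" ] || return 1
  done
  return 0
}

# Usage: check every 20 seconds for up to 15 minutes.
#   wait_until 900 20 csv_succeeded
```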

You will now also see some new operator pods in the new openshift-storage namespace:

oc -n openshift-storage get pods

Example output:

NAME READY STATUS RESTARTS AGE

noobaa-operator-7c55776bf9-kbcjp 1/1 Running 0 3m16s

ocs-operator-967957d84-9lc76 1/1 Running 0 3m16s

rook-ceph-operator-8444cfdc4c-9jm8p 1/1 Running 0 3m16s

Now switch back to your OpenShift Web Console for the remainder of the OCS 4 installation.

Navigate to the Operators menu on the left and select Installed Operators. Make sure the selected project is set to openshift-storage. What you see should be similar to the following example:

Figure 6. Installed operators: 1) Make sure you are in the right project; 2) Check Operator status; 3) Click on OpenShift Container Storage Operator

Click on OpenShift Container Storage Operator to get to the OCS configuration screen.

Figure 7. OCS configuration screen

At the top of the OCS configuration screen, scroll over to Storage cluster and click Create OCS Cluster Service. If you do not see Create OCS Cluster Service, refresh your browser window.

Figure 8. OCS Create Storage Cluster

A dialog box will come up next.

Figure 9. OCS create a new storage cluster

> Caution: Make sure to select three workers in different availability zones using instructions below.

You can find the appropriate worker nodes in your OCP 4 cluster by searching for the node label role=storage-node.

oc get nodes --show-labels | grep storage-node |cut -d' ' -f1

In the dialog box, select the three nodes returned by the command above by marking each with a checkmark, and then click the Create button below the dialog box.

> Note: If your worker nodes do not have the label role=storage-node, select 3 worker nodes that meet the requirements for OCS nodes (16 vCPUs and 64 GB of memory) and are in different availability zones. It is good practice to add a unique label, before this step, to the OCP nodes that will be used for the Storage Cluster, so they are easy to find in the list of OCP nodes. In this lab, that was done by adding the label role=storage-node to the machineset YAML files you used earlier to create the new OCS worker nodes.
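Because the nodes must be in different availability zones, it can help to print each candidate node's zone before selecting it. The sketch uses oc's -L flag to add a label column; the zone label name failure-domain.beta.kubernetes.io/zone is an assumption based on the Kubernetes 1.14 nodes in this lab (newer clusters use topology.kubernetes.io/zone). distinct_zone_count is a hypothetical helper.

```shell
# Hypothetical helper: count distinct zones in `oc get nodes -L <zone-label>` output.
# The zone is the last column; rows where the label is missing have only 5 fields
# (NAME STATUS ROLES AGE VERSION) and are ignored.
distinct_zone_count() {
  awk 'NF >= 6 { zones[$NF] = 1 } END { n = 0; for (z in zones) n++; print n }'
}

# Usage: inspect the zones, then expect 3 distinct values.
#   oc get nodes -l role=storage-node -L failure-domain.beta.kubernetes.io/zone
#   oc get nodes -l role=storage-node -L failure-domain.beta.kubernetes.io/zone --no-headers | distinct_zone_count
```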

In the background, this starts many new pods in the openshift-storage namespace, as you can see from the CLI:

oc -n openshift-storage get pods

Example output of an in-progress installation of the OCS storage cluster:

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-72n5r 3/3 Running 0 52s

csi-cephfsplugin-cgc4p 3/3 Running 0 52s

csi-cephfsplugin-ksp9j 3/3 Running 0 52s

csi-cephfsplugin-provisioner-849895689c-5mcvm 4/4 Running 0 52s

csi-cephfsplugin-provisioner-849895689c-k784q 4/4 Running 0 52s

csi-cephfsplugin-sfwwg 3/3 Running 0 52s

csi-cephfsplugin-vmv77 3/3 Running 0 52s

csi-rbdplugin-56pwz 3/3 Running 0 52s

csi-rbdplugin-9cwwt 3/3 Running 0 52s

csi-rbdplugin-pmw5g 3/3 Running 0 52s

csi-rbdplugin-provisioner-58d79d7895-69vx9 4/4 Running 0 52s

csi-rbdplugin-provisioner-58d79d7895-mkr78 4/4 Running 0 52s

csi-rbdplugin-pvn82 3/3 Running 0 52s

csi-rbdplugin-zdz5c 3/3 Running 0 52s

noobaa-operator-7ffd9dc86-nmfwm 1/1 Running 0 40m

ocs-operator-9694fd887-mwmsn 0/1 Running 0 40m

rook-ceph-detect-version-544tg 0/1 Terminating 0 46s

rook-ceph-mon-a-canary-6874bdb7-rjv95 0/1 ContainerCreating 0 14s

rook-ceph-mon-b-canary-5d5b47ccfd-wpvnp 0/1 ContainerCreating 0 8s

rook-ceph-mon-c-canary-56969776fc-xgkvw 0/1 ContainerCreating 0 3s

rook-ceph-operator-5dc5f9d7fb-zd7qs 1/1 Running 0 40m

You can also watch the deployment in the OpenShift Web Console by going back to the OpenShift Container Storage Operator screen and selecting All instances.

Wait until all pods are marked as Running (or Completed, for the rook-ceph-osd-prepare pods) in the CLI, or until all instances shown below have the Ready status in the Web Console. Some instances may stay in Unknown status, which is not a concern if your Ready status matches the following figure:

Figure 10. OCS instance overview after cluster install is finished

oc -n openshift-storage get pods

Example output when the cluster installation is finished:

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-72n5r 3/3 Running 0 10m

csi-cephfsplugin-cgc4p 3/3 Running 0 10m

csi-cephfsplugin-ksp9j 3/3 Running 0 10m

csi-cephfsplugin-provisioner-849895689c-5mcvm 4/4 Running 0 10m

csi-cephfsplugin-provisioner-849895689c-k784q 4/4 Running 0 10m

csi-cephfsplugin-sfwwg 3/3 Running 0 10m

csi-cephfsplugin-vmv77 3/3 Running 0 10m

csi-rbdplugin-56pwz 3/3 Running 0 10m

csi-rbdplugin-9cwwt 3/3 Running 0 10m

csi-rbdplugin-pmw5g 3/3 Running 0 10m

csi-rbdplugin-provisioner-58d79d7895-69vx9 4/4 Running 0 10m

csi-rbdplugin-provisioner-58d79d7895-mkr78 4/4 Running 0 10m

csi-rbdplugin-pvn82 3/3 Running 0 10m

csi-rbdplugin-zdz5c 3/3 Running 0 10m

noobaa-core-0 2/2 Running 0 6m3s

noobaa-operator-7ffd9dc86-nmfwm 1/1 Running 0 49m

ocs-operator-9694fd887-mwmsn 1/1 Running 0 49m

rook-ceph-drain-canary-ip-10-0-136-247.ec2.internal-55c658klgqg 1/1 Running 0 6m10s

rook-ceph-drain-canary-ip-10-0-157-178.ec2.internal-758658dr4jw 1/1 Running 0 6m26s

rook-ceph-drain-canary-ip-10-0-168-170.ec2.internal-5b499cfc6xl 1/1 Running 0 6m27s

rook-ceph-mds-ocs-storagecluster-cephfilesystem-a-8568c68dmzctp 1/1 Running 0 5m57s

rook-ceph-mds-ocs-storagecluster-cephfilesystem-b-77b78d-6jhcw 1/1 Running 0 5m57s

rook-ceph-mgr-a-7767f6cf56-2s6mt 1/1 Running 0 7m24s

rook-ceph-mon-a-65b6ffb7f4-57gds 1/1 Running 0 8m50s

rook-ceph-mon-b-6698bf6d5-zml6j 1/1 Running 0 8m25s

rook-ceph-mon-c-55c8f47456-7x455 1/1 Running 0 7m54s

rook-ceph-operator-5dc5f9d7fb-zd7qs 1/1 Running 0 49m

rook-ceph-osd-0-7fc4dd559b-kgvgb 1/1 Running 0 6m27s

rook-ceph-osd-1-9d9dc8f4b-kh8qr 1/1 Running 0 6m27s

rook-ceph-osd-2-559fb96fcb-zc97d 1/1 Running 0 6m10s

rook-ceph-osd-prepare-ocs-deviceset-0-0-g9j2d-wvqj5 0/1 Completed 0 7m2s

rook-ceph-osd-prepare-ocs-deviceset-1-0-h59x8-l5wjs 0/1 Completed 0 7m2s

rook-ceph-osd-prepare-ocs-deviceset-2-0-74spm-tdlb6 0/1 Completed 0 7m1s
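A finished install, like the pod list above, has every pod either Running with all containers ready or Completed (the rook-ceph-osd-prepare pods). The sketch below prints any pod that does not meet that rule, assuming the NAME READY STATUS RESTARTS AGE columns shown above and output without headers; unsettled_pods is a hypothetical helper.

```shell
# Hypothetical helper: print pods that are neither Completed nor fully ready.
# READY is "x/y"; a Running pod is settled only when x == y.
unsettled_pods() {
  awk '{
    split($2, r, "/")
    if ($3 == "Completed") next
    if ($3 == "Running" && r[1] == r[2]) next
    print $1, $2, $3
  }'
}

# Usage: prints nothing once the install has settled.
#   oc -n openshift-storage get pods --no-headers | unsettled_pods
```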

2.3. Getting to know the Storage Dashboards

You can now also check the status of your storage cluster with the OCS-specific dashboards included in your OpenShift Web Console. To reach them, click Home in the left navigation bar, select Dashboards, and then click Persistent Storage in the top navigation bar of the content page.

> Note: If you just finished your OCS 4 deployment it could take 5-10 minutes for your Dashboards to fully populate.

Figure 11. OCS Dashboard after successful backing storage installation

| # | Panel | Description |
|---|---|---|
| 1 | Health | Quick overview of the general health of the storage cluster |
| 2 | Details | Overview of the deployed storage cluster version and backend provider |
| 3 | Inventory | List of all the resources that are used and offered by the storage system |
| 4 | Events | Live overview of all the changes affecting the storage cluster |
| 5 | Utilization | Overview of the storage cluster usage and performance |

OCS also ships with a dashboard for the Object Store service. From the Dashboards menu, click Object Service in the top navigation bar of the content page.

Figure 12. OCS Multi-Cloud-Gateway Dashboard after successful installation

| # | Panel | Description |
|---|---|---|
| 1 | Health | Quick overview of the general health of the Multi-Cloud-Gateway |
| 2 | Details | Overview of the deployed MCG version and backend provider, including a link to the MCG Dashboard |
| 3 | Buckets | List of all the ObjectBuckets that are offered and the ObjectBucketClaims connected to them |
| 4 | Resource Providers | List of configured Resource Providers that are available as backing storage in the MCG |

Once everything is healthy, you can use the three new StorageClasses created during the OCS 4 install:

- ocs-storagecluster-ceph-rbd

- ocs-storagecluster-cephfs

- openshift-storage.noobaa.io

You can see these three StorageClasses in the OpenShift Web Console by expanding the Storage menu in the left navigation bar and selecting Storage Classes. You can also run the command below:

oc -n openshift-storage get sc

Please make sure the three storage classes are available in your cluster before proceeding.
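The presence check can also be scripted. StorageClasses are cluster-scoped, so the sketch lists them with oc get sc -o name (which prefixes each name with its resource type) and reports any of the three OCS classes that are missing. missing_ocs_storageclasses is a hypothetical helper.

```shell
# Hypothetical helper: read `oc get sc -o name` output on stdin and print
# each expected OCS StorageClass that is not present.
missing_ocs_storageclasses() {
  local have want
  # Strip the "storageclass.storage.k8s.io/" style prefix, keeping bare names.
  have=$(sed 's|^.*/||')
  for want in ocs-storagecluster-ceph-rbd ocs-storagecluster-cephfs openshift-storage.noobaa.io; do
    # -x: whole-line match; -F: treat the name literally (dots are not regex)
    printf '%s\n' "$have" | grep -qxF "$want" || echo "$want"
  done
}

# Usage: prints nothing when all three exist.
#   oc get sc -o name | missing_ocs_storageclasses
```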

> Note: The NooBaa pod uses the ocs-storagecluster-ceph-rbd storage class to create a PVC that is mounted to its db container.

2.4. Using the Rook-Ceph toolbox to check on the Ceph backing storage

Since the Rook-Ceph toolbox is not shipped with OCS, we need to deploy it manually. For this, we can leverage the upstream toolbox.yaml file, but we need to modify the namespace as shown below.

curl -s https://raw.githubusercontent.com/rook/rook/release-1.1/cluster/examples/kubernetes/ceph/toolbox.yaml | sed 's/namespace: rook-ceph/namespace: openshift-storage/g'| oc apply -f -

After the rook-ceph-tools pod is Running, you can access the toolbox like this:

TOOLS_POD=$(oc get pods -n openshift-storage -l app=rook-ceph-tools -o name)

oc rsh -n openshift-storage $TOOLS_POD

Once inside the toolbox, try out the following Ceph commands:

- ceph status

- ceph osd status

- ceph osd tree

- ceph df

- rados df

- ceph versions
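These commands can also be run without opening an interactive shell, because oc rsh accepts a command after the pod name. The sketch loops over the six commands for the pod stored in $TOOLS_POD; run_toolbox_checks is a hypothetical helper.

```shell
# Hypothetical helper: run each toolbox command in the given pod and label its output.
run_toolbox_checks() {
  local pod="$1" cmd
  for cmd in "ceph status" "ceph osd status" "ceph osd tree" "ceph df" "rados df" "ceph versions"; do
    echo "=== $cmd"
    # $cmd is left unquoted on purpose so it splits into program and arguments.
    oc rsh -n openshift-storage "$pod" $cmd
  done
}

# Usage (TOOLS_POD set as shown above):
#   run_toolbox_checks "$TOOLS_POD"
```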

Example output:

sh-4.2# ceph status

cluster:

id: 786dbab2-ae4f-4352-8d83-5e27c6a4f341

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 105m)

mgr: a(active, since 104m)

mds: ocs-storagecluster-cephfilesystem:1 {0=ocs-storagecluster-cephfilesystem-a=up:active} 1 up:standby-replay

osd: 3 osds: 3 up (since 104m), 3 in (since 104m)

data:

pools: 3 pools, 24 pgs

objects: 100 objects, 114 MiB

usage: 3.2 GiB used, 3.0 TiB / 3.0 TiB avail

pgs: 24 active+clean

io: client: 1.2 KiB/s rd, 39 KiB/s wr, 2 op/s rd, 3 op/s wr

You can exit the toolbox by either pressing Ctrl+D or by executing exit.
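For scripted checks, the health line of the ceph status output is usually enough. The sketch below extracts it and succeeds only on HEALTH_OK. It strips carriage returns in case oc rsh allocates a terminal; that is an assumption about your environment. ceph_health_ok is a hypothetical helper.

```shell
# Hypothetical helper: read `ceph status` output on stdin, print the health
# value, and exit 0 only for HEALTH_OK.
ceph_health_ok() {
  tr -d '\r' | awk '
    $1 == "health:" { h = $2 }
    END {
      if (h == "") h = "unknown"
      print h
      if (h == "HEALTH_OK") exit 0
      exit 1
    }'
}

# Usage (from your workstation, not inside the toolbox):
#   oc rsh -n openshift-storage "$TOOLS_POD" ceph status | ceph_health_ok
```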

Resources and Feedback

To find out more about OpenShift Container Storage or to take a test drive, visit https://www.openshift.com/products/container-storage/.

If you would like to learn more about what the OpenShift Container Storage team is up to or provide feedback on any of the new 4.2 features, take this brief 3-minute survey.

About the author

Red Hatter since 2018, technology historian and founder of The Museum of Art and Digital Entertainment. Two decades of journalism mixed with technology expertise, storytelling and oodles of computing experience from inception to ewaste recycling. I have taught or had my work used in classes at USF, SFSU, AAU, UC Law Hastings and Harvard Law.

I have worked with the EFF, Stanford, MIT, and Archive.org to brief the US Copyright Office and change US copyright law. We won multiple exemptions to the DMCA, accepted and implemented by the Librarian of Congress. My writings have appeared in Wired, Bloomberg, Make Magazine, SD Times, The Austin American Statesman, The Atlanta Journal Constitution and many other outlets.

I have been written about by the Wall Street Journal, The Washington Post, Wired and The Atlantic. I have been called "The Gertrude Stein of Video Games," an honor I accept, as I live less than a mile from her childhood home in Oakland, CA. I was project lead on the first successful institutional preservation and rebooting of the first massively multiplayer game, Habitat, for the C64, from 1986: https://neohabitat.org . I've consulted and collaborated with the NY MOMA, the Oakland Museum of California, Cisco, Semtech, Twilio, Game Developers Conference, NGNX, the Anti-Defamation League, the Library of Congress and the Oakland Public Library System on projects, contracts, and exhibitions.
