With Red Hat OpenStack Platform 13 released so recently, I feel it's time to talk about real-time support in Red Hat OpenStack Platform. At the end of the day, it's one of the (many) major features in the new release and something we’re excited to put in the hands of customers.

Real-time is not something new in Linux. It was originally designed to guarantee that a real-time task executes within a bounded amount of time, with the rest of the system staying out of its way, which made it suitable for industrial processes that need to respect operational deadlines. Nowadays it can be considered for other use cases where meeting those deadlines is a must. A common example is the telco world, where consistent network latency is sometimes mandatory. For example, an RTOS would enable a real-time telco application to always execute all of its packet-processing functions within a certain period.

At the kernel and OS level, real-time has been around for a very long time. Red Hat, since the Red Hat Enterprise Linux 6 days, has supported the Linux PREEMPT_RT (massive) patch. But being able to support it in OpenStack is another story, and now we can. Let’s take a look at where we’re at now.

A bit of history

About a year ago I reverse-engineered Tuned CPU-partitioning (Tuning for Zero Packet Loss in Red Hat OpenStack Platform). Back in the old "OVS+DPDK" days, most people applied some of those techniques, but definitely not all of them at once.

In my opinion, everything was kickstarted by Intel. Around late 2013 or early 2014, OpenStack Icehouse was just around the corner. At that time OpenStack was in full force and everyone was talking about it (just like Linux containers yesterday and serverless today). There was real momentum: not just a few companies but the whole industry was focused on one topic in a true open source spirit. In that context, Intel issued a reference architecture, "Intel Open Network Platform Server Release 1.2: Driving Network Transformation" (I couldn't find the 1.0 version online anymore, sorry about that).

Read the opening, keeping in mind that it was written five or six years ago: "Intel ONP Server is a reference architecture [...] to enable adoption of SDN and NFV solutions across telecommunications, cloud, and enterprise sectors. [...] open-source software stack [...] including: Data Plane Development Kit (DPDK), Open vSwitch*, OpenDaylight*, OpenStack*, and KVM*. It is a “better together” software". This was a masterpiece at the time.

The white papers that followed extended the original picture but the core components never changed.

Back to Tuned and Real-Time

In the original Intel documents, Real-Time was identified as a core component. Why is that? And what for? There is just one answer: deterministic results.

But let's start with the basics.

What's Real-Time?

First, in my own words: real-time is not about the lowest possible latency or the maximum possible throughput. Real-time is deterministic execution time, which means performing a task within a known, bounded time regardless of what any other process is doing.

Then, quoting RTWiki for a more professional definition: "real-time applications have operational deadlines between some triggering event and the application's response to that event. To meet these operational deadlines, programmers use real-time operating systems (RTOS) on which the maximum response time can be calculated or measured reliably for the given application and environment."
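
To make "deterministic" a bit more tangible, here is a minimal Python sketch of my own (not part of any Red Hat tooling) that sleeps for a fixed interval many times and records how late each wake-up is. The function name and the default interval are arbitrary choices for illustration, and Python itself adds overhead, so treat the numbers as a rough indication only.

```python
import time


def measure_wakeup_latency(interval_us=1000, loops=200):
    """Sleep `interval_us` repeatedly and record how late each wake-up is.

    Returns (min, avg, max) wake-up lateness in microseconds.
    """
    interval_ns = interval_us * 1000
    lateness_us = []
    for _ in range(loops):
        start = time.monotonic_ns()
        time.sleep(interval_us / 1_000_000)
        # Lateness = time that actually elapsed minus the time we asked for.
        late_ns = time.monotonic_ns() - start - interval_ns
        lateness_us.append(late_ns / 1000)
    return (
        min(lateness_us),
        sum(lateness_us) / len(lateness_us),
        max(lateness_us),
    )


if __name__ == "__main__":
    lo, avg, hi = measure_wakeup_latency()
    # Real-time cares about the worst case: a low average with a large
    # maximum is fast but not deterministic.
    print(f"min={lo:.1f}us avg={avg:.1f}us max={hi:.1f}us")
```

The interesting value is the maximum, not the average: a real-time system is one where that worst case stays bounded even when the machine is busy.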

Why is it called PREEMPT_RT?

Again quoting RTWiki, "the RT-Preempt patch converts Linux into a fully preemptible kernel." With preemption available across the whole stack (userland, kernel, interrupt handling, etc.), deterministic execution time can be achieved.

Where can I learn more?

I can only recommend watching Thomas Gleixner (PREEMPT_RT's father) in a Linux Foundation keynote. It takes only 20 minutes and will give you a good understanding.

Keynote: Preempt-RT by Thomas Gleixner

Below are more detailed documents and videos (courtesy of the Linux Foundation).

- Introduction to Realtime Linux

- RTwiki - Frequently Asked Questions

- Understanding a Real-Time System by Steven Rostedt

- Red Hat Official Documentation

- Basic Linux from a Real-Time Perspective

- Low Latency Performance Tuning

Tuned Real-Time Profiles

By the time the Tuned upstream community completed the CPU-partitioning work, three other profiles were also ready: realtime, realtime-virtual-host, and realtime-virtual-guest.

- realtime is the common Tuned profile with generic configuration for a real-time environment.

- realtime-virtual-host is a specific profile for a hypervisor in real-time.

- realtime-virtual-guest is a specific profile for a guest running in real-time on a real-time hypervisor.

How does the Real-Time profile stack up against CPU-Partitioning?

Let's say that CPU-Partitioning is the foundation for the real-time profiles. Real-time doesn't inherit from CPU-Partitioning, but it implements the relevant technologies. Following is a simplified slide from my NFV deck about the two profiles.

Of course, in Real-Time you have the RT kernel and KVM-RT, but another big difference is how the CPU cores are partitioned. CPU-partitioning was originally designed to use systemd CPU affinity; later on, in our NFV Reference Architecture, we added isolcpus, but that is mostly an extension and it is going to be removed soon. Real-Time is meant to use isolcpus.
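
As a small illustration of the partitioning, the sketch below builds the CPU list you would isolate once the housekeeping (system) cores are set aside. It is my own helper, not something Tuned ships, and it assumes the 40 logical CPUs of my lab machine (2 sockets x 10 cores x 2 threads) with 0, 10, 20 and 30 reserved for the system; adapt both to your hardware.

```python
def to_cpulist(cpus):
    """Collapse CPU ids into kernel cpulist syntax, e.g. [1, 2, 3, 5] -> "1-3,5"."""
    ranges = []
    for cpu in sorted(set(cpus)):
        if ranges and cpu == ranges[-1][1] + 1:
            ranges[-1][1] = cpu          # extend the current run
        else:
            ranges.append([cpu, cpu])    # start a new run
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


def isolated_cpus(total_cpus, housekeeping):
    """Every CPU that is not a housekeeping (system) CPU gets isolated."""
    reserved = set(housekeeping)
    return [cpu for cpu in range(total_cpus) if cpu not in reserved]


if __name__ == "__main__":
    # Assumption: 40 logical CPUs, with 0,10,20,30 kept for kernel,
    # userland and IRQs (the layout of my lab machine).
    isolated = isolated_cpus(40, [0, 10, 20, 30])
    print(f"isolcpus={to_cpulist(isolated)}")
```

The resulting string is in the same cpulist syntax that the isolcpus kernel argument and the isolated_cores variable of the Tuned profiles expect.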

Difference in Technologies

In terms of the technologies used and enabled, the two are mostly the same:

For any of the (many) other technologies, just go check out my other post about Tuning for Zero Packet Loss in Red Hat OpenStack Platform for CPU partitioning. As for the Tuned Real-Time profiles, two technologies are definitely worth additional details.

Allows RT tasks to run indefinitely without interruption

This is achieved through the kernel parameter "kernel.sched_rt_runtime_us" set to "-1". We even have a knowledge base article about it, and it's rated as extremely dangerous. The sched_rt_runtime_us parameter defines how much CPU time real-time tasks may consume in each scheduling period (sched_rt_period_us) before the kernel throttles them so that other tasks can run. By default that is 95% of the period. The configuration you want in a properly configured NFV environment is "forever."
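
A quick way to see what a host is actually configured with is to read the two sysctl files and work out the share. This is just a sketch with helper names of my own; the only things taken from the kernel are the two /proc/sys/kernel files and the meaning of -1.

```python
from pathlib import Path

PROC_SCHED = Path("/proc/sys/kernel")


def rt_budget(runtime_us, period_us):
    """Describe how much of each period real-time tasks may consume."""
    if runtime_us == -1:
        return "unlimited: RT throttling is disabled"
    share = 100.0 * runtime_us / period_us
    return f"{share:.0f}% of every {period_us}us period"


def read_rt_budget(base=PROC_SCHED):
    """Read the live values from /proc/sys/kernel and describe them."""
    runtime = int((base / "sched_rt_runtime_us").read_text())
    period = int((base / "sched_rt_period_us").read_text())
    return rt_budget(runtime, period)


if __name__ == "__main__":
    print(rt_budget(950000, 1000000))   # stock kernel defaults
    print(rt_budget(-1, 1000000))       # the "forever" setting
    try:
        print("this host:", read_rt_budget())
    except (OSError, ValueError):
        print("sched_rt_* values not readable on this system")
```

With -1, a runaway SCHED_FIFO task on a core can starve everything else on that core, which is exactly why the setting only makes sense together with proper CPU isolation.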

Local APIC Timer in TSC-Deadline

Well, that's an interesting one. Here we're going deep into how x86 works, and personally I struggle to understand all the ramifications. Let's just say that advancing the TSC-Deadline expiration can avoid additional latency by removing some VM exits. Additional info can be found at APIC timer.
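
If you just want to know whether a CPU advertises the feature, the flag to look for in /proc/cpuinfo is tsc_deadline_timer. The helper below is a minimal sketch of that check (the function name is mine); it says nothing about whether the microcode is healthy or whether KVM is actually advancing the deadline.

```python
def has_tsc_deadline(cpuinfo_text):
    """True if any 'flags' line in /proc/cpuinfo lists tsc_deadline_timer."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags" and "tsc_deadline_timer" in value.split():
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("TSC-deadline timer advertised:", has_tsc_deadline(f.read()))
    except OSError:
        print("/proc/cpuinfo not available on this system")
```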

Real-Time Kernel Features

The PREEMPT_RT patch turns the Linux kernel into a fully preemptible kernel, changing the Linux internals. Over time, multiple things have been fully moved into the upstream kernel (RCU offload, tickless kernel, FIFO scheduler, etc.) and only a few remaining ones are now part of the patch. What follows is a high-level list.

- Making in-kernel locking-primitives (spinlocks) preemptible

- Critical sections protected by e.g. spinlock_t and rwlock_t are now preemptible

- Implementing priority inheritance for in-kernel spinlocks and semaphores

- Converting interrupt handlers into preemptible kernel threads

- Converting the old Linux timer API into separate infrastructures, one for high-resolution kernel timers and one for timeouts, which gives userspace high-resolution POSIX timers

Of course, process scheduling plays a major role here. Any higher-priority task can delay lower-priority tasks for an unknown amount of time, and setting sched_rt_runtime_us to -1 removes the limit that would otherwise stop it.

SCHED_FIFO

SCHED_FIFO and SCHED_RR are the two real-time scheduling policies. Each task that is scheduled according to one of these policies has an associated static priority value that ranges from 1 (lowest priority) to 99 (highest priority).

The scheduler keeps a list of ready-to-run tasks for each priority level. Using these lists, the scheduling principles are quite simple:

- SCHED_FIFO tasks are allowed to run until they have completed their work or voluntarily yield.

- SCHED_RR tasks are allowed to run until they have completed their work, until they voluntarily yield, or until they have consumed a specified amount of CPU time.

As long as there are real-time tasks that are ready to run, they might consume all CPU power.
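
For reference, this is roughly what asking for one of these policies looks like from userspace. It is a minimal sketch using Python's standard os module on Linux; the helper names are mine, and KVM and Nova obviously do this through their own code paths rather than like this.

```python
import os


def fifo_priority_range():
    """Return the (min, max) static priority allowed for SCHED_FIFO."""
    return (
        os.sched_get_priority_min(os.SCHED_FIFO),
        os.sched_get_priority_max(os.SCHED_FIFO),
    )


def try_set_fifo(priority, pid=0):
    """Try to move `pid` (0 = this process) to SCHED_FIFO at `priority`.

    Returns True on success, False if the caller lacks the privilege.
    """
    try:
        os.sched_setscheduler(pid, os.SCHED_FIFO, os.sched_param(priority))
        return True
    except PermissionError:
        return False


def policy_name(pid=0):
    """Name the current scheduling policy of `pid` (0 = this process)."""
    names = {os.SCHED_OTHER: "OTHER", os.SCHED_FIFO: "FIFO", os.SCHED_RR: "RR"}
    return names.get(os.sched_getscheduler(pid), "unknown")
```

Calling try_set_fifo(10) as an unprivileged user typically returns False, since switching to a real-time policy normally needs root or CAP_SYS_NICE. Be careful when it does succeed: a busy-looping SCHED_FIFO task will not give the CPU back on its own.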

SCHED_FIFO is the policy that KVM and OpenStack Nova configure for the VM's vCPUs, and the RT kernel also uses it for, e.g., interrupts and RCU. The following detail about the real-time kernel is quite interesting: in order to have a fully preemptible system, IRQs are handled by kernel threads, which you can see here running with the FIFO policy at priority 50.

# tuna --show_threads|grep irq|grep mlx4

768 FIFO 50 10,30 5620 0 irq/56-mlx4-asy

1120 FIFO 50 10,30 2 1 irq/57-mlx4-1@0

1121 FIFO 50 10,30 2 1 irq/58-mlx4-2@0

1122 FIFO 50 10,30 1 1 irq/59-mlx4-3@0

1123 FIFO 50 10,30 2 1 irq/60-mlx4-4@0

1124 FIFO 50 10,30 2 1 irq/61-mlx4-5@0

1125 FIFO 50 10,30 2 1 irq/62-mlx4-6@0

1126 FIFO 50 10,30 2 1 irq/63-mlx4-7@0

1127 FIFO 50 10,30 2 1 irq/64-mlx4-8@0

1128 FIFO 50 10,30 2 1 irq/65-mlx4-9@0

1129 FIFO 50 10,30 2 1 irq/66-mlx4-10@

1130 FIFO 50 10,30 2 1 irq/67-mlx4-11@

1131 FIFO 50 10,30 2 0 irq/68-mlx4-12@

1132 FIFO 50 10,30 2 0 irq/69-mlx4-13@

1133 FIFO 50 10,30 2 1 irq/70-mlx4-14@

1134 FIFO 50 10,30 2 1 irq/71-mlx4-15@

[cut]

This is not something specific to Mellanox and its driver implementation. Here is the output for my Intel X550 using the ixgbe driver.

# tuna --show_threads|grep irq|grep ixgbe

1802 FIFO 50 0,20 3 1 irq/35-enp3s0f0 ixgbe

1803 FIFO 50 0,20 7324 1 irq/37-enp3s0f0 ixgbe

1804 FIFO 50 0,20 224 1 irq/38-enp3s0f0 ixgbe

1805 FIFO 50 0,20 131 1 irq/39-enp3s0f0 ixgbe

1806 FIFO 50 0,20 230 1 irq/40-enp3s0f0 ixgbe

1807 FIFO 50 0,20 115 1 irq/41-enp3s0f0 ixgbe

1808 FIFO 50 0,20 123 1 irq/42-enp3s0f0 ixgbe

1809 FIFO 50 0,20 135 1 irq/43-enp3s0f0 ixgbe

1810 FIFO 50 0,20 139 1 irq/44-enp3s0f0 ixgbe

1818 FIFO 50 0,20 2 1 irq/47-enp3s0f1 ixgbe

1819 FIFO 50 0,20 107 1 irq/48-enp3s0f1 ixgbe

1820 FIFO 50 0,20 107 1 irq/49-enp3s0f1 ixgbe

1821 FIFO 50 0,20 107 1 irq/50-enp3s0f1 ixgbe

1822 FIFO 50 0,20 107 1 irq/51-enp3s0f1 ixgbe

1823 FIFO 50 0,20 107 1 irq/52-enp3s0f1 ixgbe

1824 FIFO 50 0,20 107 1 irq/53-enp3s0f1 ixgbe

1825 FIFO 50 0,20 107 1 irq/54-enp3s0f1 ixgbe

1826 FIFO 50 0,20 107 1 irq/55-enp3s0f1 ixgbe

[CUT]
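
If you want to check this on a larger box without eyeballing hundreds of rows, the output is easy to parse. The sketch below is my own; it assumes the column order shown above (PID, policy, priority, affinity, voluntary and non-voluntary context switches, command), and the sample rows are copied from the two listings.

```python
from typing import NamedTuple


class Thread(NamedTuple):
    pid: int
    policy: str
    priority: int
    affinity: str
    voluntary: int
    nonvoluntary: int
    cmd: str


def parse_tuna_line(line):
    """Parse one row of `tuna --show_threads` output (assumed column order)."""
    pid, policy, prio, affinity, vol, nonvol, cmd = line.split(None, 6)
    return Thread(int(pid), policy, int(prio), affinity, int(vol), int(nonvol), cmd)


def irq_threads_not_at(rows, policy="FIFO", priority=50):
    """Return IRQ threads whose policy/priority differ from the expected pair."""
    return [
        t for t in map(parse_tuna_line, rows)
        if t.cmd.startswith("irq/") and (t.policy, t.priority) != (policy, priority)
    ]


SAMPLE = [
    "768 FIFO 50 10,30 5620 0 irq/56-mlx4-asy",
    "1120 FIFO 50 10,30 2 1 irq/57-mlx4-1@0",
    "1803 FIFO 50 0,20 7324 1 irq/37-enp3s0f0 ixgbe",
]

if __name__ == "__main__":
    # An empty list means every IRQ thread runs as FIFO at priority 50.
    print(irq_threads_not_at(SAMPLE))
```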

Latency Results

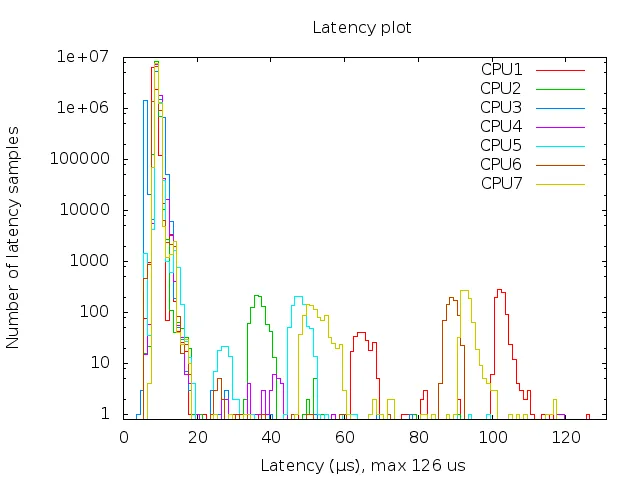

The environment is a fresh Red Hat Enterprise Linux 7.5 installation using the latest kernels: 3.10.0-862.3.2.el7.x86_64 for CPU-Partitioning and 3.10.0-862.3.2.rt56.808.el7.x86_64 for real-time. Of course, with CPU-Partitioning, isolcpus is enabled as per our NFV Reference Architecture. None of the graphs show the system cores (0, 10, 20 and 30 in my case), which are dedicated to the kernel, userland, IRQs, etc.; the latency there is way too high. The physical setup is a dual-socket Xeon E5-2640 v4 (Broadwell-EP).

CPU-Partitioning w/ isolcpus

Realtime-virtual-host

Between the two, the latter (real-time) paints a very different picture. My system still shows a few cores over 20us, which could be due to SMT, which I left enabled. Either way, it's a better result, with the maximum scheduling latency dropping from 91us to 23us.

Now on to KVM-RT. It's the same environment; the only difference is that the single system core is, in this case, vCPU0, which is again excluded from the graphs.

Host CPU-Partitioning w/ isolcpus and Guest the same.

Host Realtime-virtual-host and guest realtime-virtual-guest

Again a much better picture: the maximum latency is 22us vs. 126us with CPU-Partitioning on the host.

But wait a second! Is this the typical customer case? Well, in my experience, most virtual network function (VNF) vendors use some form of RTOS for the VNF. So, nope. What happens when we mix the two?

Host CPU-Partitioning w/ isolcpus and Guest realtime-virtual-guest

THIS! The picture looks a little worse than the pure real-time but not as bad as in full CPU-partitioning mode.

Autograph Generator

If you’d like to try the tool used to generate these charts, please go to the Cyclictest_and_Plot repository on GitHub.
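
If you only need the headline number rather than a chart, the per-thread summary lines that cyclictest prints are easy to scrape. The snippet below is a small sketch of my own and is not taken from that repository; the sample text only mimics the cyclictest summary format, and its values are made up for illustration.

```python
import re

# Matches cyclictest's per-thread summary lines, for example:
# T: 0 ( 4242) P:95 I:1000 C:  60000 Min:      2 Act:    3 Avg:    3 Max:      21
LINE = re.compile(r"T:\s*(\d+)\s.*?Min:\s*(\d+).*?Avg:\s*(\d+).*?Max:\s*(\d+)")


def max_latency_per_thread(output):
    """Map cyclictest thread index -> maximum latency in microseconds."""
    result = {}
    for line in output.splitlines():
        match = LINE.search(line)
        if match:
            result[int(match.group(1))] = int(match.group(4))
    return result


# Made-up sample in the cyclictest summary format (not a real measurement).
SAMPLE_OUTPUT = """\
T: 0 ( 4242) P:95 I:1000 C:  60000 Min:      2 Act:    3 Avg:    3 Max:      21
T: 1 ( 4243) P:95 I:1000 C:  60000 Min:      2 Act:    4 Avg:    3 Max:      23
"""

if __name__ == "__main__":
    per_thread = max_latency_per_thread(SAMPLE_OUTPUT)
    print("worst case across threads:", max(per_thread.values()), "us")
```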

Wrap-up

I believe that Red Hat OpenStack Platform 13 is going to change a lot of things. From a telco perspective, Real Time, especially in Radio, should be a killer feature.

What bugs me is that this time the hardware plays an even more important role. During my initial tests, I was running an old (six-month-old) CPU microcode with a known TSC-Deadline bug (a simple dmesg showed it), and the results were inconsistent. For me, upgrading to fix it is easy. For a major telco service provider in production, it can be a very different story.

How effective KVM-RT will be, only time will tell. However, I have already been asked how network latency is affected. I had planned to run MoonGen in loopback mode, but in the end I didn't have time for it. Is this a problem? Well, that test is not representative of a 2018 telco network topology anyway, where going through the switch interconnection may double the average latency...

About the author

Federico Iezzi is an open-source evangelist who has witnessed the Telco NFV transformation. Over his career, Iezzi achieved a number of international firsts in the public cloud space and also has about a decade of experience with OpenStack. He has been following the Telco NFV transformation since 2014. At Red Hat, Federico is a member of the EMEA Telco practice as a Principal Architect.