When we started discussing the requirements that led to the development of Hirte (introduced by Pierre-Yves Chibon and Daniel Walsh in their blog post), we explored using systemctl with its --host parameter to manage systemd units on remote machines. However, this capability requires a Secure Shell (SSH) connection between the nodes, and SSH is too large a tunnel for this purpose.

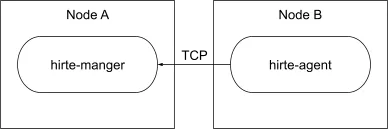

Instead, Hirte was created using transmission control protocol (TCP) based manager-client communication between the machines. Since Hirte manages systemd units, it uses the D-Bus protocol and the sd-bus application programming interface (API), not only between hirte-agent and systemd, but also between hirte-manager and hirte-agent.

The problem is that D-Bus mainly targets local interprocess communication over Unix sockets and therefore does not natively support encryption or authentication. One possible solution is to tunnel the traffic through SSH, but this brings us back to the original challenge: SSH between nodes is too large a tunnel.

The double proxy approach

The double proxy approach places two proxies, one forward and one reverse, between the two communicating parties. This approach has several advantages for service discovery and, more relevant to our case, for offloading mutual Transport Layer Security (mTLS).

In its simplest form, hirte-agent connects to the hirte-manager’s port directly over TCP.

With the double proxy approach, each hirte-agent instance connects to a local forward proxy over a Unix socket. The forward proxy connects over TCP to the remote reverse proxy, and the two proxies secure that link with mTLS. Finally, the reverse proxy connects to hirte-manager locally over a Unix socket.

NOTE: The hirte-manager and hirte-agent are not aware of the existence of the proxies. They just listen (hirte-manager) and connect (hirte-agent) to a local Unix socket. If the application does not support Unix socket bindings, a TCP port locked to localhost can be used to achieve the same functionality.

Demo

This demo uses HAProxy for both proxies, but other implementations, such as Envoy, can be used instead.

All machines

Installation

Install HAProxy:

$ dnf install haproxy

SELinux

When running on a Security-Enhanced Linux (SELinux) enforcing system, the communication port must be allowed on all machines. In this demo, the communication port is 2021:

$ semanage port -a -t http_port_t -p tcp 2021

SSL certificates

Each node should have its own Secure Sockets Layer (SSL) key and certificate. By default, HAProxy confines itself to /var/lib/haproxy, so all files must be stored inside this path. The demo uses a self-signed certificate authority (CA) certificate, so three files are used: ca-certificate.pem, cert.key, and cert.
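
The demo does not show how these files were produced. As a minimal sketch, assuming OpenSSL is installed, the commands below create a throwaway CA and one node certificate using the same file names as the demo. The subject names (hirte-demo-ca, node1.example), the RSA key size, the 365-day lifetime, and the temporary working directory are assumptions chosen for illustration, not values from the original setup.

```shell
set -eu

# Work in a scratch directory (assumption); copy the results to
# /var/lib/haproxy on the node afterwards.
workdir="$(mktemp -d)"
cd "$workdir"

# 1. Self-signed CA: private key (ca.key) and certificate (ca-certificate.pem).
openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
    -subj "/CN=hirte-demo-ca" \
    -keyout ca.key -out ca-certificate.pem

# 2. Node private key (cert.key) and certificate signing request (cert.csr).
openssl req -newkey rsa:2048 -nodes \
    -subj "/CN=node1.example" \
    -keyout cert.key -out cert.csr

# 3. Sign the request with the CA to obtain the node certificate (cert).
openssl x509 -req -in cert.csr -days 365 \
    -CA ca-certificate.pem -CAkey ca.key -CAcreateserial \
    -out cert

# 4. Confirm that the node certificate chains back to the CA.
openssl verify -CAfile ca-certificate.pem cert
```

Steps 2 to 4 would be repeated for every node, while ca.key stays with whoever operates the CA. Recent HAProxy versions can load the private key from a file named after the certificate with a .key suffix, which matches the cert and cert.key pair used in this demo.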

Enable and start HAProxy

HAProxy is installed with an empty configuration file and supports reloading when the configuration is updated. So, you can start HAProxy before it is actually configured:

$ systemctl enable --now haproxy.service

hirte-manager machine

hirte-manager

Install hirte-manager along with hirtectl:

$ dnf install hirte hirte-ctl

Hirte supports systemd socket activation, so the hirte.conf file in /etc/hirte/ will not include any port configuration:

[hirte]
AllowedNodeNames=<List the node names>

Instead, a systemd socket unit in /etc/systemd/system named hirte.socket is used:

[Socket]
ListenStream=/var/lib/haproxy/hirte-manager.sock

[Install]
WantedBy=multi-user.target

NOTE: By default, HAProxy confines itself to /var/lib/haproxy. Therefore, the socket must be created in this directory.

Enable and start the hirte.socket unit:

$ systemctl enable --now hirte.socket

HAProxy as a reverse proxy

Configure HAProxy to listen on port 2021 and enforce mTLS using the certificates. All traffic is forwarded to hirte-manager via its Unix socket.

#---------------------------------------------------------------------
# main frontend which proxies to the backends
#---------------------------------------------------------------------
frontend hirte-manager
    bind *:2021 ssl crt /var/lib/haproxy/cert verify required ca-file /var/lib/haproxy/ca-certificate.pem
    default_backend hirte-manager

#---------------------------------------------------------------------
# round robin balancing between the various backends
#---------------------------------------------------------------------
backend hirte-manager
    balance roundrobin
    # the socket path is relative to the directory HAProxy confines itself to (/var/lib/haproxy)
    server hirte-manager /hirte-manager.sock

Store the configuration in /etc/haproxy/conf.d/hirte-manager.cfg and reload HAProxy:

$ systemctl reload haproxy.service
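
As an optional safeguard, the configuration can be parsed with HAProxy's check mode (-c) before the reload. This is only a sketch: the helper name check_haproxy_conf is hypothetical, and the default paths assume that the packaged service loads /etc/haproxy/haproxy.cfg plus every file in /etc/haproxy/conf.d, which may differ on your distribution.

```shell
# Hypothetical helper: parse the HAProxy configuration without starting the proxy.
#   $1  main configuration file (assumed default: /etc/haproxy/haproxy.cfg)
#   $2  drop-in directory       (assumed default: /etc/haproxy/conf.d)
# Returns the exit status of haproxy, which is non-zero when parsing fails.
check_haproxy_conf() {
    main_cfg="${1:-/etc/haproxy/haproxy.cfg}"
    conf_dir="${2:-/etc/haproxy/conf.d}"
    haproxy -c -f "$main_cfg" -f "$conf_dir"
}
```

It could then be chained with the reload, for example: check_haproxy_conf && systemctl reload haproxy.service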

hirte-agent machine

HAProxy as a forward proxy

Configure HAProxy to listen on a local Unix socket. All traffic is forwarded to the remote machine while enforcing mTLS.

#----------------------------------------------------------------
# main frontend which proxies to the backends
#----------------------------------------------------------------
frontend hirte-agent
    bind /var/lib/haproxy/hirte-agent.sock
    default_backend hirte-agent

#----------------------------------------------------------------
# round robin balancing between the various backends
#----------------------------------------------------------------
backend hirte-agent
    balance roundrobin
    server hirte-agent <MANAGER_ADDRESS>:2021 ssl crt /var/lib/haproxy/cert verify required ca-file /var/lib/haproxy/ca-certificate.pem

Store the configuration in /etc/haproxy/conf.d/hirte-agent.cfg and reload HAProxy:

$ systemctl reload haproxy.service

hirte-agent

Install the hirte-agent:

$ dnf install hirte-agent

Configure hirte-agent to connect to hirte-manager using the local Unix socket:

[hirte-agent]
NodeName=<NODE_NAME>
ManagerAddress=unix:path=/var/lib/haproxy/hirte-agent.sock

Enable and start hirte-agent:

$ systemctl enable --now hirte-agent.service

Verify the connection

There are three main ways to verify that hirte-agent connected to hirte-manager.

hirte-agent logs

On the hirte-agent machine, review the hirte-agent journal logs:

$ journalctl -u hirte-agent.service
Apr 30 11:14:03 ip-172-31-90-110.ec2.internal systemd[1]: Started Hirte systemd service controller agent daemon.
Apr 30 11:14:03 ip-172-31-90-110.ec2.internal hirte-agent[58879]: DEBUG : Final configuration used
Apr 30 11:14:03 ip-172-31-90-110.ec2.internal hirte-agent[58879]: DEBUG : Connected to system bus
Apr 30 11:14:03 ip-172-31-90-110.ec2.internal hirte-agent[58879]: DEBUG : Connected to systemd bus
Apr 30 11:14:03 ip-172-31-90-110.ec2.internal hirte-agent[58879]: INFO : Connecting to manager on unix:path=/var/lib/haproxy/hirte-agent.sock
Apr 30 11:14:03 ip-172-31-90-110.ec2.internal hirte-agent[58879]: INFO : Connected to manager as 'remote'

hirte-manager logs

On the hirte-manager machine, review the hirte journal logs:

$ journalctl -u hirte.service
Apr 30 11:06:52 ip-172-31-81-115.ec2.internal systemd[1]: Started Hirte systemd service controller manager daemon.
Apr 30 11:06:52 ip-172-31-81-115.ec2.internal hirte[59970]: INFO : Registered managed node from fd 8 as 'self'
Apr 30 11:14:03 ip-172-31-81-115.ec2.internal hirte[59970]: INFO : Registered managed node from fd 9 as 'remote'

hirtectl

Hirte provides a command-line tool that communicates with hirte-manager. From the hirte-manager machine, run hirtectl to list the systemd units on all machines:

$ hirtectl list-units
NODE   |ID                             | ACTIVE   | SUB
====================================================================================================
self   |haproxy.service                | active   | running
self   |systemd-ask-password-wall.path | active   | waiting
...
remote |systemd-modules-load.service   | inactive | dead
remote |initrd-cleanup.service         | inactive | dead
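
These checks confirm that Hirte itself is connected. To probe the TLS layer on its own, one option is a handshake test with openssl s_client, run from the hirte-agent machine against the reverse proxy. The sketch below is not part of Hirte: the function name check_mtls is hypothetical, and it assumes that the certificate files live in /var/lib/haproxy under the names used in this demo.

```shell
# Hypothetical helper: attempt a TLS handshake with the reverse proxy,
# presenting this node's certificate and trusting only the demo CA.
#   $1  manager address
#   $2  port (2021 in this demo)
#   $3  directory holding cert, cert.key and ca-certificate.pem
#       (assumed default: /var/lib/haproxy)
# Returns the exit status of openssl s_client.
check_mtls() {
    host="$1"
    port="$2"
    cert_dir="${3:-/var/lib/haproxy}"
    # stdin is closed so s_client exits right after the handshake;
    # -verify_return_error aborts if the server certificate does not verify.
    openssl s_client -connect "${host}:${port}" \
        -cert "${cert_dir}/cert" -key "${cert_dir}/cert.key" \
        -CAfile "${cert_dir}/ca-certificate.pem" \
        -verify_return_error </dev/null >/dev/null 2>&1
}
```

For example: check_mtls <MANAGER_ADDRESS> 2021 && echo "handshake succeeded". A zero exit status suggests that the reverse proxy presented a certificate signed by the demo CA and that the handshake completed. The same connection attempted without -cert and -key is expected to be rejected because of verify required, although how that failure is reported depends on the OpenSSL and TLS versions in use.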

Summary

Hirte leverages the D-Bus protocol, which does not natively support encryption. Although SSH can be used for this purpose, using a double proxy approach for mTLS allows you to limit the tunnel between the nodes to the specific use case.

Other implementations that leverage the D-Bus protocol can use the same mechanism to secure their connections.

For more information about the deployment, see the instructions in the git repository.

About the author

Ygal Blum is a Principal Software Engineer who is also an experienced manager and tech lead. He writes code from C and Java to Python and Golang, targeting platforms from microcontrollers to multicore servers, and servicing verticals from testing equipment through mobile and automotive to cloud infrastructure.
