Installing OpenShift Data Foundation (ODF) with external Ceph storage is straightforward: a few clicks in the OpenShift console, one script run on the Ceph cluster, and you are ready to go. Changing that configuration afterward is less obvious.

ODF is software-defined storage for containers. This article shows how to reconfigure ODF to add or remove Ceph functionalities or change parameters.

Why you might need to reconfigure ODF

Here are some reasons why you might need to update an ODF configuration:

- You used the default RADOS Block Device (RBD) pool, but it is the wrong one.

- You forgot to add Ceph Object Gateway (radosgw) support.

- You used the wrong cephfs volume.

- Your radosgw endpoint changed.

For example, imagine you configured ODF on Ceph but specified only the need for block and filesystem volumes, not buckets (S3). This setting configured ODF on Ceph without support for Ceph Object Gateway (radosgw), which you later realized you need.

Even without radosgw support, ODF installs and configures NooBaa, which delivers ObjectBucketClaims using any available volumes and exposes an S3-compatible API. This should be enough, but suppose an Apache Spark application crashed the NooBaa endpoint when it tried to upload a file.

You sent the problem to the developers, who opened an issue. Instead of waiting for the fix, you decided to reconfigure ODF to support radosgw, hoping to get around the bug.

Reinstalling ODF requires a lot of work to back up and restore the volumes your applications use. Reconfiguring ODF is the simpler path.


Examine the configuration script

When using an external Ceph cluster with OpenShift, you need to download a script from the console and execute it on a Ceph administration node. This script has a lot of parameters, and the most basic ones to support blocks, filesystems, and buckets are:

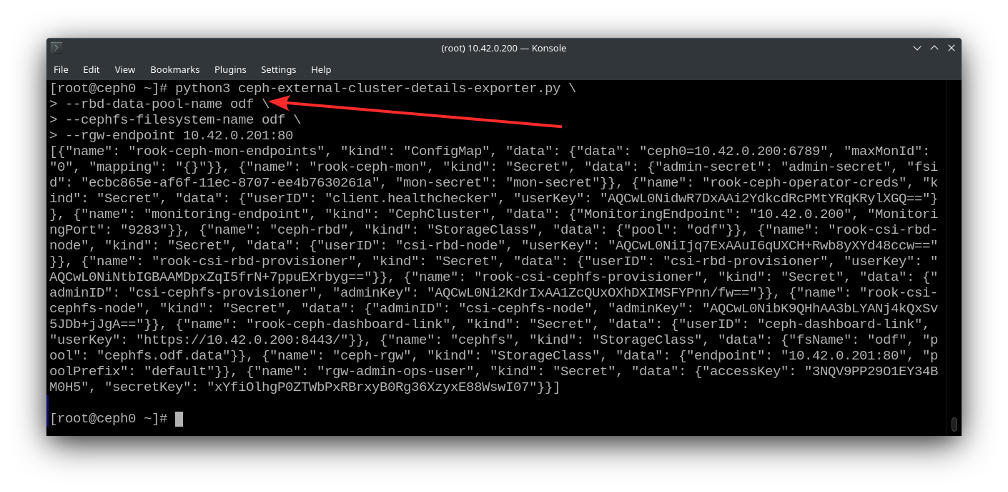

# python3 script.py --cephfs-filesystem-name ocp --rbd-data-pool-name rbd --rgw-endpoint 172.27.11.200:80Executing this script returns a JSON file that you must upload through the OpenShift panel. Here is an image with the file, the parameters, and the output:

The problem happens when the script executes without some of these parameters (for example, without specifying --rgw-endpoint). In this case, the resulting JSON is similar but with less content:

These properties are missing from the JSON:

- StorageClass: ceph-rgw

- Secret: rgw-admin-ops-user
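A quick way to tell whether a saved output includes radosgw support is to look for those two entries. The following is a minimal sketch, assuming the exporter prints a JSON list of objects that each carry `kind` and `name` keys; that layout and the helper name `missing_rgw_entries` are assumptions for illustration, not something the script documents here.

```python
import json

# Entries the article reports as missing when --rgw-endpoint is omitted.
RGW_ENTRIES = {("StorageClass", "ceph-rgw"), ("Secret", "rgw-admin-ops-user")}


def missing_rgw_entries(exporter_json: str) -> set:
    """Return the (kind, name) pairs from RGW_ENTRIES absent from the output.

    Assumption: the output is a JSON list of objects with "kind" and "name".
    """
    entries = json.loads(exporter_json)
    present = {(entry.get("kind"), entry.get("name")) for entry in entries}
    return RGW_ENTRIES - present


if __name__ == "__main__":
    # Hypothetical, heavily trimmed output used only for illustration.
    sample = json.dumps([
        {"name": "ceph-rbd", "kind": "StorageClass", "data": {"pool": "rbd"}},
        {"name": "cephfs", "kind": "StorageClass", "data": {"fsName": "ocp"}},
    ])
    for kind, name in sorted(missing_rgw_entries(sample)):
        print(f"missing {kind}: {name}")
```

An empty result would suggest the output already carries the radosgw entries.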


Reconfigure ODF

ODF reconfiguration is simple. By default, all resources belonging to ODF live in the openshift-storage namespace.

There is a secret inside this namespace named rook-ceph-external-cluster-details. Updating this secret triggers the StorageSystem update process. These are the steps:

- Rerun the script with the desired parameters.

- Save the JSON in a file.

- Replace the secret rook-ceph-external-cluster-details with the new JSON.

The "complete" JSON has data related to endpoints and connection users, and that part of the output does not change with subsequent executions. You just need to rerun the script with the parameters related to radosgw. If you lost the script, you can download it again with:

oc get csv $(oc get csv -n openshift-storage | grep ocs-operator | awk '{print $1}') -n openshift-storage -o jsonpath='{.metadata.annotations.external\.features\.ocs\.openshift\.io/export-script}' | base64 --decode > ceph-external-cluster-details-exporter.py

A misnamed pool example

Suppose that you configured ODF with the default rbd pool, which is also named rbd, but the correct one is odf. This can happen if you are not cautious; Ceph has more than one pool of each application type, and the cephfs filesystem and rbd pool have the same name (as in this example).

Here is the process. Execute the script shown below, where rbd-data-pool-name is specified as rbd:

Next, save this JSON output inside a file and upload it using the OpenShift console:

There's the mistake. To complete the steps, click Next, and then, on the next page, click on Create StorageSystem. OpenShift takes care of the tasks.

This works without any problems. But what if this rbd pool is erasure-coded instead of replicated? The erasure-coded setting uses more RAM and CPU than you expect, and now you need to change the RBD pool OpenShift is using.

This example is a bit trickier than a missing radosgw because, by the time you update ODF, your cluster may already have some volumes created. But it also shows that ODF can use volumes in different pools without any problems.


Rerun the script

Rerun the script with the desired parameters:

Save the JSON

Save the output JSON in a file you can send to OpenShift through the command line. For example, saving the JSON in a file named external_cluster_details inside the bastion machine makes the update easier because of the name of the key inside the secret.
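Before replacing the secret, it can help to compare the new file with the previous output and confirm that only the intended entries differ. This is a rough sketch under the assumption that both files hold a JSON list of objects with `kind`, `name`, and `data` keys; the function names and the sample data are hypothetical.

```python
import json


def index_entries(exporter_json: str) -> dict:
    """Map (kind, name) to each entry's data (assumed output layout)."""
    return {
        (entry["kind"], entry["name"]): entry.get("data", {})
        for entry in json.loads(exporter_json)
    }


def diff_outputs(old_json: str, new_json: str) -> dict:
    """Report which entries were added, removed, or changed between two runs."""
    old, new = index_entries(old_json), index_entries(new_json)
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


if __name__ == "__main__":
    # Hypothetical trimmed outputs: the RBD pool moves from "rbd" to "odf".
    before = json.dumps([{"kind": "StorageClass", "name": "ceph-rbd", "data": {"pool": "rbd"}}])
    after = json.dumps([{"kind": "StorageClass", "name": "ceph-rbd", "data": {"pool": "odf"}}])
    print(diff_outputs(before, after))
```

For the misnamed pool case, the expectation would be a single changed entry and nothing added or removed.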

Replace the secret

Replace the secret rook-ceph-external-cluster-details with the new JSON:

# oc project openshift-storage

# oc create secret generic rook-ceph-external-cluster-details \
--from-file=external_cluster_details --dry-run=client \
-o yaml > ceph-secret.yml

# oc replace -f ceph-secret.yml

After updating this secret, OpenShift creates and updates all the necessary resources to interact with Ceph.
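To make clear what the generated manifest contains, here is a small sketch that builds an equivalent Secret object by hand. It assumes the secret stores the exporter output under the key external_cluster_details (the file name used above) and sets the namespace and `Opaque` type explicitly; the function name `build_secret_manifest` is made up for this illustration, and the real `oc create --dry-run` output may include other metadata fields.

```python
import base64
import json

SECRET_NAME = "rook-ceph-external-cluster-details"
SECRET_KEY = "external_cluster_details"  # key name taken from the file name


def build_secret_manifest(exporter_json: str) -> dict:
    """Wrap the exporter output in a Kubernetes Secret manifest."""
    json.loads(exporter_json)  # fail early if the saved file is not valid JSON
    encoded = base64.b64encode(exporter_json.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": SECRET_NAME, "namespace": "openshift-storage"},
        "type": "Opaque",
        # Secret values under "data" must be base64-encoded.
        "data": {SECRET_KEY: encoded},
    }


if __name__ == "__main__":
    # Hypothetical trimmed exporter output used only for illustration.
    payload = json.dumps([{"kind": "StorageClass", "name": "ceph-rbd", "data": {"pool": "odf"}}])
    print(json.dumps(build_secret_manifest(payload), indent=2))
```

Because `oc replace -f` accepts JSON manifests as well as YAML, the printed document could be saved to a file and used in place of ceph-secret.yml.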

Test this configuration by creating a persistent volume claim (PVC) with the ocs-external-storagecluster-ceph-rbd StorageClass:

There are two volumes. One, db-noobaa-db-pg-0, was created when you configured ODF the first time with the wrong pool. The second, redhat-blog, was created after reconfiguration.

The journalPool is different in each PersistentVolume:

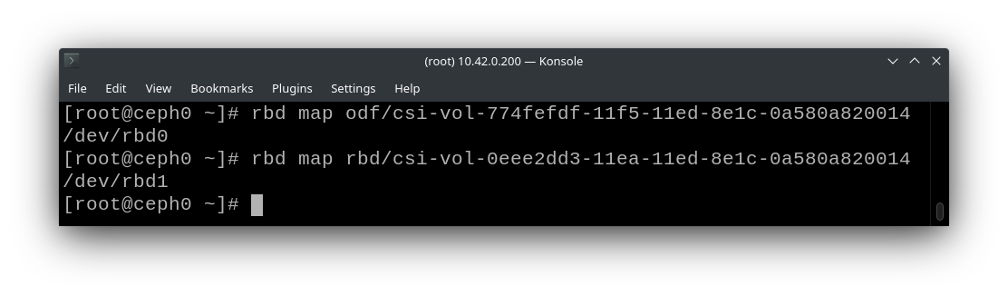
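The same comparison can be done without opening each PersistentVolume. The sketch below groups volumes by journalPool from the JSON that `oc get pv -o json` prints, assuming the Ceph CSI driver records `journalPool` under `spec.csi.volumeAttributes`; the pool values in the sample are hypothetical and simply mirror the rbd-to-odf change described in this article.

```python
import json
from collections import defaultdict


def volumes_by_journal_pool(pv_list_json: str) -> dict:
    """Group claim names by the journalPool recorded on each PersistentVolume."""
    grouped = defaultdict(list)
    for pv in json.loads(pv_list_json).get("items", []):
        spec = pv.get("spec", {})
        pool = spec.get("csi", {}).get("volumeAttributes", {}).get("journalPool")
        if pool is None:
            continue  # not a CSI volume, or the driver did not set journalPool
        # Prefer the bound claim name; fall back to the volume's own name.
        name = spec.get("claimRef", {}).get("name") or pv.get("metadata", {}).get("name", "")
        grouped[pool].append(name)
    return {pool: sorted(names) for pool, names in grouped.items()}


if __name__ == "__main__":
    # Hypothetical, trimmed `oc get pv -o json` output.
    sample = json.dumps({"items": [
        {"metadata": {"name": "pvc-1"},
         "spec": {"claimRef": {"name": "db-noobaa-db-pg-0"},
                  "csi": {"volumeAttributes": {"journalPool": "rbd", "pool": "rbd"}}}},
        {"metadata": {"name": "pvc-2"},
         "spec": {"claimRef": {"name": "redhat-blog"},
                  "csi": {"volumeAttributes": {"journalPool": "odf", "pool": "odf"}}}},
    ]})
    print(volumes_by_journal_pool(sample))
```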

This looks odd, but these volumes can coexist without any problem because of how Ceph works with RBD. You can map, mount, list, or create volumes in any pool available to your user by just changing the pool.

Wrap up

It is easy to reconfigure ODF to change incorrect configurations or add functionality. Just remember to update the secret rook-ceph-external-cluster-details, which you can do from the console.

One behavior worth mentioning: you cannot remove radosgw after it has been configured. Even if you remove radosgw from the secret, OpenShift does nothing about it.

Finally, pay close attention if you already have some volumes inside your cluster and want to change the pool. These changes can make maintenance a bit more complicated because your OpenShift disks are now spread across different pools inside Ceph. So, the best option is still to provision once with everything correct.

About the author

Hector Vido serves as a consultant on the Red Hat delivery team. He started in 2006 working with development and later on implementation, architecture, and problem-solving involving databases and other solutions, always based on free software.
