You've been searching the Internet with your favorite search engine, such as DuckDuckGo, learning all you can learn about containers—this sensation that has swept over the tech industry in the last few years. By now, you have heard about Kubernetes, by far the most popular platform for managing containers. Maybe you stumbled across Kubernetes The Hard Way or maybe even some Kubernetes guided courses.

Maybe you went through the process of figuring this stuff out on your own. "There has to be an easier way!" you say to yourself as you stare at offerings from Google, Amazon, and others. You've got a good idea why you want to use them and may have even deployed some containerized applications, and the apps are working. Great! Along the way, you started reading or hearing about container orchestration, and you went down the rabbit hole following every click-bait link out there.

If looking at these providers makes your wallet hurt, or if, like me, you prefer to keep your data under your control, have no fear! OpenShift can help! After some more searching, you discover the OpenShift Container Platform, the offering from Red Hat. While they do offer 60-day trials, you don't really want to put a credit card on file. Maybe you don't want to deal with salespeople. Either way, I have a solution for you. As with almost everything Red Hat does, there is an open source community project called OpenShift OKD.

Why did you produce this guide?

I love open source. Even though I have been happily working at Red Hat for almost five years now, I still run the upstream versions of everything. I like to be closer to the community and kick off some of the corporate ties from my day job. In addition, one of the parts I love about my job is breaking down complex processes into easily understood and repeatable pieces.

I travel week in and week out for my job. While I have a substantial lab at home consisting of various off-the-shelf parts, there are times when a client site does not allow me to VPN out. For cases like these, I had to come up with a way to run an environment reliably on my laptop. I also had to make sure that this environment was NAT'd so that, to the outside world, it was just my laptop connecting to the WiFi.

This guide shows you how to run OpenShift with a 2+ node cluster using a single hypervisor running libvirt. Most of the guides I found were only partially complete. Either differences between OCP versions or different use cases necessitated an alternate way of doing things.

Ultimately, I enjoy taking a complex topic and turning it into a straightforward walkthrough, explaining things as I go. Like all other authors, I am flawed in that I fail to explain things in sufficient detail once I become overly familiar with the process. I do try hard to make corrections based on feedback, to leave you with a clear, concise guide that explains what is happening rather than a simple monkey-see-monkey-do guide.

If you are not interested in the NAT'd portion of this guide (i.e., you have standard network layouts), skip Steps 1, 2A, and 2B below.

What were my goals?

I had the following goals:

- Use libvirt on the hypervisor

- Work within the constraints of a captive portal (i.e., a hotel or other captive portal that does not allow multiple connections from bridged VMs)

- Use only dnsmasq

- Have a minimal OCP 4 install

- Have a self-contained environment

What technologies were used

- Libvirt

- HAProxy

- Dnsmasq

- TFTP

- Apache

- Syslinux

- Fedora CoreOS

- CentOS 8 Stream (for the gateway)

Assumptions

- I assume you know how to set up a hypervisor of some sort (if skipping the NAT-ing section)

- I assume you know how to create empty VMs

- I assume you are trying to make an enclosed environment (i.e., you are not using a centralized DNS/DHCP/webserver)

Step 1: Setting up the host (hypervisor)

To set up the host, create a bridge network that all the VMs use to communicate with each other:

nmcli con show

nmcli con add ifname br0 type bridge con-name br0

nmcli con add type bridge-slave ifname eno1 master br0

nmcli con modify br0 ipv4.method manual ipv4.addresses 10.120.120.1/24

nmcli con up br0

This should be all that is required for a bridged network for the libvirt guests to use.

Step 2: Setting up the gateway VM

This VM is independent of the OpenShift install. It hosts services required by OpenShift but is unaffected by the installation (or lack thereof) of OKD4.

This VM should have two network cards, one that is on the bridge, and one that is on the NAT interface. In my case, I set up the following:

Default NAT for libvirt: 192.168.122.x/24

Bridge network I set up: 10.120.120.x/24

Step 2A: Set the sysctl for packet forwarding

On the guest that is designated as the gateway, ensure that the sysctl is on for packet-forwarding. Packet-forwarding allows the gateway to act as a router forwarding and routing traffic.

To configure the kernel to forward IP packets, add the following to /etc/sysctl.conf:

# Controls IP packet forwarding

net.ipv4.ip_forward = 1

Then apply the setting to the running kernel without a reboot:

sysctl -w net.ipv4.ip_forward=1
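A quick way to confirm that forwarding is really on is to read the value back from the kernel. The helper below is a minimal sketch; the function name forwarding_enabled is hypothetical, and the optional file argument exists only so the check can be pointed at something other than the live kernel setting.

```bash
# Return success if IPv4 forwarding is on. The optional argument is the file
# to read; it defaults to the kernel's live setting under /proc.
forwarding_enabled() {
    [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}" 2>/dev/null)" = "1" ]
}

# Report the current state without changing anything.
if forwarding_enabled; then
    echo "IPv4 forwarding is enabled"
else
    echo "IPv4 forwarding is disabled"
fi
```

If this reports that forwarding is disabled after a reboot, the entry in /etc/sysctl.conf is the first thing to recheck.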

Step 2B: Set the firewall zones with firewalld

Next, make two zones with firewalld. The external zone should be bound to the interface on the libvirt NAT, which can access the internet. This interface/zone should also make sure that masquerading is enabled:

EXTERNAL=enp9s0

INTERNAL=enp8s0

firewall-cmd --zone=external --add-interface=${EXTERNAL} --permanent

firewall-cmd --zone=internal --add-interface=${INTERNAL} --permanent

firewall-cmd --zone=external --add-masquerade --permanent

Allow all traffic from the host-only network:

firewall-cmd --permanent --zone=internal --set-target=ACCEPT

firewall-cmd --complete-reload

Disable DNS from libvirt because dnsmasq will forward to it:

nmcli con mod ${EXTERNAL} ipv4.ignore-auto-dns yes

nmcli con mod ${EXTERNAL} connection.autoconnect yes

Set the DNS on the internal network to dnsmasq:

nmcli con mod ${INTERNAL} ipv4.dns "10.120.120.250"

Set the IP address for the internal network:

nmcli con mod ${INTERNAL} ipv4.address "10.120.120.250/24"

nmcli con mod ${INTERNAL} ipv4.dns-search "lab-cluster.okd4.lab"

nmcli con mod ${INTERNAL} connection.autoconnect yes

nmcli con mod ${INTERNAL} ipv4.method manual

I have decided to allow all traffic on the internal zone instead of opening up all the individual ports. Do this at your own risk.

Make sure that systemd-resolved is disabled and that NetworkManager uses the default DNS settings:

systemctl disable systemd-resolved

systemctl stop systemd-resolved

systemctl mask systemd-resolved

sed -i '/\[main\]/a dns=default' /etc/NetworkManager/NetworkManager.conf

systemctl restart NetworkManager

Step 2C: Install and configure services

To save some commands, install all of the packages needed for this project in one go.

Install the httpd, tftp-server, dnsmasq, haproxy, and syslinux packages:

dnf install httpd tftp-server dnsmasq haproxy syslinux -y

Enable the services:

systemctl enable httpd tftp.socket dnsmasq haproxy

Because HAProxy binds to port 80, we need to move Apache to a different port. Port 8080 is a reasonable choice:

sed -i 's/^Listen 80$/Listen 8080/' /etc/httpd/conf/httpd.conf

Make sure SELinux doesn't get in the way:

semanage port -m -t http_port_t -p tcp 8080

Add the Apache port to the internal firewall zone. This is not necessary if you have set the internal zone to accept everything:

firewall-cmd --add-port=8080/tcp --permanent --zone=internal

Next, create the Apache directories for the CoreOS images and the Ignition files:

mkdir /var/www/html/fedcos

mkdir /var/www/html/ignition

CoreOS files are downloaded from https://getfedora.org/coreos/download/

For this installation, I am using the Bare Metal images.

We are going to use symlinks with stable names that point to the current CoreOS release. That way, neither the configuration files nor the PXE menu need to change when the CoreOS image is updated:

cd /var/www/html/fedcos

ln -s fedora-coreos-31.20200210.3.0-live-initramfs.x86_64.img installer-initramfs.img

ln -s fedora-coreos-31.20200210.3.0-live-kernel-x86_64 installer-kernel

ln -s fedora-coreos-31.20200210.3.0-metal.x86_64.raw.xz metal.raw.gz

ln -s fedora-coreos-31.20200210.3.0-metal.x86_64.raw.xz.sig metal.raw.gz.sig
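Because only the version string changes between releases, the four symlinks can be regenerated from a single value. This is a minimal sketch, assuming the downloaded files keep the naming pattern shown above; the function name link_fcos_images and its two arguments are hypothetical.

```bash
# Point the stable names used by the PXE menu at one Fedora CoreOS release.
# $1 = directory holding the images, $2 = release version string
link_fcos_images() {
    dir=$1
    ver=$2
    # -s symbolic link, -f replace an existing link, -n do not follow the old link
    ln -sfn "fedora-coreos-${ver}-live-initramfs.x86_64.img" "${dir}/installer-initramfs.img"
    ln -sfn "fedora-coreos-${ver}-live-kernel-x86_64" "${dir}/installer-kernel"
    ln -sfn "fedora-coreos-${ver}-metal.x86_64.raw.xz" "${dir}/metal.raw.gz"
    ln -sfn "fedora-coreos-${ver}-metal.x86_64.raw.xz.sig" "${dir}/metal.raw.gz.sig"
}
```

For the release used in this guide, the call would be link_fcos_images /var/www/html/fedcos 31.20200210.3.0. Running it again with a newer version string repoints the links in place.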

Step 2D: Set up dnsmasq

Most of the setup for dnsmasq is done in its configuration file. However, in a single master OKD4 deployment, I found that the maximum number of concurrent DNS requests was being reached. The default is 150. To increase this, you need to edit the systemd unit file located at /etc/systemd/system/multi-user.target.wants/dnsmasq.service so that it looks like this:

[Unit]

Description=DNS caching server.

After=network.target

[Service]

ExecStart=/usr/sbin/dnsmasq --dns-forward-max=500 -k

[Install]

WantedBy=multi-user.target

Finally, reload systemd:

systemctl daemon-reload

After that is complete, we are ready to start editing the dnsmasq.conf file. This file is located at /etc/dnsmasq.conf on CentOS 8. Dnsmasq is used for both DHCP and DNS, and it implements the official DHCP options. Review the list of official DHCP options, as it helps you make sense of the dhcp-option= settings in the file. While some of the DHCP options in dnsmasq have "pretty" names, I avoided using them for consistency's sake, as several of the options used below do not have one. The syntax of the dhcp-option is as follows:

dhcp-option=<option number>,<argument>

It is important to know that the Fedora CoreOS DHCP client does not currently use send-name. This means that while a host receives its hostname and IP from the DHCP server, it does not register with the DNS portion of dnsmasq. This led me to statically set the IPs with dnsmasq. This is done using tagging, like so:

dhcp-mac=set:<tag name>,<mac address>

Once you have tagged the MAC address, you can then use this tag to help identify the host. Below is the hostname that is sent to the new VM:

dhcp-option=tag:<tag name>,12,<hostname>
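Each host ends up with three related lines in dnsmasq.conf: the tag, the hostname sent through option 12, and a dhcp-host entry that pins the IP address. As a rough sketch, they can be generated from one set of values so they cannot drift apart. The function name dnsmasq_host_lines is hypothetical; the output format follows the dnsmasq options used in this guide.

```bash
# Print the three dnsmasq lines that pin one host: tag, hostname, fixed IP.
# $1 = tag, $2 = MAC address, $3 = hostname, $4 = IP address
dnsmasq_host_lines() {
    printf 'dhcp-mac=set:%s,%s\n' "$1" "$2"
    printf 'dhcp-option=tag:%s,12,%s\n' "$1" "$3"
    printf 'dhcp-host=%s,%s\n' "$2" "$4"
}

# Example for the master node used in this guide.
dnsmasq_host_lines master 52:54:00:06:94:85 master-0.lab-cluster.okd4.lab 10.120.120.50
```

The printed lines can be pasted into the configuration file in place of hand-typed entries.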

I am not going to cover all of the OpenShift 4 requirements in depth. You can find the requirements in the official documentation. For DNS, you need to have:

- Forward/reverse lookup for all hosts

- A wildcard entry that points to a load balancer fronting the routers (in our case it is the gateway IP handled by HAProxy)

- SRV records for each master/ETCD host

- An API record pointing to load balancers

- API-INT record pointing to load balancers (again for me it's HAProxy)

We are going to configure these in dnsmasq using the host-record option. This option is at the bottom of the file below:

# Setup DNSMasq on Guest For Default NAT interface

# dnsmasq.conf

# Listen on the internal interface; replace enp2s0 with your internal interface name (INTERNAL in Step 2B)

listen-address=::1,127.0.0.1,10.120.120.250

interface=enp2s0

expand-hosts

# This is the domain the cluster will be on

domain=lab-cluster.okd4.lab

# The 'upstream' dns server... This should be the libvirt NAT

# interface on your computer/laptop

server=192.168.122.1

local=/okd4.lab/

local=/lab-cluster.okd4.lab/

# Set the wildcard DNS to point to the internal interface

# HAProxy will be listening on this interface

address=/.apps.lab-cluster.okd4.lab/10.120.120.250

dhcp-range=10.120.120.20,10.120.120.220,12h

dhcp-leasefile=/var/lib/dnsmasq/dnsmasq.leases

# Specifies the pxe binary to serve and the address of

# the pxeserver (this host)

dhcp-boot=pxelinux.0,pxeserver,10.120.120.250

# dhcp option 3 is the router

dhcp-option=3,10.120.120.250

# dhcp option 6 is the DNS server

dhcp-option=6,10.120.120.250

# dhcp option 28 is the broadcast address

dhcp-option=28,10.120.120.255

# The set is required for tagging. Set a mac address with a specific tag

dhcp-mac=set:bootstrap,52:54:00:15:30:f2

dhcp-mac=set:master,52:54:00:06:94:85

dhcp-mac=set:worker,52:54:00:82:fc:9e

# Match the tag with a specific hostname

# dhcp option 12 is the hostname to hand out to clients

dhcp-option=tag:bootstrap,12,bootstrap.lab-cluster.okd4.lab

dhcp-option=tag:master,12,master-0.lab-cluster.okd4.lab

dhcp-option=tag:worker,12,worker-0.lab-cluster.okd4.lab

dhcp-host=52:54:00:15:30:f2,10.120.120.99

dhcp-host=52:54:00:06:94:85,10.120.120.50

dhcp-host=52:54:00:82:fc:9e,10.120.120.60

# the log-dhcp option is verbose logging used for debugging

log-dhcp

pxe-prompt="Press F8 for menu.", 10

pxe-service=x86PC, "PXEBoot Server", pxelinux

enable-tftp

tftp-root=/var/lib/tftpboot

# Set the SRV record for etcd. Normally there are 3 or more entries in

# SRV record

srv-host=_etcd-server-ssl._tcp.lab-cluster.okd4.lab,etcd-0.lab-cluster.okd4.lab,2380,0,10

# host-record options are linking the mac, ip and hostname for dns

host-record=52:54:00:dd:5e:07,10.120.120.250,bastion.okd4.lab

host-record=52:54:00:15:30:f2,10.120.120.99,bootstrap.lab-cluster.okd4.lab

host-record=52:54:00:06:94:85,10.120.120.50,master-0.lab-cluster.okd4.lab

host-record=52:54:00:06:94:85,10.120.120.50,etcd-0.lab-cluster.okd4.lab

host-record=52:54:00:82:fc:9e,10.120.120.60,worker-0.lab-cluster.okd4.lab

# These host records are for the api and api-int to point to HAProxy

host-record=bastion.okd4.lab,api.lab-cluster.okd4.lab,10.120.120.250,3600

host-record=bastion.okd4.lab,api-int.lab-cluster.okd4.lab,10.120.120.250,3600

Copy the contents of the above file over /etc/dnsmasq.conf, and then restart the service:

sudo systemctl restart dnsmasq

NOTE: Don't forget to change the MAC addresses in the above file to match your VMs' MAC addresses.
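Before moving on, it can help to confirm that every required name resolves. The sketch below assumes the dig utility is installed (it ships in the bind-utils package on CentOS). The function names are hypothetical, and test.apps is just an arbitrary name chosen to exercise the wildcard entry.

```bash
# Print the DNS names the cluster needs to resolve.
# $1 = cluster name, $2 = base domain
required_dns_names() {
    domain="$1.$2"
    for host in api api-int bootstrap master-0 etcd-0 worker-0 test.apps; do
        printf '%s.%s\n' "$host" "$domain"
    done
}

# Query each name against one DNS server and flag the ones with no answer.
# $1 = DNS server to ask (the gateway's internal IP in this guide)
check_dns() {
    required_dns_names lab-cluster okd4.lab | while read -r name; do
        answer=$(dig +short "$name" "@$1")
        printf '%-45s %s\n' "$name" "${answer:-MISSING}"
    done
}
```

Run check_dns 10.120.120.250 on the gateway after the restart. Running dnsmasq --test before the restart also catches syntax errors in the configuration file.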

Step 2E: HAProxy setup

As OKD represents a community version of an enterprise solution, a load balancer is assumed to be functioning in your environment. To achieve this, we are going to use a software load balancer called HAProxy. It's relatively straightforward to set up.

The first thing to do is to make sure that SELinux allows the needed HAProxy ports:

semanage port -a -t http_port_t -p tcp 6443

semanage port -a -t http_port_t -p tcp 22623

Port 6443 is the API port, which is used for master-to-master communication, the Web UI, and the oc command-line utilities.

Port 22623 provides configuration files to new hosts joining the cluster. Both ports are required for the successful operation of the cluster, and both are assumed to have multiple backends in the load balancer.

To accomplish this, simply edit the haproxy.cfg so that it looks similar to this:

# HAProxy Config section

# Global settings

#---------------------------------------------------------------------

global

maxconn 20000

log /dev/log local0 info

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 300s

timeout server 300s

timeout http-keep-alive 10s

timeout check 10s

maxconn 20000

frontend control-plane-api

bind *:6443

mode tcp

default_backend control-plane-api

option tcplog

backend control-plane-api

balance source

mode tcp

server master-0 master-0.lab-cluster.okd4.lab:6443 check

server bootstrap bootstrap.lab-cluster.okd4.lab:6443 check backup

frontend control-plane-bootstrap

bind *:22623

mode tcp

default_backend control-plane-bootstrap

option tcplog

backend control-plane-bootstrap

balance source

mode tcp

server master-0 master-0.lab-cluster.okd4.lab:22623 check

server bootstrap bootstrap.lab-cluster.okd4.lab:22623 check backup

frontend ingress-router-http

bind *:80

mode tcp

default_backend ingress-router-http

option tcplog

backend ingress-router-http

balance source

mode tcp

server worker-0 worker-0.lab-cluster.okd4.lab:80 check

frontend ingress-router-https

bind *:443

mode tcp

default_backend ingress-router-https

option tcplog

backend ingress-router-https

balance source

mode tcp

server worker-0 worker-0.lab-cluster.okd4.lab:443 check

I am running the ingress router on the single worker node. This requires some tweaking of OpenShift before and after the installation. This is reflected in the HAProxy config above by the backend ingress-router-http and backend ingress-router-https sections. If you are using more nodes, simply add server lines to the appropriate backend section in the HAProxy configuration.

HAProxy is prone to failure any time you restart dnsmasq, because it cannot resolve hosts during the restart, however brief. In addition, I have observed that HAProxy sometimes fails to start on reboot for the same reason. The solution is to simply restart it after dnsmasq is running (although you can optionally edit the systemd service unit for HAProxy to make sure it starts after dnsmasq).
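One way to express that ordering without editing the packaged unit file is a systemd drop-in. This is a minimal sketch rather than a tested fix: the function name write_haproxy_dropin and the file name after-dnsmasq.conf are hypothetical, and After= only orders startup, so it does not guarantee that dnsmasq is already answering queries.

```bash
# Write a systemd drop-in that orders HAProxy after dnsmasq.
# $1 = drop-in directory (defaults to the standard location for haproxy.service)
write_haproxy_dropin() {
    dir=${1:-/etc/systemd/system/haproxy.service.d}
    mkdir -p "$dir"
    cat > "$dir/after-dnsmasq.conf" << 'EOF'
[Unit]
# Start HAProxy only after dnsmasq, so backend hostnames can resolve
After=dnsmasq.service
Wants=dnsmasq.service
EOF
}
```

Run write_haproxy_dropin as root, then systemctl daemon-reload and systemctl restart haproxy to pick up the change.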

Step 2F: Setting up TFTP

Setting up the TFTP server involves only a couple of steps:

- Install the TFTP package

- Copy the files from syslinux to the TFTP root

- Make the directory for the menus

- Create a menu file for each server

Copy the bootloaders into the TFTP server:

cp -r /usr/share/syslinux/* /var/lib/tftpboot/

Make the pxelinux.cfg:

mkdir /var/lib/tftpboot/pxelinux.cfg

The file below references resources hosted by Apache, so we want to make sure the menu has port 8080 for the pull. Because HAProxy is listening on both standard web ports (80 and 443), in an earlier step, we told Apache to listen on port 8080.

# master pxe file

UI menu.c32

DEFAULT fedcos

TIMEOUT 100

MENU TITLE RedHat CoreOS Node Installation

MENU TABMSG Press ENTER to boot or TAB to edit a menu entry

LABEL fedcos

MENU LABEL Install fedcos

KERNEL http://bastion.okd4.lab:8080/fedcos/installer-kernel

INITRD http://bastion.okd4.lab:8080/fedcos/installer-initramfs.img

APPEND console=tty0 console=ttyS0 ip=dhcp rd.neednet=1 coreos.inst=yes coreos.inst.ignition_url=http://bastion.okd4.lab:8080/ignition/master.ign coreos.inst.image_url=http://bastion.okd4.lab:8080/fedcos/metal.raw.gz coreos.inst.install_dev=vda

IPAPPEND 2

NOTE: You should create this file for each host you have. The file name should be 01-<mac addresses with '-' instead of ':'>. The dnsmasq lease looks like this:

1582066860 52:54:00:06:94:85 10.120.120.50 * 01:52:54:00:06:94:85

Therefore, use the fifth field to match with the TFTP server. Given the MAC address of 52:54:00:06:94:85, the full path to the file would be /var/lib/tftpboot/pxelinux.cfg/01-52-54-00-06-94-85
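The file name can be derived from the MAC address instead of typed by hand. A small sketch follows; the function name pxe_menu_name is hypothetical. PXELINUX looks for the 01 prefix followed by the MAC address in lowercase hex with dashes.

```bash
# Convert a MAC address into the per-host PXELINUX menu file name:
# prefix 01 (Ethernet), lowercase hex, dashes instead of colons.
pxe_menu_name() {
    printf '01-%s\n' "$(printf '%s' "$1" | tr 'A-F:' 'a-f-')"
}

pxe_menu_name 52:54:00:06:94:85    # prints 01-52-54-00-06-94-85
```

The result can be used directly as the target file name under /var/lib/tftpboot/pxelinux.cfg/ when copying the menu file for each host.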

You are now ready to enable and start all of the services.

systemctl enable --now httpd dnsmasq tftp.socket

systemctl enable --now haproxy

Step 3: Set up the gateway to be the "bastion" for installation

The steps for setting up an environment are almost identical between OCP4 and OKD. The main differences are:

- Different binaries: The OKD binaries are obtained in a different manner

- OKD/Fedora CoreOS looks for the signature for the metal.raw.gz file

- Need to manually edit the etcd quorum guard if you have fewer than three masters

OKD clients can be downloaded from the OKD releases page on GitHub (https://github.com/openshift/okd/releases).

Extract and place the binaries in /bin (or alter your PATH so that the binaries are there).

wget https://github.com/openshift/okd/releases/download/4.4.0-0.okd-2020-01-28-022517/openshift-client-linux-4.4.0-0.okd-2020-01-28-022517.tar.gz

tar xvf openshift-client-linux-4.4.0-0.okd-2020-01-28-022517.tar.gz

At this time, the only release that appears to be working is 2020-01-28-022517. I have tried several others without success. Make sure you have the correct version by running the following command:

./oc adm release extract --tools registry.svc.ci.openshift.org/origin/release:4.4.0-0.okd-2020-01-28-022517

tar xvf openshift-install-linux-4.4.0-0.okd-2020-01-28-022517.tar.gz

mv oc openshift-install /bin/

The pull secrets are optional for OKD. However, they are helpful for testing specific operators. I recommend getting a 60-day evaluation for the pull secret before installation. Obtain your pull secret from here.

Next, create your SSH key and a directory from which to launch the installer.

Generate an ssh key:

ssh-keygen -N ''

Make a new directory for OCP install:

mkdir /root/$(date +%F)

cd /root/$(date +%F)

Set up your environment variables to make the rest of the configuration easier.

ENSURE THAT ALL VARIABLES ARE NOT EMPTY! The rest of this guide relies on the environment variables to fill in configuration files.

Create a variable to hold the SSH_KEY:

SSH_KEY=\'$(cat ~/.ssh/id_rsa.pub )\'

Create a variable to hold the PULL_SECRET:

PULL_SECRET=\'$(cat ocp4_pullsecret.txt)\'

BASE_DOMAIN=okd4.lab

CLUSTER_NAME=lab-cluster

CLUSTER_NETWORK='10.128.0.0/14'

SERVICE_NETWORK='172.30.0.0/16'

NUMBER_OF_WORKER_VMS=1

NUMBER_OF_MASTER_VMS=1

INGRESS_WILDCARD=apps.${CLUSTER_NAME}.${BASE_DOMAIN}
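Since an empty variable silently produces a broken install-config.yaml, a short guard is worth running before generating the file. This is a sketch only; the function name require_vars is hypothetical, and it uses eval for the indirect lookup so that it also works in a plain POSIX shell.

```bash
# Fail loudly if any of the named variables is unset or empty.
# Arguments are variable names, not values.
require_vars() {
    missing=0
    for name in "$@"; do
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "ERROR: $name is empty" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For this guide, the call would be require_vars SSH_KEY PULL_SECRET BASE_DOMAIN CLUSTER_NAME CLUSTER_NETWORK SERVICE_NETWORK NUMBER_OF_WORKER_VMS NUMBER_OF_MASTER_VMS INGRESS_WILDCARD. A non-zero exit status means at least one of them needs attention.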

The cat command below replaces the variables with the shell variables created above. This is the initial cluster configuration YAML file that is used to create the manifests and Ignition files.

cat << EOF > install-config.yaml.bak
apiVersion: v1
baseDomain: ${BASE_DOMAIN}
compute:
- hyperthreading: Enabled
  name: worker
  replicas: ${NUMBER_OF_WORKER_VMS}
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: ${NUMBER_OF_MASTER_VMS}
metadata:
  name: ${CLUSTER_NAME}
networking:
  clusterNetwork:
  - cidr: ${CLUSTER_NETWORK}
    hostPrefix: 23
  networkType: OpenShiftSDN
  serviceNetwork:
  - ${SERVICE_NETWORK}
platform:
  none: {}
fips: false
pullSecret: ${PULL_SECRET}
sshKey: ${SSH_KEY}
EOF

The reason we create the backup is that running the openshift-install command will consume the file and we want to have a copy of it in case we start over or create a new cluster.

Next, copy the file to the name which is consumed by the installation process:

cp install-config.yaml.bak install-config.yaml

I have run several tests with OKD 4.4.x, trying to adjust the manifests for the Ingress Controller and the CVO. Adjusting these files instead of using the stock IGN files seems to result in the bootstrap machine hanging after receiving the DHCP response from the server, so we will make these adjustments in a post-install step.

Next, generate the Ignition configurations, which will consume the manifests directory. Copy these Ignition configurations to the appropriate Apache directory:

openshift-install create ignition-configs

cp *.ign /var/www/html/ignition/

Change the ownership on all the files so Apache can access them:

chown -Rv apache: /var/www/html/*

Finally, PXEboot the VMs. If everything is configured correctly, the cluster starts to configure itself.

On a computer with limited resources, only start the bootstrap and master VMs. After the bootstrap process is complete, you can start the worker/infra/compute node and take down the bootstrap machine. It can take quite some time for this to complete (30 minutes or more).

Step 4: Post-installation cluster config

As long as you are interacting with the cluster on the same date as you started the process, you can run the following to export the KUBECONFIG variable that is needed for interacting with the cluster:

export KUBECONFIG=/root/$(date +%F)/auth/kubeconfig
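If you come back on a later date, the date-based path no longer matches the install directory. As a rough sketch, the most recent install directory can be located by name instead. The function name latest_kubeconfig is hypothetical, and it assumes the dated directory layout created in Step 3.

```bash
# Print the kubeconfig from the most recent dated install directory under $1.
# Directory names are ISO dates (YYYY-MM-DD), so a plain sort is chronological.
latest_kubeconfig() {
    root=${1:-/root}
    for f in "$root"/[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]/auth/kubeconfig; do
        [ -f "$f" ] && printf '%s\n' "$f"
    done | sort | tail -n 1
}
```

With that in place, export KUBECONFIG=$(latest_kubeconfig /root) works regardless of the current date. The function prints nothing if no install directory is found.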

You can run commands such as:

openshift-install wait-for bootstrap-complete

openshift-install wait-for install-complete

to monitor the initial installation process. The bootstrap-complete command will tell you when it is safe to stop the bootstrap machine. The install-complete command simply checks the API every so often to ensure everything has completed.

During the addition of the worker node, you may see it not being ready, or not even listed. Use the following commands to view the status.

Check to see if the nodes are ready:

oc get nodes

Sometimes there are CSRs to approve so put a watch on:

watch oc get csr

When a new server attempts to join a cluster, there are often certificate signing requests that are issued by said server so that the cluster trusts the new node. If you do not see nodes, and you have pending CSRs, you need to approve them.

If there are pending CSRs, approve them:

oc adm certificate approve `oc get csr |grep Pending |awk '{print $1}' `
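The one-liner above fails with an error when nothing is pending, and grep matches "Pending" anywhere on the line. A slightly more defensive sketch is shown below. The function names are hypothetical, and it assumes CONDITION is the last column of the oc get csr output, as it is in the default view.

```bash
# Read `oc get csr` output on stdin and print the names of pending requests.
# Only the last column (CONDITION) is compared, and the header row is skipped.
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Approve every pending request; does nothing when the list is empty.
approve_pending_csrs() {
    oc get csr | pending_csrs | while read -r csr; do
        oc adm certificate approve "$csr"
    done
}
```

Blanket approval is reasonable in a self-contained lab like this one. On a shared cluster, inspect each request before approving it.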

Once the worker shows up in the list, label it so that the ingress operator can proceed:

oc label node worker-0.lab-cluster.okd4.lab node-role.kubernetes.io/infra="true"

Finally, watch the cluster operators. This process can take up to a couple of hours to complete before everything goes "True," depending on Internet speed and system resources:

oc get clusteroperators

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE

authentication 4.3.1 False False False 14h

cloud-credential 4.3.1 True False False 20h

cluster-autoscaler 4.3.1 True False False 18h

console 4.3.1 False False False 9h

dns 4.3.1 True False False 18m

image-registry 4.3.1 True False False 18h

ingress 4.3.1 False True True 11m

insights 4.3.1 True False False 19h

kube-apiserver 4.3.1 True False False 19h

kube-controller-manager 4.3.1 True False False 19h

kube-scheduler 4.3.1 True False False 19h

machine-api 4.3.1 True False False 19h

machine-config 4.3.1 True False False 19h

marketplace 4.3.1 True False False 18m

monitoring 4.3.1 False True True 9h

network 4.3.1 True True True 19h

node-tuning 4.3.1 True False False 9h

openshift-apiserver 4.3.1 True False False 17m

openshift-controller-manager 4.3.1 True False False 18m

openshift-samples 4.3.1 True False False 18h

operator-lifecycle-manager 4.3.1 True False False 19h

operator-lifecycle-manager-catalog 4.3.1 True False False 19h

operator-lifecycle-manager-packageserver 4.3.1 True False False 18m

service-ca 4.3.1 True False False 19h

service-catalog-apiserver 4.3.1 True False False 18h

service-catalog-controller-manager 4.3.1 True False False 18h

storage 4.3.1 True False False 18h
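Scanning that table by eye gets tedious. The filter below is a minimal sketch that lists only the operators that have not settled. The function name unsettled_operators is hypothetical, and it assumes the column order shown above with every column populated.

```bash
# Read `oc get clusteroperators` output on stdin and print every operator
# that is not Available, or that is still Progressing or Degraded.
unsettled_operators() {
    awk 'NR > 1 && ($3 != "True" || $4 != "False" || $5 != "False") { print $1 }'
}
```

Use it as oc get clusteroperators | unsettled_operators. An empty result means every operator reports Available with nothing progressing or degraded.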

After the cluster is up, consider scaling most of the replicas down to one to reduce resources:

oc scale --replicas=1 ingresscontroller/default -n openshift-ingress-operator

oc scale --replicas=1 deployment.apps/console -n openshift-console

oc scale --replicas=1 deployment.apps/downloads -n openshift-console

oc scale --replicas=1 deployment.apps/oauth-openshift -n openshift-authentication

oc scale --replicas=1 deployment.apps/packageserver -n openshift-operator-lifecycle-manager

When enabled, the Operator will auto-scale these services back to the original quantity:

oc scale --replicas=1 deployment.apps/prometheus-adapter -n openshift-monitoring

oc scale --replicas=1 deployment.apps/thanos-querier -n openshift-monitoring

oc scale --replicas=1 statefulset.apps/prometheus-k8s -n openshift-monitoring

oc scale --replicas=1 statefulset.apps/alertmanager-main -n openshift-monitoring

Step 5: OKD-specific post install

If you have a single master, you need to adjust the clusterversion operator. Create a YAML file named etcd_quorum_guard.yaml with the following contents:

- op: add
  path: /spec/overrides
  value:
  - kind: Deployment
    group: apps/v1
    name: etcd-quorum-guard
    namespace: openshift-machine-config-operator
    unmanaged: true

We need to have this unmanaged, or else the number of replicas will always be reverted to three. Patch this file in:

oc patch clusterversion version --type json -p "$(cat etcd_quorum_guard.yaml)"

Next, we need to patch the ingress controller to set the label to match the worker as well as reduce the number of replicas to coincide with the number of workers we have:

oc patch -n openshift-ingress-operator ingresscontroller/default --patch '{"spec":{"replicas": 1,"nodePlacement":{"nodeSelector":{"matchLabels":{"node-role.kubernetes.io/infra":"true"}}}}}' --type=merge

Finally, scale down the etcd-quorum-guard:

oc scale --replicas=1 deployment/etcd-quorum-guard -n openshift-machine-config-operator

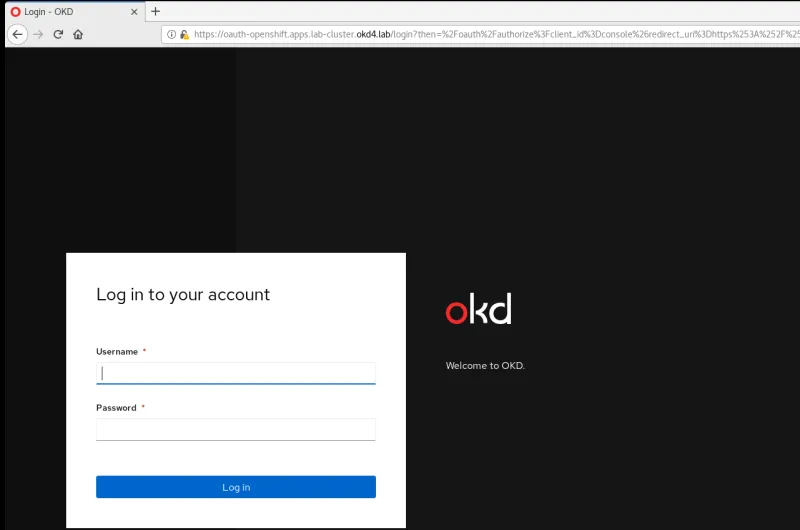

Step 6: Access your new cluster

NOTE: To access the cluster behind the gateway computer, you need to either route to the gateway computer or simply use the gateway VM to interact with the cluster.

You should be able to find the URL to your cluster login page by running the following command:

[root@gateway 2020-04-17]# oc get routes --all-namespaces |grep console |grep -v download

openshift-console console console-openshift-console.apps.lab-cluster.okd4.lab console https reencrypt/Redirect None

Since you are already at the command line, you should also find the default username and password in the auth directory in your install directory:

[root@gateway 2020-04-17]# ls /root/$(date +%F)/auth/kubeadmin-password

/root/2020-04-17/auth/kubeadmin-password

You can now log in with the user kubeadmin and the password that is in the file.

Concluding thoughts

I really love the usability that OpenShift allows for in terms of ease of installation, configuration, and operation of a complex Kubernetes environment. I think that it is the best way to get acclimatized to the container orchestration world. There are many standard integrations that you might want to use, including but not limited to:

- Storage for registry

- Storage/extra disk for Prometheus

- LDAP/Active Directory/OAuth integration

- Replacing the wildcard certificates for the *.apps domain

- Integration with a third party image registry

- Jenkins integration

- Source control integration if using OpenShift to do builds

So there you have a straightforward way to install OpenShift OKD 4 in a lab environment. I recognize that this configuration is non-standard, but it allows you to carry a small cluster around on your laptop without exposing it to any WiFi networks directly.


About the author

Steve is a dedicated IT professional and Linux advocate. Prior to joining Red Hat, he spent several years in financial, automotive, and movie industries. Steve currently works for Red Hat as an OpenShift consultant and has certifications ranging from the RHCA (in DevOps), to Ansible, to Containerized Applications and more. He spends a lot of time discussing technology and writing tutorials on various technical subjects with friends, family, and anyone who is interested in listening.
