One of the fantastic things about mobile technology is that you can conduct a call on your cellphone while traveling hundreds of miles on a high-speed bullet train and never lose the connection. It's all due to mobile handoff architecture, which has evolved into a near-magical technology over the years.

In my previous article about the essentials of mobile computing architecture, I covered how radio waves work and how they relate to cellular communication. In this article, I'll move on to the mechanics of connecting calls and the architecture that enables customers to pay for their mobile service.

The basics of call transfer

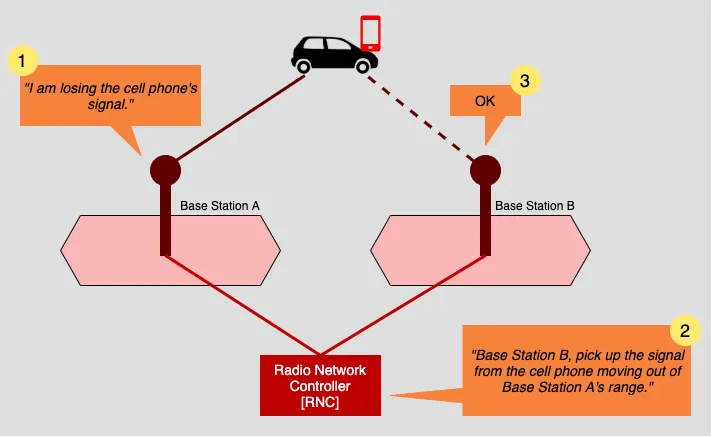

Initially, cellular networks used an architecture in which base stations were pretty dumb. They could receive and transmit radio signals, but all other activity was handled by a separate device called a base station controller (BSC) in 2G networks, which evolved into the Radio Network Controller (RNC) in 3G networks. These controllers had the smarts required to negotiate and monitor a caller's access to the network. Each controller also knew every base station under its control, which enabled it to manage handing off a cellphone from one base station to another as the mobile device moved in and out of range. Figure 1 below illustrates the essential principle behind call handoff management using a central Radio Network Controller.

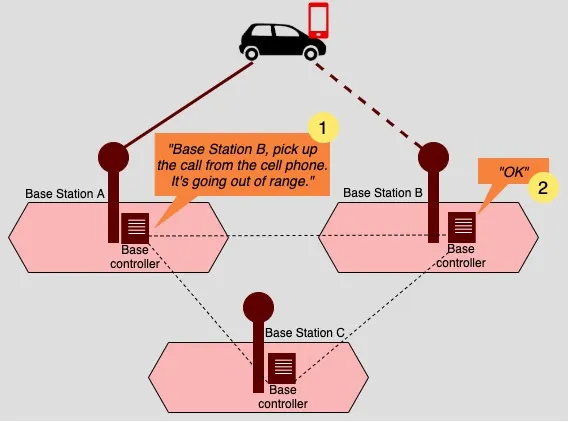

Eventually, with the release of 4G LTE technology, control of call and user activity moved directly onto the base station (called an eNodeB in LTE). Each base station became aware of the other base stations in its vicinity and could communicate with them directly over a link known as the X2 interface. This effectively put handoff activity under the control of the base stations themselves.
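The sketch below shows the kind of rule that sits behind a decentralized handoff decision. It is a minimal Python sketch, loosely modeled on the LTE "A3" measurement event, in which a neighboring cell's signal must beat the serving cell's by a margin for a sustained period. In LTE, the phone evaluates a condition like this and sends a measurement report, and the serving base station then decides whether to hand off. The class name, the 3 dB hysteresis, and the three-report time-to-trigger are hypothetical values chosen for illustration. They are not values taken from any real network configuration.

```python
from dataclasses import dataclass, field


@dataclass
class HandoffDecider:
    """Toy handoff trigger, loosely modeled on LTE's "A3" measurement event.

    Fires when a neighbor cell's signal beats the serving cell's by more than
    hysteresis_db for time_to_trigger consecutive measurement reports.
    """

    hysteresis_db: float = 3.0  # hypothetical margin that prevents ping-ponging
    time_to_trigger: int = 3    # hypothetical number of consecutive reports required
    _streak: int = field(default=0, init=False, repr=False)

    def report(self, serving_dbm: float, neighbor_dbm: float) -> bool:
        """Feed one measurement; return True once a handoff should happen."""
        if neighbor_dbm > serving_dbm + self.hysteresis_db:
            self._streak += 1
        else:
            self._streak = 0  # condition broken, so start counting again
        return self._streak >= self.time_to_trigger


# A serving cell fading while a neighbor grows stronger as the train moves.
decider = HandoffDecider()
reports = [(-80, -90), (-85, -84), (-88, -83), (-90, -82), (-93, -80)]
for step, (serving, neighbor) in enumerate(reports):
    if decider.report(serving, neighbor):
        print(f"report {step}: hand off (serving {serving} dBm, neighbor {neighbor} dBm)")
        break
```

The hysteresis and time-to-trigger exist to protect a phone sitting near a cell boundary. Without them, the phone would bounce back and forth between two base stations on every small fluctuation in signal strength.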

Transferring handoff activity from a central, all-knowing controller to individual base stations might seem like a minor point, but it's quite the opposite. Granted, from the user's point of view, nothing is really different. A caller can still make a call and travel about without any interruption in service. But, behind the scenes, a dramatic shift has occurred.

First, moving from a centrally controlled system to a decentralized system using Evolved UMTS Terrestrial Radio Access Network (E-UTRAN) technology required a complete equipment overhaul. As Figure 2 above shows, each E-UTRAN-powered base station needs to have its own device to facilitate call management activities.

Moving to E-UTRAN was a significant undertaking in terms of both technological transformation and financial cost. There were nearly a quarter-million base stations in the United States in 2009, the year the first commercial LTE networks went live. Given that number, you can easily imagine that upgrade costs ran into the hundreds of millions of dollars, if not more.
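The scale of that bill is easy to check with a little arithmetic. The sketch below multiplies roughly 250,000 sites by a few per-site upgrade prices. Those prices are purely hypothetical placeholders, not figures from any carrier.

```python
# Back-of-the-envelope fleet upgrade cost. The per-site prices are hypothetical.
BASE_STATIONS_US_2009 = 250_000  # "nearly a quarter-million," rounded up


def fleet_upgrade_cost(per_station_usd: float, stations: int = BASE_STATIONS_US_2009) -> float:
    """Total cost of upgrading every base station at a flat per-station price."""
    return per_station_usd * stations


for per_station in (1_000, 10_000, 50_000):
    total = fleet_upgrade_cost(per_station)
    print(f"${per_station:,} per site -> ${total / 1e6:,.0f} million")
```

Even at a deliberately low $1,000 per site, the total comes to $250 million. Every tenfold increase in the per-site price adds a zero to the total.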

More importantly, pushing call management to the base station puts the notion of edge computing at the forefront of architectural design. In the edge computing paradigm, the computational burden is pushed onto the devices receiving and transmitting data instead of being passed back to a central set of computers for processing. Again, it's the difference between a central radio network controller architecture and a decentralized architecture where processing intelligence resides in the base station. It's a big deal.

Understanding Control and User Plane Separation (CUPS)

Handoff management is only one aspect of the processing activity that takes place in a mobile computing architecture. Other vital elements are authenticating and connecting mobile devices to the carrier network. Connecting to the carrier network's backend gives the caller access to the internet. In addition, connecting to the backend facilitates monitoring a customer's usage behavior, which, in turn, allows the carrier to bill the customer according to the monitored usage.

Whether you're talking, texting, or listening to your favorite podcast on your cellphone, that interaction involves two sets of functions. One set belongs to the user plane; the other belongs to the control plane. Splitting these two sets of functions into separate components that can run in different places is formally called Control and User Plane Separation (CUPS).

User-plane functionality carries your actual traffic. That means the packets that make up your voice call, the web page you're loading, or the podcast you're streaming. Control-plane functionality is the signaling that sets up and manages that traffic. Examples include:

- authenticating your phone
- giving it an IP address
- tracking your location as you travel so the network can hand your session off between base stations
- keeping track of the amount of data you're using so the carrier can bill you

In earlier mobile core networks, user-plane and control-plane functions were bundled together in the same gateways, usually at a central location. This approach was an inefficient use of the network. If that central equipment is far away from the mobile device, every packet of user traffic has to travel all the way there and back, even when it's headed somewhere nearby. That traffic is better handled closer to the user. This is the reason behind the separation inherent in CUPS.

Today, user-plane and control-plane functionality are separated. User-plane activity can be executed as close to the user as possible, while control-plane behavior is executed back at central servers on the core network. In practice, the bytes of your video stream can flow through a user-plane node near you. Decisions about your session are still made in the control plane. Those decisions include which IP address you get and how your usage is recorded for the account activity you see on your carrier's website.
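To make that division of labor concrete, here is a toy Python model. It is not how any real 5G core is built, and the class names and 10.0.0.x addresses are hypothetical. The model only mirrors the core idea of CUPS. A control-plane function installs forwarding rules on a user-plane node; in 3GPP networks, this is done with a protocol called PFCP. The user-plane node then forwards traffic and reports usage back. The parameter name `upf` nods to the User Plane Function in 5G.

```python
from dataclasses import dataclass, field


@dataclass
class UserPlaneNode:
    """Forwards subscriber traffic and counts bytes; can sit near the user."""

    location: str
    rules: dict[str, str] = field(default_factory=dict)  # subscriber IP -> next hop
    usage: dict[str, int] = field(default_factory=dict)  # subscriber IP -> bytes forwarded

    def forward(self, ip: str, payload: bytes) -> str:
        next_hop = self.rules[ip]  # only sessions the control plane set up are forwarded
        self.usage[ip] = self.usage.get(ip, 0) + len(payload)
        return next_hop


@dataclass
class ControlPlane:
    """Makes session decisions centrally; never touches the payload itself."""

    next_host: int = 10  # hypothetical address pool 10.0.0.10, 10.0.0.11, ...
    subscribers: dict[str, str] = field(default_factory=dict)  # IP -> subscriber ID

    def attach(self, subscriber: str, upf: UserPlaneNode) -> str:
        """Assign an IP address and install a forwarding rule on a user-plane node."""
        ip = f"10.0.0.{self.next_host}"
        self.next_host += 1
        self.subscribers[ip] = subscriber
        upf.rules[ip] = "internet"
        return ip

    def usage_report(self, upf: UserPlaneNode) -> dict[str, int]:
        """Turn the user plane's byte counts into per-subscriber usage for billing."""
        return {self.subscribers[ip]: count for ip, count in upf.usage.items()}


edge = UserPlaneNode(location="cell site near the train line")
core = ControlPlane()
ip = core.attach("alice", edge)
edge.forward(ip, b"podcast audio chunk")
print(ip, core.usage_report(edge))
```

The payload never passes through `ControlPlane`. Only the rule going down and the byte counts coming back cross between the two planes, which is what lets the user-plane node live far from the central servers.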

The important thing to understand about CUPS from an architectural perspective is that separating user-plane activities from control-plane activities increases network efficiency. Data only travels as far across the network as it has to, and overall performance increases.
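"Data only travels as far as it has to" has a measurable payoff. This sketch estimates round-trip propagation delay over fiber using the commonly cited figure of about 200 km per millisecond, roughly two-thirds the speed of light in a vacuum. The two distances are hypothetical. The estimate ignores queuing, processing, and radio-link delays.

```python
# Propagation delay only: ignores queuing, processing, and radio-link time.
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation time over fiber to a site distance_km away."""
    return 2 * distance_km / FIBER_KM_PER_MS


central_km = 1_500  # hypothetical distance to a centralized gateway
edge_km = 20        # hypothetical distance to a user-plane node at the edge
print(f"central: {round_trip_ms(central_km):.1f} ms, edge: {round_trip_ms(edge_km):.1f} ms")
```

Under these assumptions, the comparison is 15.0 ms versus 0.2 ms. That gap is paid on every round trip before any processing happens.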

CUPS plays a major role in decreasing the time a signal takes to get to a mobile device (lower latency) and increasing data throughput on the network. In short, CUPS helps satisfy mobile customers' basic desire: getting more data at faster rates. That desire leads us to 5G.

Putting 5G into the mix: The need for speed

5G is the latest generation of technology on the cellular landscape. The motivating force driving its adoption is speed. Some of 5G's promises to mobile users are more data at faster rates, reduced latency for communication sessions, and better support for a high density of IoT devices in a particular physical location. More data at lower latency translates into 4K Ultra HD movies on your cellphone, higher fidelity in audio broadcasting, and fast access to data that changes in millisecond increments.

There are an enormous number of technical details underlying 5G technology. At the highest level, though, 5G intends to satisfy the planet's ever-increasing need for speed in two ways. The first is pushing the frequencies at which mobile carriers transmit signals higher up the spectrum. The second is modifying the design of the physical architecture of mobile base stations.
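Why do higher frequencies mean more speed? Mostly because the upper bands have far more unused spectrum, so carriers can use much wider channels. The Shannon-Hartley theorem, C = B·log2(1 + SNR), says a channel's maximum data rate grows linearly with its bandwidth B. The sketch below compares a 20 MHz channel, the widest single LTE carrier, with a 400 MHz channel, the widest 5G NR carrier in the millimeter-wave bands. It assumes both channels have the same 20 dB signal-to-noise ratio. That is a simplification, since higher bands usually have a harder time maintaining a good SNR.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Theoretical maximum error-free data rate of a channel: C = B * log2(1 + SNR)."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


lte_carrier = shannon_capacity_bps(20e6, snr_db=20)      # widest single LTE carrier
mmwave_carrier = shannon_capacity_bps(400e6, snr_db=20)  # widest 5G NR mmWave carrier
print(f"{lte_carrier / 1e6:.0f} Mbps vs {mmwave_carrier / 1e6:.0f} Mbps")
```

At the same SNR, the wider channel's ceiling is exactly 20 times higher: about 133 Mbps versus about 2.66 Gbps. These are theoretical upper bounds, not rates a phone will actually see.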


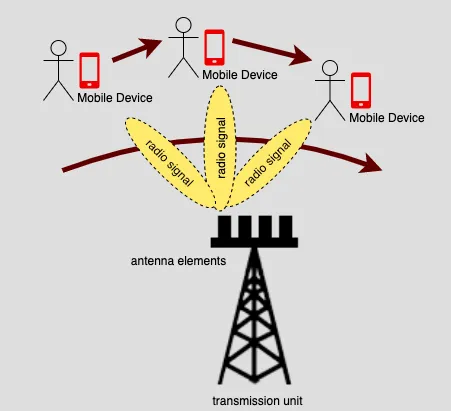

A fundamental principle of cellular networks is that data transmitted at higher frequencies can achieve faster throughput than data transmitted lower down on the spectrum. This is largely because the higher bands have much more spectrum available for wide channels. The problem with transmitting at higher frequencies is that the signals weaken faster with distance. They are also more easily blocked or absorbed by buildings, foliage, and atmospheric conditions such as rain. Thus, to transmit effectively at higher frequencies, base stations need to be closer together. This, in turn, means carriers need many more base stations (that is, cell towers and other types of transmission units) on the landscape. Also, the base stations will be able to transmit signals in a more targeted manner, directly at the cellphone, using beamforming technology (Figure 4).
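How much faster do higher-frequency signals fall off? The Friis free-space path loss formula, (4πdf/c)², gives a first approximation. The sketch below compares 700 MHz and 28 GHz over 1 km. It assumes ideal isotropic antennas and open space with no obstructions. Real links add blockage and absorption losses, while directional antennas, such as beamforming arrays, recover some gain.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss between isotropic antennas, (4*pi*d*f/c)^2, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S)


low_band_loss = free_space_path_loss_db(1_000, 700e6)  # 1 km at 700 MHz
mmwave_loss = free_space_path_loss_db(1_000, 28e9)     # 1 km at 28 GHz
print(f"700 MHz: {low_band_loss:.1f} dB, 28 GHz: {mmwave_loss:.1f} dB, "
      f"extra: {mmwave_loss - low_band_loss:.1f} dB")
```

Because loss depends on the product of distance and frequency, 28 GHz loses about 32 dB more than 700 MHz at the same distance. That is a factor of 40² = 1,600 in power. To see the same free-space loss at 28 GHz, a phone would have to be 40 times closer: 25 m instead of 1 km.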

5G beamforming enables wireless antennas to focus connections more directly toward mobile devices. A transmission tower or unit that implements beamforming has multiple radiating elements transmitting the same signal at the same wavelength. The phase of each element is adjusted so that the signals reinforce one another in the direction of the caller and cancel out in other directions. By continually adjusting those phases, the antenna array steers the beam to follow a particular mobile device. When the device goes out of range, the connection is handed off, and the new base station's array steers its own beam toward the mobile device.
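The phase adjustment described above can be sketched for the simplest possible case. The sketch assumes a uniform linear array of identical elements spaced half a wavelength apart, with the phone far enough away that its signal arrives as a plane wave. These are simplifying assumptions. Real 5G radios use two-dimensional arrays and hybrid analog/digital beamforming, and the function names here are illustrative. Each element gets a phase offset that cancels the extra path length toward the target angle, so all contributions add up in step in that direction.

```python
import cmath
import math

SPEED_OF_LIGHT_M_S = 299_792_458


def steering_phases(num_elements: int, spacing_m: float,
                    wavelength_m: float, angle_deg: float) -> list[float]:
    """Per-element phase offsets (radians) that point a uniform linear array at angle_deg."""
    k = 2 * math.pi / wavelength_m  # wavenumber
    s = math.sin(math.radians(angle_deg))
    return [-k * n * spacing_m * s for n in range(num_elements)]


def array_gain(phases: list[float], spacing_m: float,
               wavelength_m: float, look_deg: float) -> float:
    """Magnitude of the combined field toward look_deg; equals len(phases) when all add in phase."""
    k = 2 * math.pi / wavelength_m
    s = math.sin(math.radians(look_deg))
    return abs(sum(cmath.exp(1j * (phi + k * n * spacing_m * s))
                   for n, phi in enumerate(phases)))


wavelength = SPEED_OF_LIGHT_M_S / 28e9  # about 1.07 cm at 28 GHz
spacing = wavelength / 2                # half-wavelength element spacing
phases = steering_phases(8, spacing, wavelength, angle_deg=30)
print(f"toward the phone (30 degrees): {array_gain(phases, spacing, wavelength, 30):.2f}")
print(f"off to the side (-20 degrees): {array_gain(phases, spacing, wavelength, -20):.2f}")
```

With eight elements, the combined signal toward the phone has eight times the amplitude of a single element, while in the other direction the contributions largely cancel. In this simplified model, recomputing `steering_phases` as the phone moves is what "steering the beam" amounts to.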

The important thing about 5G from an architectural perspective is that it will broaden the scope of cellular activity, particularly as 5G technology moves beyond cellphones and mobile tablets. The lower latency 5G offers is expected to make it an essential part of autonomous vehicle safety. Also, the faster download times should enable edge computing devices to absorb a lot more data much more quickly, thus making them more independent of the network to do commonplace work. Imagine it taking only seconds for a doctor to download to a smartphone the gigabytes of current data and algorithms required for AI to do complex medical analysis. That doctor can then go into an area that's not connected to the internet or a wireless network and have that data and AI capability readily available to do the work of saving lives.
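How fast is "only seconds" for gigabytes of data? This sketch converts a file size and a sustained link rate into a download time, using decimal units (1 GB = 8 × 10⁹ bits). The 4 GB size and both link rates are hypothetical. Real 5G rates vary widely with band, signal quality, and network load.

```python
def download_seconds(size_gb: float, rate_mbps: float) -> float:
    """Time to move size_gb gigabytes at rate_mbps megabits per second (decimal units)."""
    bits = size_gb * 8e9
    return bits / (rate_mbps * 1e6)


for label, rate in (("100 Mbps link", 100), ("2 Gbps link", 2_000)):  # hypothetical rates
    print(f"{label}: {download_seconds(4, rate):.0f} s for 4 GB")
```

The same 4 GB takes 320 seconds at 100 Mbps but 16 seconds at 2 Gbps. Moving gigabytes "in seconds" requires sustained multi-gigabit rates.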

This is what the future under 5G can look like.

Putting it all together

Mobile networking has changed the very nature of enterprise architecture. We've moved way past needing to be tethered to a desktop computer to do meaningful work. Being free of the wire means that we can work just about anywhere on the planet in our own time, at our own pace.

But there's a price to be paid for that freedom. Mobile computing has made enterprise architecture a lot more complex. Years ago, understanding the basic concepts that drove wireless technology was the province of network engineers. In today's world, anybody who touches code and technology needs to understand how these things work. For example, how can you design a mission-critical transportation system without understanding the tradeoffs that come with potential latency problems when vehicles must report their activities back to a central coordinating hub? A few tenths of a second might make all the difference in the world, particularly if the vehicle in question is an ambulance rushing a patient to the hospital.
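To put "a few tenths of a second" in physical terms, this sketch computes how far a vehicle travels while one message is in flight. The 100 km/h speed and the three latency values are hypothetical.

```python
def meters_traveled(speed_kmh: float, latency_ms: float) -> float:
    """Distance a vehicle covers while one message is in flight."""
    meters_per_ms = speed_kmh * 1000 / 3_600_000  # km/h -> meters per millisecond
    return meters_per_ms * latency_ms


for latency_ms in (10, 100, 300):  # hypothetical end-to-end latencies
    print(f"{latency_ms:>3} ms at 100 km/h -> {meters_traveled(100, latency_ms):.2f} m")
```

At 100 km/h, a vehicle covers about 0.28 m in 10 ms, 2.78 m in 100 ms, and 8.33 m in 300 ms. A report that arrives a few tenths of a second late describes a vehicle that is already several meters down the road.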

To be viable in today's world of modern enterprise architecture, it's a good idea to have a basic understanding of the essential principles driving mobile computing. It's not a topic that you can master overnight. There's a lot to learn, but we all need to start somewhere. Hopefully, this was a good place to start.

About the author

Bob Reselman is a nationally known software developer, system architect, industry analyst, and technical writer/journalist. Over a career that spans 30 years, Bob has worked for companies such as Gateway, Cap Gemini, The Los Angeles Weekly, Edmunds.com, and the Academy of Recording Arts and Sciences, to name a few. He has held roles with significant responsibility, including, but not limited to, Platform Architect (Consumer) at Gateway, Principal Consultant with Cap Gemini, and CTO at the international trade finance company ItFex.
