Generative artificial intelligence (gen AI) is one of the most powerful technologies we have seen in decades, and many organizations are scrambling to figure out the best way to implement it while maintaining operations and growing their business.

One of the most important considerations when deploying gen AI is that model selection, training, tuning and lifecycle are only a part of the picture. Often a model will be delivered as part of a cloud-native application. The “last mile” of delivering a business-ready application is key to delivering ROI. Your business needs a foundation that is powerful and flexible enough for you to keep pace with the competition. And as Red Hat suggested in a recent Forbes article, make sure you’re investing in a stable platform that’s going to be able to take in that level of innovation.

Azure Red Hat OpenShift can help accelerate the time to value of AI projects by pre-integrating the DevOps pipeline with data science workflows. Data scientists and data engineers don’t want to spend precious time managing infrastructure and DevOps tools when that time could be better spent managing gen AI models and addressing the business problem.

What does this look like in action? Red Hat and Microsoft are teaming up for a hands-on workshop to demonstrate how Azure Red Hat OpenShift and open source solutions on Microsoft Azure can be used to integrate different AI tools and services to accelerate application delivery.

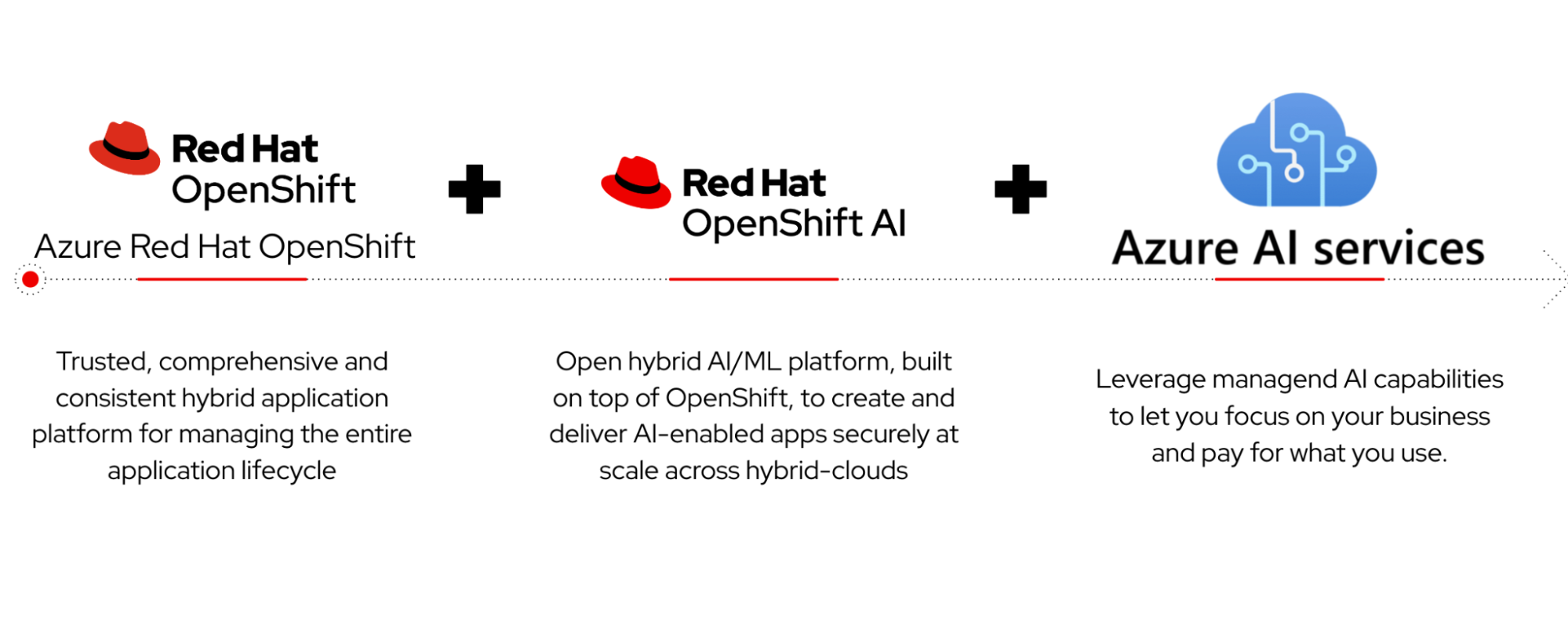

In the workshop, Red Hat and Microsoft showcase the combined benefits of Azure Red Hat OpenShift, Red Hat OpenShift AI, Azure OpenAI models, open source models, Azure compute and data services and the Azure AI Studio to support retrieval-augmented generation (RAG) functionality for improving the performance of gen AI models. Whether an organization uses Red Hat OpenShift AI as its primary AI offering, Azure AI services as its data tool, or both, it gets the flexibility to choose the right MLOps platform for its data science and business teams to rapidly prototype models and convert them into a business application.

Technology in the Parasol Workshop with integrated Red Hat and Azure Services

Workshop at a glance

Let’s dive into what you will learn in this workshop. We use a fictional insurance company to demonstrate how historical claims data could be used to fine-tune an AI model for a chatbot application. You may have first seen this demonstration during the Red Hat Summit 2024 keynote presentation. We have put an additional spin on this demo and use both Red Hat OpenShift AI and Azure OpenAI to compare models from multiple sources across different scenarios, from text summarization to sentiment analysis. From there, we use a vector database for RAG to augment the prompt with external documentation and improve the output. The workshop then takes a YOLO image classification model through an additional tuning and training step to demonstrate a typical data scientist task. Lastly, we demonstrate how to deploy the tuned model on a model server and access it through an API.

Parasol Workshop integration with Azure Red Hat OpenShift, OpenShift AI and Azure OpenAI
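To make the RAG step in the workshop overview more concrete, here is a minimal sketch of the general idea: find the most relevant piece of external documentation for a question, then add it to the prompt before the prompt goes to a model. This is not the workshop's code. The sample documents, the function names and the word-count "embedding" are all assumptions made for illustration; a real setup would use an embedding model and a vector database such as the services named in this post.

```python
import math
import re
from collections import Counter

# Hypothetical policy snippets standing in for external documentation.
DOCUMENTS = [
    "Windshield damage is covered under comprehensive coverage.",
    "Claims must be filed within 30 days of the incident.",
    "Rental car reimbursement is limited to 14 days per claim.",
]


def embed(text):
    """Toy embedding: lowercase word counts (assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(query, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Augment the question with retrieved context before sending it to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("When must claims be filed after an incident?", DOCUMENTS))
```

The sketch stops at building the augmented prompt; sending that prompt to a model served by Red Hat OpenShift AI or Azure OpenAI is deliberately left out, since the endpoint and client details depend on the deployment.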

Once the models are tested and ready to deploy, the data science and development teams can use a GitOps deployment template in Argo CD to deploy a business-ready application that integrates the AI models. This cycle can be repeated through automation of the MLOps and DevOps interface as new models, requirements and enhancements arise. The combination of MLOps and a turnkey DevOps platform that can be used by technical and non-technical users alike can help significantly accelerate the ROI of gen AI applications.

Final integrated application with multiple models, deployed by GitOps

Get production-ready AI solutions faster

You have many goals to achieve, requirements to consider and tools to choose from when planning application modernization efforts in your AI journey. A stable platform that offers the flexibility to choose the right models and AI tools, and that helps you more reliably scale and accelerate application deployment, is critical. The workshop showcases how Red Hat OpenShift AI and Azure OpenAI services can empower organizations to build, deploy and manage AI solutions at scale. Azure Red Hat OpenShift can accelerate AI adoption in enterprise environments, enabling organizations to focus on creating value through AI rather than managing complex infrastructure. With these tools, the path from concept to production-ready AI solutions can be shorter and more straightforward.

Join us for a live workshop

Are you interested in learning more about your gen AI options on Azure Red Hat OpenShift? Join us for a virtual workshop where you’ll have the opportunity to build, train, deploy and compare AI models and integrate them into a GitOps-deployed application. Solutions explored include Azure Red Hat OpenShift, Red Hat OpenShift AI, Azure OpenAI, Azure AI Search, Postgres DB and Cosmos DB.

About the authors

Brooke is a product marketer for Red Hat OpenShift focused on helping organizations get to market faster and with less complexity with Red Hat OpenShift cloud services. Prior to joining Red Hat, she was leading product management and product marketing initiatives in the cloud with managed services. Brooke received her undergraduate degrees and MBA from Virginia Tech, lives in Virginia with her husband and two children, and enjoys gardening, skiing and cheering on her favorite sports teams.

I am a Senior Black Belt on the Managed Cloud Services team at Red Hat, where I have been contributing expertise since March 2022. With a robust background in infrastructure and automation, I bring nearly three years of experience to my role at Red Hat. My career includes significant contributions in the Financial Services and Defense Contracting industries, where I honed my skills in complex technological environments.

Dave Strebel is a Microsoft Principal Cloud Native Architect on the Azure Global Black Belt team for containers. He has worked at Microsoft for the last 8 years on cloud native solutions, DevOps and AI-based solutions. Prior to joining Microsoft, Dave worked at MSPs and integrators and in customer environments.
