In part three of our Davie Street Enterprises (DSE) series, we're going to take a look at how DSE addressed some of its network issues that led to a major outage.

DSE Chief Architect Daniel Mitchell has been put in charge of the company's digital transformation. He has been under pressure from management since the website crashed last month and stayed down for 46 hours straight. Shifting from one-off configurations to an Infrastructure-as-Code (IaC) model is now critical for DSE. It took a crisis, but management realized that procrastinating isn't an option and has tasked Mitchell with leading various teams to modernize the company's infrastructure.

The Backstory

DSE’s IT environment is scattered and fragmented. In one instance, an IP address overlap combined with misconfigured application delivery controllers (ADCs)/load balancers caused an outage of an inventory tracking system. The problem took more than a day to troubleshoot and cost the company more than $100,000 in lost revenue.

In addition, the WAN deployment was delayed and eventually scaled back, which in turn delayed the migration of services to the cloud. The company has already addressed its lack of IP address automation with Red Hat Ansible Automation Platform and Infoblox, and now it's time to tackle the F5 ADCs/load balancers.

Mitchell ran into Ranbir Ahuja, Director of IT Operations at DSE, in the elevator one afternoon. Ahuja congratulated Mitchell on automating IP address administration but had some constructive feedback: the recent outage was not caused just by a duplicate IP address, it was compounded by misconfigured ADCs/load balancers.

To help address this problem, Mitchell consulted Gloria Fenderson, Sr. Manager of Network Engineering, and her team. After their success with Red Hat Ansible Automation Platform and Infoblox, the team decided that Ansible Automation Platform could help in this case as well.

Mitchell sets up a call with F5 and Red Hat to go over his options. Luckily, the Ansible F5 modules can automate tasks that used to take his network team hours to complete. With Ansible, he can automate the initial configuration of BIG-IP devices, including self IP addresses, DNS, VLANs, route domains, and NTP.
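As an illustration of that initial configuration, here is a minimal sketch of a playbook that creates a VLAN and a self IP and then sets DNS and NTP. It assumes the “f5networks.f5_modules” collection is installed and reuses the inventory variables (“private_ip”, “ansible_user”, “ansible_ssh_pass”) from the playbooks later in this post. The interface, VLAN tag, addresses, and server names are hypothetical placeholders, not DSE's actual values, and route domains are left out for brevity.

```yaml
---
# Hypothetical sketch: initial BIG-IP configuration.
# All names, tags, interfaces, and addresses below are placeholders.
- name: BIG-IP INITIAL CONFIGURATION
  hosts: f5
  connection: local
  gather_facts: false

  vars:
    # Connection details shared by every task (same inventory
    # variables as the other playbooks in this post).
    f5_provider:
      server: "{{ private_ip }}"
      user: "{{ ansible_user }}"
      password: "{{ ansible_ssh_pass }}"
      server_port: 8443
      validate_certs: no

  tasks:
    - name: CREATE INTERNAL VLAN
      f5networks.f5_modules.bigip_vlan:
        name: internal
        tag: 100
        untagged_interfaces:
          - "1.1"
        provider: "{{ f5_provider }}"

    - name: CREATE SELF IP ON INTERNAL VLAN
      f5networks.f5_modules.bigip_selfip:
        name: internal-self
        address: 10.1.20.245
        netmask: 255.255.255.0
        vlan: internal
        provider: "{{ f5_provider }}"

    - name: CONFIGURE DNS
      f5networks.f5_modules.bigip_device_dns:
        name_servers:
          - 10.1.1.53
        search:
          - example.com
        provider: "{{ f5_provider }}"

    - name: CONFIGURE NTP
      f5networks.f5_modules.bigip_device_ntp:
        ntp_servers:
          - pool.ntp.org
        timezone: UTC
        provider: "{{ f5_provider }}"
```

Defining the provider once under “vars” keeps the credentials in one place instead of repeating the block in every task, which the original playbooks do.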

Putting theory into practice

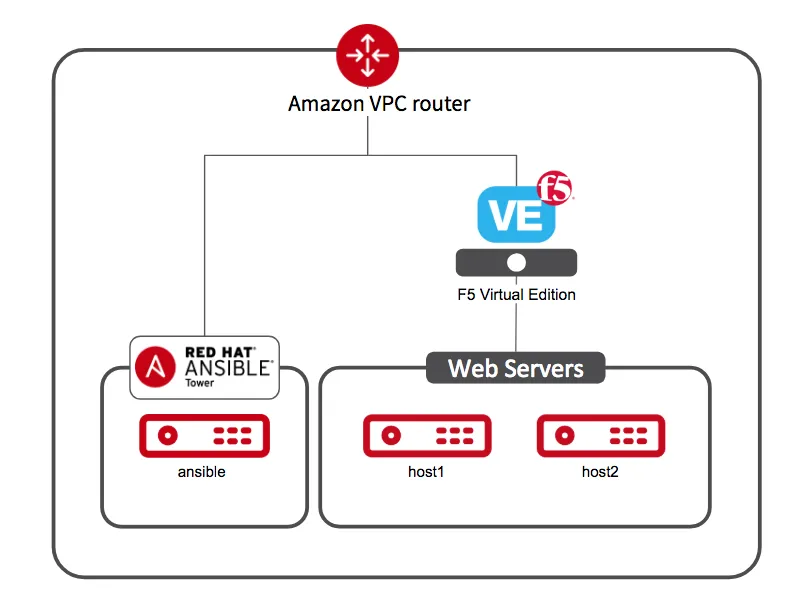

Mitchell decides to dive in and try it out in the environment shown in Figure 1.

He starts by reading through the available modules and decides to write a playbook that uses the “bigip_device_info” module (the successor to the deprecated “bigip_facts” module) to gather facts about the environment. When he’s done, it looks like this:

```yaml
---
- name: GRAB F5 FACTS
  hosts: f5
  connection: local
  gather_facts: no

  tasks:
    # Query the BIG-IP REST API for basic system information.
    - name: COLLECT BIG-IP FACTS
      bigip_device_info:
        gather_subset:
          - system-info
        provider:
          server: "{{private_ip}}"
          user: "{{ansible_user}}"
          password: "{{ansible_ssh_pass}}"
          server_port: 8443
          validate_certs: no
      register: device_facts

    # Full dump of everything that was collected.
    - name: DISPLAY COMPLETE BIG-IP SYSTEM INFORMATION
      debug:
        var: device_facts
      tags: debug

    # Only the base MAC address.
    - name: DISPLAY ONLY THE MAC ADDRESS
      debug:
        var: device_facts['system_info']['base_mac_address']

    # Only the software version.
    - name: DISPLAY ONLY THE VERSION
      debug:
        var: device_facts['system_info']['product_version']
```

He runs the playbook with “ansible-playbook bigip-facts.yml”, and the system information in the output looks like this:

```yaml
system_info:
  base_mac_address: 02:e5:47:3f:24:17
  chassis_serial: 950421ec-3dfc-0db5-4856ad84cd03
  hardware_information:
  - model: Intel(R) Xeon(R) CPU E5-2686 v4 @ 2.30GHz
    name: cpus
    type: base-board
    versions:
    - name: cache size
      version: 46080 KB
    - name: cores
      version: 2 (physical:2)
    - name: cpu MHz
      version: '2299.976'
    - name: cpu sockets
      version: '1'
    - name: cpu stepping
      version: '1'
  marketing_name: BIG-IP Virtual Edition
  package_edition: Point Release 4
  package_version: Build 0.0.5 - Tue Jun 16 14:26:18 PDT 2020
  platform: Z100
  product_build: 0.0.5
  product_build_date: Tue Jun 16 14:26:18 PDT 2020
  product_built: 200616142618
  product_changelist: 3337209
  product_code: BIG-IP
  product_jobid: 1206494
  product_version: 13.1.3.4
  time:
    day: 22
    hour: 14
    minute: 51
    month: 10
    second: 8
    year: 2020
  uptime: 225122
```
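The same module can report on other parts of the configuration by changing “gather_subset”. For example, the tasks below could be appended to the “tasks” list of the facts playbook above to check what nodes, VLANs, and self IPs already exist before anything is added. This is a sketch that assumes the “nodes”, “vlans”, and “self-ips” subset names offered by “bigip_device_info”; the register name “config_facts” is hypothetical.

```yaml
# Extra tasks for the "tasks:" list of the facts playbook (sketch).
- name: COLLECT NODE, VLAN AND SELF IP FACTS
  bigip_device_info:
    gather_subset:
      - nodes
      - vlans
      - self-ips
    provider:
      server: "{{private_ip}}"
      user: "{{ansible_user}}"
      password: "{{ansible_ssh_pass}}"
      server_port: 8443
      validate_certs: no
  register: config_facts

# Print everything returned for the requested subsets.
- name: DISPLAY COLLECTED CONFIGURATION FACTS
  debug:
    var: config_facts
```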

Mitchell moves on to the next module to add two Red Hat Enterprise Linux (RHEL) servers as nodes in his environment.

His new playbook leveraging the “bigip_node” module looks like this:

```yaml
---
- name: BIG-IP SETUP
  hosts: lb
  connection: local
  gather_facts: false

  tasks:
    # Register every host in the "web" inventory group as a BIG-IP node,
    # using its inventory name as the node name and its address as the host.
    - name: CREATE NODES
      bigip_node:
        provider:
          server: "{{private_ip}}"
          user: "{{ansible_user}}"
          password: "{{ansible_ssh_pass}}"
          server_port: 8443
          validate_certs: no
        host: "{{hostvars[item].ansible_host}}"
        name: "{{hostvars[item].inventory_hostname}}"
      loop: "{{ groups['web'] }}"
```

He runs the playbook and gets this output:

```
[student1@ansible]$ ansible-playbook bigip-node.yml

PLAY [BIG-IP SETUP] ************************************************************

TASK [CREATE NODES] ************************************************************
changed: [f5] => (item=node1)
changed: [f5] => (item=node2)

PLAY RECAP *********************************************************************
f5                         : ok=1    changed=1    unreachable=0    failed=0
```

Curious, he runs it again and gets this output instead:

```
PLAY [BIG-IP SETUP] ************************************************************

TASK [CREATE NODES] ************************************************************
ok: [f5] => (item=node1)
ok: [f5] => (item=node2)

PLAY RECAP *********************************************************************
f5                         : ok=1    changed=0    unreachable=0    failed=0    skipped=0    rescued=0    ignored=0
```

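Because the two nodes already existed, the playbook did not create two more. Instead, it checked that the nodes were still there and matched the playbook's definition, so each item reports “ok” and the recap shows “changed=0”. This behavior, called idempotency, means the same playbook can be run repeatedly and always converges on the same end state.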
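The desired state can also be stated explicitly with the module's “state” parameter, which defaults to “present” for “bigip_node”. The sketch below reuses the loop from the playbook above but declares the same nodes “absent”; run twice, it should report “changed” only on the first run. The play and task names are hypothetical.

```yaml
---
# Sketch: same loop as bigip-node.yml, but declaring the nodes absent.
- name: BIG-IP TEARDOWN
  hosts: lb
  connection: local
  gather_facts: false

  tasks:
    - name: REMOVE NODES
      bigip_node:
        provider:
          server: "{{private_ip}}"
          user: "{{ansible_user}}"
          password: "{{ansible_ssh_pass}}"
          server_port: 8443
          validate_certs: no
        name: "{{hostvars[item].inventory_hostname}}"
        # Deletes the node if it exists; does nothing if it is already gone.
        state: absent
      loop: "{{ groups['web'] }}"
```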

One thing he liked about Ansible was that it didn't require any setup on the F5 device beforehand. Because Ansible is agentless, he could just dive in and start working: the modules run on the Ansible control node (hence “connection: local” in his playbooks) and talk to the iControl REST API that comes built into BIG-IP.
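Since nothing is installed on BIG-IP, the only place the device has to be described is the Ansible inventory. The playbooks above expect an “f5” host in an “lb” group, a “web” group containing “node1” and “node2”, and the variables “private_ip”, “ansible_user”, and “ansible_ssh_pass”. A hypothetical YAML inventory that satisfies those names might look like this; every address and credential is a placeholder.

```yaml
# Hypothetical inventory (for example, inventory.yml); values are placeholders.
all:
  children:
    lb:
      hosts:
        f5:
          ansible_host: 203.0.113.10
          # Management address used as "server" in the provider block.
          private_ip: 192.0.2.10
          ansible_user: admin
          # Prefer ansible-vault over a plain-text password.
          ansible_ssh_pass: change-me
    web:
      hosts:
        node1:
          ansible_host: 192.0.2.21
        node2:
          ansible_host: 192.0.2.22
```

With a file like this, the node playbook would be run as “ansible-playbook -i inventory.yml bigip-node.yml”.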

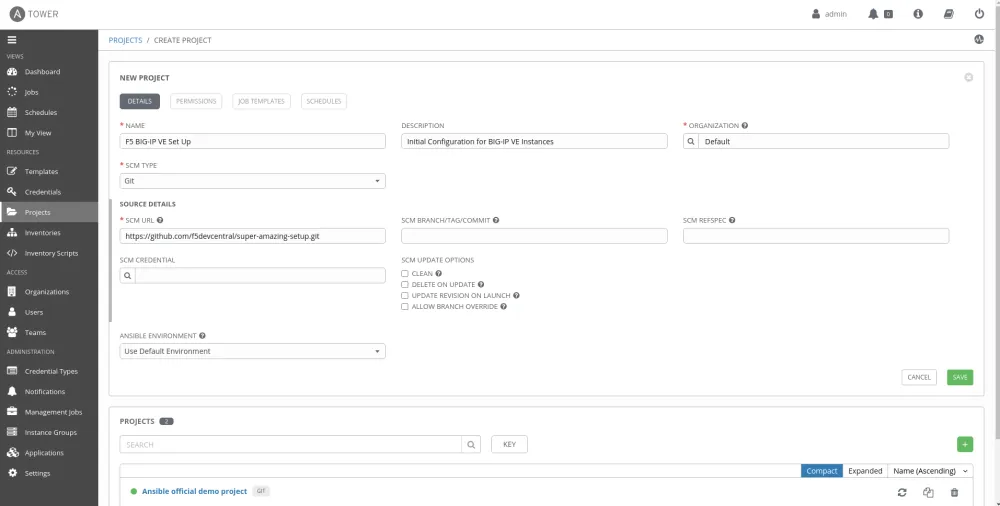

Feeling more comfortable with his F5 playbooks, he decides to test them in Ansible Tower. Tower gives him a GUI for organizing his playbooks and makes Ansible more accessible to his engineers and administrators. Because Tower integrates with source code management (SCM) systems such as GitHub, the playbooks that manage his environment can be kept under version control.

By creating projects, which are collections of playbooks managed in GitHub or another SCM, Mitchell can run playbooks that set up and configure an instance of F5 BIG-IP Virtual Edition (VE).
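One way to make such a project self-contained is to list the F5 collection in a “collections/requirements.yml” file in the repository; recent Ansible Tower releases can install the collections listed there when the project syncs, provided collection downloads are enabled. A minimal sketch, assuming the playbooks rely on the “f5networks.f5_modules” collection:

```yaml
---
# collections/requirements.yml in the project repository (sketch).
collections:
  - name: f5networks.f5_modules
```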

After a few trial runs, Mitchell is more confident that Ansible can help improve compliance, security, and efficiency across his environment, not just for F5 networking but for other infrastructure as well. He begins the same process with other teams at DSE and finds that even as he scales his automation, it keeps getting easier, thanks to Ansible Tower features such as role-based access control (RBAC) that help him organize and segment his different teams.

Red Hat and DSE are now on the path to rolling out Ansible to the entire company, but the journey has only just begun. The next step is middleware. Mitchell puts down his cup of coffee and picks up a pen.

About the author

Cameron Skidmore is a Global ISV Partner Solution Architect. He has experience in enterprise networking, communications, and infrastructure design. He started his career in Cisco networking, later moving into cloud infrastructure technology. He now works as the Ecosystem Solution Architect for the Infrastructure and Automation team at Red Hat, which includes the F5 Alliance. He likes yoga, tacos and spending time with his niece.
