In a previous article, we walked you through building a user-friendly chatbot using Azure OpenAI's GPT models and Microsoft Azure Red Hat OpenShift. With that chatbot deployed, you're now ready for GitOps.

This time, we show you how to bring GitOps into your workflow. GitOps uses Git as the source of truth for your application's configuration and deployment, which simplifies updates, fosters collaboration, and helps operations run smoothly. It brings more structure, security, and ease of management to your chatbot project:

Streamlined updates and rollbacks: With GitOps, every change to your chatbot's configuration and deployment process is tracked in a Git repository. You can update the chatbot or roll it back to a previous version with the simplicity of a Git commit. It's like having a time machine for your project, making updates and fixes faster and safer.

Enhanced collaboration: GitOps uses tools and practices that developers are already familiar with, such as pull requests. This encourages team members to review and discuss changes before they're applied, contributing to higher quality code and more innovative solutions.

Consistent environments: By defining your infrastructure and deployment processes as code stored in Git, you can have consistency across every environment, from development to production. This eliminates the "it works on my machine" problem, making deployments predictable and reducing surprises.

Improved security and compliance: With everything tracked in Git, you have a complete audit trail of changes, updates, and deployments. This not only enhances security because you can quickly identify and revert unauthorized changes, it also simplifies steps to compliance with regulatory requirements.

Automated workflows: GitOps automates the deployment process. Once a change is merged into the main branch, it's automatically deployed to your environment in Azure Red Hat OpenShift. This reduces the risk of human error and frees up your developers to focus on improving the chatbot instead of managing deployments.

Scalability and reliability: GitOps practices, combined with the power of Azure OpenAI and Azure Red Hat OpenShift, make it easy to scale your chatbot to handle more users. The infrastructure can automatically adjust to demand, so your chatbot remains responsive and reliable even during peak times.

By integrating GitOps into your AI chatbot project, you're not just building a more capable chatbot. You're creating a more efficient, collaborative, and secure development life cycle. This approach means that your chatbot can evolve rapidly to meet users' needs while maintaining high standards of quality and reliability.
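To make the rollback idea above concrete, here is a minimal sketch of reverting a configuration change with Git. It assumes a remote named origin and a deployment branch named main. The rollback_commit helper is a hypothetical name used for illustration and is not part of the chatbot repository.

```shell
#!/usr/bin/env bash
# Sketch: roll back one configuration change by reverting its commit.
# Assumptions: the remote is "origin" and the deployed branch is "main".

rollback_commit() {
  local sha="$1" branch="${2:-main}"
  # Create a new commit that undoes the given commit, keeping full history.
  git revert --no-edit "$sha" &&
  # Publish the revert so the GitOps controller can pick it up from Git.
  git push origin "$branch"
}
```

For example, running `rollback_commit HEAD` inside the configuration repository undoes the most recent commit. Because the revert is itself a commit, the audit trail stays intact.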

Prerequisites

To integrate your AI chatbot into the Azure Red Hat OpenShift environment using GitOps, make sure that all prerequisites are in place and follow the deployment steps in order.

Before diving into the deployment process, your setup should meet the following conditions:

- Azure Subscription: An active subscription to access Azure resources and services

- Azure OpenAI Service: Access to Azure OpenAI services, including the necessary API keys for integration with the ChatBot

- Azure CLI: Installed and configured to communicate with your Azure resources

- Azure Red Hat OpenShift: Cluster deployed
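One way to sanity-check these prerequisites from a terminal is sketched below. It assumes that az, kubectl, and git are the tools you plan to use. The helper names require_tools and check_azure are hypothetical and only serve this illustration.

```shell
#!/usr/bin/env bash
# Sketch: confirm the command-line tools and the Azure session before deploying.

# Return 0 if every named CLI is on PATH; report any that are missing.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Show the active subscription, then list Azure Red Hat OpenShift clusters in it.
check_azure() {
  az account show --query name -o tsv &&
  az aro list -o table
}
```

A typical call would be `require_tools az kubectl git && check_azure`. An empty cluster list suggests the Azure Red Hat OpenShift cluster is in a different subscription or has not been created yet.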

Bootstrap Red Hat OpenShift GitOps / ArgoCD

GitOps relies on a declarative approach to infrastructure and application management, using Git as the single source of truth. To initialize OpenShift GitOps (powered by ArgoCD) in your environment, follow these steps:

Clone the Git repository containing the necessary GitOps configuration files for the ChatBot demo:

Apply the bootstrap configuration to your cluster. This initializes the OpenShift GitOps operator and sets up ArgoCD, preparing your cluster for the ChatBot deployment:

$ until kubectl apply -k bootstrap/base/; do sleep 2; done
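The retry loop is useful because resources that depend on the operator's custom resource definitions can fail to apply until the operator has finished installing. After the loop exits, you may also want to wait for the Argo CD pods themselves. The sketch below assumes the operator uses its default namespace, openshift-gitops. The wait_for_gitops helper and the five-minute timeout are illustrative choices, not part of the demo repository.

```shell
#!/usr/bin/env bash
# Sketch: wait until the Argo CD pods created by the bootstrap step are ready.
# Assumption: the operator deploys into its default namespace, openshift-gitops.

GITOPS_NS="${GITOPS_NS:-openshift-gitops}"

wait_for_gitops() {
  # The operator creates the namespace asynchronously, so poll for it first.
  until kubectl get namespace "$GITOPS_NS" >/dev/null 2>&1; do
    sleep 5
  done
  # Block until every pod in the namespace reports Ready, or fail after 5 minutes.
  # Note: kubectl wait returns an error if no pods exist yet; rerun it in that case.
  kubectl wait pod --all --for=condition=Ready -n "$GITOPS_NS" --timeout=300s
}
```

Call `wait_for_gitops` once the bootstrap command has succeeded, and override GITOPS_NS if your cluster uses a different namespace.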

Deploy the AI chatbot in Azure Red Hat OpenShift with GitOps

With the prerequisites out of the way, you're now ready to deploy the chatbot application. The GitOps methodology automates the application deployment by referencing configurations stored in a Git repository. This approach leads to greater consistency, reproducibility, and traceability for your deployments.
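To illustrate what "referencing configurations stored in a Git repository" can look like, the sketch below prints an Argo CD Application manifest with automated sync enabled. This is not necessarily what the demo repository contains: the application name, target namespace, manifest path, and the render_chatbot_app helper are all hypothetical, and the repository URL is supplied by you.

```shell
#!/usr/bin/env bash
# Sketch: render an Argo CD Application that syncs a Git path automatically.
# Hypothetical values: application name, target namespace, and manifest path.

render_chatbot_app() {
  local repo_url="$1" revision="${2:-main}"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: chatbot                 # hypothetical application name
  namespace: openshift-gitops   # default OpenShift GitOps namespace
spec:
  project: default
  source:
    repoURL: ${repo_url}
    targetRevision: ${revision}
    path: manifests             # hypothetical path inside the repository
  destination:
    server: https://kubernetes.default.svc
    namespace: chatbot          # hypothetical target project
  syncPolicy:
    automated:
      prune: true               # remove resources that were deleted from Git
      selfHeal: true            # undo manual changes made on the cluster
EOF
}
```

You could review the output of `render_chatbot_app <your-repo-url>` and then pipe it into `kubectl apply -f -`. With automated sync, a merge to the tracked branch is enough to trigger a deployment.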

- Deploy the chatbot application: Use the following command to apply the Kubernetes manifests from the gitops/ directory. These manifests describe the necessary resources and configurations for running the chatbot app on your Azure Red Hat OpenShift cluster through OpenShift GitOps and ArgoCD.

$ kubectl apply -k gitops/

This command triggers the OpenShift GitOps tooling to process the manifests, setting up the underlying infrastructure and the chatbot application itself.

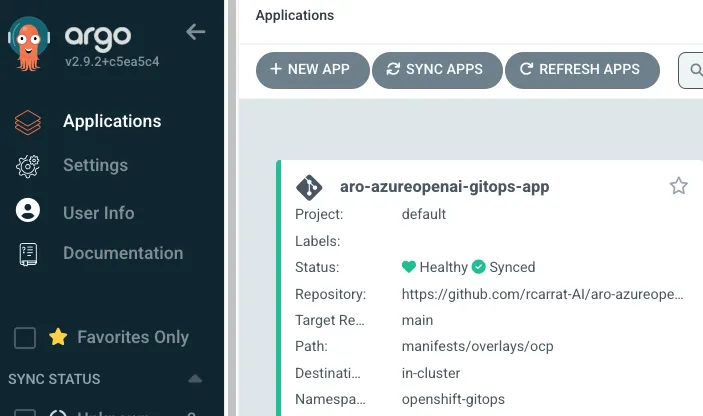

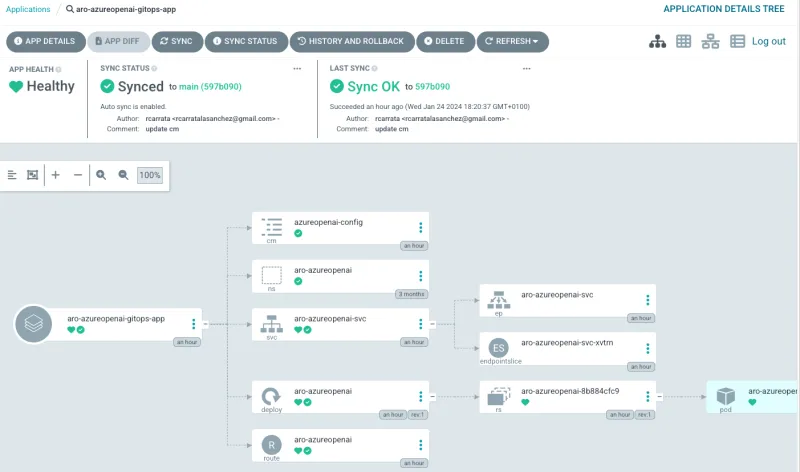

- Verify deployment: After applying the command, monitor the deployment process within the ArgoCD dashboard. There, you can watch resources being created and the application status transition to Healthy once everything is successfully deployed.

- Access the chatbot application: Once deployed, the chatbot is accessible through a URL exposed by an Azure Red Hat OpenShift route. You can find this URL in the OpenShift console under the Routes section of your project.
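The verification and access steps above can also be done from the command line. The sketch below assumes the Argo CD Application resources live in the default openshift-gitops namespace and that you know the project where the chatbot was deployed. The helper names app_status and route_hosts are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check Argo CD application status and look up route hostnames.
# Assumption: Applications are stored in the default openshift-gitops namespace.

GITOPS_NS="${GITOPS_NS:-openshift-gitops}"

# Print the sync and health status of each Argo CD Application.
app_status() {
  kubectl get applications.argoproj.io -n "$GITOPS_NS" \
    -o custom-columns=NAME:.metadata.name,SYNC:.status.sync.status,HEALTH:.status.health.status
}

# Print the hostname of every route in the given project, one per line.
route_hosts() {
  local project="$1"
  kubectl get routes.route.openshift.io -n "$project" \
    -o jsonpath='{range .items[*]}{.spec.host}{"\n"}{end}'
}
```

Run `app_status` until the chatbot application reports Synced and Healthy, then run `route_hosts <your-project>` to find the hostname to open in a browser.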

Conclusion

By following these steps, you’ve successfully deployed an AI chatbot application in Azure Red Hat OpenShift using a GitOps workflow. This approach not only simplifies the deployment process, but also enhances the manageability and scalability of your application, enabling your chatbot to evolve and grow within the dynamic Azure and OpenShift ecosystem.

About the authors

Roberto is a Principal AI Architect working in the AI Business Unit specializing in Container Orchestration Platforms (OpenShift & Kubernetes), AI/ML, DevSecOps, and CI/CD. With over 10 years of experience in system administration, cloud infrastructure, and AI/ML, he holds two MSc degrees in Telco Engineering and AI/ML.

Courtney started at Red Hat in 2021 on the OpenShift team. With degrees in Marketing and Economics and certificates through AWS and Microsoft, she is passionate about cloud computing and product marketing.
