As customers continue to expect customized, real-time experiences, the applications that power those experiences require lower latency and near-real-time processing. Edge computing moves application infrastructure from centralized data centers out to the network edge, as close to the consumer as possible. This use case stretches beyond telecommunications to include healthcare, energy, retail, remote offices and more.

Both the applications and their underlying infrastructures need to adapt to this new edge model. One of Red Hat’s answers to that edge challenge was the introduction of an architecture called Distributed Compute Nodes (DCN) back in Red Hat OpenStack Platform 13. This architecture allows operators to deploy the computing resources (compute nodes) that host the workloads close to the consumers’ devices (at edge sites, for example), while centralizing the control plane in a more traditional datacenter such as a national or regional site.

While this model has proven efficient, cost-effective and operationally effective, it was missing a key component: there was no support for persistent storage or image management at the edge sites. Workloads had to rely on local ephemeral disks, which can be fine for some stateless applications but may not be the best option for applications that need reliable persistent storage at the edge.

On top of pure application requirements, storage at the edge brings many new benefits, such as fast boot, copy-on-write, storage encryption, snapshots, virtual machine (VM) migration, volume migration, volume backups and more: features that were once reserved for large, distant core datacenters.

Red Hat OpenStack Platform 16.1 introduced support for persistent storage and image management at the edge. Let’s see what makes that possible.

High level architecture

Bringing persistent storage to edge sites requires key design considerations.

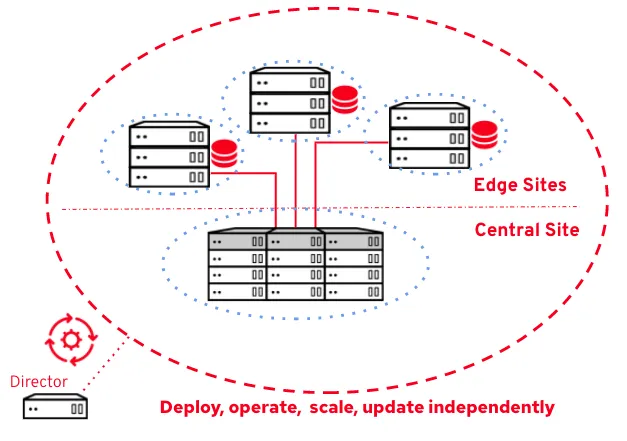

First and foremost is how you design the actual storage backend architecture. With Red Hat OpenStack Platform, we took the approach of dedicated backends: each storage backend serves only the compute nodes local to its site.

This helps ensure proper performance and scale by avoiding stretch configurations and keeping latency-sensitive data off high-latency links. It also provides isolation, in terms of both security and operations: a storage failure at one edge site does not impact the other sites. Each compute node consumes only its local site's storage backend.
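The placement rule above can be pictured as a lookup that only ever returns the backend of the compute node's own site, with no fallback to another site. This is a minimal sketch for illustration: the site names, host names and the `SITE_BACKENDS`/`HOST_SITES` mappings are hypothetical, and director configures the real placement through its deployment files rather than code like this.

```python
# Hypothetical mapping of each site to its dedicated storage backend.
SITE_BACKENDS = {
    "central": "ceph-central",
    "site1": "ceph-site1",
    "site2": "ceph-site2",
}

# Hypothetical mapping of compute hosts to the site they are deployed in.
HOST_SITES = {
    "central-compute-0": "central",
    "site1-hci-0": "site1",
    "site2-hci-0": "site2",
}


def backend_for_host(host: str) -> str:
    """Return the only backend a compute host uses: its own site's backend."""
    site = HOST_SITES[host]
    # No fallback to another site's backend: there is no stretch configuration.
    return SITE_BACKENDS[site]


def affected_hosts(failed_backend: str) -> list[str]:
    """List the hosts impacted if one site's backend fails (only that site's hosts)."""
    return sorted(
        host for host, site in HOST_SITES.items()
        if SITE_BACKENDS[site] == failed_backend
    )


if __name__ == "__main__":
    print(backend_for_host("site1-hci-0"))  # ceph-site1
    print(affected_hosts("ceph-site2"))     # ['site2-hci-0']
```

The isolation argument shows up in `affected_hosts`: a failed backend maps back to the hosts of a single site and nothing else.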

Another key design consideration is the image management system. While Cinder persistent storage covers user data, images are also required close to the compute nodes in order to boot workloads quickly and efficiently. Red Hat chose to leverage a recent Glance innovation called “multistore”, which allows Glance to manage multiple storage backends. Combined with the dedicated-backend-per-site approach, this allows images to be hosted at each site.

Last but not least, how do you deploy the architecture? Which services are deployed at the edge and, most importantly, how do you manage Day 2 operations such as configuration changes, scaling and updates? The Red Hat OpenStack Platform deployment tool (director) is now edge-aware: it can deploy multiple sites and manage them independently.

DCN storage with Red Hat Ceph Storage high level architecture

Now that we have highlighted the key design considerations for storage at the edge, the next question is, “What storage backend should I use?”

Red Hat Ceph Storage, a feature-rich OpenStack backend, is a strong candidate to consider. Its software-defined, highly distributed and self-healing qualities make Ceph Storage a solid solution for edge storage. Its x86 support and hyper-convergence capabilities fit edge use cases well. Converging compute and storage services on the same nodes can help standardize hardware and reduce costs, which matters at edge sites that are often constrained by space, cooling and power.

Red Hat OpenStack Platform 16.1 fully supports DCN with Red Hat Ceph Storage at the edge.

So how does it work? Let’s have a look at a high level architecture.

The key here is that we use a dedicated Ceph cluster per site as well as at the central site; there is no stretch configuration whatsoever.

In terms of deployment, we focused on the hyper-converged model in order to reduce footprint and standardize the nodes’ hardware specifications. The minimum number of nodes per site is three, which is the minimum required by Ceph Storage. You can add more hyper-converged nodes or add compute-only nodes if the current storage capacity allows, keeping in mind the right balance between the compute and storage resources for optimal performance.
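The sizing rule can be expressed as a small layout check. This is a sketch under one stated assumption: since only hyper-converged nodes run Ceph in this model, compute-only nodes are assumed not to count toward the three-node Ceph minimum. `SiteLayout` and `validate_site` are hypothetical names, not part of director.

```python
from dataclasses import dataclass

# Ceph Storage requires at least three nodes, and each site runs its own Ceph cluster.
MIN_CEPH_NODES_PER_SITE = 3


@dataclass
class SiteLayout:
    name: str
    hci_nodes: int               # hyper-converged: Nova compute + Ceph OSDs on one server
    compute_only_nodes: int = 0  # consume the local Ceph cluster, contribute no storage


def validate_site(site: SiteLayout) -> list[str]:
    """Return layout problems for one site; an empty list means the layout is acceptable."""
    problems = []
    # Assumption: only hyper-converged nodes host Ceph, so compute-only nodes
    # do not count toward the Ceph minimum.
    if site.hci_nodes < MIN_CEPH_NODES_PER_SITE:
        problems.append(
            f"{site.name}: {site.hci_nodes} hyper-converged node(s); "
            f"Ceph needs at least {MIN_CEPH_NODES_PER_SITE}"
        )
    return problems


if __name__ == "__main__":
    print(validate_site(SiteLayout("site1", hci_nodes=3, compute_only_nodes=2)))  # []
    print(validate_site(SiteLayout("site2", hci_nodes=2, compute_only_nodes=5)))
```

The check deliberately says nothing about the compute-to-storage balance, which depends on the workload and has no single threshold.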

In terms of workload scheduling, we kept the Availability Zone (AZ) approach in which each site is configured as a dedicated AZ. To schedule a workload on a specific site, the user selects the corresponding AZ at boot time.

As we added persistent storage with Cinder, each site also gained a dedicated storage AZ. The user specifies the AZ at volume creation time to select the site on which a volume should be created (and therefore the Ceph cluster that stores it). The Nova and Cinder AZ names match, and we disabled cross-AZ attach to prevent a VM on site A from mounting a volume on site B.
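Because the Nova and Cinder AZ names are identical, the attach rule reduces to a string comparison. The sketch below models that behavior in plain Python; in a real deployment the switch is Nova's `[cinder] cross_az_attach` option, while `check_attach` and `CrossAZAttachError` here are hypothetical names used only for illustration.

```python
class CrossAZAttachError(Exception):
    """Raised when an instance and a volume belong to different availability zones."""


def check_attach(instance_az: str, volume_az: str, cross_az_attach: bool = False) -> None:
    """Allow an attach only when the instance and volume AZs (that is, sites) match.

    Nova and Cinder AZ names are identical, so a plain string comparison is enough.
    """
    if not cross_az_attach and instance_az != volume_az:
        raise CrossAZAttachError(
            f"instance in AZ {instance_az!r} cannot attach a volume from AZ {volume_az!r}"
        )


if __name__ == "__main__":
    check_attach("site1", "site1")  # same site: allowed
    try:
        check_attach("site1", "site2")  # VM on site1, volume on site2
    except CrossAZAttachError as exc:
        print(f"refused: {exc}")
```

Matching names is what keeps the rule this simple: if the two services used different AZ names, the check would need a translation table between them.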

From a purely operational perspective, Red Hat Ceph Storage can be deployed by the same tool as Red Hat OpenStack Platform, which improves the Day 1 and Day 2 operator experience. Operational aspects are managed by a single tool, so you don’t need to manage dozens of Ceph clusters independently.

Bringing image management to the edge with Glance multistore

As mentioned earlier, image management is a key aspect of any edge deployment. In a distributed edge deployment, the solution needs to support distributed image management across sites while keeping the user experience as simple as possible.

A user usually treats Glance images individually and should not have to deal with multiple image entries just because the same image is present at several sites. The DCN architecture leverages Glance multistore (i.e., multiple backends) so that users interact with a single image entry regardless of where its copies are located. Each Glance store maps to a Ceph Storage backend, and since each site has its own backend, there is one Glance store per site (including the central site).

To keep track of the image copies, Glance adds location metadata (the list of location URLs and their respective store names). This metadata is stored in the control plane database.

The metadata keeps track of all image locations; in this example, the image is available in central, site1 and site2. Workloads on these three sites can boot from this image at any moment and leverage features such as copy-on-write. Should the user want to boot a workload on site3, they can copy the image to that site (more on this later).
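The single-entry, many-locations model can be sketched as one image record that carries a list of (URL, store) pairs. This is a simplified illustration, not Glance's internal schema: `Image`, `ImageLocation`, `can_boot_on` and `record_copy` are hypothetical names, and the URLs use placeholder cluster IDs.

```python
from dataclasses import dataclass, field


@dataclass
class ImageLocation:
    url: str
    store: str  # Glance store name, for example "central" or "site1"


@dataclass
class Image:
    image_id: str  # one image entry, shared by every copy
    locations: list[ImageLocation] = field(default_factory=list)

    def stores(self) -> list[str]:
        """Store names holding a copy, in location order."""
        return [loc.store for loc in self.locations]

    def can_boot_on(self, site: str) -> bool:
        """A site boots (with copy-on-write) only from a copy in its own store."""
        return site in self.stores()

    def record_copy(self, url: str, store: str) -> None:
        """Add a location once the image has been copied to another store."""
        if store not in self.stores():
            self.locations.append(ImageLocation(url, store))


if __name__ == "__main__":
    image = Image("35cb2a43-eb89-4bed-99a2-c4376133a492", [
        ImageLocation("rbd://CENTRAL_CLUSTER_ID/images/IMAGE_ID/snap", "central"),
        ImageLocation("rbd://SITE1_CLUSTER_ID/images/IMAGE_ID/snap", "site1"),
        ImageLocation("rbd://SITE2_CLUSTER_ID/images/IMAGE_ID/snap", "site2"),
    ])
    print(image.can_boot_on("site3"))  # False: copy the image to site3 first
    image.record_copy("rbd://SITE3_CLUSTER_ID/images/IMAGE_ID/snap", "site3")
    print(image.can_boot_on("site3"))  # True
```

The image ID never changes when a copy is added; only the list of locations grows, which is why users keep seeing a single entry.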

How is that reflected in the CLI?

As an OSP administrator you can list the available stores.

```
$ glance stores-info
+----------+----------------------------------------------------------------------------------+
| Property | Value                                                                            |
+----------+----------------------------------------------------------------------------------+
| stores   | [{"id": "site1", "description": "site1 RBD backend"}, {"default": "true", "id":  |
|          | "central", "description": "central RBD backend"}, {"id": "site2", "description": |
|          | "site2 RBD backend"}, {"id": "site3", "description":                             |
|          | "site3 RBD backend"}]                                                            |
+----------+----------------------------------------------------------------------------------+
```

The command returns four stores (central, site1, site2 and site3), with central as the default: if no store is specified, Glance uses the central store.
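When scripting against this output, the stores list is plain JSON once the table wrapping is removed. The sketch below assumes you already have that JSON text (reassembled here from the output above by hand); `parse_stores` is a hypothetical helper. Note that in this output the default flag is the string `"true"` rather than a JSON boolean, so the check normalizes it.

```python
import json
from typing import List, Optional, Tuple

# The "stores" value from the `glance stores-info` output above, joined back into one string.
STORES_JSON = (
    '[{"id": "site1", "description": "site1 RBD backend"}, '
    '{"default": "true", "id": "central", "description": "central RBD backend"}, '
    '{"id": "site2", "description": "site2 RBD backend"}, '
    '{"id": "site3", "description": "site3 RBD backend"}]'
)


def parse_stores(raw: str) -> Tuple[List[str], Optional[str]]:
    """Return (store IDs, default store ID or None) from a stores list."""
    stores = json.loads(raw)
    ids = [store["id"] for store in stores]
    # The default flag appears as the string "true" here; str() also accepts a JSON boolean.
    default = next(
        (store["id"] for store in stores if str(store.get("default", "")).lower() == "true"),
        None,
    )
    return ids, default


if __name__ == "__main__":
    ids, default = parse_stores(STORES_JSON)
    print(ids)      # ['site1', 'central', 'site2', 'site3']
    print(default)  # central
```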

Running openstack image show returns all the image locations, along with the store that holds each copy:

```
$ openstack image show my_image
(...)
locations='[{u'url': u'rbd://dbb41ef0-15d2-11ea-a69a-244201a99610/images/35cb2a43-eb89-4bed-99a2-c4376133a492/snap', u'metadata': {u'store': u'central'}},
            {u'url': u'rbd://fa9b85d0-15da-11ea-a69a-244201a99610/images/35cb2a43-eb89-4bed-99a2-c4376133a492/snap', u'metadata': {u'store': u'site1'}},
            {u'url': u'rbd://fnhk9d7h-39b5-11ea-a69a-244201a99610/images/35cb2a43-eb89-4bed-99a2-c4376133a492/snap', u'metadata': {u'store': u'site2'}}]'
(...)
stores='central,site1,site2'
```

In this example, we can see that the image my_image is present in central, site1 and site2.

The locations field lists each copy's Ceph RBD URI:

rbd://CEPH_CLUSTER_ID/images/IMAGE_ID/

CEPH_CLUSTER_ID differs for each copy because every site has its own dedicated Ceph cluster; “images” is the default Glance Ceph pool; and IMAGE_ID is the Glance image ID, which is the same for all copies of the image.

Each location also records the store that holds that copy:

'metadata': {u'store': u'STORE_NAME'}

The stores field is a condensed, comma-separated list of every store on which the image is present.
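The anatomy of a location can be checked with a few lines of parsing. This sketch assumes URLs shaped like the ones in the output above, which end with a fourth `/snap` component; `parse_rbd_url` and `stores_field` are hypothetical helpers, and Glance computes the stores field itself, so the second function only illustrates how it relates to the location metadata.

```python
from typing import NamedTuple


class RbdLocation(NamedTuple):
    cluster_id: str  # CEPH_CLUSTER_ID: differs per site, one Ceph cluster each
    pool: str        # "images", the default Glance Ceph pool
    image_id: str    # Glance image ID, identical for every copy
    snapshot: str    # trailing component; "snap" in the output above


def parse_rbd_url(url: str) -> RbdLocation:
    """Split rbd://CLUSTER/POOL/IMAGE/SNAPSHOT into its parts."""
    prefix = "rbd://"
    if not url.startswith(prefix):
        raise ValueError(f"not an RBD URL: {url!r}")
    parts = url[len(prefix):].split("/")
    if len(parts) != 4:
        raise ValueError(f"expected 4 components after rbd://, got {len(parts)}")
    return RbdLocation(*parts)


def stores_field(locations: list[dict]) -> str:
    """Condense the location metadata into the comma-separated stores field."""
    return ",".join(loc["metadata"]["store"] for loc in locations)


if __name__ == "__main__":
    image_id = "35cb2a43-eb89-4bed-99a2-c4376133a492"
    locations = [
        {"url": f"rbd://dbb41ef0-15d2-11ea-a69a-244201a99610/images/{image_id}/snap",
         "metadata": {"store": "central"}},
        {"url": f"rbd://fa9b85d0-15da-11ea-a69a-244201a99610/images/{image_id}/snap",
         "metadata": {"store": "site1"}},
    ]
    parsed = [parse_rbd_url(loc["url"]) for loc in locations]
    # Same image ID everywhere, a different Ceph cluster per copy.
    assert len({p.image_id for p in parsed}) == 1
    assert len({p.cluster_id for p in parsed}) == 2
    print(stores_field(locations))  # central,site1
```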

The Glance CLI was also extended to support this new approach. The following capabilities help users manage distributed images:

- Import an image to multiple sites at upload time:

  ```
  glance image-create-via-import --disk-format qcow2 --container-format bare --name image_name --uri http://server/image.qcow2 --import-method web-download --stores <list,of,stores>
  ```

- Copy an existing image to another site:

  ```
  glance image-import <existing-image-id> --stores <list of comma separated stores> --import-method copy-image
  ```

- Delete an image copy from a specific site:

  ```
  glance stores-delete --store <store-id> <image-id>
  ```
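When these operations are scripted, building the argument list programmatically avoids shell-quoting mistakes with the comma-separated store list. This is a sketch that assumes the `glance` client is installed and authenticated; `copy_image_cmd` is a hypothetical helper, and it uses only the flags shown in the copy command above.

```python
import shlex
from typing import List, Sequence


def copy_image_cmd(image_id: str, stores: Sequence[str]) -> List[str]:
    """Build the argv that copies an existing image to additional stores."""
    if not stores:
        raise ValueError("at least one target store is required")
    return [
        "glance", "image-import", image_id,
        "--stores", ",".join(stores),
        "--import-method", "copy-image",
    ]


if __name__ == "__main__":
    cmd = copy_image_cmd("35cb2a43-eb89-4bed-99a2-c4376133a492", ["site3"])
    print(shlex.join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```

Passing a list to `subprocess.run` rather than a shell string means store names never go through shell parsing.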

In terms of architecture, a dedicated set of Glance API services is deployed at each site and has access to different storage locations in the following ways:

- The central Glance API service can write to its local Ceph cluster as its default store and can also write to every DCN Ceph cluster as an additional image store.
- Each DCN Glance API service (three per site) can write to its local Ceph cluster as its default store and can also optionally write to the central Ceph cluster as an additional image store.
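The write access described in this list can be summarized as a function of the site a Glance API runs at. This is a sketch with one labeled assumption: an edge Glance API is modeled as never writing to another edge site's store, since the list only mentions its local store and the central store. `writable_stores` is a hypothetical name.

```python
from typing import List

CENTRAL = "central"


def writable_stores(glance_site: str, all_sites: List[str],
                    edge_writes_central: bool = True) -> List[str]:
    """Stores the Glance API at `glance_site` can write to, default store first."""
    if glance_site == CENTRAL:
        # Central Glance: its local store as default, plus every DCN store.
        return [CENTRAL] + [site for site in all_sites if site != CENTRAL]
    # DCN Glance: its local store as default, optionally the central store.
    # Assumption: it never writes to another edge site's store.
    stores = [glance_site]
    if edge_writes_central:
        stores.append(CENTRAL)
    return stores


if __name__ == "__main__":
    sites = ["central", "site1", "site2", "site3"]
    print(writable_stores("central", sites))  # ['central', 'site1', 'site2', 'site3']
    print(writable_stores("site2", sites))    # ['site2', 'central']
```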

The edge nodes rely on their local Glance services when requesting images, keeping requests within the site.

The central site acts as a hub: it copies images across sites as needed. Users interact with the central site API, not directly with the edge API services.

The only time image traffic crosses the WAN is when an image is copied between the central site and an edge site. That copy is subject to WAN latency, but it is an asynchronous process that does not affect the actual workloads: workloads are booted once the image has been copied and rely only on the local copy.

Edge VM snapshots are supported and can be transferred back to the central site; from there, they can be copied to other edge sites and booted from.
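The hub-and-spoke flow can be modeled as a list of WAN hops between sites. This sketch only computes the route; it does not copy anything, because the actual transfer is performed by Glance. `copy_hops` is a hypothetical name.

```python
from typing import List, Tuple

HUB = "central"


def copy_hops(src: str, dst: str, hub: str = HUB) -> List[Tuple[str, str]]:
    """WAN copy hops needed to make an image stored at `src` available at `dst`."""
    if src == dst:
        return []  # already local: no WAN traffic at all
    if hub in (src, dst):
        return [(src, dst)]  # central <-> edge: a single asynchronous copy
    # Edge to edge: back to the central site first, then out to the target site.
    return [(src, hub), (hub, dst)]


if __name__ == "__main__":
    # A snapshot taken on site1 that should also boot on site3.
    print(copy_hops("site1", "site3"))  # [('site1', 'central'), ('central', 'site3')]
```

Routing everything through the hub keeps the central site as the single place that tracks every copy, at the cost of two WAN transfers for edge-to-edge moves.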

More in part two

In this first part, we described the fundamentals and key architecture aspects of Red Hat OpenStack Platform DCN, an OpenStack solution to manage the current and future challenges of edge computing.

We also took a deep dive into edge image management with Glance multistore, which manages distributed image copies across remote sites and storage backends.

In the next and final part of this series, we will describe how Cinder, the OpenStack persistent storage service, is designed and configured, and how to deploy and operate DCN with Red Hat OpenStack Platform director. Finally, we will conclude this series with some hands-on commands that outline the final user experience.

About the authors

Gregory Charot is a Senior Principal Technical Product Manager at Red Hat covering OpenStack storage, Ceph integration, OpenShift core storage and cloud provider integrations. His primary mission is to define product strategy and design features based on customer and market demands, as well as driving the overall productization to deliver production-ready solutions to the market. Passionate about open source and Linux since 1998, Charot worked as a production engineer and system architect for eight years before joining Red Hat in 2014, first as an Architect, then as a Field Product Manager prior to his current role as a Technical Product Manager for the Hybrid Platforms Business Unit.

John Fulton works for OpenStack Engineering at Red Hat and focuses on OpenStack and Ceph integration. He is currently working upstream on cephadm integration with TripleO for the OpenStack Wallaby release. He helps maintain TripleO support for the storage at the edge use case and also works on deployment improvements for hyper-converged OpenStack/Ceph (i.e., running Nova Compute and Ceph OSD services on the same servers).
