The EU’s MiFID II regulations cover far more than “Regulatory Technical Standard 25” (abbreviated to RTS 25 from now on), but the focus of this blog is RTS 25 and achieving compliance with the time synchronisation requirements it entails.

At a high level, the goal of MiFID II is to enforce “better regulated and transparent financial markets”, and it introduces a new set of governance rules around the quality of the information that financial institutions collect and report. One aspect of data quality mandated under RTS 25 is clock accuracy, which directly affects the transparency of an institution’s records. Knowing precisely when a transaction took place determines how useful these records are, especially for systems such as those conducting high-frequency trading, where the volume and order of trades are critical for regulatory inspection.

RTS 25 lists different time requirements for different systems; the most stringent is accuracy “better than 100 microseconds” for the system clock used by applications to timestamp transactions. In this post we look at the testing conducted by Red Hat and the levels of accuracy that can be achieved with several different setups. Note that the largest variability in accuracy that we observed came from the quality of the network and from congestion that interrupted timestamp messages.
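As a minimal sketch of the pass/fail test implied by the 100 µs figure, the Python below checks whether every measured clock offset stays strictly inside the limit ("better than" is read as strictly less than). The function name, the nanosecond units and the sample values are illustrative assumptions, not part of any Red Hat tool; how you collect the offsets is up to your monitoring setup.

```python
from typing import Iterable

# Tightest RTS 25 figure discussed in this post: better than 100 microseconds.
RTS25_LIMIT_NS = 100_000


def within_limit(offsets_ns: Iterable[int], limit_ns: int = RTS25_LIMIT_NS) -> bool:
    """Return True if every offset is strictly smaller in magnitude than limit_ns.

    Hypothetical helper: offsets are signed clock offsets from the reference
    time source, in nanoseconds.
    """
    offsets = list(offsets_ns)
    if not offsets:
        # No measurements means compliance cannot be demonstrated.
        return False
    return max(abs(o) for o in offsets) < limit_ns


if __name__ == "__main__":
    samples = [1_200, -850, 9_400, -3_100]  # illustrative values only
    print(within_limit(samples))  # True
```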

The error introduced by individual network components can be estimated using a setup similar to the configuration depicted below. With this approach, we can directly measure the delay and asymmetry caused by a single network device and extrapolate from it to estimate realistic errors in large deployments. The accuracy of time synchronisation depends directly on the quality and stability of the components used to build your network; unsuitable network design or equipment is likely the largest risk to achieving the accuracy required for compliance.
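The per-device measurement rests on a simple relation: two-way time protocols assume the delay is the same in each direction, so a device that delays one direction more than the other shifts the computed offset by half the difference. The sketch below applies that relation and then extrapolates linearly across several hops. Treating every hop's asymmetry as adding up in the same direction is our worst-case assumption, and the function names and numbers are hypothetical.

```python
def offset_error_ns(forward_ns: float, reverse_ns: float) -> float:
    """Offset error caused by path asymmetry in a two-way time exchange.

    Two-way protocols assume forward and reverse delays are equal, so any
    difference shifts the computed offset by half of that difference.
    """
    return (forward_ns - reverse_ns) / 2


def worst_case_path_error_ns(per_device_asymmetry_ns: float, hops: int) -> float:
    """Conservative extrapolation: every hop's asymmetry adds in the same direction."""
    return hops * abs(per_device_asymmetry_ns) / 2


if __name__ == "__main__":
    # Illustrative numbers: one device delays the forward path 2 µs more than the reverse.
    print(offset_error_ns(5_000, 3_000))       # 1000.0
    print(worst_case_path_error_ns(2_000, 5))  # 5000.0
```

A real path rarely has all its asymmetries aligned, so this bound is pessimistic; it is useful mainly for checking whether a planned topology leaves headroom under the limit.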

Time Synchronization and Red Hat Enterprise Linux

Within the data centre, RTS 25 means that a number of Red Hat Enterprise Linux systems must now be synchronised to a level of accuracy that was not previously required. There are two main ways to do this: Network Time Protocol (NTP) or Precision Time Protocol (PTP). For an in-depth discussion of these two protocols and the benefits of each, please see this previous article.

PTP is available in RHEL 6.4+ and 7.0+ and supports both hardware and software timestamping. PTP is very sensitive to network jitter, so if you use it for synchronization, the network hardware (switches and routers) should support PTP as well. NTP is available via the ntp and chrony packages; we prefer chrony for its accuracy, and it is available in RHEL 6.9+ and 7.0+.
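Both protocols estimate clock offset from four timestamps taken during a request/response exchange; software versus hardware timestamping is about where those timestamps are captured (in the kernel or on the NIC). The sketch below uses the NTP naming convention; PTP's delay request-response mechanism applies the same idea with different message names. The class and the example values are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Exchange:
    """Timestamps (ns) from one NTP-style client/server exchange.

    t1: client transmit, t2: server receive, t3: server transmit, t4: client receive.
    t1 and t4 are read from the client clock; t2 and t3 from the server clock.
    """
    t1: int
    t2: int
    t3: int
    t4: int

    def offset_ns(self) -> float:
        # Estimated server-minus-client offset, assuming symmetric path delay.
        return ((self.t2 - self.t1) + (self.t3 - self.t4)) / 2

    def delay_ns(self) -> int:
        # Round-trip network delay, excluding the server's processing time.
        return (self.t4 - self.t1) - (self.t3 - self.t2)


if __name__ == "__main__":
    # Client clock 500 ns behind the server, 10 µs each way, 2 µs server turnaround.
    ex = Exchange(t1=0, t2=10_500, t3=12_500, t4=22_000)
    print(ex.offset_ns(), ex.delay_ns())  # 500.0 20000
```

The symmetric-delay assumption in `offset_ns` is exactly why network jitter and asymmetry matter so much for accuracy.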

RHEL 7.4 adds support for the following:

- Software and hardware timestamping in the chrony package, improving the accuracy of NTP. Read this and this.

- A new ptp_kvm device that allows RHEL 7.4+ virtual guests to synchronise accurately to the host clock. For more details on the ptp_kvm device in Linux, please see this.
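On a guest, the ptp_kvm driver exposes the host clock as a PTP hardware clock device under /dev/ptpN, which chrony can then use as a reference clock (for example with a `refclock PHC /dev/ptp0` line). As a hedged sketch, the Python below locates that device by reading each clock's `clock_name` attribute in sysfs. The expected name "KVM virtual PTP" is what we believe the upstream driver registers; treat it as an assumption and confirm it on your own guest. The helper name is hypothetical.

```python
from pathlib import Path
from typing import Optional

# Assumed name registered by the Linux ptp_kvm driver; verify on your guest with
# `cat /sys/class/ptp/*/clock_name`.
KVM_PTP_NAME = "KVM virtual PTP"


def find_kvm_ptp_device(sysfs_root: str = "/sys/class/ptp") -> Optional[str]:
    """Return the /dev path of the ptp_kvm clock, or None if it is not present."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return None
    for entry in sorted(root.iterdir()):
        name_file = entry / "clock_name"
        if name_file.is_file() and name_file.read_text().strip() == KVM_PTP_NAME:
            # The sysfs entry ptpN corresponds to the character device /dev/ptpN.
            return f"/dev/{entry.name}"
    return None


if __name__ == "__main__":
    device = find_kvm_ptp_device()
    print(device or "ptp_kvm clock not found (is the ptp_kvm module loaded?)")
```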

Many factors outside the control of the Red Hat Enterprise Linux system also affect accuracy, including:

- Accuracy and stability of the time sources (NTP servers and/or PTP grandmasters).

- Stability and asymmetry of network latency.

- Asymmetry in timestamping errors on NTP/PTP clients.

- Stability of the system clock.

- Stability of the NIC clock and accuracy of its readings.

- Configuration of NTP/PTP. NTP needs some tuning from the defaults; for example, we would expect at least the minpoll and maxpoll settings to be tuned for synchronization in a local network.
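To make the minpoll/maxpoll point concrete, here is a small Python sketch that emits chrony.conf lines for a local network. The `server`, `iburst`, `minpoll`, `maxpoll`, `hwtimestamp` and `driftfile` directives are standard chrony configuration; the host names, the interface name eth0 and the poll values 2 and 4 are hypothetical examples, not settings Red Hat tested or recommends. minpoll and maxpoll are powers of two in seconds, so 2 and 4 mean polling between every 4 and 16 seconds, compared with chrony's defaults of 6 and 10 (64 and 1024 seconds).

```python
from typing import Optional, Sequence


def chrony_conf(servers: Sequence[str], minpoll: int = 2, maxpoll: int = 4,
                hw_iface: Optional[str] = None) -> str:
    """Build chrony.conf lines for LAN synchronization with shortened polling.

    minpoll/maxpoll are log2 seconds (2 -> 4 s, 4 -> 16 s). The defaults here
    are illustrative assumptions, not tested recommendations.
    """
    if minpoll > maxpoll:
        raise ValueError("minpoll must not exceed maxpoll")
    lines = [f"server {s} iburst minpoll {minpoll} maxpoll {maxpoll}" for s in servers]
    if hw_iface:
        # Enable NIC hardware timestamping on this interface (RHEL 7.4+ per this post).
        lines.append(f"hwtimestamp {hw_iface}")
    lines.append("driftfile /var/lib/chrony/drift")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical LAN time servers and interface name.
    print(chrony_conf(["ntp1.example.com", "ntp2.example.com"], hw_iface="eth0"), end="")
```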

With these factors in mind, the following table shows the bounds of accuracy of the system clock relative to the time sources that we expect for a physical server running Red Hat Enterprise Linux in a network suitable for accurate synchronization:

| Protocol | SW timestamping | HW timestamping | Accuracy (physical server) |
|---|---|---|---|
| NTP | No | No | 100 µs |
| NTP | Yes* | No | 30 µs |
| NTP | No | Yes* | 10 µs |
| PTP | No | No | Not supported |
| PTP | Yes | No | 30 µs |
| PTP | No | Yes | 3 µs |

*Needs RHEL 7.4+
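One way to use the table is to encode it as data and filter it against the requirement. The sketch below does that in Python; the `margin` safety factor and the helper names are our own illustrative assumptions, not a regulatory or Red Hat figure. Note that reading “better than 100 µs” strictly means the plain NTP bound of 100 µs leaves no headroom.

```python
from typing import List, NamedTuple, Optional


class Setup(NamedTuple):
    protocol: str
    sw_timestamping: bool
    hw_timestamping: bool
    accuracy_us: Optional[int]  # None means the combination is not supported


# Expected accuracy bounds for a physical server, taken from the table above.
PHYSICAL_SERVER: List[Setup] = [
    Setup("NTP", False, False, 100),
    Setup("NTP", True, False, 30),
    Setup("NTP", False, True, 10),
    Setup("PTP", False, False, None),
    Setup("PTP", True, False, 30),
    Setup("PTP", False, True, 3),
]


def setups_better_than(limit_us: float, margin: float = 1.0) -> List[Setup]:
    """Return setups whose bound, scaled by a safety margin, is strictly below limit_us."""
    return [
        s for s in PHYSICAL_SERVER
        if s.accuracy_us is not None and s.accuracy_us * margin < limit_us
    ]


def describe(s: Setup) -> str:
    stamping = "HW" if s.hw_timestamping else ("SW" if s.sw_timestamping else "no")
    return f"{s.protocol} with {stamping} timestamping ({s.accuracy_us} µs)"


if __name__ == "__main__":
    # A 5x margin is an arbitrary illustrative choice.
    for s in setups_better_than(100, margin=5):
        print(describe(s))
```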

As a follow-on, we are also starting a round of testing with Red Hat Virtualization (RHV) hosting Red Hat Enterprise Linux guests, using the new ptp_kvm driver available in RHEL 7.4+ running on RHV 4.1+. Although Red Hat has not yet collected enough data to publish a table like the one above for physical servers, our initial testing indicates that with the virtual PTP driver, RHEL guests achieve the same level of accuracy as the RHV host's clock synchronisation.

We intend to do further testing to confirm these initial observations.

Resilience of protocols

As part of the testing, Red Hat assessed the impact of network load on the accuracy of synchronization. This included benchmarking the stability achievable with the new chrony-based NTP solution, because NTP is better understood in most existing enterprises and may in some cases be preferred over PTP to avoid expensive upgrades to switches and routers with proper PTP support.

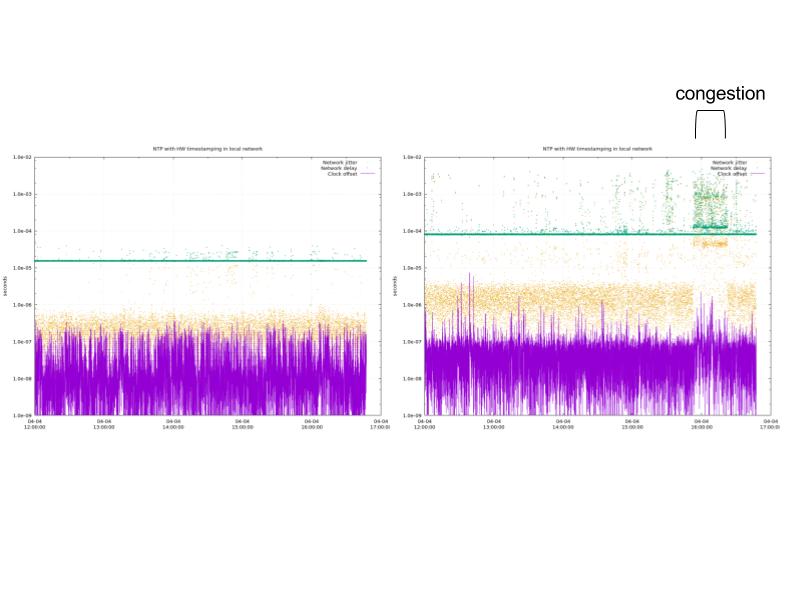

The first set of results shows that an NTP client could, most of the time, synchronize the clock with stability better than 1 microsecond. During periods of heavier network activity, the clock offset stayed below 10 microseconds.
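Results like these are summaries of a series of offset samples. The Python sketch below shows one way to compute such a summary: the fraction of samples within a threshold and the worst observed offset. The function name and the sample values are hypothetical and are not Red Hat's measured data; how the samples are collected is left open.

```python
from typing import Sequence, Tuple


def stability_summary(offsets_ns: Sequence[float],
                      threshold_ns: float = 1_000) -> Tuple[float, float]:
    """Return (fraction of samples with |offset| < threshold_ns, max |offset|)."""
    if not offsets_ns:
        raise ValueError("need at least one offset sample")
    magnitudes = [abs(o) for o in offsets_ns]
    within = sum(1 for m in magnitudes if m < threshold_ns)
    return within / len(magnitudes), max(magnitudes)


if __name__ == "__main__":
    # Illustrative samples only (ns), including one spike during heavier traffic.
    samples = [120, -340, 560, -80, 7_900, 210, -450, 900]
    fraction, worst = stability_summary(samples)
    print(f"{fraction:.0%} within 1 µs, worst {worst / 1000:.1f} µs")
```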

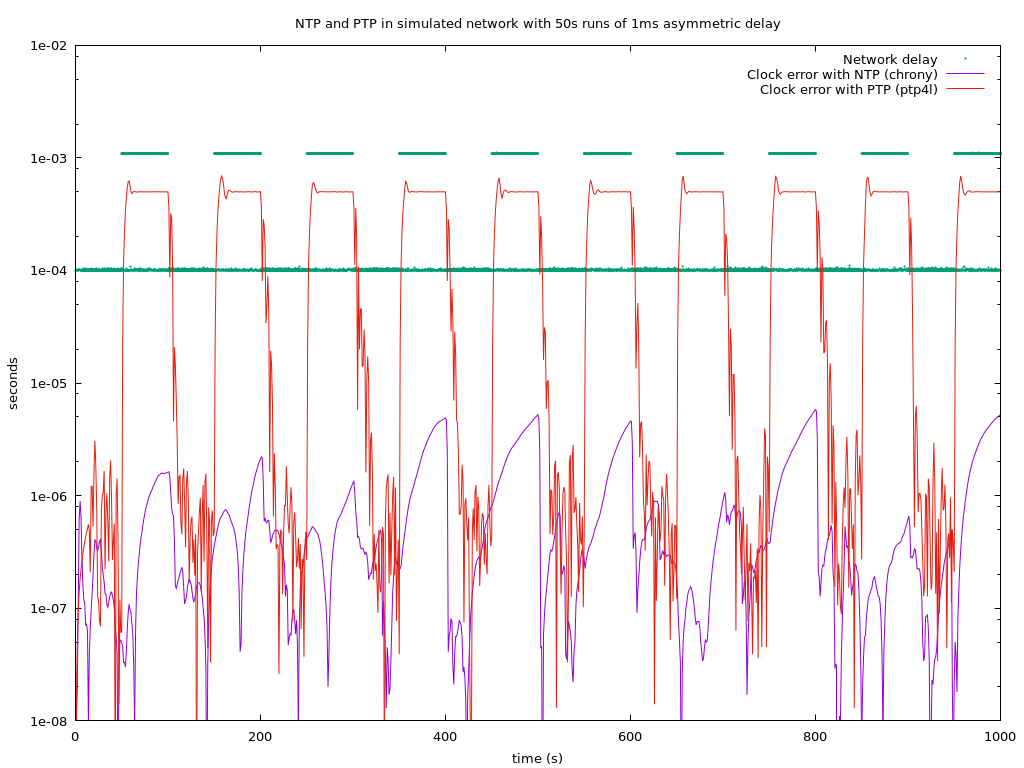

The second test established how important it is, when using PTP, to have good-quality network switches and routers with PTP support, since PTP relies on these to remove network jitter and asymmetry.

In a simulated network with no HW timestamping support from the network devices, we introduced a large asymmetric delay for 50 seconds at a time, equivalent to very heavy network traffic in one direction. With NTP the clock error stayed below 10 microseconds, but with PTP the error quickly reached hundreds of microseconds.

Of course, synchronizing the time accurately is only one of the challenges posed by MiFID II and other industry regulation. Reporting and alerting on this synchronization will be critical to proving compliance, and we plan to explore this in a future post.
