So far in this series I have established that the following are all necessary components of the modernization project planning process:

- code bases that will provide value when modernized to a future state

- a general strategy on how that code should be changed to achieve the future state

- a team that can effectively work in that enterprise environment

- all the information the team needs to start a brief onboarding course so team members are empowered and enabled to work

- a place to track work progress that teams outside of the product team can review if they have questions

- work that is clearly defined and connected to the outcome of the modernization

- a developer contribution guide that gives team members some direction on how to work together (e.g., branching strategies, naming conventions, guidance around test coverage)

In this article we will dive into some of the strategies to make code more "changeable".

At this point, you have some existing code that you wish to refactor and a general technical direction you want to take. Remember, you want to make your application more “changeable” and easier to deploy in a variety of environments by creating more testable logic across the code base, while also making the code and configuration more container-friendly.

In this article, I will discuss a few fundamentals for creating an environment where it’s safe to change code such as:

- adding observability to non-production environments,

- enforcing that the code is in source control,

- checking your dependencies,

- making sure your choices can support your long-term plan, and

- thinking about a backup plan should technology or platforms involved in the modernization strategy not work out.

Setting the foundation for safer changes

Before you start changing code, however, you need to set up the right environment so you can make changes and recover from disruption in a timely fashion.

Adding observability to non-production environments

There needs to be a place to deploy where it’s okay for things to break. And when they break, the team must have easy access to logs and metrics from this environment to troubleshoot. If this is not possible, then you might reconsider if the application you have chosen is a good candidate for modernization.

Should you set up CI/CD now?

Hopefully you already have continuous integration/continuous deployment (CI/CD) systems set up (build and deployment pipelines), but if you don't, it’s up to the team to decide if they want to invest time to set this up. CI/CD provides a lot of benefits, but at the start it’s okay to just get going by doing manual deployments, provided they are not too painful for the team.

Remember, if the deployments are complex or time-consuming, at some point you will have spent the same time doing manual deployments as it would have taken to get CI/CD set up.

Based on this logic, it will most likely make sense to get CI/CD set up before getting started. Just don’t lose sight of the migration goals and outcomes in favor of figuring out pipelines, unless your stakeholders clearly understand the gains of such an investment.
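To make the break-even reasoning concrete, here is a minimal sketch of the arithmetic. The class name, method name, and all of the hour estimates are hypothetical placeholders; a team would substitute its own numbers.

```java
public class DeploymentBreakEven {

    /**
     * Returns the number of deployments at which the cumulative time saved by
     * automation covers the one-time cost of setting up a pipeline.
     * All inputs are estimates supplied by the team (assumed values).
     */
    public static long breakEvenDeployments(double setupHours,
                                            double manualHoursPerDeploy,
                                            double automatedHoursPerDeploy) {
        double savedPerDeploy = manualHoursPerDeploy - automatedHoursPerDeploy;
        if (savedPerDeploy <= 0) {
            throw new IllegalArgumentException("automation must save time per deployment");
        }
        return (long) Math.ceil(setupHours / savedPerDeploy);
    }

    public static void main(String[] args) {
        // Assumed numbers: 40 hours to build the pipeline, 2 hours per manual
        // deployment, 0.25 hours of attention per automated deployment.
        long deployments = breakEvenDeployments(40, 2.0, 0.25);
        System.out.println("Pipeline pays for itself after " + deployments + " deployments");
    }
}
```

With these assumed inputs the pipeline pays for itself after 23 deployments. If the team deploys several times a week, that point arrives within a couple of months, which is the kind of figure that can help the conversation about whether to invest in pipelines up front.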

Red Hat has some interesting open source projects with some pipeline samples that can help in this area. Here is an example of building such a pipeline using Tekton.

Is the code in source control?

The code should be in a version control product like GitHub. There’s a lot of good information out there around using libraries and code sharing (such as The Twelve-Factor App: Codebase), but for now, let’s just say if the code is not in something like GitHub, it needs to be. Specifically, you need the following capabilities:

- The ability to create code branches allowing team members to work on the same code concurrently.

- A way to enforce a review process that must occur before code can be merged into the branch that is designated as the “main” branch (i.e., has the best working version of the code).

- The ability to revert code to a previous state from the change history.

As previously discussed, it’s important to track work using a tool like Jira. Personally, I like to name commits in source control using the ticket number that describes the work in the task tracking tool. Jira even offers a feature called Smart Commits that further integrates code being checked into source control with the Jira ticket where the work is defined.
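A naming convention like this is easier to keep when it is checked automatically, for example from a commit hook or a pipeline step. The sketch below assumes a convention where every commit message starts with a ticket key such as "WOD-123". The class name, the sample key, and the regular expression are illustrative assumptions; the pattern only approximates a common Jira key shape and should be adjusted to match your project keys.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class CommitMessageCheck {

    // Assumed convention: message begins with an uppercase project key,
    // a dash, and a ticket number (for example "WOD-123").
    private static final Pattern TICKET_PREFIX =
            Pattern.compile("^([A-Z][A-Z0-9]+-\\d+)\\b");

    /** Returns the leading ticket key, or empty if the message has none. */
    public static Optional<String> ticketKey(String commitMessage) {
        Matcher matcher = TICKET_PREFIX.matcher(commitMessage.trim());
        return matcher.find() ? Optional.of(matcher.group(1)) : Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(ticketKey("WOD-123 Move workout logic into service")); // Optional[WOD-123]
        System.out.println(ticketKey("fixed stuff"));                             // Optional.empty
    }
}
```

A check like this can reject commits without a key, or simply warn, depending on how strict the team wants the contribution guide to be.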

Many source code management (SCM) tools (GitLab, Bitbucket, GitHub, etc.) provide built-in task-tracking features. These can be great, but some people involved in the software delivery process in an enterprise might not have access to such tools. It’s important that the work being done is observable to all interested parties; otherwise, escalations and meetings will ensue.

What do the code base and dependencies look like?

The Twelve-Factor App methodology can be used to help understand how dependencies are managed. There should be a declarative command that allows the application to be built and for dependencies to be packaged into the deployable artifact.

In most enterprise environments, those dependencies should come from an enterprise-blessed repository to ensure the dependencies downloaded are actually the correct ones and not something malicious. If such a repository does not exist, it might be tricky to set up. This is where the Wise Sage can help the team have the right conversations to set this up.

In the Java space, my favorite tool for dependency management is Maven, although Gradle works fine as well. Updating the project to use Maven will benefit the project by giving it a structure that is well-documented and familiar to those who use Maven. This makes learning the code base a bit easier for new developers.

Start by re-organizing the packages by feature

Now, let’s get into cleaning up the code. As an example, I will use a now-retired fitness application called Woddrive that some friends and I worked on for a decade. I learned a few lessons on this little side project that are all relevant to this discussion.

Does your legacy code look like this?

This was taken around 2015 from Woddrive. When I started my career the trend was to name packages like this:

- Services

- Controllers

- Model

- Repo

If the top-level packages are named this way, the first task will be to create package names for the functionality available within the application.

Once you get into the feature package, it's okay to defer to stereotypes people are familiar with (i.e., controllers, services) within a feature. This will help developers working with your code find what they are looking for once they delve into a feature. (For more ideas about how to organize code, Sam Newman's book Building Microservices references "seams" in the code as the boundaries for services and includes a guide on how to peel out code.)

Speaking of microservices, you might be tempted to say, “If I’m going to group code by features like this, why not just make these features their own applications” (i.e., their own microservices)? An independent deployable service would have less code; therefore, it would be easier to clean up and test. There is truth to this. Plus, difficult team members could be given their own service/code and stop driving the rest of the team crazy by failing to collaborate in a shared code base. Sounds like a dream, doesn’t it? It can easily turn into a nightmare if you are not careful.

A cautionary tale: Don’t rush into microservices

Around 2016, I started hearing a lot about microservices. If Netflix and Amazon are doing it, shouldn’t we all? In fact, a senior member of the architecture team in the enterprise I was consulting at during that time met with our team and told us if we were not coding microservices we were creating technical debt. What choice did I have? I dove straight in.

Luckily, Spring Cloud and Spring Boot had come along, allowing us mere mortal developers to make use of valuable microservice patterns (e.g., service discovery, circuit breaker).

Once again I will refer to my now-defunct application Woddrive. I thought it better to get some experience with microservices in my own application before doing it for clients. So, in 2017 I decided to break up the functionality it provided in one big deployable artifact into multiple services. It resulted in a distributed application that made use of ALL of Netflix OSS’s bells and whistles. It was hard to pull together; I needed a bunch of help from my friend Josh Long to get it done. But once it was all running on my local machine, I felt really smart and cool. I think I even got a little taller that week. That all ended when I had to operate it.

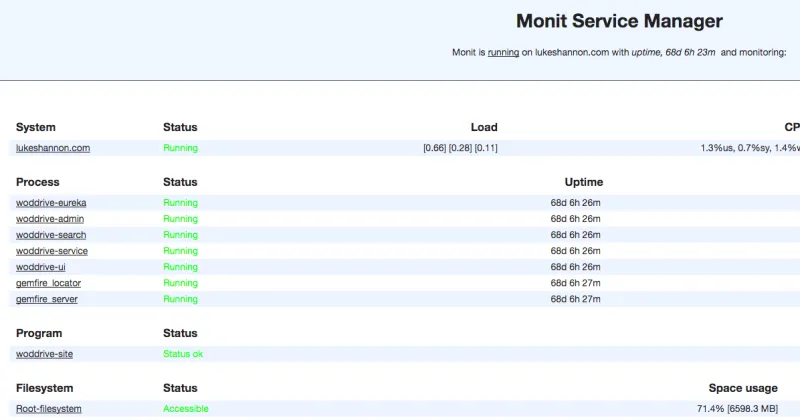

At the time we were still running everything on VMs. Not an ideal situation for microservices. I ended up having to write a bunch of complex shell scripts to get this running on the VMs I was managing. I had to add Monit to keep the system "alive."

But of course, I didn’t keep up with patching the environment, and multiple machines were compromised. I ended up running all the services on a single VM and later collapsing them into three services. Moving those services to the now-defunct Pivotal Web Services (PWS), a managed version of Tanzu (called Pivotal Cloud Foundry, or PCF, back then), helped with the operational complexity. But, as we will discuss in the next section, I learned another lesson with this move.

At the end of it all, I realized it would have been easier to have kept it as a well-composed monolith.

The point is, there is no need to rush into microservices. In fact, Amazon started with a monolithic code base. The move to an API-driven architecture came out of the challenges of dealing with the massive success surrounding their monolith. But operating an at-scale microservice architecture was so hard they ended up coming up with a platform to provision infrastructure on demand that would later become AWS.

My advice is to do a couple of refactor passes on the monolithic code base before spinning out microservices. The good news is that if your packages are organized by feature, you are already creating clean separation points to move code into its own application.

A cautionary tale: Consider the long term and always have a backup plan

As hinted at in the previous section, in 2020 we moved our microservice architecture to PWS. It was a bit of work to adapt our configuration and workflows for the new platform, but nothing too earth-shattering. It turned out to be an awesome move, as it ran there with minimal stress for over a year. We could easily obtain logs and metrics, we no longer worried about machines being compromised, we finally got a good non-production environment, and updating production became more predictable and reliable. But in 2021 Pivotal took down PWS.

We got pretty attached to working with PCF for this application: “Here is my code. Run it in the cloud. I don’t care how!” In our modernization effort to get the application on there, we updated the application to make use of all that platform had to offer. When that platform was taken away, the necessary application-/deployment-side work to fill in for all the magic of that platform turned out to be something we just didn’t want to do.

The application was Java-based, so in theory it could run anywhere. The thing is, PCF did a lot for a deployed code base, specifically around how the container was configured and how ingress/egress was managed. We could have run our own instance of that PaaS. But the tiny team of devs (which included me) that worked on this just wasn’t up for learning how to operate a PaaS, and the cost of the infrastructure it needed was too high for this use case. So, when faced with the choice of updating it again to run on a new platform, we took an option that exists for some legacy applications: retirement. It was not what we wanted, but we all had bigger tasks on our plates.

There are three lessons here:

- Assume that no technology lasts forever and gravitate towards solutions that have market momentum. Just because a solution is great does not mean it will last.

- Make sure the direction of your migration is sustainable for your budget and organization over time, assuming the worst happens (e.g., the cost of the solution goes up or the effort to run the solution dramatically increases).

- Have a backup strategy should the issues outlined in the previous points become reality.

Break up complexity and increase modularity

Now that you have organized your code by feature and then placed the sub-packages of the features into our stereotypes, you can begin by scanning each class, looking for two different things:

- complexity

- obvious bugs

I strongly suggest using tools for this. IntelliJ has a lot of great built-in tools that help with this (the Analyze function is VERY good). Personally, I use the Spring Tool Suite, which is based on Eclipse. This is an old habit: I know all the shortcuts, it works for me and I like it. But I don’t judge—use whatever tool works for you, provided you can get some of the functionality I am about to discuss.

Here are the plug-ins I like in STS:

I will review the ones relevant to this refactor discussion.

SpotBugs

SpotBugs is a program that uses static analysis to look for bugs in Java code. It will give you real-time feedback on known bugs every time you save the code. At the time of writing, it looks for over 400 different known issues.

Here is a sample of a bug being noted in the project explorer.

Below, you can see the details on this bug. It’s not a critical issue, so maybe it can be fixed once all the critical issues have been fixed.

A team decision can be made on the types of things that the plug-in reports (these settings are configurable in most plug-ins).

I would advise starting with the critical bugs first. Fixes can be done iteratively.

SonarLint

Out-of-the-box, SonarLint reports issues on the files you're editing. It will point out known issues and anti-patterns in your code, while you are writing it.

Once again, it might be best to focus on the critical issues. Also, I would not run a scan on your full code base; this can take a very long time and cause Eclipse to hang during the scan.

The most important thing SonarLint does is provide a complexity score (which is configurable). To lower the score, logic needs to be simplified and/or decomposed into methods or delegate classes.
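As an illustration of that kind of decomposition, the sketch below shows the same logic twice: once as a deeply nested method of the sort complexity checks tend to flag, and once with guard clauses and small helper methods. The class, the method names, and the rating rules are invented for this example and are not taken from Woddrive.

```java
public class WorkoutRating {

    // Before: nested branches in a single method make the logic hard to follow.
    public static String rateNested(int minutes, int reps, boolean completed) {
        String rating;
        if (completed) {
            if (minutes > 0) {
                if (reps / minutes >= 10) {
                    rating = "strong";
                } else {
                    if (reps / minutes >= 5) {
                        rating = "steady";
                    } else {
                        rating = "easy";
                    }
                }
            } else {
                rating = "invalid";
            }
        } else {
            rating = "incomplete";
        }
        return rating;
    }

    // After: guard clauses handle the edge cases first, and the pace logic
    // moves into small methods that can be tested on their own.
    public static String rate(int minutes, int reps, boolean completed) {
        if (!completed) return "incomplete";
        if (minutes <= 0) return "invalid";
        return ratingForPace(repsPerMinute(minutes, reps));
    }

    public static int repsPerMinute(int minutes, int reps) {
        return reps / minutes; // integer division, same as the nested version
    }

    public static String ratingForPace(int pace) {
        if (pace >= 10) return "strong";
        if (pace >= 5) return "steady";
        return "easy";
    }

    public static void main(String[] args) {
        // Both versions agree; only the structure changed.
        System.out.println(rateNested(10, 120, true)); // strong
        System.out.println(rate(10, 120, true));       // strong
    }
}
```

Keeping the old method around temporarily and comparing its output with the new one over a range of inputs is a cheap way to gain confidence that a refactor did not change behavior.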

Encapsulating logic for testability

Now that you have some tools, you can put together a backlog of work in the tracking tool. Remember, the goal is to make the code less complex, clearly encapsulated, and backed with interface contracts to make logic easier to mock, making tests easier to write and more effective. The Project Lead will have to decide on a strategy to reach this goal.

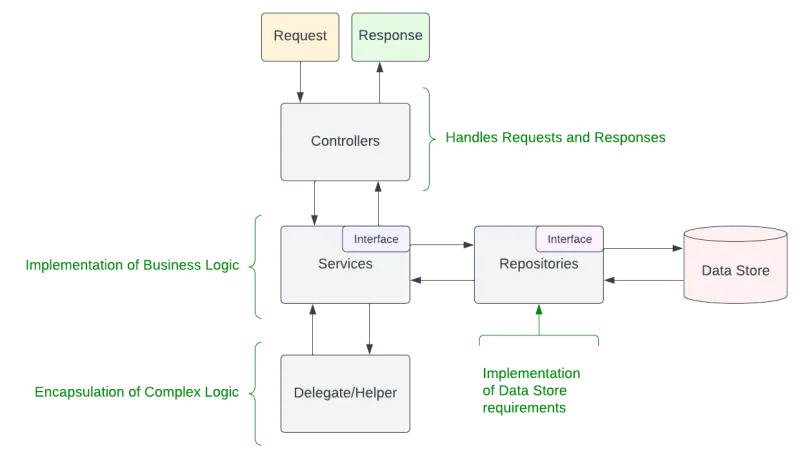

Here is an example of how I like to do it:

- Put processing of requests and responses into controllers. There should be no business logic in controllers.

- Move all business logic needed to fulfill those requests and build the responses into services.

- Using complexity scores as a guide, break out services logic into:

  - clean and concise methods

  - delegate/utility classes

- Consider using interfaces for such classes so, if they can’t be mocked easily, a test implementation can be coded that meets the desired contracts. For an excellent example of interface design and implementation, see Spring Data’s Repository classes.

- The service class itself should have an interface defining its contract. This gives you the option to implement a test version of the service if, for whatever reason, the code turns out to be unmockable.

- All CRUD (create, read, update, delete) operations should be in a repository class. I would strongly consider creating an interface, so there is a clear contract.

- Create POJOs (plain old Java objects) to contain data passed to and returned from the service layer. These might otherwise end up being entity references (e.g., Persistable), so a DTO (data transfer object) version of the object is usually required.

With this in place, you can begin writing the tests.
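The layering described above might look something like the following in plain Java, with no framework involved. Every name here (WorkoutDto, WorkoutRepository, WorkoutService, and so on) is hypothetical and exists only to show the shape: a controller that translates requests, a service behind an interface that holds the business logic, a repository contract for CRUD, a DTO, and a hand-written test implementation of the repository.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class LayeringSketch {

    // DTO: plain data passed between layers, with no persistence concerns.
    public static final class WorkoutDto {
        public final long id;
        public final String name;
        public final int reps;

        public WorkoutDto(long id, String name, int reps) {
            this.id = id;
            this.name = name;
            this.reps = reps;
        }
    }

    // Repository contract: CRUD operations only.
    public interface WorkoutRepository {
        Optional<WorkoutDto> findById(long id);
        void save(WorkoutDto workout);
    }

    // Service contract: business logic lives behind this interface.
    public interface WorkoutService {
        WorkoutDto addReps(long id, int extraReps);
    }

    public static class DefaultWorkoutService implements WorkoutService {
        private final WorkoutRepository repository;

        public DefaultWorkoutService(WorkoutRepository repository) {
            this.repository = repository; // dependency is handed in, not created here
        }

        @Override
        public WorkoutDto addReps(long id, int extraReps) {
            if (extraReps <= 0) {
                throw new IllegalArgumentException("extraReps must be positive");
            }
            WorkoutDto current = repository.findById(id)
                    .orElseThrow(() -> new IllegalArgumentException("no workout " + id));
            WorkoutDto updated = new WorkoutDto(current.id, current.name, current.reps + extraReps);
            repository.save(updated);
            return updated;
        }
    }

    // Controller: parses the request, formats the response, delegates the rest.
    public static class WorkoutController {
        private final WorkoutService service;

        public WorkoutController(WorkoutService service) {
            this.service = service;
        }

        public String handleAddReps(String idParam, String repsParam) {
            WorkoutDto result = service.addReps(Long.parseLong(idParam), Integer.parseInt(repsParam));
            return result.name + ":" + result.reps;
        }
    }

    // Test implementation of the repository contract, backed by a map.
    public static class InMemoryWorkoutRepository implements WorkoutRepository {
        private final Map<Long, WorkoutDto> store = new HashMap<>();

        @Override
        public Optional<WorkoutDto> findById(long id) {
            return Optional.ofNullable(store.get(id));
        }

        @Override
        public void save(WorkoutDto workout) {
            store.put(workout.id, workout);
        }
    }

    public static void main(String[] args) {
        InMemoryWorkoutRepository repository = new InMemoryWorkoutRepository();
        repository.save(new WorkoutDto(1L, "Fran", 45));
        WorkoutController controller =
                new WorkoutController(new DefaultWorkoutService(repository));
        System.out.println(controller.handleAddReps("1", "5")); // prints Fran:50
    }
}
```

Because the service only knows the repository through its interface, the in-memory version can stand in for a real database in tests, and the controller can be exercised the same way with a stub service.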

Should you convert the code to a framework?

Frameworks like Spring or Quarkus provide a lot of benefits. Both provide inversion of control—a powerful pattern that makes it easy to decouple logic from dependencies, making the logic more testable. Both also provide a host of annotations that can make developers' lives easier in the background. As I am more familiar with Spring, I will provide examples from that framework.

From a testing point of view, Spring Boot provides some magical test annotations that set up test infrastructure for you without having to write any code yourself. For example, when working with a Spring Boot application, @DataJpaTest by default configures an embedded in-memory database (when one is available on the classpath) and wires it into the repository layer, so you can load whatever data you want for a test.

Similarly, @WebMvcTest auto-configures MockMvc, which lets you send requests to your controllers without any effort on the developer side to figure out how to manage an application server.

I would recommend any Project Lead use a framework that encourages loosely coupled dependencies and use of interfaces and provides functionality to help with test creation. Just remember, converting to a framework can be a major time investment; the Project Lead should ensure the effort is accurately reflected in the time tracking tool, and The Sponsor is aware of where this time and effort is going. The reason for this is there might be a fair number of releases where there is nothing new to see from a Client point of view, and this can make The Client wonder where the effort is going.

No code changes does not mean no modernization

The application might be a vendor-packaged application, meaning the team does not have access to the code. Perhaps the vendor has provided an image or binary. In this case, there can still be modernization of the environment the code runs in. This might involve adding automation or an opinionated workflow. This is a concept we will come back to.

Next, we'll talk about an optimal way to get all this work done and get into the details around creating some testing to verify quality.

About the author

Luke Shannon has 20+ years of experience getting software running in enterprise environments. He started his IT career creating virtual agents for companies such as Ford Motor Company and Coca-Cola. He has also worked in a variety of software environments, particularly financial enterprises, and has experience that ranges from creating ETL jobs for custom reports with Jaspersoft to advancing PCF and Spring Framework adoption in Pivotal. In 2018, Shannon co-founded Phlyt, a cloud-native software consulting company with the goal of helping enterprises better use cloud platforms. In 2021, Shannon and team Phlyt joined Red Hat.
