Recently, when my workplace laptop needed a hardware refresh and I wanted to replace it with an ARM-based laptop with Linux (a MacBook), I was tasked with comparing the efficiency of using CPU virtualization versus emulation.

This article presents my research and benchmark data comparing the performance and power consumption of workloads running under virtualization and emulation.

We live in exciting times: After many years with the x86 architecture dominating the market, there are new players. For some years, Amazon has been offering Graviton instances, which use an ARM-based CPU design. These instances are competitively priced compared to x86 instances. CPUs have widely supported running virtual machines (VMs) with so-called hardware virtualization support since the 2000s, but this is limited to VMs of the same CPU architecture. Running VMs of a different CPU architecture still requires more software involvement through a process known as emulation.

The commonly known reason for using virtualization instead of emulation is performance. But what exactly is the impact on performance and power consumption? In use cases like “I want to replicate an x86 issue on my aarch64 system” or “I need to run this old application,” performance is not that important. Here, emulation can be an acceptable fallback if access to native (bare metal or virtualized) resources is not otherwise possible.

Support engineers have also used virtualization for many years to make things easier: Nested virtualization with the Kernel-based Virtual Machine (KVM) can be used to run “guests on guests.” This can help to replicate OpenStack and OpenShift environments for testing.

The technology of today

As of Red Hat Enterprise Linux (RHEL) 8, 9 and 10, customers can use QEMU with KVM to run virtualized guests. This allows customers to run guest operating systems where the CPU instructions are directly executed on the CPUs of the host system. In these setups, additional hardware like Network Interface Cards (NIC) can be emulated.

On Fedora, emulation of other architectures is available via the normal repositories. For example, the aarch64 Fedora repos have qemu-system-x86_64, which allows the emulation of guests with the x86_64 architecture.
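The difference between the two modes shows up directly in how QEMU is started. The following is a minimal sketch, not the scripts used for this article: `qemu_command` is a hypothetical helper that picks the KVM accelerator when guest and host architectures match and falls back to TCG (QEMU's software emulation) otherwise. The machine types, the memory size and the omission of disk and boot options are assumptions made for illustration.

```python
def qemu_command(guest_arch, host_arch, vcpus=4, memory_mib=4096):
    """Build a QEMU argument list (hypothetical helper, disk/boot options omitted).

    Same architecture on guest and host: use KVM and pass the host CPU through.
    Different architectures: use TCG emulation with "-cpu max".
    """
    # Assumed machine types; other choices are possible.
    machine = {"x86_64": "q35", "aarch64": "virt"}[guest_arch]
    same_arch = guest_arch == host_arch
    accel = "kvm" if same_arch else "tcg"
    cpu = "host" if same_arch else "max"
    return [
        f"qemu-system-{guest_arch}",
        "-machine", machine,
        "-accel", accel,
        "-cpu", cpu,
        "-smp", str(vcpus),
        "-m", str(memory_mib),
    ]
```

For example, `qemu_command("x86_64", "aarch64")` yields an emulated x86_64 guest on an aarch64 host, while `qemu_command("aarch64", "aarch64")` yields a virtualized guest.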

Measurement setup

For measurements, you can use Fedora as host, as the QEMU emulation packages can conveniently be installed from the normal repositories. The pieces include:

- Performance Co-Pilot (PCP): PCP provides you with the power consumption metrics. These get stored in archive files, from where you can evaluate them

- Python 3 wrapper scripts: These provide the framework, allowing you to run one script to control power consumption monitoring and run the workloads in the virtualization/emulation guests

- Ansible playbooks: These are used to deploy the test scripts on the guest and install required packages

- ‘bzip2’: ‘bzcat’ is used to uncompress data and use that as a CPU-based workload

The 3 most interesting resources are CPU compute, I/O and network throughput. ‘bzip2’ was used to cover the CPU compute resource, which has the most influence on most workloads. In addition to performance, consumed power is also important.

Read about the basic power measurement setup in this article.

Let’s compare emulated CPU performance

Now, let’s focus on CPU compute performance. As an example of a practical workload, let’s use the extraction of a compressed file.

In a loop, you would extract the file httpd-2.4.57.tar.bz2, as many times as you can. With one loop, you would just utilize one CPU, so you’ll also test with multiple parallel loops:

A unified modeling language (UML) diagram showing the program workflow:
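The loop described above can be sketched in a few lines of Python. This is an illustration under assumptions, not the wrapper scripts used for the measurements: `bzcat_job` and `run_loops` are hypothetical names, the archive is expected in the current directory, and threads are used only to start the external `bzcat` processes in parallel.

```python
import subprocess
import threading
import time


def bzcat_job(archive="httpd-2.4.57.tar.bz2"):
    """Uncompress the archive once and discard the output."""
    subprocess.run(["bzcat", archive], stdout=subprocess.DEVNULL, check=True)


def run_loops(parallel, seconds, job=bzcat_job):
    """Run `parallel` loops for about `seconds`; return the total completed jobs.

    A job that starts before the deadline is allowed to finish and is counted.
    """
    deadline = time.monotonic() + seconds
    counts = [0] * parallel

    def loop(index):
        while time.monotonic() < deadline:
            job()
            counts[index] += 1

    threads = [threading.Thread(target=loop, args=(i,)) for i in range(parallel)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return sum(counts)
```

Calling `run_loops(4, 300)` would run 4 parallel loops for 5 minutes. Repeating the call with different loop counts, for example 1, 2, 4 and 8, gives one data point per count.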

After running these tests on emulated guests, you can visualize the results:

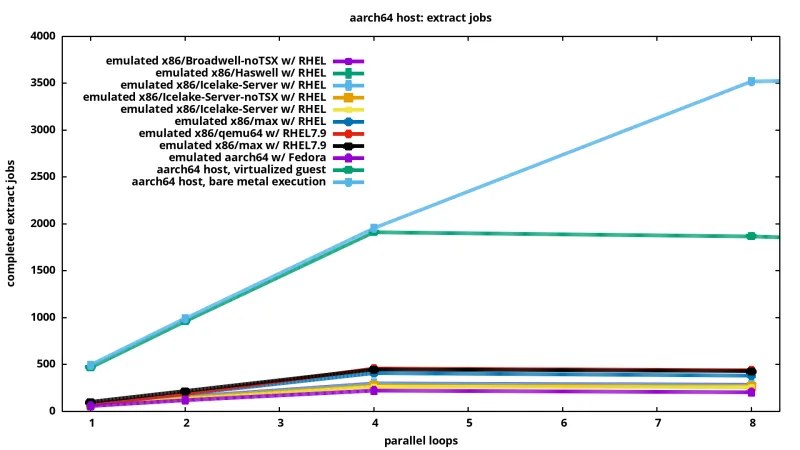

A graph showing the extract performance over various emulated systems

Most of these systems are emulated x86_64 systems, plus one emulated aarch64 system. All of the systems have 4 virtual CPUs and all are emulated on an aarch64 host system running Fedora. The y-scale shows how many times the file httpd-2.4.57.tar.bz2 was uncompressed in 5 minutes. For all of these systems, increasing the number of parallel extract loops increases the number of completed extract jobs, up to 4 parallel loops. When the loops are increased to 8, you no longer have 1 loop per CPU, and the number of overall completed jobs drops.

The top red and black lines show the results for emulation with “-cpu qemu64” and “-cpu max” running on RHEL 7.9. These 2 show the best performance of all emulated systems on the graph.

The y-scale is logarithmic, for better visibility. The other emulated architectures perform more or less equally well. These are emulating newer chipsets like Haswell, Icelake or Broadwell. These emulated CPUs cover the x86_64-v2 or -v3 ABI specification. RHEL 9 requires x86_64-v2 and RHEL 10 requires x86_64-v3. Optimizing newer RHEL for v2/v3 ABI allows Linux distributions to better utilize newer CPUs. When running newer RHEL versions on emulated CPUs, QEMU has to emulate these CPU functions, leading to a difference in performance.
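To see which level an emulated CPU model actually exposes, you can compare the CPU flags inside an x86_64 guest against the flag sets of the levels. The sketch below is an approximation under assumptions: the flag names follow the spelling Linux uses in /proc/cpuinfo (for example `abm` for LZCNT and `pni` for SSE3), the sets are not an authoritative copy of the specification, and `x86_64_level` and `read_cpu_flags` are hypothetical helper names.

```python
# Assumed flag sets, spelled as in /proc/cpuinfo on an x86_64 Linux system.
V2_FLAGS = {"cx16", "lahf_lm", "popcnt", "pni", "sse4_1", "sse4_2", "ssse3"}
V3_FLAGS = {"abm", "avx", "avx2", "bmi1", "bmi2", "f16c", "fma", "movbe", "xsave"}


def x86_64_level(flags):
    """Return 1, 2 or 3: the highest level whose flags are all present.

    Level 1 is treated as the baseline of any x86_64 CPU.
    """
    flags = set(flags)
    if not V2_FLAGS <= flags:
        return 1
    if not V3_FLAGS <= flags:
        return 2
    return 3


def read_cpu_flags(path="/proc/cpuinfo"):
    """Read the flags of the first CPU listed (x86_64 Linux guests only)."""
    with open(path) as cpuinfo:
        for line in cpuinfo:
            if line.startswith("flags"):
                return set(line.split(":", 1)[1].split())
    return set()
```

Inside a guest, `x86_64_level(read_cpu_flags())` then gives a rough indication of whether the chosen “-cpu” model is sufficient for a given RHEL version.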

When emulating the CPU, you can use the QEMU parameter “-cpu max” to enable all the features supported by the emulated CPU. With that, you’ll get the best compatibility (so you can run RHEL 7 as well as RHEL 10) and get the best performance of the emulated systems. Learn more about QEMU and CPU configuration.

The “-cpu max” is recommended for best compatibility for CPU flags and performance.

How about virtualization and bare metal?

The virtualization results show the following:

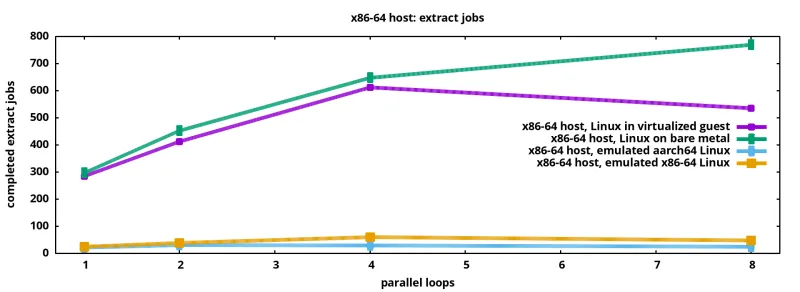

A graph showing completed extract jobs over emulated systems on an aarch64 host

Virtualization and bare-metal performance for this workload are quite comparable and much better than emulation: They complete 4x the extraction jobs of our fastest emulated guest. This illustrates why enterprises widely use virtualization and emulate guests only where absolutely required.

To the right on the graph, 8 threads show that the virtual guest (in green) is not increasing the total number of extractions further, with just 4 cores. The bare-metal system (light blue) has 10 cores, so it scales beyond 4 threads.

Using an x86_64 host system, you see the same pattern: best performance with bare metal, slightly worse performance with a virtualized x86_64 guest, and much lower performance with emulated guests of any architecture:

A graph showing completed extract jobs over emulated systems on an x86_64 host

How much energy is used per extract job?

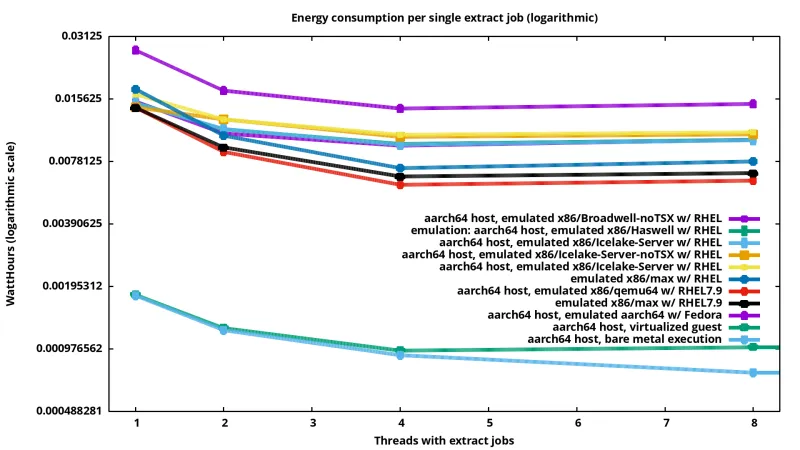

With the help of Performance Co-Pilot, you can measure the energy consumption of the whole system while running the jobs. If you divide the overall consumption through the number of completed jobs, you get the consumption per single extract job:

A graph showing the energy used for a single uncompressed operation

With virtualization, you can complete many more uncompress operations without consuming more energy, resulting in lower consumption per extract job.
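The per-job figure is simple arithmetic. As a minimal sketch, assume the power readings are available as a list of samples in watts taken at a fixed interval. The sample format and the helper name `energy_per_job` are assumptions for illustration, not the layout of the PCP archives.

```python
def energy_per_job(power_watts, interval_seconds, completed_jobs):
    """Return the energy in joules consumed per completed job.

    Total energy is approximated as the sum of the power samples
    multiplied by the sampling interval.
    """
    if completed_jobs <= 0:
        raise ValueError("completed_jobs must be positive")
    total_joules = sum(power_watts) * interval_seconds
    return total_joules / completed_jobs
```

With made-up numbers, three 1-second samples of 10 W during which 3 jobs complete give `energy_per_job([10.0, 10.0, 10.0], 1.0, 3)`, or 10 joules per job. A guest that completes more jobs at the same power draw ends up with a lower value.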

Final thoughts

There is a long history of computing and systems. Emulation of older systems continues to be an important topic and will be so in the future.

One further detail to consider is the correctness of emulated CPUs. The emulation code does not undergo any formal validation for compliance with the CPU architecture specifications, so users inevitably hit subtle bugs. This can be especially challenging in multi-vCPU usage, where the concurrent execution may not match well with physical CPU characteristics, thus masking problems or exposing bogus problems. For older CPUs, where the logic circuits are known, Field Programmable Gate Arrays (FPGAs) are sometimes used to reproduce the original CPU.

Another benefit of virtualization over emulation is security. QEMU does not promise any security boundary between host and guest when using emulation. Any bugs in features that are only relevant to emulation scenarios are not considered to qualify for issuance of CVEs.

We compared the performance of emulated and virtualized systems for a CPU-bound workload. Virtualized systems performed much better and consumed much less energy per operation. Still, there are use cases where emulation makes sense: when the performance impact does not matter, or when old hardware is no longer available. Red Hat OpenShift and Red Hat OpenStack environments can include hypervisors of multiple architectures, so each workload can be virtualized on the right type of system.


About the author

Christian Horn is a Senior Technical Account Manager at Red Hat. After working with customers and partners since 2011 at Red Hat Germany, he moved to Japan, focusing on mission critical environments. Virtualization, debugging, performance monitoring and tuning are among the returning topics of his daily work. He also enjoys diving into new technical topics, and sharing the findings via documentation, presentations or articles.
