Artificial intelligence (AI) applications are becoming increasingly embedded in our day-to-day lives, creating new opportunities and disrupting established industries. Predictive AI in the form of machine learning (ML) has been delivering growing benefits for at least two decades, particularly in areas like medicine and fraud detection. With generative AI (gen AI) now mainstream, the full breadth of this technology’s impact is hard to grasp. Behind the seemingly daily announcements of new breakthroughs is another force that has underpinned AI innovation and will continue to shape it: open source software.

Open source software used to be a niche concept, often associated with volunteers coding on weekends and distributing their work for free. Large enterprises were initially sceptical, but as the software world evolved, the communities behind Linux and the projects of the Apache Software Foundation proved that open collaboration could produce scalable, secure and cost-effective solutions that proprietary software models can struggle to match.

Red Hat has played a leading role in establishing the enterprise credentials of open source software, and today it is hard to find software solutions and applications that don’t rely on open source. Other major tech organizations like Google, IBM, Microsoft, Amazon and NVIDIA are now heavily involved in open source software projects, as are technology-dependent businesses like Spotify and Netflix. The defining technologies of recent decades, including the internet, mobile devices, cloud computing and AI, would not have been possible without open source software.

As research in AI progressed, we saw the same pattern with leading frameworks, platforms and tooling such as TensorFlow, PyTorch, Kubeflow and Jupyter Notebook being shared freely, with open source communities forming worldwide. The software infrastructure for model training and inference, whether in the cloud or on premises, is also mostly open source. The paradox is that open source software is so integral to the AI revolution that it is barely commented on.

The efficiency imperative: Addressing AI’s compute and power bottleneck

AI progress is at risk of being throttled by energy and hardware constraints, with leading cloud providers and AI labs reporting shortages of cutting-edge GPUs and specialized hardware. In addition to this, data center power consumption could soon be constrained by the capacity of the electricity grid.

Open source AI software infrastructure can help address these constraints in the following ways:

- Hardware flexibility and vendor neutrality: Open source AI frameworks offer broad compatibility and are generally vendor neutral, with communities supporting many different hardware architectures. They can run workloads across a range of processor types, reducing dependency on hard-to-source GPUs and other specialized hardware.

- Customisable AI scheduling for resource efficiency: Open source platforms and technologies like Kubernetes, Kubeflow and Apache Airflow offer organizations the ability to dynamically optimize AI workload scheduling around energy usage, resource availability and operational priorities.

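- Edge AI and federated learning: Open source edge computing frameworks, such as KubeEdge, process data closer to where it is generated and can reduce organizational dependency on centralized cloud resources. Federated learning takes this further by training models across distributed sites without moving the raw data to a central location.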
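To make the scheduling point in the list above more concrete, here is a minimal Python sketch of energy-aware placement. It does not use the Kubernetes, Kubeflow or Apache Airflow APIs; the `Job` fields, the hourly carbon-intensity forecast and the `schedule` function are hypothetical, chosen only to illustrate how deferrable work such as fine-tuning could be shifted to lower-carbon hours while latency-sensitive work runs immediately.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Job:
    # Hypothetical job description, not a Kubernetes or Airflow object.
    name: str
    gpus: int          # accelerators the job needs for one hour
    priority: int      # higher value = more important
    deferrable: bool   # batch jobs can wait; interactive inference cannot


def schedule(jobs: List[Job], carbon_forecast: List[float],
             gpus_per_hour: int) -> Dict[str, Optional[int]]:
    """Greedy energy-aware placement (illustrative only).

    carbon_forecast holds hypothetical grid carbon intensities (gCO2/kWh),
    one entry per upcoming hour, and is assumed to be non-empty.
    Non-deferrable jobs may only run in hour 0; deferrable jobs go to the
    lowest-carbon hour that still has spare GPU capacity.
    Returns a mapping of job name -> hour index, or None if unplaced.
    """
    free = [gpus_per_hour] * len(carbon_forecast)
    plan: Dict[str, Optional[int]] = {}
    # Urgent jobs first, then deferrable jobs from highest to lowest priority.
    ordered = sorted(jobs, key=lambda j: (j.deferrable, -j.priority))
    # Hours ranked from cleanest to dirtiest (stable sort keeps earlier hours on ties).
    cleanest_first = sorted(range(len(carbon_forecast)),
                            key=lambda h: carbon_forecast[h])
    for job in ordered:
        candidates = cleanest_first if job.deferrable else [0]
        plan[job.name] = None
        for hour in candidates:
            if free[hour] >= job.gpus:
                free[hour] -= job.gpus
                plan[job.name] = hour
                break
    return plan


# Hypothetical workload and forecast for the next four hours.
jobs = [
    Job("chat-inference", gpus=2, priority=10, deferrable=False),
    Job("batch-fine-tune", gpus=4, priority=5, deferrable=True),
    Job("nightly-eval", gpus=4, priority=1, deferrable=True),
]
forecast = [420, 380, 150, 160]
print(schedule(jobs, forecast, gpus_per_hour=6))
# prints {'chat-inference': 0, 'batch-fine-tune': 2, 'nightly-eval': 3}
```

The greedy ordering is a deliberate simplification: latency-sensitive work stays on its original timeline, and only jobs with genuine flexibility are moved to cleaner hours, with higher-priority batch work getting first pick.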

Enterprises must adopt more energy- and hardware-efficient AI architectures to mitigate the compute and power shortages that are already occurring and are set to worsen. An open source software infrastructure can provide the technical foundation to achieve that.

The adoption challenge: Getting started with sustainable, scalable AI

While most organizations recognize the potential AI has to deliver innovation and competitive advantage, committing to a clear initial use case can be a challenge, especially when data science and machine learning expertise is in short supply.

Following a successful pilot, governance, security and ongoing optimization will be required to expand AI initiatives across the enterprise. A comprehensive AI platform can provide these capabilities. Early low-cost experimentation can spark breakthroughs, but robust platforms and best practices become critical once teams move from proofs of concept to full-scale deployments and into production. The right platform will allow organizations to develop and integrate the new skills and toolsets required, while automating repetitive tasks and providing on-demand resource scaling.

A unified platform can let organizations focus on innovation while providing the reliability, improved security posture and manageability required in a complex IT environment.

Bringing open source AI to the enterprise

Red Hat is well known for its core products—Red Hat Enterprise Linux (RHEL), Red Hat OpenShift and Red Hat Ansible Automation Platform. Red Hat has extended its focus on building leading open source platforms into the AI space with Red Hat AI, which includes the following products:

Red Hat Enterprise Linux AI (RHEL AI) provides enterprises with a more accessible and more efficient open source foundation for gen AI adoption. It simplifies the deployment and management of AI models and offers out-of-the-box support for IBM’s Granite foundation models, with an emphasis on transparency, improved security and flexibility.

RHEL AI enables organizations to fine-tune foundation models with their own data using the InstructLab framework, offering a low-barrier entry point for enterprises getting started with gen AI, with a pathway to scale into more complex AI architectures like Red Hat OpenShift AI when needed. RHEL AI is fully supported by Red Hat and portable across hybrid cloud environments.

The image below outlines the workflow for fine-tuning a model with InstructLab in RHEL AI.

Red Hat OpenShift AI is an AI and machine learning (ML) platform built to give enterprises the capability to train and serve AI models at scale. OpenShift AI can manage both gen AI and predictive AI across hybrid cloud environments, integrating with the critical ecosystem partners that enterprises rely on.

Data scientists gain access to a scalable environment for rapid experimentation and streamlined model deployment. With support for multiple AI frameworks and automation of repetitive tasks, they can better focus on innovation and model optimization, accelerating time-to-value.

Application developers and DevOps teams benefit from integration with their CI/CD pipelines and robust automation, with centralized management across hybrid environments. These features enable faster iterations, more reliable releases and simplified scaling, with an open source foundation providing compatibility with existing toolsets.

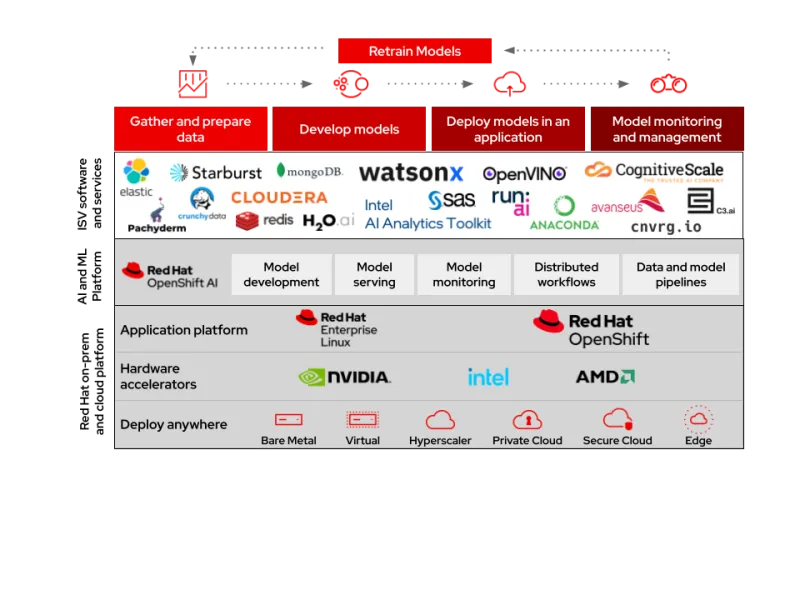

OpenShift AI is a platform that simplifies collaboration between teams, helping deliver AI-powered applications that drive digital transformation and competitive advantage. The image below shows the overarching functionality of OpenShift AI and some of the components and ecosystem available to it.

The future of AI requires efficient, open, scalable infrastructure

Open source software created the foundation for AI’s rapid evolution. As gen AI moves from hype to real-world adoption, collaboration and transparency are more important than ever.

Large organizations must be ready to adopt practices and technologies that facilitate the governance and management of AI deployments at scale. Red Hat has proven expertise in delivering enterprise-ready open source solutions, and RHEL AI and OpenShift AI exemplify how we are shaping the AI landscape with scalable and flexible platforms.

Whether you are experimenting with training your own models, scaling an enterprise AI platform or simply want to learn more about RHEL AI and OpenShift AI, Red Hat can help your organization unlock AI’s full potential in a responsible, cost-effective way.

About the author

Waqaas is an Account Technical Lead at Red Hat, where he has supported some of the company’s largest customers since 2015. His career spans global financial services, energy, and the UK public sector, with expertise in application development, business automation, container platforms, and AI/ML.

He thrives at the intersection of innovation and practicality, bringing strategic vision and technical depth to complex transformation challenges. A lifelong learner and recent MSc graduate in Management and Data Analytics, he brings academic curiosity to his work—whether exploring cloud-native modernization or the future of open source.

A committed mentor and advocate for collaborative growth, Waqaas believes that change is the only constant—and that embracing it with purpose is what drives meaningful progress.
