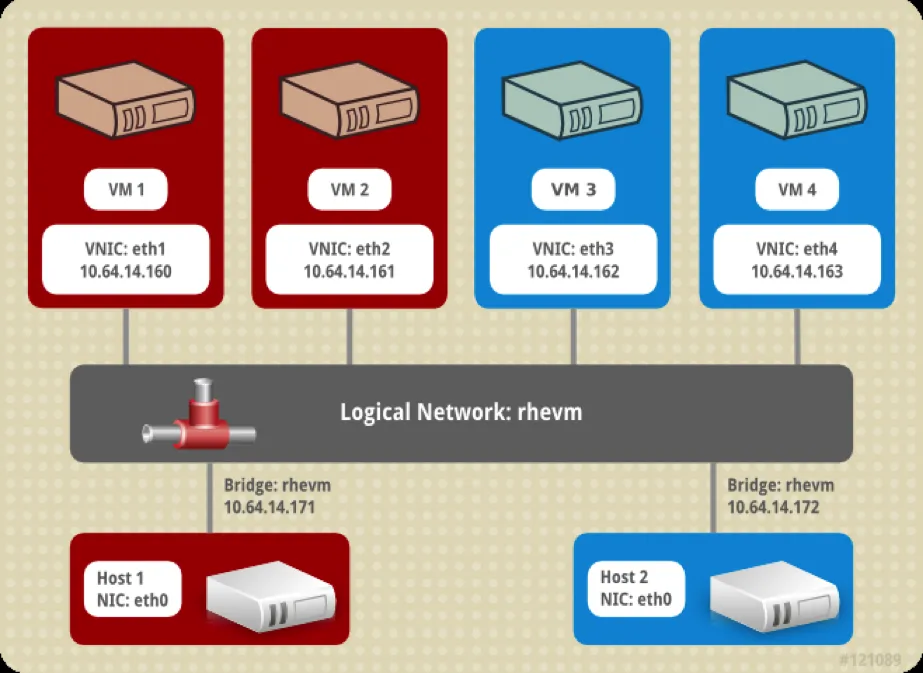

Our previous blog post explored the basics of SR-IOV. This write-up highlights how SR-IOV works in Red Hat Enterprise Virtualization, which added SR-IOV support in version 3.6 to improve network throughput for virtual machines. The easiest way to explain it is to start with an example of a logical network in Red Hat Enterprise Virtualization Manager (RHEV-M).

In Image I, we can see two hosts, each running two VMs, for a total of four VMs. Every VM has a vNic connected to the ‘rhevm’ logical network. Being connected to the same logical network ensures that the vNics have connectivity to each other and that traffic on the network shares common properties such as VLAN ID and MTU.

In Image II below, which shows the network setup at the host level, there are 6 VMs on the host. Each VM has a vNic that is connected to a virtual bridge on the host, and the bridge is connected to a physical NIC. Each bridge represents a ‘logical network’. The host NIC has logical network ‘A’ configured on top of it, which provides connectivity to the NICs of other hosts that carry logical network ‘A’. The configuration properties of the bridges representing network ‘A’ (such as VLAN ID and MTU) are the same.

VM Interface (vNic)

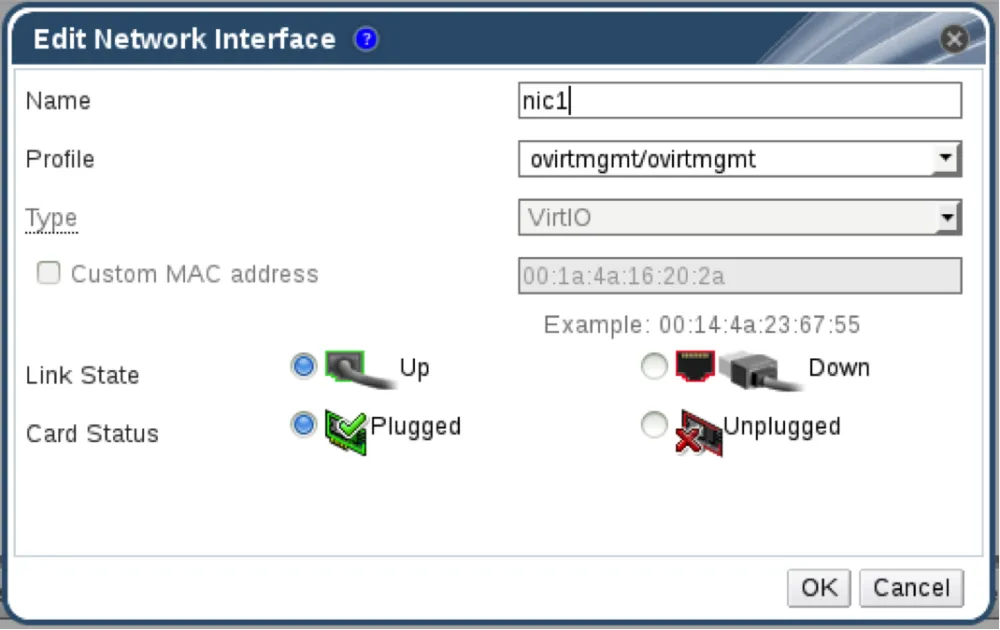

To simplify operations, RHEV-M provides a VM interface configuration dialog, as seen in Image III. In this dialog, the network is selected through the ‘profile’ property. Each entry in the ‘profile’ list box shows the profile name first and the network name second. We specify a profile rather than a network because we may want to connect different vNics to the same logical network with different vNic-related properties (such as QoS, port mirroring, or passthrough). Since each profile belongs to one specific network, selecting the profile also selects the logical network. In this dialog we also specify the MAC address we want the vNic to have.

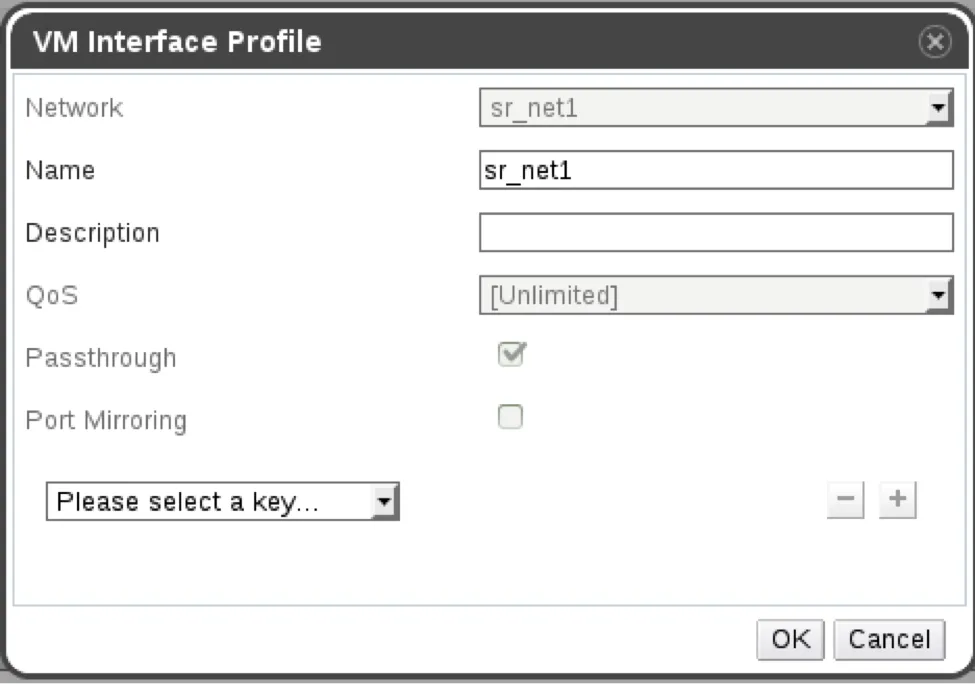

VM Interface Profile (vNic profile)

As seen in Image IV below, a vNic profile is a set of properties that are applied to any vNic the profile is attached to. A profile belongs to a single network, and each network can have more than one profile. If we want to attach different vNics to the same ‘logical network’ with different vNic-related properties, we can use different profiles. One of the properties on a profile is ‘Passthrough’, which is what we use to implement SR-IOV: attaching a profile that is marked as ‘Passthrough’ to a vNic means that we want the vNic to be directly attached to a Virtual Function (VF).
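The relationship between networks, profiles, and vNics can be sketched as a small data model. This is only an illustration in Python, not RHEV-M's actual schema: the class names, field names, profile names, and MAC addresses below are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogicalNetwork:
    name: str
    vlan_id: Optional[int]  # None means untagged traffic
    mtu: int


@dataclass(frozen=True)
class VnicProfile:
    name: str
    network: LogicalNetwork  # a profile belongs to exactly one network
    passthrough: bool = False
    port_mirroring: bool = False


@dataclass
class Vnic:
    name: str
    mac: str
    profile: VnicProfile

    @property
    def network(self) -> LogicalNetwork:
        # Choosing a profile implicitly chooses its logical network.
        return self.profile.network

    def attachment(self) -> str:
        # Passthrough vNics get a VF; all others plug into the bridge.
        return "vf" if self.profile.passthrough else "bridge"


# One network, two profiles with different vNic-related properties.
net_a = LogicalNetwork(name="A", vlan_id=100, mtu=1500)
bridged = VnicProfile(name="A-default", network=net_a)
direct = VnicProfile(name="A-passthrough", network=net_a, passthrough=True)

nic1 = Vnic("nic1", "00:1a:4a:16:01:51", bridged)
nic2 = Vnic("nic2", "00:1a:4a:16:01:52", direct)
```

Both vNics resolve to the same `LogicalNetwork` object, so they share its VLAN ID and MTU even though one is bridged and the other is attached to a VF.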

Passthrough vNic

We can have two different vNics attached to the same ‘logical network’, one with a ‘Passthrough profile’ and the other with a ‘non-Passthrough profile’. In this scenario, the vNic with the Passthrough profile is directly connected to a VF and the vNic with the non-Passthrough profile is connected to a virtual bridge. Because the two profiles belong to the same logical network, both vNics should have connectivity to each other and to the other vNics connected to that network, and both should send and receive traffic with the same properties (such as VLAN ID and MTU).

If the ‘Passthrough profile’ is selected as the vNic’s profile, the type of the vNic should be ‘PCI Passthrough’, as seen in Image V. A ‘Passthrough vNic’ is not connected to a virtual bridge. Instead, a VF is attached to the VM and serves as the VM’s vNic. The MAC address specified on the vNic is applied to the VF, as is the ‘vlan tag’ of the profile’s network.
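To make the MAC and VLAN handling concrete, here is a sketch of how such a device can be described at the hypervisor level. It assumes the host uses libvirt, whose domain XML has an `<interface type='hostdev'>` element for assigning a VF with a MAC address and VLAN tag. Whether RHEV emits exactly this XML is an assumption, and the helper name, MAC address, and PCI address are made up for illustration.

```python
import xml.etree.ElementTree as ET


def passthrough_interface_xml(mac, vf_pci_address, vlan_id=None):
    """Build a libvirt <interface type='hostdev'> element for one VF.

    vf_pci_address is a string such as "0000:05:10.1"
    (domain:bus:slot.function), as shown by lspci -D.
    """
    domain, bus, slot_func = vf_pci_address.split(":")
    slot, function = slot_func.split(".")

    iface = ET.Element("interface", {"type": "hostdev", "managed": "yes"})
    # The MAC address from the vNic definition is set on the VF.
    ET.SubElement(iface, "mac", {"address": mac})
    source = ET.SubElement(iface, "source")
    ET.SubElement(source, "address", {
        "type": "pci",
        "domain": "0x" + domain,
        "bus": "0x" + bus,
        "slot": "0x" + slot,
        "function": "0x" + function,
    })
    # The VLAN tag of the logical network is set on the VF as well.
    if vlan_id is not None:
        vlan = ET.SubElement(iface, "vlan")
        ET.SubElement(vlan, "tag", {"id": str(vlan_id)})
    return ET.tostring(iface, encoding="unicode")


xml_text = passthrough_interface_xml(
    "00:1a:4a:16:01:52", "0000:05:10.1", vlan_id=100
)
```

The resulting element contains no bridge reference at all: the guest sees the VF as its network device, and the MAC and VLAN settings travel with the device definition.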

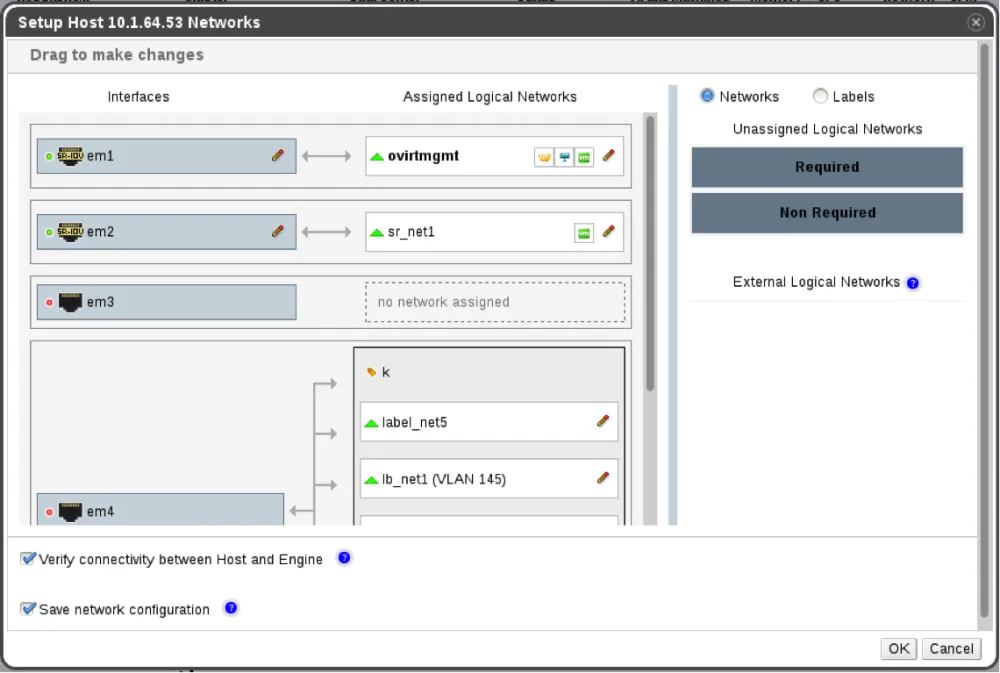

Setup Networks Dialog

The Physical Functions (PFs) are marked with an SR-IOV icon. Clicking the edit icon (pencil) of a PF opens the ‘Host nic VFs configuration’ dialog. The VFs are marked with a vFunc icon, and each VF shows the name of its PF in its tooltip.

Network Whitelist

The network whitelist is a list of all the logical networks the PF has connectivity to. Instead of specifying a network, a label that represents a group of networks can be used. When a VM is started, the oVirt Scheduler finds the best host to run it on. If the VM has a vNic marked as ‘Passthrough’, the Scheduler looks for a host that has a PF with connectivity to the vNic’s selected logical network and an available VF. The scheduler uses the network whitelist to find out which networks each PF has connectivity to.

The user can also specify that a PF has connectivity to ‘All networks’. A VF from such a PF can be used regardless of the network that the vNic uses. It’s important to understand the difference between the networks in this whitelist and the networks that are attached to the physical NICs via the Setup Networks dialog.

When a network is attached to a NIC via the Setup Networks dialog, actual changes are made on the host (a virtual bridge is created and attached to the NIC, and ifcfg files are changed). When a network is added to a PF’s whitelist, no changes are made on the host. The list is stored only in the database, to help the scheduler choose a host for running the VM.
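The way the scheduler uses the whitelist can be sketched as a simple host filter. This is a minimal illustration under stated assumptions, not the oVirt Scheduler's code: the class and function names are hypothetical, labels are assumed to be already expanded into network names, and a real scheduler also weighs many other criteria.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class PhysicalFunction:
    name: str
    free_vfs: int
    allowed_networks: Set[str] = field(default_factory=set)  # the whitelist
    all_networks: bool = False  # the 'All networks' option

    def can_serve(self, network: str) -> bool:
        has_connectivity = self.all_networks or network in self.allowed_networks
        return has_connectivity and self.free_vfs > 0


@dataclass
class Host:
    name: str
    pfs: List[PhysicalFunction]


def hosts_for_passthrough_vnic(hosts, network):
    """Keep hosts with a PF that is whitelisted for `network` and has a free VF."""
    return [h for h in hosts if any(pf.can_serve(network) for pf in h.pfs)]


hosts = [
    Host("host1", [PhysicalFunction("eth0", free_vfs=2, allowed_networks={"A"})]),
    Host("host2", [PhysicalFunction("eth0", free_vfs=0, allowed_networks={"A", "B"})]),
    Host("host3", [PhysicalFunction("eth1", free_vfs=4, all_networks=True)]),
]
```

In this example, `host2` is never a candidate because it has no free VF, and `host3` qualifies for any network because its PF is marked as connected to all networks. Note that the filter only reads stored data; nothing on the hosts is touched.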

Number of VFs

The ‘number of VFs’ setting controls how many VFs are created on the selected PF. Changing the number means removing all the existing VFs on this PF and creating new ones. Therefore, if the PF has a VF that is in use (attached to a VM or with a virtual bridge attached to it), RHEV blocks changing the number of VFs on this PF. The theoretical maximum number of VFs that the vendor supports on the selected PF is shown under the ‘number of VFs’ text box, as seen in Image VII.
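On a Linux host, the VF count and its maximum are exposed through the `sriov_numvfs` and `sriov_totalvfs` files under the PF's sysfs device directory. The following sketch shows the remove-then-create sequence described above. The function name and the `sysfs_root` parameter are hypothetical, and the sketch does not check whether a VF is in use, which RHEV does before allowing the change.

```python
from pathlib import Path


def set_num_vfs(pf_name, num_vfs, sysfs_root="/sys/class/net"):
    """Set the number of VFs on a PF through sysfs (needs root on a real host)."""
    device = Path(sysfs_root) / pf_name / "device"

    # sriov_totalvfs holds the maximum the device reports.
    total = int((device / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{pf_name} supports 0..{total} VFs, got {num_vfs}")

    numvfs = device / "sriov_numvfs"
    current = int(numvfs.read_text())
    if current == num_vfs:
        return
    if current != 0:
        # The kernel refuses to go from one non-zero count to another,
        # so the existing VFs are removed first.
        numvfs.write_text("0")
    if num_vfs != 0:
        numvfs.write_text(str(num_vfs))
```

A call such as `set_num_vfs("eth0", 4)` would remove any existing VFs on `eth0` and create four new ones, provided the device supports at least four.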

With the pieces above in place, Red Hat Enterprise Virtualization provides the following SR-IOV capabilities:

- Managing the PFs’ network connectivity whitelist - In one step, the administrator can configure the list of logical networks each PF has connectivity to. After that, a user who wants to run a VM with a ‘Passthrough vNic’ only has to mark the vNic as ‘Passthrough’. The rest of the process, such as finding a suitable host and VF, is done by the scheduler.

- Scheduling without needing to pin VMs to a host - The user doesn’t have to pin VMs to a specific VF on a specific host; the scheduler does all the work.

- Setting VLAN and MAC address on a VF - The ‘VLAN tag’ is one of the properties that the traffic of a logical network has to have. When we attach a VF to a VM, we apply to the VF the ‘VLAN tag’ of the logical network it should be connected to. We also set on the VF the MAC address that was specified in the vNic definition.

- Changing and persisting the number of VFs (sysfs) via UI/REST - We use sysfs to modify the number of VFs on a PF, but sysfs changes are not persistent across reboots: once the host is rebooted, the number of VFs is lost. In RHEV, we implemented persistence of this number, so if the user specifies the number of VFs via RHEV-M, it survives a reboot of the host.

- Mixed mode - A PF can have a bridge on top of it, VFs that are directly attached to VMs, and VFs that have a bridge on top of them, all at the same time. Any mixture is accepted, which provides flexibility.

- Specifying boot order on VFs - We can enable booting a VM with Passthrough vNics from PXE.
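The persistence of the VF count mentioned in the list above can be illustrated with a small sketch: save the desired count per PF, then write it back to sysfs at boot. This is not how RHEV stores the value. The JSON file, the function names, and the `sysfs_root` parameter are hypothetical, and the VF count is assumed to be back at 0 after a reboot.

```python
import json
from pathlib import Path


def save_vf_counts(counts, store):
    """Record the desired VF count per PF, e.g. {"eth0": 4}."""
    Path(store).write_text(json.dumps(counts, indent=2, sort_keys=True))


def restore_vf_counts(store, sysfs_root="/sys/class/net"):
    """Re-apply saved VF counts; meant to run once early at boot, as root."""
    store = Path(store)
    if not store.exists():
        return {}
    counts = json.loads(store.read_text())
    for pf_name, num_vfs in counts.items():
        numvfs = Path(sysfs_root) / pf_name / "device" / "sriov_numvfs"
        # Assumes the count is 0 after a reboot, so one write is enough.
        numvfs.write_text(str(num_vfs))
    return counts
```

The design point is that sysfs only reflects the current state of the device, so the desired state has to live somewhere that survives a reboot and be re-applied before VMs with Passthrough vNics are started.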

Now that we have explored how SR-IOV works, download Red Hat Enterprise Virtualization 3.6 today to take full advantage of the SR-IOV features and benefits described above.
