What is retrieval-augmented generation?

Retrieval-augmented generation (RAG) is a technique that improves the quality and relevance of answers from a generative AI application. It works by linking the pre-trained knowledge of a large language model (LLM) to external resources. These external resources are segmented, indexed in a vector database, and then used as reference material that the LLM draws on to deliver more accurate answers.

RAG is useful because it directs the LLM to retrieve specific, up-to-date information from your chosen source (or sources) of truth. These sources can include data repositories, collections of text, and pre-existing documentation.

RAG can save money by providing a custom experience without the expense of model training and fine-tuning. It can also save resources by sending only the most relevant information (rather than lengthy documents) when querying an LLM.

RAG vs. regular LLM outputs

LLMs use machine learning and natural language processing (NLP) techniques to understand and generate human language for AI inference. AI inference is the operational phase of AI, in which the model applies what it learned during training to real-world situations.

LLMs can be incredibly valuable for communication and data processing, but they have disadvantages too:

- LLMs are trained with generally available data but might not include the specific information you want them to reference, such as an internal data set from your organization.

- LLMs have a knowledge cut-off date: the data they were trained on is fixed at a point in time and isn't continuously updated. As a result, their knowledge can become outdated and no longer relevant.

- LLMs are eager to please, which means they sometimes confidently present false or outdated information. This kind of plausible-sounding but incorrect output is known as a “hallucination.”

Implementing a RAG architecture in an LLM-based question-answering system provides a line of communication between the LLM and your chosen additional knowledge sources. The LLM can then cross-reference and supplement its internal knowledge, providing a more reliable and accurate output for the user making a query.

What are the benefits of RAG?

The retrieval mechanisms built into a RAG architecture allow it to tap into additional data sources beyond an LLM’s general training. Grounding an LLM on a set of external, verifiable facts via RAG supports several beneficial goals:

Accuracy

RAG provides an LLM with sources it can cite, so users can verify the claims in its answers. You can also design a RAG architecture to respond with “I don’t know” if the question is outside the scope of its knowledge. Overall, RAG reduces the chances of an LLM sharing incorrect or misleading information and may increase user trust.
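One common way to implement the “I don’t know” behavior is a similarity cut-off: if no retrieved chunk is close enough to the query, the system declines instead of letting the LLM guess. The Python sketch below is a minimal illustration under stated assumptions: the 2-D vectors are toy values standing in for a real embedding model, and `MIN_SIMILARITY`, `select_grounding`, and `respond` are hypothetical names, with the threshold value chosen only for the example.

```python
import math

# Hypothetical cut-off; in practice it depends on the embedding model and is tuned on real queries.
MIN_SIMILARITY = 0.75


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_grounding(query_vec, chunks, min_similarity=MIN_SIMILARITY):
    """Return (text, source) pairs scoring at or above the threshold, best first."""
    scored = [(cosine_similarity(query_vec, vec), text, source) for text, vec, source in chunks]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(text, source) for score, text, source in scored if score >= min_similarity]


def respond(query_vec, chunks):
    grounding = select_grounding(query_vec, chunks)
    if not grounding:
        # Nothing relevant was retrieved, so decline rather than let the LLM guess.
        return "I don't know."
    # A real system would send the texts to the LLM; here we only report the citations.
    sources = [source for _, source in grounding]
    return "Answer grounded in: " + ", ".join(sources)


# Toy chunks: (text, vector, source document)
chunks = [
    ("Vacation policy section", [1.0, 0.0], "handbook.pdf"),
    ("Cafeteria hours section", [0.0, 1.0], "facilities.md"),
]
print(respond([1.0, 0.1], chunks))   # Answer grounded in: handbook.pdf
print(respond([-1.0, 0.0], chunks))  # I don't know.
```

Returning the sources alongside the text is what lets the final answer carry citations that users can check.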

Cost effectiveness

Retraining and fine-tuning LLMs is costly and time-consuming, as is creating a foundation model from scratch with domain-specific information (for example, to build a chatbot). With RAG, you can introduce new data to an LLM, or swap out and update its sources of information, simply by uploading a document or file.

RAG can also reduce inference costs. LLM queries are expensive—placing demands on your own hardware if you run a local model, or running up a metered bill if you use an external service through an application programming interface (API). Rather than sending an entire reference document to an LLM at once, RAG can send only the most relevant chunks of the reference material, thereby reducing the size of queries and improving efficiency.
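A back-of-the-envelope comparison shows why sending only the relevant chunks matters. This Python sketch assumes a hypothetical price per 1,000 tokens and approximates tokens by whitespace-separated words; real tokenizers count differently, and real prices vary by provider and model.

```python
# Hypothetical price, for illustration only; check your provider's actual pricing.
PRICE_PER_1K_TOKENS = 0.01


def estimate_tokens(text: str) -> int:
    # Rough proxy: real tokenizers split text into subwords, so true counts differ.
    return len(text.split())


def prompt_cost(texts: list[str], price_per_1k: float = PRICE_PER_1K_TOKENS) -> tuple[int, float]:
    """Return (approximate token count, approximate cost) for sending these texts."""
    tokens = sum(estimate_tokens(t) for t in texts)
    return tokens, tokens / 1000 * price_per_1k


full_document = "word " * 30_000   # stand-in for a long reference manual
top_chunks = ["word " * 200] * 3   # stand-in for 3 retrieved chunks

full_tokens, full_cost = prompt_cost([full_document])
rag_tokens, rag_cost = prompt_cost(top_chunks)
print(f"full document: ~{full_tokens} tokens, ~${full_cost:.2f} per query")
print(f"top 3 chunks:  ~{rag_tokens} tokens, ~${rag_cost:.3f} per query")
```

Because the saving applies to every query, it grows with traffic; the same reasoning applies to GPU time when you run a local model.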

Developer control

Compared to traditional fine-tuning methods, RAG provides a more accessible and straightforward way to get feedback, troubleshoot, and fix applications. For developers, the biggest benefit of RAG architecture is that it lets them take advantage of a stream of domain-specific and up-to-date information.

Data sovereignty and privacy

Using confidential information to fine-tune an LLM has historically been risky, because LLMs can reveal information from their training data. RAG addresses these privacy concerns by allowing sensitive data to remain on premises while still being used to inform a local LLM or a trusted external LLM. A RAG architecture can also be set up to restrict retrieval of sensitive information by authorization level, so that each user can access only the information their security clearance permits.
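The authorization-level restriction described above can be sketched as a filter applied to candidate chunks before any similarity ranking. This is a minimal Python illustration, assuming each chunk was labeled with a classification at ingestion time; the `CLEARANCE_LEVELS` ladder and the `authorized_chunks` function are hypothetical, not part of any specific product.

```python
from dataclasses import dataclass

# Hypothetical clearance ladder; a real deployment would derive this from its identity and access system.
CLEARANCE_LEVELS = {"public": 0, "internal": 1, "confidential": 2}


@dataclass
class Chunk:
    text: str
    classification: str  # label attached to the chunk when it was ingested


def authorized_chunks(chunks: list[Chunk], user_clearance: str) -> list[Chunk]:
    """Keep only chunks the user is cleared to see.

    Filtering happens before similarity ranking, so restricted text can never
    reach the prompt, no matter how relevant it is to the query.
    """
    user_level = CLEARANCE_LEVELS[user_clearance]
    return [c for c in chunks if CLEARANCE_LEVELS[c.classification] <= user_level]


corpus = [
    Chunk("Office locations", "public"),
    Chunk("Quarterly roadmap", "internal"),
    Chunk("Pending acquisition details", "confidential"),
]
print([c.text for c in authorized_chunks(corpus, "internal")])
```

Filtering first, rather than removing restricted results after ranking, keeps the rule simple to audit.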

How does RAG work?

RAG architectures work by retrieving data from an external source, adding it to the LLM’s context, and generating an answer based on the combined sources. This process includes 3 main stages: data prep, retrieval, and generation.

Step 1: Data prep (for retrieval)

Source and load documents: Identify and obtain the source documents you want to share with the LLM. These are often text files, database tables, or PDFs. Regardless of the source format, each document needs to be converted to plain text before it can be embedded into the vector database. This preparation follows the ETL (extract, transform, and load) pattern, which ensures that raw data is cleaned and organized in a way that prepares it for storage, analysis, and machine learning.
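The extract, transform, and load steps can be sketched with the Python standard library alone. This is a hypothetical minimal version: it handles only `.html`/`.htm` files (markup stripped with `html.parser`) and treats everything else as plain text. PDFs and database tables would need a third-party parser or a database driver, which is not shown. The function names `extract`, `transform`, and `load` are illustrative.

```python
import re
import tempfile
from html.parser import HTMLParser
from pathlib import Path


class _TextExtractor(HTMLParser):
    """Collects the text content of an HTML page (script/style contents are not skipped here)."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def extract(path: Path) -> str:
    """Extract: read raw text, stripping markup from HTML files."""
    raw = path.read_text(encoding="utf-8")
    if path.suffix.lower() in {".html", ".htm"}:
        parser = _TextExtractor()
        parser.feed(raw)
        parser.close()
        return " ".join(parser.parts)
    return raw  # .txt and .md files are treated as plain text


def transform(text: str) -> str:
    """Transform: collapse runs of whitespace so chunking sees clean text."""
    return re.sub(r"\s+", " ", text).strip()


def load(paths: list[Path]) -> list[dict]:
    """Load: produce one record per document, ready for chunking and embedding."""
    return [{"source": p.name, "text": transform(extract(p))} for p in paths]


with tempfile.TemporaryDirectory() as tmp:
    page = Path(tmp, "guide.html")
    page.write_text("<html><body><h1>Setup</h1><p>Install   the agent.</p></body></html>", encoding="utf-8")
    notes = Path(tmp, "notes.txt")
    notes.write_text("Line one\n\nLine two", encoding="utf-8")
    records = load([page, notes])

print(records)
```

Keeping the source file name with each record makes it possible to cite the original document later.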

Transform: “Text splitting” or “chunking” prepares the documents for retrieval. This means parsing the converted documents and dividing them into related “chunks” based on distinct characteristics. For example, documents formatted by paragraph may be easier for the model to search and retrieve than documents structured with tables and figures.

Chunking can be based on factors such as semantics, sentences, tokens, formatting, HTML characters, or code type. Many open source frameworks can assist with the process of ingesting documents, including LlamaIndex and LangChain.
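Token-based chunking with overlap is one of the simplest of these strategies. The Python sketch below uses whitespace-separated words as a stand-in for tokens, and the `chunk_size` and `overlap` defaults are illustrative, not recommendations. Frameworks such as LangChain and LlamaIndex provide ready-made splitters for the semantic, sentence, and format-aware strategies mentioned above.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(sample, chunk_size=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```

The overlap helps keep context that straddles a chunk boundary available in at least one of the neighboring chunks.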

Embed: Embeddings use a specialized machine learning model (a vector-embeddings model) to convert data into numerical vectors, so you can apply mathematical operations to assess similarities and differences between pieces of data. Using embedding, you can convert text (or images) into a vector that captures the core meaning of the content while discarding irrelevant details. The embedding process assigns each chunk of data a vector of numbers (such as [1.2, -0.9, 0.3]) and indexes it within a larger system called a vector database.

Within a vector database, these vectors help the RAG architecture identify associations between chunks of content and organize that data to optimize retrieval. This indexing aims to structure the vectors so that similar concepts are stored at nearby coordinates. For example, “coffee” and “tea” would be positioned close together. “Hot beverage” would be close, too. Unrelated concepts like “cell phones” and “television” would be positioned further away. The distance between 2 vector points helps the retrieval system decide which information to retrieve and include in the prompt for a user query.
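Cosine similarity is one common way to measure that closeness; many vector databases also offer dot product or Euclidean distance. The Python sketch below uses hand-picked 3-D vectors that are assumptions made up for illustration, not output from a real embedding model, to reproduce the coffee and tea example.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means same direction, 0.0 unrelated (orthogonal), -1.0 opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hand-picked 3-D vectors for illustration only; real embedding models learn
# vectors with hundreds or thousands of dimensions.
vectors = {
    "coffee": [0.9, 0.8, 0.1],
    "tea": [0.8, 0.9, 0.1],
    "hot beverage": [0.85, 0.85, 0.2],
    "cell phones": [0.1, 0.1, 0.9],
    "television": [0.2, 0.05, 0.95],
}

query = vectors["coffee"]
ranked = sorted(
    ((name, cosine_similarity(query, vec)) for name, vec in vectors.items() if name != "coffee"),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, score in ranked:
    print(f"{name:13} {score:.3f}")
```

“Tea” and “hot beverage” score close to 1.0 against “coffee,” while the electronics terms score far lower, which is the ordering a retrieval step relies on.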

Store: The embeddings, along with the original text chunks and any metadata, are stored in a central repository (the vector database), which can combine data from multiple external sources.
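To make the store step concrete, here is a toy in-memory stand-in for a vector database, written in Python. It is a hypothetical sketch, not the API of any real product; a production vector database adds persistence, approximate-nearest-neighbor indexing, and access control. The point is what each record holds: the vector, the original text, and metadata such as the source document.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    id: str
    vector: list[float]
    text: str  # the original chunk, returned to the LLM at query time
    metadata: dict = field(default_factory=dict)  # e.g. source file, date, access label


class InMemoryVectorStore:
    """Hypothetical toy store; keeps every record in a Python dict."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self._records: dict[str, Record] = {}

    def upsert(self, record: Record) -> None:
        if len(record.vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(record.vector)}")
        self._records[record.id] = record  # the same id replaces the older version

    def delete(self, record_id: str) -> None:
        self._records.pop(record_id, None)

    def records(self) -> list[Record]:
        return list(self._records.values())


store = InMemoryVectorStore(dim=3)
store.upsert(Record("manual-p1", [0.1, 0.7, 0.2], "Installing the agent...", {"source": "manual.pdf"}))
store.upsert(Record("faq-3", [0.6, 0.1, 0.3], "Resetting a password...", {"source": "faq.md"}))
store.upsert(Record("faq-3", [0.5, 0.2, 0.3], "Resetting a password (v2)...", {"source": "faq.md"}))
print(len(store.records()))  # 2: the second faq-3 replaced the first
```

Upserting by id is what lets updated documents overwrite their stale chunks instead of accumulating duplicates.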

Step 2: Retrieval

Once the data is cataloged in the vector database, algorithms search for and retrieve snippets of information relevant to the user’s query. Frameworks like LangChain support many different retrieval algorithms, including retrieval based on similarities in data such as semantics, metadata, and parent documents.
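A simple retriever combines the two ideas above: rank by semantic similarity, optionally restricted by metadata. The Python sketch below is a hypothetical illustration, not LangChain’s API. It performs an exhaustive scan, whereas vector databases typically use approximate indexes to scale to large collections. The toy vectors and the `retrieve` function are assumptions.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, records, k=3, metadata_filter=None):
    """Return the k records most similar to the query, optionally restricted by metadata.

    records: iterable of dicts with "text", "vector", and "metadata" keys.
    This is an exhaustive scan; vector databases use approximate indexes to scale.
    """
    metadata_filter = metadata_filter or {}
    candidates = [
        r for r in records
        if all(r["metadata"].get(key) == value for key, value in metadata_filter.items())
    ]
    return heapq.nlargest(k, candidates, key=lambda r: cosine_similarity(query_vec, r["vector"]))


records = [
    {"text": "Password reset steps", "vector": [1.0, 0.0], "metadata": {"product": "portal"}},
    {"text": "Invoice schedule", "vector": [0.0, 1.0], "metadata": {"product": "billing"}},
    {"text": "Password complexity rules", "vector": [0.9, 0.1], "metadata": {"product": "portal"}},
]
for r in retrieve([1.0, 0.0], records, k=2):
    print(r["text"])
```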

In open-domain consumer settings, information can be retrieved from indexed documents on the internet, accessed through an information source’s API. In a closed-domain enterprise setting, where information needs to be kept private and protected from outside sources, retrieval via the RAG architecture can remain local and provide more security.

Finally, the retrieved data is injected into the prompt and sent to the LLM to process.
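Injecting retrieved data usually means filling a prompt template. The Python sketch below shows one possible template; its wording and the `build_prompt` function are hypothetical, and real systems tune the instructions to their model. Numbering the chunks lets the LLM cite sources in its answer, and the explicit instruction supports the “I don’t know” behavior described earlier.

```python
def build_prompt(question: str, chunks: list[dict]) -> str:
    """Inject retrieved chunks into a prompt, numbering them so the answer can cite sources."""
    context = "\n".join(
        f"[{i}] ({chunk['source']}) {chunk['text']}" for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the context below and cite sources by number. "
        "If the context does not contain the answer, reply \"I don't know.\"\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


retrieved = [
    {"source": "faq.md", "text": "Password reset steps"},
    {"source": "policy.pdf", "text": "Password complexity rules"},
]
print(build_prompt("How do I reset my password?", retrieved))
```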

Step 3: Generation

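Output: The LLM generates a response from the prompt, drawing on both the retrieved context and its own training, and the response is presented to the user. If the RAG method works as intended, the user gets a precise answer based on the source knowledge provided.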

RAG best practices and considerations

For RAG to function at its best, efficiency and standardization are key.

vLLM is an inference server and engine that helps LLMs perform calculations more efficiently at scale. vLLM uses a memory-management technique called PagedAttention, which lets it serve more requests concurrently and can reduce latency (delay) in the final response. This speed and efficiency make vLLM well suited to the “generation” part of a retrieval-augmented generation system, helping the user get an answer as quickly as possible.

To standardize data access across the RAG pipeline, consider using Model Context Protocol (MCP). MCP allows the RAG system to access diverse external data sources without a separate, complex integration for each one. A standardized interface built on MCP makes it easier to scale and maintain a RAG system as new data sources are introduced.

When building a machine learning model, it’s important to find high-quality source documents, because your output is only as good as the data you input. Systems that produce distorted or biased results are a serious concern for any organization that uses AI. Therefore, making sure your source documents do not contain biased information (that is, bias that places privileged groups at a systematic advantage and unprivileged groups at a systematic disadvantage) is essential to avoiding bias in your output.

When sourcing data for a RAG architecture, make sure the data you include in your source documents is accurately cited and up to date. Additionally, human experts should help evaluate output before deploying a model to a wider audience and should continue to evaluate the quality of results even after the model is deployed for production use.

How is RAG different from other methods of data training and processing?

Understanding the differences between data-training methods and RAG architecture can help you make strategic decisions about which AI resource to deploy for your needs, and you may use more than 1 method at a time. Let’s explore some common methods and processes for working with data and compare them with RAG.

RAG vs. prompt engineering

Prompt engineering is the most basic and least technical way to interact with an LLM. Prompt engineering involves writing a set of instructions for a model to follow in order to generate a desired output when a user makes a query. Compared to RAG, prompt engineering requires less data (it uses only what the model was pretrained on) and has a low cost (it uses only existing tools and models), but it cannot create outputs based on up-to-date or changing information. Additionally, output quality depends on the phrasing of the prompt, so responses can be inconsistent.

You might choose to use prompt engineering over RAG if you’re looking for a user-friendly and cost-effective way to extract information about general topics without requiring a lot of detail.

RAG vs. semantic search

Semantics refers to the study of the meaning of words. Semantic search is a technique for parsing data in a way that considers intent and context behind a search query.

Semantic search uses NLP and machine learning to decipher a query and find data that can be used to serve a more meaningful and accurate response than simple keyword matching would provide. In other words, semantic search helps bridge the gap between what a user types in as a query and what data is used to generate a result.

For example, if you type a query about a “dream vacation,” semantic search would help the model understand that you most likely want information about an “ideal” vacation. Rather than serving a response about dreams, it would serve a response more relevant to your intention, such as a holiday package for a beach getaway.

Semantic search is an element of RAG: RAG uses semantic search during the vector database retrieval step to find content that is contextually relevant to the query, drawn from sources that can be kept up to date.

RAG vs. pretraining

Pretraining is the initial phase of training an LLM to gain a broad grasp of language by learning from a large data set. Much as the human brain builds neural pathways as we learn, pretraining shapes the neural network within an LLM as it is trained on data.

Compared to RAG, pretraining an LLM is more expensive, takes longer, and requires more computational resources, such as thousands of GPUs. You may choose to use pretraining over RAG if you have access to an extensive data set (enough to significantly influence the trained model) and want to give an LLM a baked-in, foundational understanding of certain topics or concepts.

RAG vs. fine-tuning

If RAG architecture defines what an LLM needs to know, fine-tuning defines how a model should act. Fine-tuning is a process of taking a pretrained LLM and training it further with a smaller, more targeted data set. It allows a model to learn common patterns that don’t change over time.

On the surface, RAG and fine-tuning may seem similar, but they differ in important ways. For example, fine-tuning requires a lot of data and substantial computational resources for model creation, unless you use parameter-efficient fine-tuning (PEFT) techniques.

Meanwhile, RAG can work with as little as a single document and requires far fewer computational resources. Additionally, while RAG has been shown to reduce hallucinations, fine-tuning an LLM to reduce hallucinations is a much more time-consuming and difficult process.

Often, a model can benefit from using both fine-tuning and RAG architecture. However, you might choose to use fine-tuning over RAG if you already have access to a massive amount of data and resources, if that data is relatively unchanging, or if you’re working on a specialized task that requires more customized analysis than the question-answer format that RAG specializes in.

RAG use cases

RAG architecture has many potential use cases. The following are some of the most popular ones.

Customer service: Programming a chatbot to respond to customer queries with insight from a specific document can help reduce resolution time and lead to a more effective customer support system.

Generating insight: RAG can help you learn from the documents you already have. Use a RAG architecture to link an LLM to annual reports, marketing documents, social media comments, customer reviews, survey results, research documents, or other materials, and find answers that can help you understand your resources better. Remember, you can use RAG to connect directly to live sources of data such as social media feeds, websites, or other frequently updated sources so you can generate useful answers in real time.

Healthcare information systems: RAG architecture can improve systems that provide medical information or advice. With the potential to review factors such as personal medical history, appointment scheduling services, and the latest medical research and guidelines, RAG can help connect patients to the support and services they need.

How Red Hat can help

Red Hat® AI is our portfolio of AI products built on solutions our customers already trust. This foundation helps our products remain reliable, flexible, and scalable.

Red Hat AI can help organizations:

- Adopt and innovate with AI quickly.

- Break down the complexities of delivering AI solutions.

- Deploy anywhere.

Developer collaboration and control

Red Hat AI solutions allow organizations to implement RAG architecture in their LLMOps process by providing the underlying workload infrastructure.

Specifically, Red Hat® OpenShift® AI—a flexible, scalable MLOps platform—gives developers the tools to build, deploy, and manage AI-enabled applications. It provides the underlying infrastructure to support a vector database, create embeddings, query LLMs, and use the retrieval mechanisms required to produce an outcome.

With supported, continuous collaboration, you can customize your AI model applications for your enterprise use cases quickly and simply.

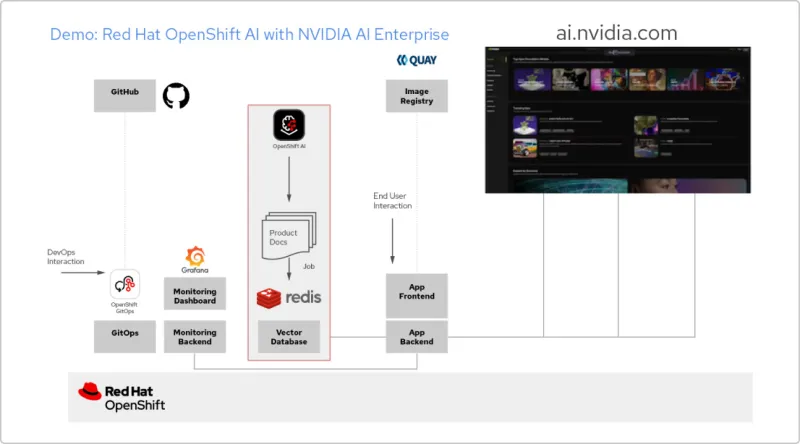

Solution pattern: AI apps with Red Hat & NVIDIA AI Enterprise

Create a RAG application

Red Hat OpenShift AI is a platform for building data science projects and serving AI-enabled applications. You can integrate all the tools you need to support retrieval-augmented generation (RAG), a method for getting AI answers from your own reference documents. When you connect OpenShift AI with NVIDIA AI Enterprise, you can experiment with large language models (LLMs) to find the optimal model for your application.

Build a pipeline for documents

To make use of RAG, you first need to ingest your documents into a vector database. In our example app, we embed a set of product documents in a Redis database. Since these documents change frequently, we can create a pipeline for this process that we’ll run periodically, so we always have the latest versions of the documents.
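One way to keep such a periodic pipeline cheap is to re-embed only the documents whose content has changed since the last run. The Python sketch below compares content hashes to decide what to re-embed and what to delete. It is a hypothetical illustration, not code from the example app, and it leaves out the Redis and embedding calls entirely; `plan_sync` and the document ids are made-up names.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(current_docs: dict[str, str], indexed_hashes: dict[str, str]) -> tuple[list[str], list[str]]:
    """Decide which documents to (re)embed and which to remove from the vector database.

    current_docs: document id -> latest text from the source system.
    indexed_hashes: document id -> hash recorded when it was last embedded.
    """
    to_embed = [
        doc_id for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)  # new or changed
    ]
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]  # removed at source
    return sorted(to_embed), sorted(to_delete)


indexed = {"install": content_hash("v1 install guide"), "faq": content_hash("faq"), "legacy": content_hash("old")}
latest = {"install": "v2 install guide", "faq": "faq", "release-notes": "new notes"}
print(plan_sync(latest, indexed))  # (['install', 'release-notes'], ['legacy'])
```

Unchanged documents are skipped, so each scheduled run pays embedding costs only for what actually changed.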

Browse the LLM catalog

NVIDIA AI Enterprise gives you access to a catalog of different LLMs, so you can try different choices and select the model that delivers the best results. The models are hosted in the NVIDIA API catalog. Once you’ve set up an API token, you can deploy a model using the NVIDIA NIM model serving platform directly from OpenShift AI.

Choose the right model

As you test different LLMs, your users can rate each generated response. You can set up a Grafana monitoring dashboard to compare the ratings, as well as latency and response time for each model. Then you can use that data to choose the best LLM to use in production.
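The comparison itself can be as simple as averaging ratings and latency per model and then picking the best-rated model that fits a latency budget. The Python sketch below shows only that logic; it does not use Grafana, and the model names, the `max_latency_ms` budget, and the `summarize`/`pick_model` functions are hypothetical.

```python
from statistics import mean
from typing import Optional


def summarize(feedback: list[dict]) -> dict[str, dict]:
    """Aggregate user ratings and latency per model.

    feedback: rows with "model", "rating" (e.g. 1 to 5), and "latency_ms" keys.
    """
    by_model: dict[str, list[dict]] = {}
    for row in feedback:
        by_model.setdefault(row["model"], []).append(row)
    return {
        model: {
            "mean_rating": mean(r["rating"] for r in rows),
            "mean_latency_ms": mean(r["latency_ms"] for r in rows),
            "responses": len(rows),  # small counts make the averages unreliable
        }
        for model, rows in by_model.items()
    }


def pick_model(summary: dict[str, dict], max_latency_ms: float) -> Optional[str]:
    """Highest-rated model whose average latency fits the budget, or None."""
    eligible = {m: s for m, s in summary.items() if s["mean_latency_ms"] <= max_latency_ms}
    if not eligible:
        return None
    return max(eligible, key=lambda m: eligible[m]["mean_rating"])


feedback = [
    {"model": "model-a", "rating": 4, "latency_ms": 900},
    {"model": "model-a", "rating": 5, "latency_ms": 1100},
    {"model": "model-b", "rating": 5, "latency_ms": 3000},
    {"model": "model-b", "rating": 5, "latency_ms": 3200},
]
summary = summarize(feedback)
print(pick_model(summary, max_latency_ms=2000))
```

With a tight latency budget the faster model wins despite slightly lower ratings; relaxing the budget changes the choice, which is the kind of trade-off a dashboard makes visible.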
