In this post, we present confidential virtual machines (CVMs) as one of the use cases of confidential computing, along with the security benefits expected from this emerging technology. We focus on the high-level requirements the Linux guest operating system must meet to ensure data confidentiality both in use and at rest.

This blog follows the recent release of Red Hat Enterprise Linux 9.2 running on Azure Confidential VMs. CVMs are also a critical building block for the upcoming OpenShift confidential containers in OpenShift 4.13 (dev-preview).

For additional details on OpenShift confidential containers in Azure we recommend reading this blog.

Confidential computing using virtual machines

Confidential computing is a set of security technologies that aims to provide “confidentiality” of workloads. This means that the running workload and, more importantly, its data in use are isolated from the environment where the workload runs. In other words, higher privilege layers of the software and hardware stack (hypervisor, virtual machine monitor, host operating system, DMA capable hardware, etc.) can’t read or modify the workload’s memory "at will". The workload or the virtual machine hosting it must explicitly allow access to portions of the data when required.

Of all the different ways of using confidential computing technology, CVMs put the fewest restrictions on the workload. In practice, almost every application which runs today in a VM or a cloud instance can be placed in a CVM with no changes to the workload itself.

CVMs would not be possible without the corresponding hardware technologies. Currently all major CPU vendors provide such solutions or are in the process of developing one:

- AMD is offering the third generation of their “Secure Encrypted Virtualization” technology called SEV-SNP (Secure Nested Paging). This is one of the most mature offerings on the market which provides confidentiality and data integrity guarantees. Previous generations of the technology were SEV-ES (Encrypted State) and SEV.

- Intel has just started shipping a confidential computing technology called Trust Domain Extensions (TDX) with the Sapphire Rapids processor family. On the feature level, the technology is similar to AMD’s SEV-SNP, but the implementation details differ significantly.

- Since 2015, Intel has offered a technology called Software Guard Extensions (SGX) which allows for memory encryption on the process level.

- IBM Z offers Secure Execution (SE).

- Power offers Protected Execution Facility (PEF).

- ARM is working on the Confidential Compute Architecture (CCA).

- Confidential VM Extensions (COVE) have been proposed for the RISC-V platform.

While CVMs are intended to be isolated from the host they run on, no additional security guarantees are provided for other attack vectors. The data in use is meant to be protected from the host, but it is still available within the guest for its workloads to process. This means, for example, that remotely exploitable vulnerabilities in workloads are still a security threat even when your workload runs in a CVM.

Confidential virtual machines and public cloud vendors

Public cloud providers are no strangers to confidential computing in general and confidential virtual machines in particular:

- Microsoft announced the general availability of Intel SGX-based DCsv2-series VMs in April, 2020. Note that while Intel SGX is a confidential computing technology, it is aimed at protecting individual processes, and applications have to be modified to use it.

- Google Cloud announced the general availability of AMD SEV based C2D/N2D CVMs in June, 2020.

- Microsoft announced the general availability of AMD SEV-SNP based DCasv5 and ECasv5 CVMs in July, 2022.

- Microsoft announced a preview of Intel TDX based DCesv5-series and ECesv5-series CVMs in April, 2023.

- Google Cloud announced a private preview of AMD SEV-SNP feature for N2D CVMs in April, 2023.

- Amazon Web Services (AWS) announced the general availability of AMD SEV-SNP feature for their M6a, C6a, and R6a instance types in May, 2023.

It is not a coincidence that public cloud vendors are getting involved with confidential computing technologies. This specific guarantee of confidentiality makes it possible for very sensitive workloads, which traditionally had to stay on-premises, to migrate to the cloud. Previously, it was not possible to eliminate the risk of unauthorized access to the data in use by a compromised (or malicious) hypervisor, host operating system or host administrator. Existing regulations and policies may not allow for such risk. CVMs allow cloud providers to guarantee that even the most privileged layers of the virtualization stack cannot get access to the customer’s data.

Confidential virtual machines and guest operating systems

While modern CVM technologies such as AMD SEV-SNP and Intel TDX allow running unmodified workloads inside VMs while keeping the confidentiality guarantee, the same does not apply to guest operating systems. This is because the technologies are not fully transparent. In particular, some relatively small parts of the guest memory must be explicitly made available to the host for input/output operations, and the guest kernel is responsible for allowing this.

The guest operating system is also responsible for protecting the data at rest. As access to storage volumes is normally arbitrated by the host, the only feasible way of delivering confidentiality is encryption. On top of protecting the workload’s data, all application executables which are allowed to access the data within the guest operating system must be protected as well. If this is not done, a malicious host may simply swap the workload’s binary and get access to the data when the binary runs. As the same applies to system level workloads, using full disk encryption (instead of encrypting specific data volumes) is appealing. The question is then whether it is actually possible to encrypt everything. Since we can’t rely on the host doing decryption for us, at least some code doing the decryption has to be stored in clear.

But how is the cleartext part of the storage volume protected from the host then? While it doesn’t seem to be possible to hide it, the true requirement is to make sure the untrusted host cannot modify it unnoticed. To achieve that, code signing technologies like UEFI SecureBoot can be utilized. SecureBoot is a technology which guarantees that only the code trusted by the platform vendor is executed when the system boots, allowing it to prevent attacks on the system before the operating system is fully loaded.

Also, while not an absolute must, VMs are generally expected to be able to boot unattended. For CVMs this more or less becomes a requirement as it’s hard to come up with a way for the VM user to provide the volume encryption key in a more secure fashion. For example, typing a password on a console, emulated by the host, is not really secure as the host can easily “sniff” the password. All this, in turn, means that the key to decrypt the encrypted part of the storage volume must be automatically accessible to the genuine VM but to no one else. The most common way this is currently achieved is by using a virtual Trusted Platform Module (vTPM) backed by hardware. The confidential computing technology must make it possible to “attest” the vTPM device to prove its genuineness.

Booting Linux in a more secure manner

Now, let’s take a look at a modern Linux operating system and how it can meet the mandatory requirements for the secure boot flow and volume encryption to be able to run inside a CVM.

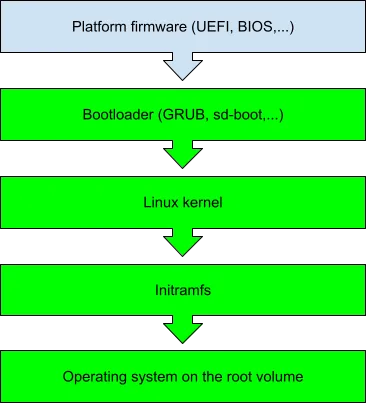

First, let’s take a look at the standard Linux boot chain:

These are the main blocks involved in the chain:

- Platform firmware is the software that runs first when the system is powered on. On modern x86 platforms, including CVMs, the standard type of firmware is UEFI; the legacy BIOS is not widely used.

- The next link in the boot chain is the bootloader. For booting in the UEFI environment with the SecureBoot feature enabled, general purpose Linux distributions normally use a combination of two bootloaders. ‘Shim’ is usually used as the first stage bootloader. It is signed by Microsoft and embeds a distribution-specific certificate which is used to verify the signatures of both the second stage bootloader and the Linux kernel. For the second stage, more feature-rich bootloaders such as GNU GRUB or systemd-boot are used. Their purpose is to locate the desired Linux kernel, initramfs, and kernel command line and to initiate the Linux boot process.

- When the Linux kernel boots, it first runs the ‘init’ tool from the initramfs, which is a small version of the operating system with the set of kernel modules essential for finding the root volume device.

- When the device is found, ‘init’ from the initramfs mounts it and switches to the ‘init’ process from the root volume.

- This will finally start all the processes in the operating system to bring it up to the desired initial state.

As previously mentioned, the guest operating system is responsible for the security of data at rest and this includes system data. Some examples of the data requiring protection are:

- SSH host keys which uniquely identify the instance of the operating system

- Configuration files with sensitive data

- Random seed data which affect secure random number generation

- Password hashes: even if a malicious actor is not able to crack the hashes, they may try to replace them in order to gain access

- Private keys of service certificates and similar secrets

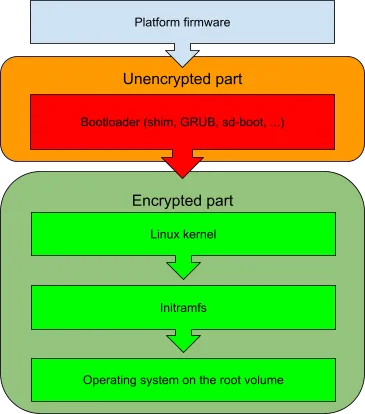

While each of these artifacts can probably be protected individually, it seems to be more practical to try to protect the root volume as a whole instead, in particular because it will include many other potentially sensitive files. As platform firmware does not do root volume decryption, some parts of the boot chain will have to stay unencrypted.

What parts would that include?

As a bare minimum, the first object in the boot chain after firmware must be stored in clear. In theory, it would’ve been possible to make the bootloader decrypt the rest of the system:

GRUB, for example, already has limited support for decrypting volumes. To make this suitable for CVMs, it would need to be extended with full TPM support and this is quite complex.

Another option would be to have Linux kernel and initramfs blocks stored in clear:

The main benefit of the approach is the ability to use any standard Linux tools and drivers in the initramfs. In particular, we can use standard LUKS technology for volume encryption, standard TPM libraries and tools to work with TPM devices and so on. As a downside, the authenticity of the Linux kernel and initramfs will have to be proved in addition to the authenticity of the bootloader.

To prove the authenticity of the unencrypted artifacts in the boot chain, CVMs usually rely on the already mentioned UEFI SecureBoot technology. In a nutshell, the authenticity of the Linux boot chain is proven by the following boot-up sequence:

- Platform firmware ships with Microsoft-issued certificates. It checks the first stage bootloader against them, then loads it and jumps into it.

- First stage bootloader (shim) is signed by Microsoft and ships with operating system vendor certificates (e.g. Red Hat, Fedora, Canonical, etc.).

- First stage bootloader checks that the second stage bootloader (e.g. GRUB, sd-boot) binary is signed by the operating system’s vendor and loads it.

- Second stage bootloader checks that the Linux kernel binary is signed by the operating system’s vendor and loads it.

- The Linux kernel boots in “lockdown” mode to prevent unauthorized modification of the kernel so, for example, only signed kernel modules are allowed to be loaded.

While this may be sufficient for regular deployments, CVMs have to guarantee confidentiality of the data and not only safety of the kernel itself. In particular, initramfs and the kernel command line must now be checked before they are used and especially before the key to decrypt the encrypted part of the root volume is revealed.

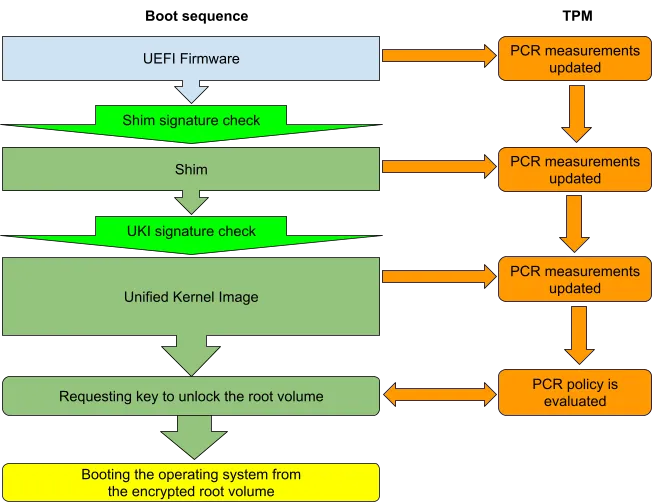

To extend the SecureBoot process to cover initramfs and the kernel command line, a concept called Unified Kernel Image (UKI) was proposed. The image combines the Linux kernel, initramfs and the kernel command line into one UEFI binary which is signed by the vendor certificate:

The Unified Kernel Image is a UEFI binary, which means that it can be loaded directly from the firmware or, to avoid the need to sign it with Microsoft’s certificate, directly from the shim bootloader. In this scenario, the only thing which needs to be loaded from the unencrypted part of the root volume is the UKI, and the signature on it guarantees that it wasn’t tampered with.
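Because a UKI is an ordinary UEFI (PE/COFF) binary, its components can be inspected with a few lines of code. The following is a minimal sketch, not an official tool: it lists the section names of a PE image by walking the COFF section table. For systemd-stub based UKIs one would expect to see sections such as `.linux`, `.initrd` and `.cmdline`; the function name is ours and chosen only for illustration.

```python
import struct


def pe_section_names(image: bytes) -> list[str]:
    """Return the section names of a PE/COFF image such as a UKI."""
    if image[:2] != b"MZ":
        raise ValueError("not a PE image: missing MZ header")
    # The DOS header stores the offset of the PE signature at 0x3C.
    (pe_offset,) = struct.unpack_from("<I", image, 0x3C)
    if image[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("not a PE image: missing PE signature")
    # 20-byte COFF header: machine, section count, timestamp, symbol table
    # pointer, symbol count, optional header size, characteristics.
    fields = struct.unpack_from("<HHIIIHH", image, pe_offset + 4)
    section_count, optional_header_size = fields[1], fields[5]
    # The section table starts right after the optional header.
    table = pe_offset + 4 + 20 + optional_header_size
    names = []
    for i in range(section_count):
        entry = table + 40 * i  # each section header is 40 bytes
        raw_name = image[entry:entry + 8]  # name is NUL-padded to 8 bytes
        names.append(raw_name.rstrip(b"\0").decode("ascii"))
    return names
```

On a real system this could be called as `pe_section_names(open(path, "rb").read())`, where `path` points to the UKI on the EFI System Partition; the exact location depends on the distribution and is not assumed here.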

While UKI effectively helps to solve the issue of proving that no objects in the boot chain were altered by a malicious actor, the concept brings some limitations as the flexibility of the boot process is reduced. In particular:

- Initramfs must be created by the operating system vendor at the time when the UKI is built. This implies that, for example, the list of kernel modules included in the initramfs is “fixed” and it is not possible to add new ones on the end system.

- Kernel command line is also made constant. For example, it becomes impossible to set the desired root volume by specifying the “root=” option on the kernel command line. This means that a different way to discover the root volume is needed.

We will try to go through these limitations in detail in the next post and see how these can be overcome.

Root volume key security

To keep the data of the root volume secure, the CVM architecture must ensure that the key to the encrypted part of the root volume is only revealed to a guest operating system in a “good” state. But what does this really mean? Which particular parts of the operating system need to be checked?

First and most obvious, SecureBoot must be turned on, as without it there’s no way to prove the authenticity of the running kernel and the userspace environment. Unfortunately, this is not enough. Even with SecureBoot enabled, it is possible to load a “traditional” signed Linux kernel with an arbitrary initramfs. A compromised or malicious host can use a genuine, signed kernel with a specially crafted initramfs to extract the key for the root volume. This means that the authenticity of the whole environment, including the initramfs and the kernel command line, must be proved to ensure the root volume key is safe.
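As a small illustration of the first check, the SecureBoot state can be read from inside a Linux guest through the `SecureBoot` UEFI variable exposed by efivarfs. The sketch below assumes efivarfs is mounted at its usual location; the function names are ours. Note that such a self-check is only informational, since a compromised guest could lie about it. That is why the state also has to be reflected in measurements that a TPM policy can evaluate.

```python
from pathlib import Path

# SecureBoot variable under the EFI global variable GUID, as exposed by
# the Linux efivarfs filesystem (assumed to be mounted at the usual path).
SECURE_BOOT_VAR = Path(
    "/sys/firmware/efi/efivars/"
    "SecureBoot-8be4df61-93ca-11d2-aa0d-00e098032b8c"
)


def parse_secure_boot(raw: bytes) -> bool:
    """Decode the efivarfs content of the SecureBoot variable.

    efivarfs prefixes the variable data with a 4-byte attribute field;
    the data itself is a single byte, where 1 means "enabled".
    """
    if len(raw) < 5:
        raise ValueError("SecureBoot variable is too short")
    return raw[4] == 1


def secure_boot_enabled(path: Path = SECURE_BOOT_VAR) -> bool:
    """Return True if the firmware reports SecureBoot as enabled."""
    try:
        return parse_secure_boot(path.read_bytes())
    except FileNotFoundError:
        # Not booted via UEFI, or the variable is not exposed.
        return False
```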

The standard way of checking the authenticity of the boot chain is known as “Measured boot”: a hash value of each component in the chain is recorded in the TPM’s Platform Configuration Registers (PCRs) and can be examined later. PCRs are a crucial part of the TPM, as their values can only be “extended” with a one-way hash function which takes both the existing value and the newly added measurement as input. This guarantees that the resulting value can only be obtained by measuring the exact same components in the exact same sequence. Different PCRs measure different aspects of the boot process, for example:

- PCR7, among other things, keeps the information about the SecureBoot state and all the certificates which were used in the boot process.

- PCR4 contains hashes for all UEFI binaries which were loaded.

- For systemd-stub based UKIs, PCR11 contains the measurements for the Linux kernel, initramfs, kernel command line and other UKI sections.
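The “extend” operation described above can be sketched in a few lines. This is a simplified model assuming a SHA-256 PCR bank that starts at all zeroes; the helper names and the byte strings standing in for boot components are illustrative only.

```python
import hashlib

PCR_SIZE = hashlib.sha256().digest_size  # 32 bytes for a SHA-256 bank


def extend(pcr: bytes, component: bytes) -> bytes:
    """Extend a PCR: new = SHA-256(old || SHA-256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_chain(components: list[bytes]) -> bytes:
    """Measure components in order, starting from an all-zero PCR."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


if __name__ == "__main__":
    good = measure_chain([b"shim", b"uki"])
    swapped = measure_chain([b"uki", b"shim"])
    tampered = measure_chain([b"shim", b"uki with modified initramfs"])
    # Both the content and the order of the measurements matter.
    print(good != swapped, good != tampered)
```

Since the old value is always hashed together with the new measurement, there is no way to “set” a PCR to a chosen value: the only way to reach a given result is to replay the same measurements in the same order.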

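TPM devices allow the creation of a PCR-based policy which has to be satisfied before a secret (e.g. root volume key) is revealed. The main advantage of this approach is that the policy is evaluated by the hardware and thus cannot be circumvented by a malicious guest operating system. Combined with the UKI idea, the overall boot sequence becomes the following: the firmware verifies and loads shim, shim verifies and loads the UKI, every stage is measured into the PCRs, and the vTPM reveals the root volume key to the initramfs only if the PCR policy is satisfied.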
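The idea of a PCR-based policy can be modeled with a toy example. The sketch below is not how a real TPM is programmed (real devices use policy sessions such as `TPM2_PolicyPCR` and never hand the sealed object to the caller in clear); `ToyTPM` and `SealedSecret` are hypothetical names used only to show that the secret is released when, and only when, the current PCR values match the ones recorded at sealing time.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class SealedSecret:
    """A secret bound to expected PCR values (toy model)."""
    policy: dict[int, bytes]  # PCR index -> expected PCR value
    secret: bytes


class ToyTPM:
    """Simplified TPM model with a SHA-256 PCR bank."""

    def __init__(self) -> None:
        self.pcrs = {index: bytes(32) for index in range(24)}

    def extend(self, index: int, component: bytes) -> None:
        # new = SHA-256(old || SHA-256(component))
        measurement = hashlib.sha256(component).digest()
        self.pcrs[index] = hashlib.sha256(self.pcrs[index] + measurement).digest()

    def seal(self, secret: bytes, indices: list[int]) -> SealedSecret:
        # Bind the secret to the current values of the selected PCRs.
        return SealedSecret({i: self.pcrs[i] for i in indices}, secret)

    def unseal(self, sealed: SealedSecret) -> bytes:
        # The policy check happens inside the (toy) TPM, not in the guest.
        for index, expected in sealed.policy.items():
            if not hmac.compare_digest(self.pcrs[index], expected):
                raise PermissionError(f"PCR{index} does not match the policy")
        return sealed.secret


def boot(uki: bytes) -> ToyTPM:
    """Model a boot that measures the UKI into PCR11."""
    tpm = ToyTPM()
    tpm.extend(11, uki)
    return tpm
```

In this model, a key sealed after booting a genuine UKI (`boot(b"genuine uki").seal(key, [11])`) can be unsealed again on the next boot of the same image, while `boot(b"crafted initramfs").unseal(...)` raises `PermissionError`.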

The need for attestation

From a user perspective, you may need to have an assurance that the VM you have just created on a public cloud is really a CVM with an operating system of your choice. In particular, you need to make sure that the host hasn’t started a non-confidential VM with a specially crafted guest operating system to steal your secrets instead. Modern confidential computing technologies, such as AMD’s SEV-SNP or Intel’s TDX, allow you to generate a hardware signed report which includes the measurements of the booted guest operating system (e.g. PCR values). The report can later be checked by an attestation server. We discuss the implications of the attestation process in a separate blog post series.

Conclusion

In this blog post we’ve reviewed the basic requirements for guest operating systems in order to run securely within a confidential virtual machine. Besides the need to enable the particular confidential computing technology responsible for securing data in use, the guest operating system has to support the SecureBoot flow in order to preserve the confidentiality of the data at rest. In the next blog post of this series, we will go deeper into the implementation details. We will use Red Hat Enterprise Linux 9.2 running on Azure Confidential VMs as an example.
