In the previous post I explained how to performance test MongoDB pods on Red Hat OpenShift with OpenShift Container Storage 3 volumes as the persistent storage layer and Yahoo! Cloud Serving Benchmark (YCSB) as the workload generator.

The cluster I used in the prior posts was based on the AWS EC2 m5 instance family with EBS storage of type gp2. In this blog I will compare those results with a similar cluster based on the AWS EC2 i3 instance family, which uses locally attached storage (sometimes referred to as “instance storage” or “local instance store”).

Before we run the tests and compare results, I would like to explain the differences between these AWS instance types and storage types.

m5 + EBS vs i3 + instance store

Please note that Red Hat does not recommend one instance type over another. Use your experience, familiarity with AWS infrastructure, application needs and common sense to decide which instance type to use. What follows is my experience with AWS instance types plus feedback I've received from other people using AWS instances.

In AWS’s documentation, the m5 instance type is part of the “General Purpose Instances” group. I find these instances can be a good choice when a workload needs a balance of compute, memory and networking. These instances use EBS (Elastic Block Store) as the storage layer. An EBS volume is exposed to the instance as a block device and can be attached to only one instance at a time. AWS automatically replicates the volume, but only within the same AZ (Availability Zone), so the volume has at least one more copy in the same AZ.

For gp2 EBS volumes, performance depends on the volume size: the larger the volume, the more Input/Output Operations Per Second (IOPS) it can provide, up to a maximum IOPS cap. gp2 volumes smaller than 1TB can also burst above their baseline IOPS.

You can read more about this in the Amazon EBS Volume Types documentation. In short, each gp2 volume has a burst bucket of I/O credits (5.4 million credits, enough to sustain 3,000 IOPS for at least 30 minutes) that refills continuously at the volume's baseline rate of 3 IOPS per GiB. Volumes of 1TB and larger have a baseline of 3,000 IOPS or more anyhow, so bursting is not an issue for them. But for volumes smaller than 1TB, you can quickly get into a situation where you have used up the burst bucket and your volume IOPS are throttled down to the baseline rate, which is very low for small volumes, until the bucket refills.
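To make the burst model concrete, here is a minimal Python sketch of the gp2 credit arithmetic, following the formulas in the AWS EBS documentation: a baseline of 3 IOPS per GiB with a 100 IOPS floor and a 16,000 IOPS ceiling, credits earned at 3 per GiB per second, a 3,000 IOPS burst rate and a 5.4 million credit bucket. The function names are illustrative and not part of any AWS SDK, and AWS may change these limits, so check the current documentation before relying on the numbers.

```python
from typing import Optional

# gp2 figures from the AWS EBS volume types documentation (subject to change).
GP2_IOPS_PER_GIB = 3            # baseline and credit earn rate per GiB of volume size
GP2_MIN_BASELINE = 100          # floor for very small volumes
GP2_MAX_BASELINE = 16_000       # ceiling for very large volumes
GP2_BURST_IOPS = 3_000          # burst rate for volumes below 1,000 GiB
GP2_BUCKET_CREDITS = 5_400_000  # I/O credits in a full burst bucket


def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS a gp2 volume can sustain indefinitely."""
    return max(GP2_MIN_BASELINE, min(GP2_IOPS_PER_GIB * size_gib, GP2_MAX_BASELINE))


def gp2_credit_earn_rate(size_gib: int) -> int:
    """I/O credits earned per second (3 per GiB, independent of the 100 IOPS floor)."""
    return GP2_IOPS_PER_GIB * size_gib


def gp2_burst_seconds(size_gib: int) -> Optional[float]:
    """How long a full bucket sustains 3,000 IOPS, or None if the volume never bursts."""
    if gp2_baseline_iops(size_gib) >= GP2_BURST_IOPS:
        return None  # baseline already meets the burst rate, so no credits are spent
    # While bursting, credits drain at (burst rate - earn rate) per second.
    return GP2_BUCKET_CREDITS / (GP2_BURST_IOPS - gp2_credit_earn_rate(size_gib))


def gp2_refill_seconds(size_gib: int) -> float:
    """Time for an idle volume to refill an empty bucket at its credit earn rate."""
    return GP2_BUCKET_CREDITS / gp2_credit_earn_rate(size_gib)


if __name__ == "__main__":
    for size in (100, 500, 1000, 1900):
        print(f"{size} GiB: baseline={gp2_baseline_iops(size)} IOPS, "
              f"burst={gp2_burst_seconds(size)} s")
```

For example, a 100 GiB volume has a 300 IOPS baseline, can burst at 3,000 IOPS for 2,000 seconds on a full bucket, and needs 18,000 seconds (5 hours) without I/O to refill an empty bucket, while a 1,000 GiB volume never needs to burst.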

The i3 instance type is part of the “Storage Optimized Instances” group. Unlike m5, the storage it uses is directly attached to the instance as a block device. The i3 type is considered a good fit for a variety of database types and workloads (SQL, NoSQL, OLTP, data warehouse).

The i3 direct attached storage has the advantage of lower latency, but it comes with a caveat: if you stop the instance, the data on the attached device is not saved. While this sounds scary, let's look at this situation a bit more closely.

During its lifetime, an AWS instance can be in several states. Two events matter for our case: stop and reboot.

As explained, if you stop the instance, the data on the direct attached storage is lost; if you reboot it, the data stays intact.

These events and states can be monitored and planned for. Unscheduled events, however, are where OpenShift Container Storage 3 can shine. Since OpenShift Container Storage 3 keeps three copies of each volume, the PVs (Persistent Volumes) I use are protected even during an unscheduled event such as a “stop” (very rare, but possible). While one instance is stopped, I still have 2 other copies of the volume that OpenShift can consume.

I can further strengthen availability by spreading the 3 copies over 3 AZs in the AWS region I’m using, which protects the data even against larger unexpected events, such as a whole AZ going down.
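The availability argument can be checked with a toy model. The sketch below assumes a hypothetical topology of 6 worker nodes, 2 per AZ, places one replica of each of 30 volumes in every AZ, and counts how many copies survive the loss of any single node or any single AZ. It is a simplified illustration of the three-copy idea, not how OpenShift Container Storage 3 actually decides where to place data.

```python
from collections import defaultdict

# Hypothetical topology for illustration: 6 worker nodes, 2 in each of 3 AZs.
NODE_AZ = {
    "worker-1": "az-a", "worker-2": "az-a",
    "worker-3": "az-b", "worker-4": "az-b",
    "worker-5": "az-c", "worker-6": "az-c",
}


def nodes_by_az(node_az: dict[str, str]) -> dict[str, list[str]]:
    """Group node names by AZ, in a stable (sorted) order."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for node, az in sorted(node_az.items()):
        grouped[az].append(node)
    return dict(grouped)


def place_replicas(volume_index: int, node_az: dict[str, str]) -> list[str]:
    """Toy placement: one replica per AZ, rotating across the nodes inside each AZ."""
    groups = nodes_by_az(node_az)
    return [groups[az][volume_index % len(groups[az])] for az in sorted(groups)]


def surviving_copies(replicas: list[str], failed_nodes: set[str]) -> int:
    """Number of replicas whose node is still up."""
    return sum(node not in failed_nodes for node in replicas)


if __name__ == "__main__":
    placements = [place_replicas(i, NODE_AZ) for i in range(30)]  # 30 PVs, as in the test
    azs = nodes_by_az(NODE_AZ)
    worst_node_loss = min(surviving_copies(p, {n}) for p in placements for n in NODE_AZ)
    worst_az_loss = min(surviving_copies(p, set(nodes)) for p in placements for nodes in azs.values())
    print("copies left after losing any one node:", worst_node_loss)
    print("copies left after losing any one AZ:", worst_az_loss)
```

Running it prints 2 for both cases: with one copy per AZ, a stopped instance or a full AZ outage still leaves two copies of every volume.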

Performance comparison between m5 and i3

Now that we have gone over what I believe is the biggest caveat of i3, let's compare the performance of our YCSB workload on MongoDB between two clusters, one based on i3 and one based on m5.

A few things to take into account:

- The m5.2xlarge instances have a newer and faster CPU (Skylake-based Platinum 8175M @ 2.5GHz, turbo @ 3.5GHz) than the i3.2xlarge instances (Broadwell-based E5-2686 v4 @ 2.3GHz, turbo @ 3GHz). Faster, more modern CPUs should mean that CPU-intensive workloads finish faster. (The i3en.2xlarge has a newer Skylake-based CPU @ 3.1GHz, but I have not compared the cost against this newer instance type.)
- The i3.2xlarge instance type has roughly twice the RAM of the m5.2xlarge instance type: 61GB vs 32GB. More RAM means more memory for the pods or for general host caching.
- Both instances have 8 vCPUs.
- The i3 storage device size is fixed (a single 1.9TB NVMe SSD for the i3.2xlarge). For the m5, which uses EBS, I used a 1TB gp2 volume and, for further comparison, also a 1.9TB gp2 volume. In either case (i3 or m5), our 30 PVs do not use all of the storage.
- The i3 instance still uses 3 small (100GB) EBS gp2 volumes for the root block device, docker storage and OpenShift storage. These sizes are the defaults from the OpenShift ansible install scripts; they can be made smaller to save more on cost, but I used the defaults.

Each cluster type went through 5 test runs. Each run uses YCSB workloadb with 8,000,000 records in MongoDB and runs 2,000,000 operations at a ratio of 70% reads to 30% writes (update operations).
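The post does not show the exact YCSB command line. As a hedged sketch, the Python helper below assembles one plausible `run` invocation with the parameters described above. It assumes YCSB's MongoDB binding and the stock `workloads/workloadb` file, whose default mix is 95% reads and 5% updates, so the 70/30 ratio is expressed here through the standard `readproportion` and `updateproportion` overrides. The function name and MongoDB URL are placeholders.

```python
import shlex


def ycsb_run_command(mongodb_url: str,
                     record_count: int = 8_000_000,
                     operation_count: int = 2_000_000,
                     read_proportion: float = 0.7,
                     update_proportion: float = 0.3) -> list[str]:
    """Build a YCSB 'run' command line for the MongoDB binding, based on workloadb."""
    if abs(read_proportion + update_proportion - 1.0) > 1e-9:
        raise ValueError("read and update proportions must add up to 1")
    return [
        "bin/ycsb", "run", "mongodb", "-s",
        # Stock workloadb is 95% reads / 5% updates; the -p overrides below change the mix.
        "-P", "workloads/workloadb",
        "-p", f"recordcount={record_count}",
        "-p", f"operationcount={operation_count}",
        "-p", f"readproportion={read_proportion}",
        "-p", f"updateproportion={update_proportion}",
        "-p", f"mongodb.url={mongodb_url}",
    ]


if __name__ == "__main__":
    # Placeholder URL for one of the 30 MongoDB instances.
    print(shlex.join(ycsb_run_command("mongodb://mongodb-1:27017/ycsb")))
```

The records have to be loaded first with a matching `bin/ycsb load mongodb -P workloads/workloadb -p recordcount=8000000 ...` step; the helper only covers the run phase.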

Each run starts all 30 jobs at the same time. Because the runtime (how long a job takes to complete) differs from pod to pod, the test continues to the next run only once all 30 YCSB jobs have finished.
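The run structure described above is essentially a barrier: launch every job, wait for the slowest one, then start the next run. A minimal Python sketch using `concurrent.futures` follows; `job` is a hypothetical callable standing in for whatever launches one YCSB pod and returns its runtime in seconds. The sketch does not model how the jobs are actually scheduled on OpenShift.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_benchmark(job: Callable[[int], float], pods: int = 30, runs: int = 5) -> list[list[float]]:
    """Start all pod jobs together in each run and wait for all of them before the next run.

    `job(pod_index)` is a hypothetical stand-in that runs one YCSB job and returns its
    runtime in seconds.
    """
    all_runs: list[list[float]] = []
    with ThreadPoolExecutor(max_workers=pods) as pool:
        for _ in range(runs):
            # map() submits every job up front; list() blocks until the slowest job
            # has finished, which is the barrier between runs.
            all_runs.append(list(pool.map(job, range(pods))))
    return all_runs
```

The result is one list of 30 runtimes per run, which is the input the per-run averaging needs.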

I calculated the average runtime of all 30 MongoDB jobs in each run and, based on that, the average operations per second. (I used this “average of averages” method to help get a more accurate result.)
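One reading of this “average of averages” calculation, sketched in Python: for each run, average the 30 job runtimes, derive ops/sec by dividing the 2,000,000 operations each job performs by that average runtime, and then average the per-run figures across all 5 runs. The exact formula and the function names are my assumptions; the post does not spell them out.

```python
from statistics import mean

OPERATIONS_PER_JOB = 2_000_000  # YCSB operationcount per job, from the test description


def run_summary(runtimes_s: list[float], operations: int = OPERATIONS_PER_JOB) -> tuple[float, float]:
    """Average job runtime for one run, and the ops/sec derived from that average."""
    avg_runtime = mean(runtimes_s)
    return avg_runtime, operations / avg_runtime


def average_of_averages(runs: list[list[float]]) -> tuple[float, float]:
    """Average the per-run (runtime, ops/sec) figures across all runs."""
    summaries = [run_summary(r) for r in runs]
    return mean(s[0] for s in summaries), mean(s[1] for s in summaries)
```

With two toy runs of [100, 300] and [400, 400] seconds (illustrative numbers, not results from this test), the per-run averages are 200 s and 400 s, and the overall result is 300 s and 7,500 ops/sec.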

As a reminder, each cluster consists of 1 master node, 1 infra node and 6 worker nodes that also run OpenShift Container Storage 3, so these 6 worker nodes both provide and consume storage.

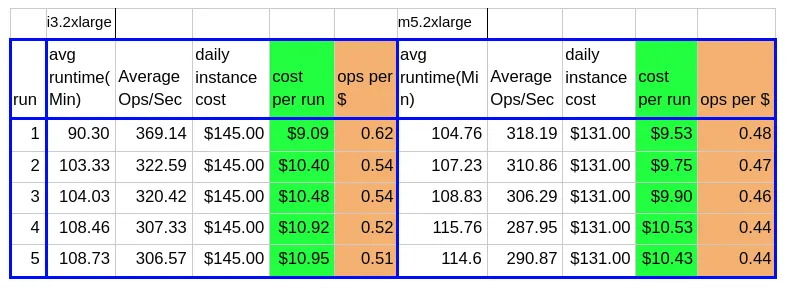

This table shows the comparison between a cluster of i3 and m5 instances:

The data in this table shows that this type of workload runs roughly 35% faster on i3.2xlarge, and the throughput (ops/sec) is also 35% higher for the i3.2xlarge instance type.
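Because every job executes the same 2,000,000 operations, per-job ops/sec is inversely proportional to runtime, so a percentage gain in throughput and a percentage reduction in runtime are not the same number. The small hypothetical helper below makes the relationship explicit.

```python
def compare_runtimes(faster_runtime_s: float, slower_runtime_s: float) -> dict[str, float]:
    """Relate runtime and throughput for two clusters that execute the same operation count."""
    throughput_gain = slower_runtime_s / faster_runtime_s - 1     # extra ops/sec of the faster cluster
    runtime_reduction = 1 - faster_runtime_s / slower_runtime_s   # how much less time it needs
    return {
        "throughput_gain_pct": 100 * throughput_gain,
        "runtime_reduction_pct": 100 * runtime_reduction,
    }
```

For example, `compare_runtimes(100, 135)` reports a 35% throughput gain together with a runtime reduction of about 25.9%.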

If we compare the daily cost of the whole cluster, we can see that the per-run cost of the m5.2xlarge cluster is roughly 16% higher than that of the i3.2xlarge cluster.

What's more impressive is that the price per operation on the m5.2xlarge cluster is roughly 2x what you pay on the i3.2xlarge cluster; put differently, the i3.2xlarge cluster delivers roughly twice the operations per dollar.
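The post does not give the exact cost formulas, so here is one plausible way to derive them, as a sketch: prorate the daily cluster cost by the wall-clock duration of a run to get the cost per run, then divide by the operations in a run (30 jobs × 2,000,000 operations) for the price per operation. Ops per dollar is simply the reciprocal. The function name and the choice to report price per million operations are my own.

```python
SECONDS_PER_DAY = 86_400
OPS_PER_RUN = 30 * 2_000_000  # 30 YCSB jobs x 2,000,000 operations each


def cost_metrics(daily_cluster_cost_usd: float, run_seconds: float,
                 ops_per_run: int = OPS_PER_RUN) -> dict[str, float]:
    """Prorate the daily cluster cost over one run and express it per operation.

    run_seconds is assumed to be the wall-clock duration of a run (until the last job ends).
    """
    cost_per_run = daily_cluster_cost_usd * run_seconds / SECONDS_PER_DAY
    return {
        "cost_per_run_usd": cost_per_run,
        "usd_per_million_ops": cost_per_run / ops_per_run * 1_000_000,
        "ops_per_usd": ops_per_run / cost_per_run,  # reciprocal of price per operation
    }
```

For example, a toy cluster that costs $864 per day and finishes a run in 100 seconds costs $1.00 per run, or 60 million operations per dollar.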

Please note that the daily instance cost I used comes from my AWS account. Other account holders might see higher or lower prices depending on their level of activity with AWS, whether they use spot instances, etc., but the relative difference between the instance types should stay roughly the same.

Other data I collected for this comparison includes I/O device utilization, CPU utilization and network utilization. The CPU and I/O utilization results are below.

The “avg OCS device usage” is the average utilization per run of the device OpenShift Container Storage 3 uses on each worker node (the 1.9TB NVMe SSD in the i3.2xlarge case and the 1TB gp2 EBS device in the m5.2xlarge case).
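The post does not say which tool collected the device utilization. As a sketch of what such a metric usually means, the Python below computes an iostat-style %util from two snapshots of Linux `/proc/diskstats`: the change in the “time spent doing I/Os” counter (field 10 in the kernel's iostats documentation, which is the 13th whitespace-separated column) divided by the sampling interval. The device name is a placeholder; check `lsblk` on your nodes for the device OCS3 actually uses.

```python
import time


def io_ticks_ms(diskstats_text: str, device: str) -> int:
    """Return a device's 'time spent doing I/Os' counter (ms) from /proc/diskstats text."""
    for line in diskstats_text.splitlines():
        fields = line.split()
        # Columns: major, minor, name, then the kernel's stat fields; io_ticks is column 13.
        if len(fields) > 12 and fields[2] == device:
            return int(fields[12])
    raise KeyError(f"device {device!r} not found in diskstats")


def utilization_pct(before: str, after: str, device: str, interval_s: float) -> float:
    """iostat-style %util: share of the interval with at least one I/O in flight."""
    busy_ms = io_ticks_ms(after, device) - io_ticks_ms(before, device)
    return 100.0 * busy_ms / (interval_s * 1000.0)


def sample_utilization(device: str, interval_s: float = 5.0) -> float:
    """Take two /proc/diskstats snapshots interval_s apart (Linux only)."""
    with open("/proc/diskstats") as f:
        before = f.read()
    time.sleep(interval_s)
    with open("/proc/diskstats") as f:
        after = f.read()
    return utilization_pct(before, after, device, interval_s)
```

Keep in mind that for NVMe and EBS devices, which serve many requests in parallel, 100% utilization means the device always had at least one request in flight, not that it was saturated.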

These results show that the extra RAM on the i3.2xlarge instance is used for file caching and helps performance, even though the instance has a slower CPU than the m5.2xlarge instance. This also means there is more room for growth (in database size and number of operations) on the i3 instance.
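If you want to check the page-cache effect on your own nodes, one simple approach (my suggestion, not the method used for these results) is to sample the `Cached` value from `/proc/meminfo` on each worker during a run and compare the instance types. A minimal parser, with hypothetical function names:

```python
def page_cache_gib(meminfo_text: str) -> float:
    """Return the 'Cached' line of /proc/meminfo text in GiB (the kernel reports kB = KiB)."""
    for line in meminfo_text.splitlines():
        key, _, value = line.partition(":")
        if key == "Cached":  # exact match, so 'SwapCached' is skipped
            return int(value.split()[0]) / (1024 * 1024)
    raise KeyError("no 'Cached' entry found")


def read_page_cache_gib(path: str = "/proc/meminfo") -> float:
    """Read the current page-cache size on this host (Linux only)."""
    with open(path) as f:
        return page_cache_gib(f.read())
```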

I made another comparison, this time with the m5.2xlarge instance using a 1.9TB gp2 EBS device for OCS3 on each worker node, the same storage size as the i3.2xlarge instance (1.9TB NVMe SSD). The 30 MongoDB databases I used do not use all of the storage space OCS3 provides in our configuration, but on the EBS/gp2 side, the larger the device, the more IOPS it can provide. The trade-off is a higher daily cost because of the larger EBS volume size ($113 with a 1TB EBS volume vs $131 with a 1.9TB EBS volume).

As you can see, the larger EBS gp2 device on the m5.2xlarge side actually reduced the cost per run and the cost per database operation despite the higher daily cost, but it still does not match the cost per performance of the i3.2xlarge instance.

The change to a 1.9TB gp2 EBS device also increased the OCS3 device utilization by about 16% and decreased the CPU utilization by about 4% on the m5.2xlarge instance.

Conclusion

This blog post showed how OpenShift Container Storage can help you reduce the cost of an OpenShift cluster that runs persistent storage applications on top of the storage abstraction layer OpenShift Container Storage provides.

I’ve explained the operational differences between an EBS volume and direct attached instance storage, some of the considerations you should take into account, and how OpenShift Container Storage can help overcome some of the availability issues that come with using instances with direct attached storage.

I used YCSB to create load on 30 MongoDB pods spread across 6 OpenShift worker nodes. As always, I recommend performing your own testing with your own workload and data to see whether an i3 type instance fits your needs, but these results demonstrate that, with testing, you can get more out of AWS for less money by using instances with direct attached storage.

About the author

Sagy Volkov is a former performance engineer at ScaleIO, where he initiated the performance engineering group and the ScaleIO enterprise advocates group and architected the ScaleIO storage appliance, reporting to the CTO/founder of ScaleIO. He is now with Red Hat as a storage performance instigator, concentrating on application performance (mainly databases and CI/CD pipelines) and application resiliency on Rook/Ceph.

He has previously spoken at Cloud Native Storage Day (CNS), DevConf 2020, EMC World and the Red Hat booth at KubeCon.
