As AI becomes an engine of national competitiveness, the concept of sovereign AI (the capacity to operate AI systems free from external influence) is increasingly relevant, but the path to adoption is filled with challenges. A recent Red Hat survey of more than 900 IT leaders and AI engineers exposes a significant "value gap": despite high enthusiasm (72%), only 7% of organizations in Europe, the Middle East, and Africa (EMEA) are delivering results.

The survey highlights that data privacy and infrastructure silos are paralyzing AI development efforts. As a result, sovereign AI has rapidly moved from a theoretical "cloud challenge" to a practical necessity. By mitigating the specific risks identified in the Red Hat survey, sovereign AI allows regulated enterprises to move confidently from pilot to production without compromising on:

- Regulatory compliance: Adherence to strict regulations such as the General Data Protection Regulation (GDPR), the EU AI Act, and data residency laws that require citizens' data to remain within specific borders.

- Operational resilience: The ability to continue operations during geopolitical instability or disconnection from the global internet.

- Strategic autonomy: Freedom from vendor lock-in and full control over the intellectual property, such as models and weights, generated from sensitive data.

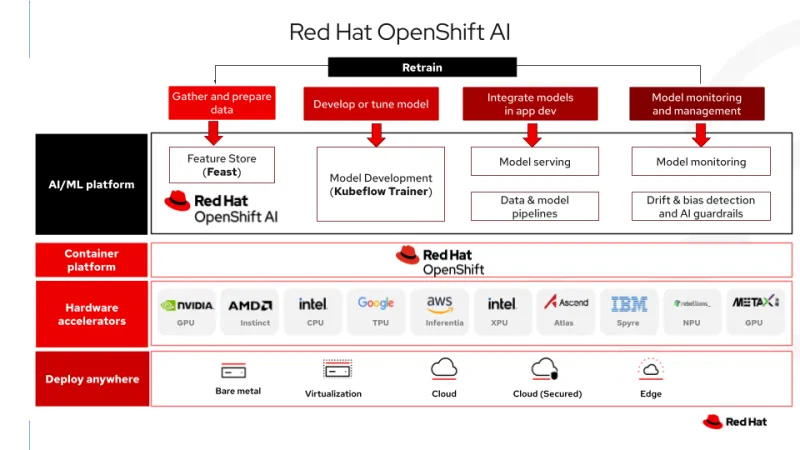

Red Hat OpenShift AI provides a foundation for this sovereignty, enabling organizations to build an "air-gapped" AI factory while maintaining absolute control over security, data, models, and results.

In this article we look at specific examples of sovereign AI challenges our clients are facing, abstract the main themes that need to be addressed, and propose a solution for these problems.

User story: The dilemma of "AI independence"

The protagonist: Dr. Aris (a composite persona based on real customer challenges), chief data officer for the Ministry of Health in a mid-sized European nation.

The challenge: The Ministry possesses a goldmine of data, including decades of anonymized patient records, genomic sequences, and local epidemiological history. Dr. Aris wants to build a "National Health LLM" to assist doctors in diagnosing rare diseases specific to their population.

Crucially, the Ministry is also facing a "shadow AI" problem. Frustrated researchers are secretly uploading anonymized snippets to public LLMs to get work done, risking data leaks. The Ministry needs a sanctioned, fully secure internal platform that is just as easy to use as the public cloud.

The conflict:

- The cloud trap: Leading AI providers offering Models-as-a-Service (MaaS) require sensitive data to be uploaded to US-based public clouds. This can violate GDPR, data residency laws, and national security protocols.

- The DIY nightmare: Dr. Aris attempts to build the platform from scratch. His team is quickly paralyzed by the operational chaos of arbitrating access to the Ministry's 500-GPU cluster, resulting in constant resource contention where critical experiments wait indefinitely while reserved hardware sits idle.

The solution: The Ministry builds a sovereign AI platform on top of OpenShift AI, also using Kubeflow and Feast.

- The shift: Instead of relying on proprietary cloud APIs, Dr. Aris's team builds a "model factory" on their own, air-gapped, protected infrastructure. OpenShift AI, which includes Kubeflow components, abstracts the GPU cluster hardware, allowing the team to train massive models without sending a single byte across the border. Feast helps centralize feature management across training and inference so the features feeding into models are consistently defined, enabling governance and traceability.

- The result: A data scientist simply submits a training request, and the system automatically spins up a distributed cluster, retrieves features from Feast, trains the model, and tears it down, all inside the air-gapped national data center. Dr. Aris achieves "AI autonomy" using a scalable and disconnected AI platform on his country's own terms.

3 pillars of sovereign AI

To move from "digital colony" (a nation or community that relies so heavily on foreign technology infrastructure that it loses control over its own digital economy, data, and future development) to "digital sovereignty," a nation must control 3 key layers of the AI technology stack.

Technical sovereignty (the foundation)

Principle: Sovereignty mandates a transparent chain of custody and resilience against supply chain weaponization. By adopting a hardware-agnostic platform layer, nations can optimize their AI progress through a multi-vendor strategy, so their strategic autonomy is preserved regardless of shifts in the global supply chain. The sovereign platform must decouple the software from the hardware and combine strict ownership of the infrastructure with the flexibility to adapt to market availability. By adhering to open source standards, an organization's AI capabilities can be inspected, audited, and maintained independently of any single vendor's roadmap or hardware monopoly, retaining absolute authority over service continuity.

Validation: The Red Hat AI survey found that 92% of IT leaders see enterprise open source as crucial to their AI strategy. Enterprise open source offers the consistency and transparency needed to control the AI supply chain.

Data sovereignty (the asset)

Principle: Data gravity is absolute. Sensitive data must reside on storage media physically located within the sovereign perimeter and subject only to local laws. The challenge is to provide data scientists with the ease of data selection and retrieval found in the cloud, while physically restricting data movement to a secure internal network.
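One way to make "physically restricting data movement" checkable is an automated audit of every endpoint the platform is configured to talk to. The following is a minimal sketch, not part of Feast or OpenShift AI: it assumes endpoints have already been resolved to IP addresses, and the perimeter ranges (`SOVEREIGN_NETWORKS`) and endpoint names are hypothetical. Actual enforcement belongs in network controls such as firewalls and egress policies; a check like this only flags configuration drift.

```python
import ipaddress

# Hypothetical sovereign perimeter: the private address ranges of the
# national data center. Replace with the ranges your network team owns.
SOVEREIGN_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def inside_perimeter(ip: str) -> bool:
    """Return True if the address belongs to one of the sovereign networks."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in SOVEREIGN_NETWORKS)


def endpoints_outside_perimeter(endpoints: dict[str, str]) -> list[str]:
    """Return the names of endpoints whose resolved IP is outside the perimeter.

    `endpoints` maps a logical endpoint name to an already-resolved IP
    address, so the audit itself makes no network calls.
    """
    return [name for name, ip in endpoints.items() if not inside_perimeter(ip)]


if __name__ == "__main__":
    # Hypothetical configuration; 203.0.113.0/24 is a documentation range
    # standing in for a public cloud API.
    config = {
        "offline_store": "10.20.0.15",
        "online_store": "10.20.0.31",
        "public_llm_api": "203.0.113.7",
    }
    print(endpoints_outside_perimeter(config))  # ['public_llm_api']
```

Running the audit in a CI pipeline against every configuration change would surface an accidental external dependency before any data moves.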

Operational sovereignty (the control)

Principle: The "control plane" must be local. Critical workflows cannot rely on a software-as-a-service (SaaS) console hosted on another continent to manage compute resources or user access. A sovereign platform requires a self-contained control plane that handles identity and access management (IAM) and resource orchestration entirely within the local perimeter.

Technical solution

Our solution is built on a layered architecture where Red Hat AI serves as the unifying sovereign platform, orchestrating the training capabilities of Kubeflow and the data management of Feast.

This solution is built on open source standards, specifically Red Hat OpenShift, which provides a Kubernetes foundation, and the Kubeflow project. By using the included components (Model Registry, KServe, Kubeflow Pipelines, Kubeflow Trainer, and Feast for feature serving), organizations can maintain complete ownership of their technology stack. This transparency allows organizations to inspect the code for vulnerabilities and contribute directly to the projects' roadmaps. Our focus here is on how Kubeflow Trainer and Feast support these sovereignty requirements.

The open blueprint for AI sovereignty: Red Hat AI

To achieve true sovereignty, the underlying platform must be as trustworthy as the data it processes. Red Hat AI delivers a hardened, enterprise-grade foundation that addresses the specific needs of protected, self-contained AI factories.

Red Hat AI provides complete infrastructure independence. It supports deployment on air-gapped bare metal, private clouds, or trusted sovereign cloud partners. This allows an organization to choose its own hardware vendors (e.g., NVIDIA, Intel, AMD) and retain absolute authority over service continuity.

- Trusted software supply chain: Sovereignty begins with the source. Red Hat AI provides a catalog of certified, vulnerability-scanned, and digitally signed AI tools, helping ensure that the software running within your air-gapped perimeter is free from known vulnerabilities, a critical requirement for national security.

- Unified MLOps control plane: The platform consolidates the fragmented AI technology stack into a single interface. It helps manage the complex dependencies between the operating system (Red Hat Enterprise Linux), the hardware (GPUs), and the application layer (Kubeflow/Feast), so data scientists can focus on modeling rather than infrastructure plumbing.

- Scalable hardware abstraction: Whether running on bare-metal racks or a virtualized private cloud, Red Hat AI abstracts the physical resources. It uses operators to automatically tune and expose specialized hardware, like the GPUs in a national supercomputer, enabling strong multitenancy without exposing complexity to the user.
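To illustrate what hardware abstraction means for a workload definition, here is a minimal sketch in which a job simply asks for "N accelerators" and the only vendor-specific detail, the Kubernetes extended resource name a vendor's device plugin advertises, lives in one lookup table. The table is an assumption: `nvidia.com/gpu` and `amd.com/gpu` are the usual upstream device-plugin names, while the Intel entry should be checked against the plugin actually installed in your cluster. The `accelerator_resources` helper is hypothetical, not an OpenShift AI API.

```python
# Assumed mapping from accelerator vendor to the extended resource name its
# Kubernetes device plugin advertises. Verify the Intel entry for your cluster.
ACCELERATOR_RESOURCES = {
    "nvidia": "nvidia.com/gpu",
    "amd": "amd.com/gpu",
    "intel": "gpu.intel.com/i915",
}


def accelerator_resources(vendor: str, count: int) -> dict:
    """Build a container `resources` block requesting `count` accelerators.

    Kubernetes does not overcommit extended resources, so requests and
    limits are set to the same value.
    """
    if count < 1:
        raise ValueError("count must be a positive integer")
    try:
        resource_name = ACCELERATOR_RESOURCES[vendor.lower()]
    except KeyError:
        raise ValueError(f"unsupported accelerator vendor: {vendor!r}") from None
    quantity = str(count)
    return {
        "requests": {resource_name: quantity},
        "limits": {resource_name: quantity},
    }


if __name__ == "__main__":
    # The same workload definition moves between vendors by changing one argument.
    print(accelerator_resources("NVIDIA", 8))
    print(accelerator_resources("amd", 8))
```

Keeping vendor specifics in one table is what makes a multi-vendor strategy practical: switching suppliers changes a lookup entry, not every workload.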

On top of this secure foundation sits Red Hat OpenShift AI. As a distributed AI platform within the Red Hat AI portfolio, OpenShift AI allows organizations to build, tune, deploy, and manage AI models and applications. It acts as the central nervous system that orchestrates 3 critical, integrated capabilities: a high-performance training engine, a precise data management layer, and an optimized model serving framework.

Integrated compute: Kubeflow Trainer

For a sovereign AI factory, relying on public cloud infrastructure is often not an option due to strict control and data residency requirements. To maintain true sovereignty, you must own and operate the hardware. However, this independence brings the responsibility of managing that hardware effectively: scheduling complex distributed jobs, handling node failures, and using high-value supercomputing assets efficiently.

Kubeflow Trainer (a component of OpenShift AI) solves this operational paradox. It brings cloud-native ease of use to your private infrastructure, serving as the high-performance engine that streamlines distributed training on Kubernetes. It replaces fragmented workflows with the unified TrainJob API, allowing data scientists to scale frameworks like PyTorch and TensorFlow without rewriting complex infrastructure code.
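For a sense of what "without rewriting complex infrastructure code" looks like in practice, the following sketch builds a TrainJob manifest as a Python dictionary and prints it as JSON, a format that `kubectl apply -f` and `oc apply -f` both accept. It assumes the upstream Kubeflow Trainer `trainer.kubeflow.org/v1alpha1` API and a training runtime named `torch-distributed`; the runtime name, image reference, and `make_trainjob` helper are illustrative assumptions, so check field names and available runtimes against the Trainer version in your OpenShift AI installation.

```python
import json


def make_trainjob(name: str, image: str, num_nodes: int, gpus_per_node: int) -> dict:
    """Build a minimal TrainJob manifest (assumed upstream v1alpha1 fields)."""
    gpus = str(gpus_per_node)
    return {
        "apiVersion": "trainer.kubeflow.org/v1alpha1",
        "kind": "TrainJob",
        "metadata": {"name": name},
        "spec": {
            # Reference a platform-provided runtime (assumed name) instead of
            # hand-writing launcher, rendezvous, and networking setup.
            "runtimeRef": {"name": "torch-distributed"},
            "trainer": {
                "image": image,         # pulled from an internal registry
                "numNodes": num_nodes,  # number of training nodes to launch
                "resourcesPerNode": {
                    "requests": {"nvidia.com/gpu": gpus},
                    "limits": {"nvidia.com/gpu": gpus},
                },
            },
        },
    }


if __name__ == "__main__":
    job = make_trainjob(
        name="health-llm-finetune",
        image="registry.internal.example/ministry/llm-train:1.0",  # hypothetical
        num_nodes=4,
        gpus_per_node=8,
    )
    print(json.dumps(job, indent=2))
```

The data scientist states only how many nodes and GPUs are needed; the referenced runtime supplies the distributed-training plumbing.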

- Simplification: By abstracting the underlying sovereign infrastructure, it provides a single, consistent interface for massive distributed training tasks.

- Reliability: Built on the Kubernetes JobSet API, it treats all the nodes of a distributed training job as a single all-or-nothing group: if one node fails, the group is handled as a unit rather than leaving the remaining workers stranded. This helps reduce resource waste, since large-scale training jobs either run completely or restart cleanly.

- Integration: It natively integrates with Kueue (part of OpenShift AI's scheduling stack) to manage job quotas and queuing, dynamically allocating GPU resources from the underlying OpenShift node pool so national compute assets are used most efficiently.
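The quota and all-or-nothing behavior described above can be illustrated with a deliberately simplified model: a fixed GPU quota, a FIFO queue of jobs, and a rule that a job is admitted only if all of its workers fit at once. This is a sketch of the idea, not Kueue's actual algorithm or API; the job names and quota are hypothetical. Kueue itself expresses quotas through resources such as ClusterQueue and LocalQueue and offers further policies, such as cohorts for borrowing unused quota.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    workers: int
    gpus_per_worker: int

    @property
    def total_gpus(self) -> int:
        return self.workers * self.gpus_per_worker


def admit(queue: list[Job], gpu_quota: int) -> tuple[list[str], list[str]]:
    """Admit whole jobs in FIFO order while they fit in the remaining quota.

    A job is admitted only if every one of its workers fits (all-or-nothing),
    so no job starts with a partial set of workers holding GPUs idle.
    Jobs that do not fit stay pending; later, smaller jobs may still be admitted.
    """
    free = gpu_quota
    admitted: list[str] = []
    pending: list[str] = []
    for job in queue:
        if job.total_gpus <= free:
            free -= job.total_gpus
            admitted.append(job.name)
        else:
            pending.append(job.name)
    return admitted, pending


if __name__ == "__main__":
    queue = [
        Job("genomics-pretrain", workers=4, gpus_per_worker=8),  # 32 GPUs
        Job("llm-full-finetune", workers=8, gpus_per_worker=8),  # 64 GPUs
        Job("epi-forecast", workers=2, gpus_per_worker=8),       # 16 GPUs
    ]
    print(admit(queue, gpu_quota=64))
    # (['genomics-pretrain', 'epi-forecast'], ['llm-full-finetune'])
```

Letting a smaller job pass a larger blocked one keeps GPUs busy but can delay large jobs; a strict FIFO policy makes the opposite trade-off. Kueue exposes a similar choice through its StrictFIFO and BestEffortFIFO queueing strategies.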

Sovereign data: Feast Feature Store

While true data sovereignty requires a comprehensive data strategy, a specialized component is needed to bridge the gap between raw data and model consumption. Complementing the compute engine, Feast serves as the solution's "memory." Running on top of OpenShift, Feast decouples the model from the raw data infrastructure to improve compliance and reproducibility.

Feast enforces point-in-time correctness: each training example is built only from the feature values that were available at that example's historical moment, which prevents data leakage and enables full auditability.

- Offline store (e.g., MinIO): It connects securely to the air-gapped S3-compatible object store to handle high-throughput historical data for training.

- Online store (e.g., Redis): It manages low-latency features for inference, so real-time decisions are made within the sovereign perimeter.

- Feature registry: It provides a single source of truth for feature definitions, so critical metrics (e.g., "Patient Age") are calculated identically by every data scientist across the platform, maintaining the integrity of the sovereign intelligence.
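Point-in-time correctness is easiest to see in a small example. The following is a plain-Python illustration of the join semantics, not Feast's implementation: for each training event, it picks the most recent feature value whose timestamp is not later than the event, so values recorded afterward cannot leak into the training row. In Feast, this retrieval is what `FeatureStore.get_historical_features` performs against the offline store; Feast also supports options such as feature TTLs that this sketch ignores. The patient IDs and values are made up.

```python
from bisect import bisect_right
from datetime import datetime


def point_in_time_join(events, feature_rows):
    """Attach to each event the latest feature value known at the event time.

    events:       list of (entity_id, event_ts)
    feature_rows: list of (entity_id, feature_ts, value)
    Returns a list of (entity_id, event_ts, value), with value None when no
    feature row existed yet for that entity at event_ts.
    """
    # Build a per-entity history sorted by feature timestamp.
    history: dict = {}
    for entity, ts, value in sorted(feature_rows, key=lambda row: row[1]):
        timestamps, values = history.setdefault(entity, ([], []))
        timestamps.append(ts)
        values.append(value)

    joined = []
    for entity, event_ts in events:
        timestamps, values = history.get(entity, ([], []))
        # Count rows with ts <= event_ts; rows after event_ts are "future"
        # data and are excluded to prevent leakage.
        i = bisect_right(timestamps, event_ts)
        joined.append((entity, event_ts, values[i - 1] if i else None))
    return joined


if __name__ == "__main__":
    features = [
        ("p1", datetime(2024, 1, 1), 120),
        ("p1", datetime(2024, 3, 1), 135),
        ("p2", datetime(2024, 2, 1), 90),
    ]
    events = [
        ("p1", datetime(2024, 2, 15)),  # sees the January value, not March
        ("p1", datetime(2024, 3, 5)),   # sees the March value
        ("p2", datetime(2024, 1, 10)),  # no value recorded yet
    ]
    for row in point_in_time_join(events, features):
        print(row)
```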

Completing the lifecycle: Sovereign model serving

True sovereignty extends beyond training and must encompass the entire MLOps lifecycle. Once a model is trained by Kubeflow, it must be deployed to process live data without ever leaving the secure perimeter.

OpenShift AI closes this loop with integrated model serving capabilities. By using KServe, together with vLLM and llm-d for distributed inference, organizations can deploy their model artifacts immediately to the same air-gapped, sovereign cluster where they were trained. This means:

- Inference stays in-house: With vLLM and llm-d, user queries (e.g., a doctor's diagnostic request) and live data streams are processed locally, never traversing a public API. These technologies optimize GPU memory usage via PagedAttention and allow massive foundation models to be sharded across multiple smaller GPUs. This makes it financially and technically feasible for organizations to host high-performance generative AI (gen AI) on their own existing infrastructure, avoiding the need to rent expensive, non-sovereign cloud APIs.

- Unified sovereignty: From hardware acceleration to model monitoring, the entire flow (Gather with Feast → Train with Kubeflow → Serve with OpenShift AI) executes on sovereign infrastructure, under your control.

This capability connects the "develop" phase directly to the "integrate" and "monitor" phases, meaning that a regulated entity can run an end-to-end, world-class AI factory entirely in-house.
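A rough back-of-the-envelope calculation shows why sharding and paged KV-cache allocation matter for hosting large models on existing hardware. The sketch below uses assumed figures (a 70-billion-parameter model stored in 16-bit precision, 80 GB GPUs, and a KV-cache block size of 16 tokens) and counts only model weights and KV-cache blocks. Real deployments also need memory for activations and runtime overhead, so treat the numbers as lower bounds, not as a sizing guide.

```python
import math


def weight_gb_per_gpu(params_billion: float, bytes_per_param: int, num_gpus: int) -> float:
    """Approximate weight memory per GPU when weights are split evenly across GPUs.

    1e9 parameters x bytes_per_param bytes = bytes_per_param GB (decimal GB),
    so the total is params_billion * bytes_per_param GB.
    """
    total_gb = params_billion * bytes_per_param
    return total_gb / num_gpus


def kv_blocks_needed(num_tokens: int, block_size: int = 16) -> int:
    """Fixed-size KV-cache blocks a sequence occupies under paged allocation.

    Blocks are allocated on demand as the sequence grows, so at most
    block_size - 1 token slots are wasted per sequence.
    """
    return math.ceil(num_tokens / block_size)


if __name__ == "__main__":
    # Assumed: 70B parameters in 16-bit precision (2 bytes each).
    print(weight_gb_per_gpu(70, 2, 1))  # 140.0 GB: too large for one 80 GB GPU
    print(weight_gb_per_gpu(70, 2, 4))  # 35.0 GB per GPU when sharded across 4
    # A 100-token sequence uses 7 blocks (112 slots) instead of a region
    # reserved up front for the maximum context length.
    print(kv_blocks_needed(100))        # 7
```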

Architecture

The diagram below illustrates how OpenShift AI acts as the sovereign platform layer, encapsulating the orchestration, security, and hardware management required to run Kubeflow and Feast in an air-gapped environment.

Wrapping up

Achieving sovereign AI requires more than local hardware: it requires a software architecture that respects the importance of data and the complexity of modern AI workflows.

By using technologies like Kubeflow Trainer and Feast within OpenShift AI, organizations can build a sovereign AI factory that is:

- Hardened by design: Data flows directly within the protected perimeter from storage to compute, governed by Red Hat's enterprise-grade role-based access control (RBAC) and optional Federal Information Processing Standards (FIPS) compliance.

- Scalable: Distributed training on Kubernetes, with automated hardware management provided by OpenShift AI and Kubeflow Trainer.

- Reproducible: Feature stores support auditable data lineage.

This solution empowers nations and enterprises to harness the power of AI without compromising their independence, turning the challenge of sovereignty into a competitive advantage.

Ready to build your own sovereign AI factory?

- Get technical: Want to see the code behind the architecture? There's a detailed technical tutorial on the Red Hat Developer blog: Improve RAG retrieval with Feast and Kubeflow Trainer.

- Discover the platform: If you want a higher-level overview, visit Red Hat OpenShift AI to explore how our enterprise-grade platform helps organizations build, deploy, and manage sovereign and protected AI applications at scale.


About the author

Umberto Manganiello has been a Staff Engineer at Red Hat since 2025. Prior to this, he spent over 15 years as a Principal Architect and Engineer in the financial and telecommunications sectors. He specializes in designing high-availability systems that operate at massive scale, with deep expertise in Kubernetes, Kafka, and cloud modernization. Currently, he applies this architectural discipline to the challenges of MLOps, with a focus on gen AI, OpenShift AI, and Kubeflow, blending cloud-native resilience with AI model training workflows.
