In part one of our series about Distributed Compute Nodes (DCN), we described how the storage backends are deployed at each site and how to manage images at the edge. What about Cinder, the OpenStack service that actually manages persistent block storage?

Red Hat OpenStack Platform has provided an Active/Passive (A/P) Highly Available (HA) Cinder volume service through Pacemaker running on the controllers.

Because DCN is a distributed architecture, the Cinder volume service also needs to be aligned with this model. To isolate sites and improve scalability and resilience, a dedicated set of Cinder volume services now runs at each site and manages only the local-to-site backend (e.g., the local Ceph cluster).

This brings several benefits:

Consistency with the rest of the architecture, which dedicates a storage backend and services to each site.

Limited impact on other sites when a Cinder volume service issue occurs at one site.

Improved scalability and performance, because the load is distributed: each set of services only manages its local backend.

More deployment flexibility: any local-to-site change to the Cinder volume configuration only requires applying the update at that site.

In terms of deployment, Pacemaker is used at the central site. However, it is not used at the edge, so Cinder’s newer Active/Active (A/A) HA deployment model is used there instead.

For this reason, any edge Cinder volume driver must have true A/A support, like the Ceph RADOS Block Device (RBD) driver. Active/Active volume services require a Distributed Lock Manager (DLM), which is provided by an etcd service deployed at each site.
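In Cinder's configuration, A/A boils down to two settings on each clustered cinder-volume service: a cluster name (the `cluster` option in `[DEFAULT]`) and a DLM endpoint (`backend_url` in `[coordination]`, pointing at etcd). The sketch below checks that a given cinder.conf carries both. It is a minimal illustration under stated assumptions: the helper names `ini_get` and `check_cinder_aa` are hypothetical, director renders this file for you, and the exact values and file location depend on your deployment.

```shell
# Hypothetical helpers: check that a cinder.conf has the two settings
# Cinder Active/Active relies on (a cluster name and a DLM backend URL).

# ini_get FILE SECTION KEY: print the value of KEY in [SECTION], if set.
ini_get() {
  awk -v section="$2" -v key="$3" '
    /^[ \t]*\[/ {                          # section header such as [DEFAULT]
      s = substr($0, index($0, "[") + 1)
      s = substr(s, 1, index(s, "]") - 1)
      in_section = (s == section)
      next
    }
    in_section && /=/ && !/^[ \t]*[#;]/ {  # key = value, skipping comments
      n = index($0, "=")
      k = substr($0, 1, n - 1)
      v = substr($0, n + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }
  ' "$1"
}

# check_cinder_aa FILE: succeed only if FILE names a cluster and a DLM.
check_cinder_aa() {
  local conf="$1" cluster dlm
  cluster=$(ini_get "$conf" DEFAULT cluster)
  dlm=$(ini_get "$conf" coordination backend_url)
  if [ -z "$cluster" ]; then
    echo "not clustered: [DEFAULT] cluster is unset"
    return 1
  fi
  if [ -z "$dlm" ]; then
    echo "cluster $cluster has no DLM: [coordination] backend_url is unset"
    return 1
  fi
  echo "cluster=$cluster dlm=$dlm"
}
```

Point it at the cinder.conf used by an edge cinder-volume container; where that file lives on your nodes is deployment-specific.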

To summarize, when Cinder is deployed with DCN, the Cinder API and scheduler services run only at the central location, while a dedicated set of cinder-volume services runs at each site in its own Cinder Availability Zone (AZ).

This diagram shows the central site running the Cinder API, scheduler and A/P volume services, as well as three edge sites, each running a dedicated set of A/A Cinder volume services. Each site is isolated within its own AZ. The Cinder database remains at the central site.

Checking the Cinder volume status

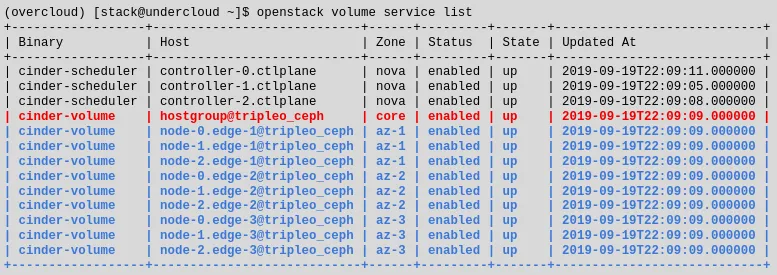

We can check the actual Cinder volume status report with the CLI

In the command output above, the lines in black and red represent the services running at the central site including the A/P Cinder volume service.

The lines in blue represent all of the DCN sites (in the example there are three sites, each with its own AZ). A variation of the above command is cinder --os-volume-api-version 3.11 service-list. When that microversion is specified, we get the same output but with an extra column for the cluster name.
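Because each DCN site has its own AZ, a per-AZ count of cinder-volume services makes a quick sanity check. The sketch below assumes the openstack client's value output (`openstack volume service list --service cinder-volume -f value -c Zone -c State`, which prints one "ZONE STATE" pair per line) and a hypothetical `az_summary` helper that tallies up and down services per zone.

```shell
# az_summary: read "ZONE STATE" pairs on stdin and print, per availability
# zone (in first-seen order), how many services are up and how many are not.
az_summary() {
  awk '
    NF >= 2 {
      zone = $1; state = $2
      if (!(zone in seen)) { seen[zone] = 1; order[++n] = zone }
      if (state == "up") { up[zone]++ } else { down[zone]++ }
    }
    END {
      for (i = 1; i <= n; i++) {
        z = order[i]
        printf "%s up=%d down=%d\n", z, up[z], down[z]
      }
    }
  '
}

# Example feed (requires credentials for the deployment):
#   openstack volume service list --service cinder-volume \
#     -f value -c Zone -c State | az_summary
```

Any zone reporting down services is a good candidate for the cluster commands described next.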

To help manage Cinder A/A, new cluster commands (such as cluster-list and cluster-show) are available in the CLI with microversion 3.7 and newer.

The cinder cluster-show output shows detailed information about a particular cluster. The cinder cluster-list output shows a quick health summary of all the clusters: whether they are up or down, the number of volume services that form each cluster, and how many of those services are down. That last number matters because a cluster still reports as up even when two of its three services are down.

Below is an example of cinder cluster-list --detail output, here run with microversion 3.11 (any microversion from 3.7 on supports the cluster commands).

$ cinder --os-volume-api-version 3.11 cluster-list --detail
+-------------------+---------------+-------+---------+-----------+----------------+----------------------------+-----------------+----------------------------+----------------------------+
| Name              | Binary        | State | Status  | Num Hosts | Num Down Hosts | Last Heartbeat             | Disabled Reason | Created At                 | Updated at                 |
+-------------------+---------------+-------+---------+-----------+----------------+----------------------------+-----------------+----------------------------+----------------------------+
| dcn1@tripleo_ceph | cinder-volume | up    | enabled | 3         | 0              | 2020-12-17T16:12:21.000000 | -               | 2020-12-15T23:29:00.000000 | 2020-12-15T23:29:01.000000 |
| dcn2@tripleo_ceph | cinder-volume | up    | enabled | 3         | 0              | 2020-12-15T17:33:37.000000 | -               | 2020-12-16T00:16:11.000000 | 2020-12-16T00:16:12.000000 |
| dcn3@tripleo_ceph | cinder-volume | up    | enabled | 3         | 0              | 2020-12-16T19:30:26.000000 | -               | 2020-12-16T00:18:07.000000 | 2020-12-16T00:18:10.000000 |
+-------------------+---------------+-------+---------+-----------+----------------+----------------------------+-----------------+----------------------------+----------------------------+
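The same table can feed a simple health check. The sketch below is a hypothetical `cluster_health` helper (not part of the Cinder client) that reads the --detail output shown above, prints one summary line per cluster, and returns non-zero if any cluster is not up or reports down hosts. It assumes the column order of that --detail table.

```shell
# cluster_health: read `cinder cluster-list --detail` output on stdin, print
# one line per cluster, and exit non-zero if any cluster is not up or has
# down hosts. Column positions follow the --detail table layout.
cluster_health() {
  awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    /^[|]/ {                               # data and header rows start with |
      name = trim($2)
      if (name == "Name") next             # skip the header row
      state = trim($4); hosts = trim($6); down = trim($7)
      printf "%s state=%s hosts=%s down=%s\n", name, state, hosts, down
      if (state != "up" || down + 0 > 0) bad = 1
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

For example: `cinder --os-volume-api-version 3.11 cluster-list --detail | cluster_health || echo "a cluster needs attention"`.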

Deployment overview with director

Now that we’ve described the overall design, how do we deploy this architecture in a flexible and scalable way?

A single instance of Red Hat OpenStack Platform director running at the central location is able to deploy and manage the OpenStack and Ceph deployments at all sites.

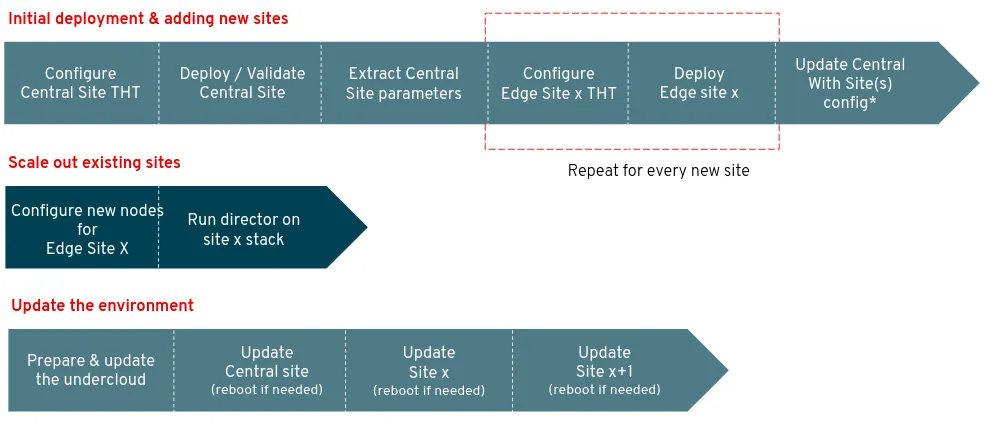

In order to simplify management and scale, each physical site maps to a separate logical Heat stack using the director’s multi-stack feature.

The first stack is always for the central site, and each subsequent stack is for one edge site. After all of the stacks are deployed, the central stack is updated so that Glance can add the other sites as new stores (see part 1 of this blog series).
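The ordering just described can be written down as a dry-run plan. This is a sketch only: `site_cmd` and `initial_plan` are hypothetical helpers that print, rather than run, `openstack overcloud deploy --stack ...` commands, and the environment file names (`<site>/site-env.yaml`, `central/glance-edge-stores.yaml`) are placeholders for whatever per-site files your deployment actually uses.

```shell
# Print (rather than run) director commands in the stack order described
# above. Environment file names are hypothetical placeholders.

# site_cmd SITE [EXTRA_ENV...]: deploy/update command for one site's stack.
site_cmd() {
  local site="$1" cmd env
  shift
  cmd="openstack overcloud deploy --stack $site --templates -e $site/site-env.yaml"
  for env in "$@"; do
    cmd="$cmd -e $env"                     # append any extra environment files
  done
  echo "$cmd"
}

# initial_plan CENTRAL EDGE...: central first, then one stack per edge
# site, then a central update that adds the edge sites as Glance stores.
initial_plan() {
  local central="$1" edge
  shift
  site_cmd "$central"
  for edge in "$@"; do
    site_cmd "$edge"
  done
  site_cmd "$central" "$central/glance-edge-stores.yaml"
}
```

Updating or scaling out a single edge site then reduces to one command for that site's stack, for example the output of `site_cmd dcn1`, without touching the central stack.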

When the overall deployment needs software updates, each site is updated individually. Workloads at the edge sites continue to run if the central control plane becomes unavailable, so each site may be updated separately.

As seen in the darker sections of the flow chart above, scaling out a site by adding more compute or storage capacity doesn’t require updating the central site: only that edge site’s stack needs to be updated to add the new nodes. Let’s look next at how edge sites are deployed.

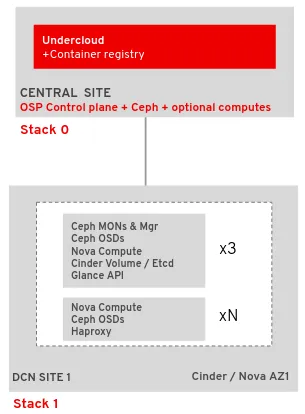

In the diagram above, the central site is hosting the three OpenStack controllers, a dedicated Ceph cluster and an optional set of compute nodes.

The DCN site is running two types of hyper-converged compute nodes:

The first type is called DistributedComputeHCI. In addition to hosting the services found on a standard Compute node, it hosts the Ceph Monitor, Manager and OSDs.

It also hosts the services required for the Cinder volume service, including the DLM provided by etcd. Finally, this node type also hosts the Glance API service. Each edge site that has storage should have at least three nodes of this type so that Ceph can establish a quorum.

The second type of node is called DistributedComputeHCIScaleOut, and it is like a traditional hyper-converged node (Nova and Ceph OSDs). To reach the local-to-site Glance API service, the Nova compute service is configured to connect to a local HAProxy service, which in turn proxies image requests to the Glance services running on the DistributedComputeHCI nodes.

If a DCN site needs more compute capacity but no additional storage, the DistributedComputeScaleOut role may be used instead; this non-HCI version does not contain any Ceph services but is otherwise the same.

Both of these node types end in “ScaleOut” because, after the three DistributedComputeHCI nodes are deployed, these node types may be used to scale out the site.
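The node-count rule above lends itself to a pre-flight check. The sketch below uses a hypothetical `edge_roles_cmd` helper: it refuses an edge site with storage that has fewer than three DistributedComputeHCI nodes and otherwise prints an `openstack overcloud roles generate` command listing only the roles the site uses. The `<site>/roles_data.yaml` output path is an assumed layout.

```shell
# edge_roles_cmd SITE HCI HCI_SCALEOUT SCALEOUT
# Sketch for an edge site with storage: require at least three
# DistributedComputeHCI nodes (Ceph monitor quorum), then print a roles
# generation command containing only the roles that have nodes.
edge_roles_cmd() {
  local site="$1" hci="$2" hci_so="$3" so="$4" roles
  if [ "$hci" -lt 3 ]; then
    echo "error: $site has $hci DistributedComputeHCI nodes; at least 3 are needed" >&2
    return 1
  fi
  roles="DistributedComputeHCI"
  if [ "$hci_so" -gt 0 ]; then roles="$roles DistributedComputeHCIScaleOut"; fi
  if [ "$so" -gt 0 ]; then roles="$roles DistributedComputeScaleOut"; fi
  echo "openstack overcloud roles generate -o $site/roles_data.yaml $roles"
}
```

For example, `edge_roles_cmd dcn1 3 0 4` describes a site with the three mandatory HCI nodes plus four compute-only scale-out nodes.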

End user experience

So far we have only covered the infrastructure design and operator experience.

What is the user experience once Red Hat OpenStack Platform DCN is deployed and ready to run workloads?

Let’s have a closer look at what it’s like to use the architecture. We’ll run some typical day-to-day user commands: upload an image to multiple stores, spin up a boot-from-volume workload, take a snapshot, and then copy the snapshot to another site.

Import an image to DCN sites

First, we confirm that we have three separate stores.

glance stores-info
+----------+----------------------------------------------------------------------------------+
| Property | Value                                                                            |
+----------+----------------------------------------------------------------------------------+
| stores   | [{"default": "true", "id": "central", "description": "central rbd glance         |
|          | store"}, {"id": "http", "read-only": "true"}, {"id": "dcn0", "description":      |
|          | "dcn0 rbd glance store"}, {"id": "dcn1", "description": "dcn1 rbd glance         |
|          | store"}]                                                                         |
+----------+----------------------------------------------------------------------------------+

In the above example we can see that there’s the central store and two edge stores (dcn0 and dcn1). Next, we’ll import an image into all three stores by passing --stores central,dcn0,dcn1 (the CLI also accepts the --all-stores true option to import to all stores).

glance image-create-via-import --disk-format qcow2 --container-format bare --name cirros --uri http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img --import-method web-download --stores central,dcn0,dcn1

Glance will automatically convert the image to RAW. After the image is imported into Glance we can get its ID.

IMG_ID=$(openstack image show cirros -c id -f value)

We can then check which sites have a copy of our image by running a command like the following:

openstack image show $IMG_ID | grep properties

The properties field in the output contains the image metadata, including the stores field, which lists all three stores: central, dcn0, and dcn1.
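Because the import runs in the background, a script may want to check which requested stores still lack a copy before moving on. Below is a minimal sketch with a hypothetical `missing_stores` helper that compares two comma-separated store lists; how you obtain the image's current stores (the commented example parses `glance image-show`) is an assumption about the client output.

```shell
# missing_stores WANTED HAVE: both arguments are comma-separated store IDs,
# e.g. "central,dcn0,dcn1". Print each wanted store absent from HAVE, one
# per line; return 0 only if nothing is missing.
missing_stores() {
  local have s rc=0
  have=",$(printf '%s' "$2" | tr -d ' '),"  # pad with commas for exact matches
  local IFS=','
  for s in $1; do
    case "$have" in
      *",$s,"*) ;;                          # this store already has a copy
      *) printf '%s\n' "$s"; rc=1 ;;
    esac
  done
  return "$rc"
}

# Example (assumes `glance image-show` prints a "stores" row):
#   have=$(glance image-show "$IMG_ID" | awk -F'|' '$2 ~ /^ *stores *$/ {print $3}')
#   missing_stores central,dcn0,dcn1 "$have"
```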

Create a volume from an image and boot it at a DCN site

Using the same image ID from the previous example, create a volume from the image at the dcn0 site. To specify we want the dcn0 site, we use the dcn0 availability zone.

openstack volume create --size 8 --availability-zone dcn0 pet-volume-dcn0 --image $IMG_ID

Once the volume is created, identify its ID.

VOL_ID=$(openstack volume show -f value -c id pet-volume-dcn0)

Create a virtual machine that uses this volume as its root device by passing the volume ID. This example assumes a flavor, key, security group and network have already been created. Again, we specify the dcn0 availability zone so that the instance boots at dcn0.

openstack server create --flavor tiny --key-name dcn0-key --network dcn0-network --security-group basic --availability-zone dcn0 --volume $VOL_ID pet-server-dcn0

From one of the DistributedComputeHCI nodes at the dcn0 site, we can run an rbd command inside the Ceph monitor container to query the volumes pool directly. This is for demonstration purposes only; a regular user would not be able to do so.

sudo podman exec ceph-mon-$HOSTNAME rbd --cluster dcn0 -p volumes ls -l
NAME                                         SIZE   PARENT                                             FMT  PROT  LOCK
volume-28c6fc32-047b-4306-ad2d-de2be02716b7  8 GiB  images/8083c7e7-32d8-4f7a-b1da-0ed7884f1076@snap  2          excl

The above example shows that the volume is a copy-on-write (CoW) clone of the image in the images pool. The VM therefore boots quickly: the image data is referenced rather than copied into the volume up front, and only data that changes needs to be written to the volume.
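To confirm the clone relationship without reading the table by eye, the hypothetical `cow_clones` helper below filters `rbd ls -l` output down to images that have a parent snapshot. It is a sketch that assumes the column layout shown above; because SIZE prints as two words ("8 GiB"), it finds the parent by its pool/image@snapshot shape rather than by column position.

```shell
# cow_clones: read `rbd ls -l` output on stdin and print "NAME PARENT" for
# each image that has a parent snapshot, i.e. each copy-on-write clone.
cow_clones() {
  awk '
    $1 == "NAME" { next }                   # skip the header row
    {
      for (i = 2; i <= NF; i++) {
        if ($i ~ "^[^/]+/[^@]+@") {         # pool/image@snapshot
          print $1, $i
          next
        }
      }
    }
  '
}

# Example, on a DistributedComputeHCI node at dcn0:
#   sudo podman exec ceph-mon-$HOSTNAME rbd --cluster dcn0 -p volumes ls -l | cow_clones
```

Since the Glance RBD store typically names its RBD images after the Glance image ID, piping the result through `grep -F "images/$IMG_ID@"` is one way to check that the volume was cloned from the image imported earlier.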

Copy a snapshot of the instance to another site

Create a new image on the dcn0 site that contains a snapshot of the instance created in the previous section.

openstack server image create --name cirros-snapshot pet-server-dcn0

Get the image ID of the new snapshot.

IMAGE_ID=$(openstack image show cirros-snapshot -f value -c id)

Copy the image from the dcn0 site to the central site.

glance image-import $IMAGE_ID --stores central --import-method copy-image

The new image at the central site may now be copied to other sites, used to create new volumes, booted as new instances and snapshotted.
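The copy-image import also runs in the background, so a script that goes on to use the image at the central site should wait for it. The sketch below pairs a generic retry helper, `wait_until`, with an `in_store` predicate; both names are hypothetical, and `in_store` assumes `glance image-show` prints a stores row with comma-separated store IDs.

```shell
# wait_until TRIES DELAY CMD [ARG...]: run CMD until it succeeds, sleeping
# DELAY seconds between attempts. Returns 0 on success, 1 after TRIES failures.
wait_until() {
  local tries="$1" delay="$2" i=1
  shift 2
  until "$@"; do
    if [ "$i" -ge "$tries" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
}

# in_store IMAGE STORE: succeed if glance lists STORE for IMAGE.
# Assumes a "stores" row whose value is a comma-separated list.
in_store() {
  glance image-show "$1" | awk -F'|' -v want="$2" '
    { key = $2; gsub(/ /, "", key) }
    key == "stores" {
      val = $3; gsub(/ /, "", val)
      n = split(val, ids, ",")
      for (i = 1; i <= n; i++) if (ids[i] == want) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}

# Example: poll every 10 seconds, up to 30 times, for the copy to reach central.
#   wait_until 30 10 in_store "$IMAGE_ID" central
```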

Red Hat OpenStack Platform DCN also supports encrypted volumes with Barbican, so volumes can be encrypted at the edge with per-tenant keys while the secrets remain securely stored at the central site (Red Hat recommends using a Hardware Security Module).
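As an illustration of that workflow, the sketch below creates a LUKS volume type and then an encrypted volume at a DCN site by passing that site's availability zone. The volume type flags are modeled on the LUKS example in the upstream Cinder documentation; the type name `LUKS`, the volume name, and the `run`/`DRY_RUN` wrapper are assumptions, and creating the type normally requires admin rights. With DRY_RUN set, the commands are only printed.

```shell
# run CMD...: print CMD instead of executing it when DRY_RUN is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# create_luks_type: one-time (admin) creation of an encrypted volume type.
create_luks_type() {
  run openstack volume type create --encryption-provider luks \
    --encryption-cipher aes-xts-plain64 --encryption-key-size 256 \
    --encryption-control-location front-end LUKS
}

# create_encrypted_volume AZ NAME [SIZE_GB]: encrypted volume at one site.
create_encrypted_volume() {
  run openstack volume create --size "${3:-1}" --type LUKS \
    --availability-zone "$1" "$2"
}
```

For example, `DRY_RUN=1 create_encrypted_volume dcn0 secret-dcn0 8` prints the volume create command for the dcn0 site without contacting the cloud.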

If an image on a particular site is not needed anymore, we can delete it while keeping the other copies. To delete the snapshot taken on dcn0 while keeping its copy in central, we can use the following command:

glance stores-delete $IMAGE_ID --stores dcn0

Conclusion

This concludes our blog series on Red Hat OpenStack Platform Distributed Compute Nodes. You should now have a better understanding of the key edge design considerations and how Red Hat OpenStack Platform implements them, as well as an overview of the deployment and day 2 operations.

Because what matters in the end is the user experience, we concluded with a typical set of steps users would take to manage their workloads at the edge.

For an additional walkthrough of design considerations and a best-practice approach to building an edge architecture, check out the recording from our webinar titled “Your checklist for a successful edge deployment.”

About the authors

Gregory Charot is a Senior Principal Technical Product Manager at Red Hat covering OpenStack Storage, Ceph integration as well as OpenShift core storage and cloud providers integrations. His primary mission is to define product strategy and design features based on customers and market demands, as well as driving the overall productization to deliver production-ready solutions to the market. Open source and Linux-passionate since 1998, Charot worked as a production engineer and system architect for eight years before joining Red Hat in 2014, first as an Architect, then as a Field Product Manager prior to his current role as a Technical Product Manager for the Hybrid Platforms Business Unit.

John Fulton works for OpenStack Engineering at Red Hat and focuses on OpenStack and Ceph integration. He is currently working upstream on the OpenStack Wallaby release on cephadm integration with TripleO. He helps maintain TripleO support for the storage on the Edge use case and also works on deployment improvements for hyper-converged OpenStack/Ceph (i.e. running Nova Compute and Ceph OSD services on the same servers).
