
Storage reliability plays a critical role in managing business-critical applications. A reliable storage solution can help enterprises avoid unnecessary downtime.

As Red Hat OpenShift Container Storage continues to evolve, it was time to verify, in situ, the combined reliability of OpenShift Container Storage and Red Hat OpenShift Container Platform for high availability (HA) on a hardware configuration supported out of the box (as described in the Red Hat OpenShift Container Storage Planning Guide).

And so we did.

Physical layout for benchmarking

The physical layout we chose for benchmarking included:

Software

- VMware vSphere 6.7.0.44000
- OpenShift Container Platform 4.4.3 on the server side
- OpenShift Container Platform 4.4.7 on the client side
- OpenShift Container Storage 4.4.0
- Red Hat Ansible Automation Platform 2.9 for automated UPI deployment

Hardware

- Two physical sites (Site A and Site B)
  - 2 physical servers on Site A
  - 2 physical servers on Site B
- One witness site (Site C)
  - 1 physical server
- 10GbE network connectivity between sites
- Distributed vSAN configuration
  - vSAN Default Storage Policy
  - Accessible from Site A
  - Accessible from Site B

Active workloads during test

It was important to configure the environment so that storage activity would occur continuously, while letting us verify that key components of the OpenShift Container Platform infrastructure remained operational. To do so, we configured the following settings and workloads in the environment:

- The monitoring and alerting solution was configured to use OpenShift Container Storage.
  - ocs-storagecluster-ceph-rbd storage class
- The OpenShift Container Platform internal registry was configured to use OpenShift Container Storage.
  - ocs-storagecluster-cephfs storage class
- S3 workload continuously running (OBC)
  - openshift-storage.noobaa.io storage class
- Block workload continuously running (RWO PVC)
  - ocs-storagecluster-ceph-rbd storage class
- File-based workload continuously running (RWX PVC)
  - ocs-storagecluster-cephfs storage class
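To make the block and file workloads more concrete, here is a minimal sketch of what the two persistent volume claims could look like, built as plain Python dictionaries and printed as JSON. The storage class names and access modes come from the list above; the claim names (`block-workload`, `file-workload`) and the `10Gi` size are hypothetical placeholders, not the values used in the benchmark.

```python
import json


def make_pvc(name, storage_class, access_mode, size):
    """Build a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Claim names and sizes are hypothetical; the storage classes are the ones
# listed in the article.
block_pvc = make_pvc(
    "block-workload", "ocs-storagecluster-ceph-rbd", "ReadWriteOnce", "10Gi"
)
file_pvc = make_pvc(
    "file-workload", "ocs-storagecluster-cephfs", "ReadWriteMany", "10Gi"
)

if __name__ == "__main__":
    for pvc in (block_pvc, file_pvc):
        print(json.dumps(pvc, indent=2))
```

The printed JSON can be saved to a file and applied like any other manifest. The S3 workload uses an object bucket claim rather than a PVC and is not shown here.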

The following schematic illustrates the infrastructure used during our benchmark.

We performed active/passive and active/active site configuration tests.

Active/passive site configuration

Test 1

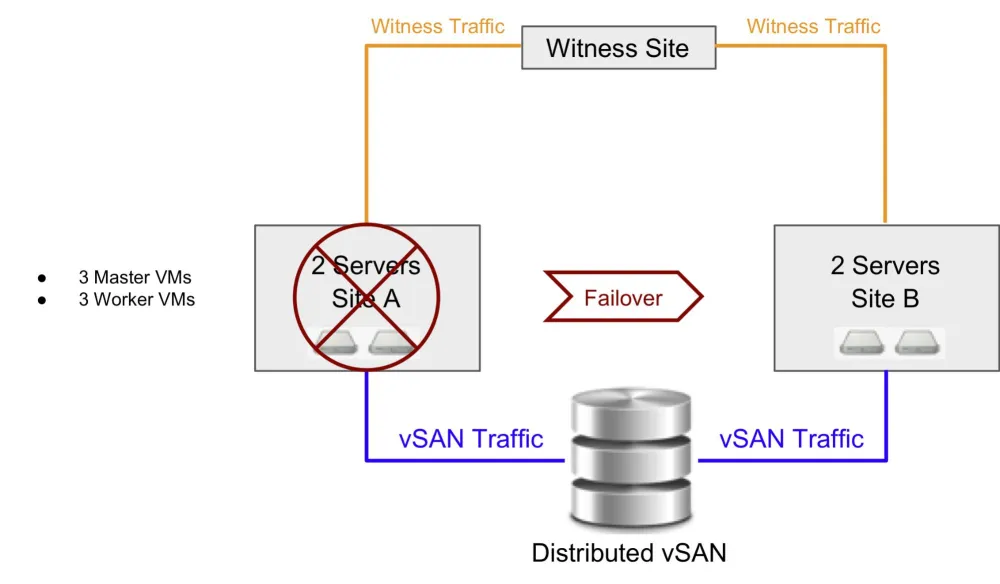

The first test entailed deploying all the virtual machines (VMs) of the OpenShift Container Platform cluster, consisting of three master nodes and three worker nodes, in an active/passive configuration as far as vCenter is concerned. All the OpenShift Container Platform VMs were abruptly isolated by shutting down all network ports of the physical servers at the switch level. This resulted in the following critical condition:

- The witness site lost contact with all the vSphere nodes on Site A.
- All pods running on all OpenShift Container Platform nodes lost contact with each other.

After about 30 seconds, vCenter reported the critical condition and initiated a failover of all the VMs to the surviving physical nodes on the second site. The following diagram depicts the test scenario.

Once vCenter detected the loss of the two physical nodes on the failed site, it initiated the failover of all the VMs to the surviving site. The six virtual machines were restarted in less than 10 minutes.

Active/active site configuration

Test 1

The first test consisted of deploying three VMs on one site (master nodes) and three VMs on the other site (worker nodes) in an active/active configuration as far as vCenter is concerned. All the worker node VMs were abruptly isolated by shutting down all network ports on the physical servers at the switch level. This resulted in the following critical condition:

- The witness site lost contact with all the vSphere nodes on Site B.
- All pods running on the OpenShift Container Platform worker nodes lost contact with each other.
- All OpenShift Container Platform master nodes lost contact with the OpenShift Container Platform worker nodes.

After about 30 seconds, vCenter reported the critical condition and initiated a failover of all the VMs to the surviving physical nodes on the second site. The following diagram depicts the test scenario.

Once vCenter detected the loss of the two physical nodes on the failed site, it initiated the failover of all the VMs to the surviving site. The three VMs were restarted, and the entire cluster was operational in under 10 minutes.

Test 2

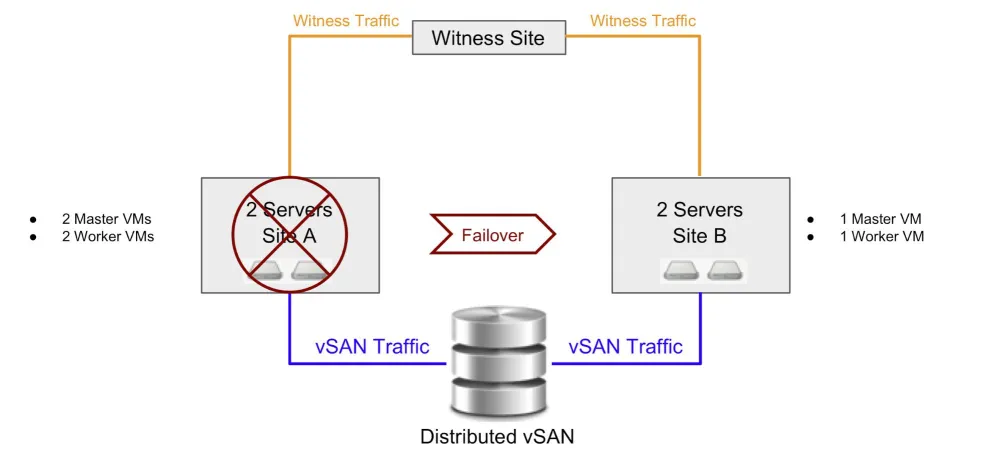

The second test consisted of deploying two VMs on one site (one master node and one worker node) and four VMs on the other site (two master nodes and two worker nodes) in an active/active configuration as far as vCenter is concerned. The two physical servers of the site running one master node and one worker node were abruptly isolated by shutting down all network ports on the physical servers at the switch level. This resulted in the following critical condition:

- The witness site lost contact with all the vSphere nodes on Site B.
- Two OpenShift Container Platform nodes, one master and one worker, were disconnected from the OpenShift Container Platform cluster.

After about 30 seconds, vCenter reported the critical condition and initiated a failover of all the VMs to the surviving physical nodes on the second site. This diagram depicts the test scenario.

Once vCenter detected the loss of the two physical nodes on the failed site, it initiated the failover of all the VMs to the surviving site. The two VMs were restarted, and the cluster was operational in under 10 minutes.

Test 3

The third test consisted of deploying four VMs on one site (two master nodes and two worker nodes) and two VMs on the other site (one master node and one worker node) in an active/active configuration as far as vCenter is concerned. The two physical servers of the site running two master node and two worker node VMs were abruptly isolated by shutting down all network ports on the physical servers at the switch level. This resulted in the following critical condition:

- The witness site lost contact with all the vSphere nodes on Site A.
- Four OpenShift Container Platform nodes, two masters and two workers, were disconnected from the OpenShift Container Platform cluster.

After about 30 seconds, vCenter reported the critical condition and initiated a failover of all the VMs to the surviving physical nodes on the second site. This diagram depicts the test scenario.

Once vCenter detected the loss of the two physical nodes on the failed site, it initiated the failover of all the VMs to the surviving site. The four VMs were restarted, and the entire cluster was operational in under 10 minutes.

Summary

In every test scenario we tried, the OpenShift Container Platform environment and the OpenShift Container Storage environment were restored to operational condition in less than 10 minutes. This table summarizes the tests that were performed.

| Site A | Site B | Comment | Result | Time |
| --- | --- | --- | --- | --- |
| 3 M and 3 W | None | Shutdown Site A | OK | < 10 minutes |
| 3 M | 3 W | Shutdown Site B | OK | < 10 minutes |
| 2 M and 2 W | 1 M and 1 W | Shutdown Site B | OK | < 10 minutes |
| 2 M and 2 W | 1 M and 1 W | Shutdown Site A | OK | < 10 minutes |

Key: M = Master node, W = Worker node
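One way to read the master placements in the table is in terms of majority quorum. The sketch below assumes the three master nodes form a three-member etcd cluster that needs a majority of members reachable, which is the usual arrangement for OpenShift Container Platform 4 but is not something the tests measured directly. The scenario labels are hypothetical names for the four table rows, and the surviving master counts are read from the table.

```python
def quorum_size(members: int) -> int:
    """Smallest majority of a cluster with `members` voting members."""
    return members // 2 + 1


def has_quorum(total: int, reachable: int) -> bool:
    """True when the reachable members still form a majority."""
    return reachable >= quorum_size(total)


TOTAL_MASTERS = 3

# (hypothetical scenario label, masters left running on the surviving site),
# taken from the summary table.
SCENARIOS = [
    ("active/passive test 1", 0),
    ("active/active test 1", 3),
    ("active/active test 2", 2),
    ("active/active test 3", 1),
]

results = {label: has_quorum(TOTAL_MASTERS, left) for label, left in SCENARIOS}

if __name__ == "__main__":
    for label, ok in results.items():
        state = "majority of masters still running" if ok else "no majority until VMs restart"
        print(f"{label}: {state}")
```

Under this assumption, the scenarios that lose two or three masters have no majority until vCenter restarts the VMs on the surviving site, which is consistent with recovery being reported only after the failover completed.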

In all test cases, the only I/Os lost were those in flight. No I/O committed to disk was lost at any point, and both the cluster monitoring solution and the registry were operational as soon as the OpenShift Container Platform cluster regained operational status.

These results demonstrate OpenShift’s reliability in a site-failure disaster recovery scenario, and may also be a good starting point for testing other redundancy projects.

About the author

Jean Charles Lopez, a Senior Solutions Architect at Red Hat, has been in the field of storage for over 30 years. He has dealt with the challenges of each era over different operating systems and in different environments—from OpenStack to OpenShift. He likes to share his passion for storage while promoting open source solutions.

Lopez has been continuously working with Ceph since 2013. He has authored Ceph courseware and spoken at events such as the Open Infrastructure Summit, Large Installation System Administration Conference (LISA) and Massive Storage Systems and Technology Conference (MSST).
