The creation and delivery of intelligent and stream-based applications requires a robust streaming platform that can ingest and transform large amounts of data quickly.

Red Hat OpenShift Streams for Apache Kafka can help enterprise organizations solve digital experience challenges. This article is part of a series that offers technical solutions to common use cases, such as replacing batch data with real-time stream processing, streamlining application modernization and managing event-driven architectures.

Intelligent applications can quickly change how users, customers or partners interact with an organization and its products. Delivering more interactive experiences is a must in today's digital world. Designing and developing intelligent, or artificial intelligence (AI)-driven, applications requires recognizing, learning and predicting user needs. Users want action-oriented, adaptive and responsive applications that provide valuable information and let them complete tasks more quickly and seamlessly. Training the models behind intelligent applications also requires learning from historical data, and stream processing technologies make it possible to collect large amounts of data for aggregation, transformation and analysis.

Delivering AI-driven applications requires designing and creating machine learning (ML) models that can support prediction and detection on streaming data. ML models are normally trained on historical data and then deployed to work with real-time data. Training the models requires ingesting large amounts of data, and this process can be complex and time-consuming.

Architectural patterns for training AI/ML models and creating intelligent applications are a great starting point when planning and choosing technologies for this endeavor. The streaming analytics architecture pattern simplifies the process of collecting streams of data for AI/ML modeling and data-in-motion analysis. The next section dives into the building blocks for producing actionable insights with a streaming analytics solution.

The building blocks of a streaming analytics solution

The steps for preparing data for model training or data analysis can be simplified by using technologies designed for digital experiences. One key reason is that the process flips from storing data first and processing it later to processing data as it arrives and then storing it. This reduces the time between ingestion and analysis, and it also reduces storage requirements, since only the data relevant to business decisions is kept.

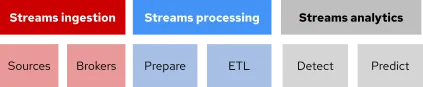

The streaming analytics solution architecture proposes three building blocks for data consumption:

- Streams ingestion: Refers to ingesting all the data streams from a variety of sources. This can be achieved through messaging solutions, such as Apache Kafka.
- Streams processing: Refers to preparing, aggregating and transforming data streams before sending them for analysis. Stream processing components in the Apache Kafka ecosystem, such as Kafka Streams, can support the aggregation and transformation of data.
- Streams analytics: Refers to the process of analyzing the processed data with the goal of making real-time decisions. This step can be achieved by a variety of analytic frameworks that support evaluation, prediction and detection.
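The three building blocks can be illustrated with a toy, in-memory pipeline. The following is a minimal sketch in plain Python, not Kafka or Kafka Streams code: the sensor events, the `ingest`, `process` and `analyze` functions, the 10-second tumbling window and the alert threshold are all hypothetical and chosen only for illustration. It also shows the process-then-store idea, since only per-window averages, not raw events, leave the processing step.

```python
from typing import Iterable, Iterator

# Hypothetical event shape: (timestamp in seconds, sensor ID, reading)
Event = tuple[int, str, float]
Aggregate = tuple[int, str, float]  # (window start, sensor ID, average reading)


def ingest(events: Iterable[Event]) -> Iterator[Event]:
    """Streams ingestion: hand events downstream one at a time, as they arrive."""
    for event in events:
        yield event


def _flush(window: int, totals: dict[str, tuple[float, int]]) -> Iterator[Aggregate]:
    # Emit one average per sensor for a closed window, in sensor order.
    for sensor, (total, count) in sorted(totals.items()):
        yield window, sensor, total / count


def process(stream: Iterable[Event], window_size: int = 10) -> Iterator[Aggregate]:
    """Streams processing: average readings per sensor in tumbling windows.

    Assumes events arrive in timestamp order. Raw events are discarded once
    their window closes; only the aggregates move on.
    """
    current = None  # start of the window currently being aggregated
    totals: dict[str, tuple[float, int]] = {}
    for ts, sensor, value in stream:
        window = ts - ts % window_size
        if current is not None and window != current:
            yield from _flush(current, totals)  # previous window has closed
            totals = {}
        current = window
        total, count = totals.get(sensor, (0.0, 0))
        totals[sensor] = (total + value, count + 1)
    if current is not None:
        yield from _flush(current, totals)  # flush the final window


def analyze(aggregates: Iterable[Aggregate], threshold: float = 75.0) -> Iterator[str]:
    """Streams analytics: flag windows whose average exceeds a threshold."""
    for window, sensor, avg in aggregates:
        if avg > threshold:
            yield f"ALERT window={window} sensor={sensor} avg={avg:.1f}"


events = [(0, "a", 70.0), (3, "a", 90.0), (5, "b", 40.0), (12, "a", 60.0), (15, "b", 95.0)]
for alert in analyze(process(ingest(events))):
    print(alert)
```

With these sample events, the pipeline prints two alerts: sensor `a` in the window starting at 0 (average 80.0) and sensor `b` in the window starting at 10 (average 95.0). In a real deployment, ingestion would read from a Kafka topic and the windowed aggregation would typically run in a stream processing framework rather than in a single Python generator.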

Apache Kafka for streams ingestion and processing

Digital experiences changed the way we look at data storage and analysis. As data volumes grew, storing all of it was no longer an option. Visualizing all the data became a complex problem that legacy analytics solutions could not solve. Analyzing data in real time, or in motion, became a requirement, as actions and decisions needed to happen almost immediately. This is where stream processing became the preferred framework for ingesting, processing and transforming flows of data, better known as data streams.

Apache Kafka is a distributed streaming platform that uses the publish/subscribe model to move data between microservices, cloud-native or traditional applications and other systems. It differentiates itself from other solutions on the market through its high throughput and low latency when moving data streams. Low latency makes message delivery feel close to real time, while high throughput allows it to move large volumes of data quickly. Apache Kafka is also well suited to the edge, as it delivers the same benefits across your entire hybrid cloud environment.
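To make the publish/subscribe idea concrete, here is a toy, single-partition topic modeled as an append-only log with a separate read offset per consumer group. This is a hypothetical sketch in plain Python, not the Kafka client API: the `Topic` class and its `publish` and `poll` methods are illustrative names only. It shows why multiple consumers can read the same stream independently: publishing appends to the log, and each group advances only its own offset.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Hypothetical toy model of a single-partition topic (not the Kafka API)."""
    name: str
    log: list[str] = field(default_factory=list)  # append-only message log
    offsets: dict[str, int] = field(default_factory=dict)  # next offset per consumer group

    def publish(self, message: str) -> int:
        """Producer side: append a message and return its offset in the log."""
        self.log.append(message)
        return len(self.log) - 1

    def poll(self, group: str, max_records: int = 10) -> list[str]:
        """Consumer side: return unread messages for a group and advance its offset."""
        start = self.offsets.get(group, 0)
        batch = self.log[start:start + max_records]
        self.offsets[group] = start + len(batch)
        return batch


orders = Topic("orders")
orders.publish("order-1")
orders.publish("order-2")
print(orders.poll("billing"))    # ['order-1', 'order-2']
print(orders.poll("analytics"))  # ['order-1', 'order-2'] (independent offset)
orders.publish("order-3")
print(orders.poll("billing"))    # ['order-3']
```

For simplicity, `poll` here both reads and records the offset in one step; real Kafka consumers treat fetching records and committing offsets as distinct steps (committed automatically or explicitly), and topics are usually split into multiple partitions for parallelism.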

Kafka was designed to ingest and collect data streams from multiple sources while simultaneously storing and aggregating the data for processing. The Kafka ecosystem complements this core functionality with frameworks and tools dedicated to transforming and transporting data using multiple protocols and data formats.

The technology can also fit multiple use cases, including AI/ML applications. Whether you're doing model training or model inference, Apache Kafka and its component ecosystem can be a great tool for creating a complete streaming analytics flow.

AI/ML tools and frameworks for detection and prediction

Previously, organizations only stored the data that was important for the business and disregarded the rest of the details. This changed over time as the flow of data and the number of digital interactions grew. The term "big data" became popular, and suddenly all companies needed to have a solution for it. Detecting anomalies, predicting abnormal patterns and creating corrective actions were the promise of big data.

Big data, or analyzing vast amounts of data, came with many challenges for ingesting, storing, transforming and analyzing data. It required people to develop and deploy intelligent workflows that could support the ingestion and interpretation of all this data. Ingestion, aggregation and transformation of data can be handled by tools like Apache Kafka and its components.

The next step is to decide how organizations should approach AI/ML modeling and put those models to work in real-life situations through intelligent applications. One of the challenges of AI/ML modeling is that it requires a cyclical approach: collecting historical data for model training and ingesting real-time data for actionable insights. AI/ML modeling requires high computational power, large amounts of storage, and a development environment where data scientists can experiment with and train models and developers can design intelligent applications.
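The cyclical approach can be illustrated with a deliberately simple anomaly detector. This is a hypothetical sketch using Python's standard `statistics` module, not a recommended ML technique or a Red Hat API: a z-score rule stands in for a real model, and the `ZScoreModel` class, the sample values and the threshold of three standard deviations are assumptions for illustration. The model is trained on historical data, scores real-time values as they arrive, and is then retrained on the enlarged history to close the loop.

```python
import statistics
from dataclasses import dataclass


@dataclass
class ZScoreModel:
    """Hypothetical toy detector: learns mean and standard deviation from history."""
    mean: float
    stdev: float

    @classmethod
    def train(cls, history: list[float]) -> "ZScoreModel":
        # Training phase: fit on historical data (needs at least two points).
        return cls(statistics.fmean(history), statistics.stdev(history))

    def is_anomaly(self, value: float, threshold: float = 3.0) -> bool:
        # Inference phase: score one real-time value against the learned profile.
        return abs(value - self.mean) > threshold * self.stdev


history = [10.0, 11.0, 9.0, 10.5, 9.5, 10.0]
model = ZScoreModel.train(history)

live_stream = [10.2, 9.8, 25.0, 10.1]
anomalies = [v for v in live_stream if model.is_anomaly(v)]
print(anomalies)  # [25.0]

# Closing the cycle: fold the normal live values back into history and retrain.
history.extend(v for v in live_stream if v not in anomalies)
model = ZScoreModel.train(history)
```

Keeping flagged values out of the retraining data is one simple design choice to stop the model from gradually accepting anomalies as normal; production pipelines would usually make that decision with more care.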

There are many predefined frameworks, workflows and architectures available for addressing the challenges of big data. One thing to keep in mind is to choose an AI/ML platform that integrates tightly with an application development platform, providing an environment where developers, data scientists and IT professionals can come together to collaborate.

An application development platform can support data scientists in accessing data sources and in preparing models for production. Bringing AI/ML models to production requires software developers' expertise in application development and IT expertise in running the models on the right hardware. Intelligent applications depend heavily on IT professionals for deployment and maintenance. In addition, there is a need to collaborate with software developers to ensure input data hygiene and successful ML model deployment within application development processes.

How Red Hat can help

Red Hat Cloud Services offers a variety of tools designed to help developers create, deploy and maintain cloud-native applications. One of those use cases is stream-based and intelligent applications that support big data scenarios. Developers using Red Hat Cloud Services have access to a development environment with the services required for ingesting, aggregating, transforming and analyzing streams of data.

Red Hat OpenShift Streams for Apache Kafka is designed to help developers incorporate streaming data into applications to deliver real-time experiences. The service is fully hosted and managed by Red Hat, allowing developers to focus on streaming data rather than on maintaining or configuring infrastructure. OpenShift Streams for Apache Kafka, together with Kafka Streams and OpenShift Connectors, supports developers in ingesting, aggregating and transforming data for analysis.

Once developers can stream data within the architecture, the next step is to feed AI/ML models for training and testing. This is where Red Hat OpenShift Data Science can help.

Red Hat OpenShift Data Science provides a supported, self-service environment where data scientists and machine learning engineers can carry out their daily work, from gathering and preparing data to testing and training ML models. With OpenShift Data Science, customers can access a range of AI/ML technologies from Red Hat partners and independent software vendor (ISV) offerings, enabling corporations to build their own flexible sandbox environment containing some of the latest data science tools.

All Red Hat Cloud Services are natively integrated with OpenShift, providing a streamlined developer experience across open hybrid cloud environments. The combination of an enterprise Kubernetes platform, cloud-native application delivery and managed operations allows teams to focus on their expertise, accelerate time to value and reduce operational expenses.

Visit the Red Hat OpenShift Streams for Apache Kafka page to learn more, and visit Red Hat’s trial center to get started building Kafka applications and deploying analytic models.

About the author

Jennifer Vargas is a marketer, with previous experience in consulting and sales, who enjoys solving business and technical challenges that seem disconnected at first. For the last five years, she has worked at Red Hat as a product marketing manager supporting the launch of a new set of cloud services. Her areas of expertise are AI/ML, IoT, Integration and Mobile Solutions.
