In the ever-evolving landscape of technology, the hunt for optimal performance and scale is a constant challenge. With Red Hat Satellite versions 6.13 and 6.14, we have continued to push the boundaries of what Satellite can handle. In this article, we'll take you behind the scenes to explore how we work to improve Satellite's performance and scale.

1. Load testing and performance tuning

To provide a better user experience even during peak usage periods, we conduct rigorous load testing. By simulating high-traffic scenarios (for example, by running remote execution jobs that invoke the dnf update command), we can identify potential weaknesses and address them proactively.
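The kind of load generation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the tooling the performance team uses: `fake_job` is a hypothetical stand-in that sleeps instead of contacting a host, and the host names and concurrency value are made up.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_job(host: str) -> bool:
    """Hypothetical stand-in for one remote execution job on one host.

    A real test would trigger the job through Satellite; this stub only
    sleeps briefly and reports success.
    """
    time.sleep(0.01)
    return True


def run_batch(hosts, concurrency):
    """Run fake_job against every host with a fixed number of parallel workers."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(fake_job, hosts))
    elapsed = time.perf_counter() - start
    return {
        "concurrency": concurrency,
        "total": len(results),
        "failed": results.count(False),
        "seconds": elapsed,
    }


if __name__ == "__main__":
    # Made-up host names, used only to drive the stub.
    hosts = [f"host-{i:03d}.example.com" for i in range(50)]
    print(run_batch(hosts, concurrency=10))
```

Recording the failure count next to the elapsed time matters, because a batch that finishes quickly with many failures is not comparable to one that finishes slowly with none.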

Performance tuning is an ongoing process in which we fine-tune configurations and parameters to achieve optimal performance. For example, in order to recommend Puma tunings (Puma is a Ruby application server used by Satellite), we tested different values of the threads_min and threads_max tunables. After weeks of testing with different parameters, we found that setting the minimum number of Puma threads to 16 resulted in about 12% less memory usage than setting threads_min=0. This is just one example taken from the Red Hat Satellite Tuning Guide.

With every release, we scale the number of clients connected to the Satellite server to test the performance of its features. For example, in the latest release testing cycle, we scaled the server to manage 48k clients/hosts. This means there were more than 48k clients/hosts registered with the Satellite server, with no external capsules. The goal of this testing was to see how Red Hat Satellite 6.14 behaves under pressure by putting the server under load and executing remote execution jobs on those content hosts. We discovered that in this setup, 95% of Satellite's work goes to maintaining regular hosts, and only 5% remains for remote execution (ReX). Overall, Satellite behaved very well without any external capsules.

Let’s take a quick look at performance improvements over the last couple of versions.

Registration comparison: Satellite 6.11 vs. Satellite 6.12

Registration on 6.12 seems to be slightly faster than on 6.11. At the same time, more registrations in the batch fail, so the faster registration might just be a consequence of Satellite having more free resources because more registrations failed.

At lower concurrency levels, we have seen slightly higher CPU usage with 6.12, but at higher concurrency levels CPU usage is far lower. Again, this might be a consequence of the higher error rate.

Backup and restore comparison: Satellite 6.13

In its default configuration (no tuning applied), Satellite 6.13 performed better in both restore scenarios (about 6% better online and about 9% better offline) and worse in both backup scenarios (about 12% worse online and about 1% worse offline).

Satellite installer comparison: Satellite 6.13 vs. Satellite 6.14

The Satellite installer takes about 1% longer in 6.14 than in 6.13.

RPM sync time: Satellite 6.12

We have seen a 57% improvement in RPM sync time, accompanied by 47% higher CPU usage during that stage of the test. We have also seen an overall improvement of 23% in memory consumption.

Hammer host list comparison: Satellite 6.10 vs. Satellite 6.11 vs. Satellite 6.12

There wasn't a significant performance change in the hammer host list test case.

| Version | Seconds to list 100 hosts |
| --- | --- |
| Satellite 6.10 | 3.01 |
| Satellite 6.11 (RHEL 7) | 3.09 |
| Satellite 6.11 (RHEL 8) | 3.24 |
| Satellite 6.12 | 3.20 |
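To put the figures listed above in relative terms, here is a small Python sketch that computes each result's percent change against the Satellite 6.10 run. The timings are copied from the list above; the function names and the choice of 6.10 as the baseline are ours, for illustration only.

```python
# Seconds to list 100 hosts, copied from the figures above.
SECONDS = {
    "Satellite 6.10": 3.01,
    "Satellite 6.11 (RHEL 7)": 3.09,
    "Satellite 6.11 (RHEL 8)": 3.24,
    "Satellite 6.12": 3.20,
}


def percent_change(baseline: float, value: float) -> float:
    """Relative change of value against baseline, in percent."""
    return (value - baseline) / baseline * 100.0


def changes_vs(baseline_key: str, data: dict) -> dict:
    """Percent change of every entry against the chosen baseline entry."""
    base = data[baseline_key]
    return {name: round(percent_change(base, v), 1) for name, v in data.items()}


if __name__ == "__main__":
    for version, change in changes_vs("Satellite 6.10", SECONDS).items():
        print(f"{version}: {change:+.1f}%")
```

Every result stays within roughly 8% of the 6.10 figure, with the largest difference on Satellite 6.11 (RHEL 8).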

2. Continuous performance testing

Continuous performance testing (CPT) has a myriad of benefits, such as verifying that Satellite features are performing properly before they are released. Moreover, by running the tests automatically and frequently, we create a quick feedback loop so engineers are aware of possible performance regressions.

CPT can also validate Satellite's code in continuous deployment pipelines to help avoid performance regressions. The goal of CPT is to spot problems early and fix them before they affect users or customers.
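As a rough illustration of how an automated check in such a pipeline could flag a regression, the sketch below compares a new measurement with the median of earlier runs. It is a minimal example under our own assumptions, not the team's actual CPT logic: the function name, the use of a median baseline and the 10% tolerance are all hypothetical.

```python
from statistics import median


def is_regression(history, candidate, tolerance_percent=10.0):
    """Return True when candidate is slower than the median of history
    by more than tolerance_percent.

    history and candidate are durations in seconds (lower is better).
    The 10% default tolerance is an arbitrary value chosen for illustration.
    """
    if not history:
        raise ValueError("history must contain at least one earlier run")
    baseline = median(history)
    if baseline <= 0:
        raise ValueError("baseline duration must be positive")
    slowdown = (candidate - baseline) / baseline * 100.0
    return slowdown > tolerance_percent


if __name__ == "__main__":
    # Made-up durations, used only to show the call.
    earlier_runs = [10.0, 10.2, 9.8]
    print(is_regression(earlier_runs, 10.5))  # 5% slower than the median
    print(is_regression(earlier_runs, 12.0))  # 20% slower than the median
```

Using the median of several earlier runs rather than a single run makes the check less sensitive to one unusually fast or slow result.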

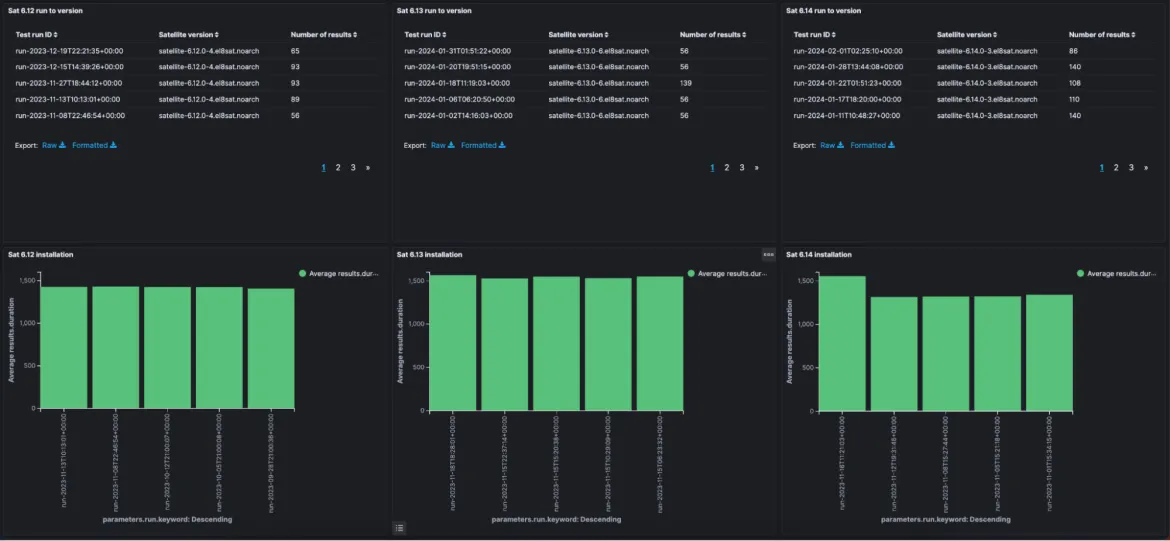

The performance team analyzes CPT results to get an idea of the performance of a specific version of Satellite and to adjust our approach accordingly. We run CPT jobs using Jenkins and then upload the results to OpenSearch, which we use for visualization. For example, the screenshot above shows the results of test runs against three different versions of Satellite: 6.12, 6.13 and 6.14.

3. Create and update the Satellite Tuning Guide

The Red Hat Satellite Tuning Guide is designed to help you fine-tune Satellite to meet your particular requirements and deliver a better user experience. As you navigate through the guide, you'll find ways to optimize your system's performance at various levels. Remember, performance tuning is an ongoing process, and with the right strategies in place, you are more likely to have a high-performing, scalable and reliable system for your users. Getting there takes repeated testing by your performance team.

4. Verify fixes for performance bugs

Verifying fixes for performance and scale-related bugs is a crucial step in the software development lifecycle. The goal is to make sure that identified issues have been effectively addressed and resolved. Here are the steps that the performance team follows to verify performance-related fixes:

- Reproduce the issue on the previous version (which does not have the fix) to confirm the exact steps that trigger it

- Conduct comprehensive performance testing to verify the fix

- Test the fix across all applicable environments and versions

- Analyze resource utilization

- Measure key metrics

- Compare results

By following these steps, we can systematically verify performance bug patches and make sure that the fixes have a positive impact on the overall performance of the software. Regular collaboration between development, quality engineering, customer support and performance teams is essential for effective bug verification and continuous improvement.

5. Collaboration

Effective collaboration between the performance team and other teams (engineering, support, technical account managers, etc.) is crucial for maintaining and improving Satellite's performance. We all take part in regular cross-team meetings where we share insights, updates and potential challenges. This way, we encourage a collaborative environment where different teams can provide input and feedback on performance-related issues.

Conclusion

Our recent performance and scale efforts are a testament to our commitment to providing a top-notch user experience for Red Hat customers. As technology advances, so do we. The journey towards optimal performance and scalability is ongoing, and we're excited about the possibilities that these improvements continue to unlock. Stay tuned for more updates as we continue to push the boundaries and help shape the future of technology at Red Hat.

About the authors

Imaanpreet Kaur joined Red Hat in 2017 and is a software engineer with Red Hat's Performance and Scale Engineering team. Kaur does performance testing, reviews, and checks on Red Hat Satellite and Ansible Tower.

I started as a quality engineer for some Red Hat Enterprise Linux packages, then worked on Red Hat Satellite 5 and 6 and finally moved to Performance and Scale engineering where I'm working on multiple products, testing their limits. I like gardening and graphs.
