Architecting and designing containerized deployments is challenging in part because of the plethora of functional and nonfunctional requirements you must consider. Podman is an excellent choice for containerized use cases as it has been designed and built from the ground up with security and configurability in mind.

Podman runs smoothly on multiple operating systems and offers seamless integration into modern Linux systems. It is extremely adaptable and fits into all kinds of environments, from a developer workstation to a Linux server to automotive to devices at the far edge.

Here are five facts about Podman that highlight why it may be a perfect fit for your IT architecture.

Fact 1: Podman is portable, reliable, and robust

Since day one, Podman has supported running rootless containers, which means you can run containers without root privileges. Other container engines have since followed Podman's example, but Podman integrates especially well into all kinds of environments, including workstations, servers, CI/CD pipelines, Kubernetes, edge computing, Internet of Things (IoT), automotive, and more.

Running rootless makes Podman portable, because many large enterprises and research labs do not grant users root privileges. In fact, Podman has paved the way for containers in such environments.

Running rootless containers also makes Podman more secure than other container engines that rely on root privileges: in the worst-case scenario of an attacker breaking out of the container, the attacker does not have root privileges and can do much less harm. If you are curious about how rootless Podman works in detail, please refer to What happens behind the scenes of a rootless Podman container? written by my colleagues Dan, Matt, and Giuseppe.
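To make the idea more concrete, here is a minimal Python sketch of the default user-namespace mapping that rootless Podman sets up: UID 0 inside the container maps to your own UID on the host, and the remaining container UIDs map onto the subordinate range assigned to your user in /etc/subuid. (You can inspect the live mapping with `podman unshare cat /proc/self/uid_map`.) The function names and the sample `alice:100000:65536` entry are hypothetical, and the parser assumes a single range per user, while real systems may assign several.

```python
# Sketch of rootless Podman's default UID mapping, assuming one
# /etc/subuid range per user. Names and sample data are hypothetical.


def parse_subid(text: str) -> dict[str, tuple[int, int]]:
    """Parse /etc/subuid-style lines ("name:start:count") into a dict."""
    ranges = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, start, count = line.split(":")
        ranges[name] = (int(start), int(count))
    return ranges


def host_uid(container_uid: int, user_uid: int, sub_start: int, sub_count: int) -> int:
    """Map a UID inside the container to the UID the host kernel sees.

    Container root (0) becomes the invoking user's own UID; container
    UIDs 1..sub_count are placed onto the subordinate range.
    """
    if container_uid == 0:
        return user_uid
    if 1 <= container_uid <= sub_count:
        return sub_start + container_uid - 1
    raise ValueError(f"UID {container_uid} is not mapped")


if __name__ == "__main__":
    start, count = parse_subid("alice:100000:65536\n")["alice"]
    for uid in (0, 1, 1000):
        print(uid, "->", host_uid(uid, 1000, start, count))
```

With these sample values, container root becomes host UID 1000 and container UID 1000 becomes host UID 100999, so a process that escapes as "root" is still just an unprivileged user on the host.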

Another major feature of Podman is its daemonless architecture. Instead of the client-server model used by Docker or containerd, Podman uses a more traditional fork-exec model to run containers. I consider Podman's architecture more robust and reliable because it eliminates a single point of failure for the entire system.

With a daemon-based engine, by contrast, if the daemon goes down, none of its containers can be reached anymore. Another benefit of the fork-exec model is that it integrates well with the Linux kernel's audit system, so the system logs exactly which user executed which containers. Podman really paved the way for running containers in high-security environments, including research labs, banks, and insurance companies. A daemon running as root generally does not meet these requirements.

Fact 2: Podman is compatible with Docker

Podman is compatible with Docker both on the command line and the REST API. Whether you have shell scripts calling the Docker client or a Python program using the Docker bindings, migrating to Podman is straightforward.

Docker made modern container technology successful. Today's container standards, governed by the Open Container Initiative (OCI), are all based on earlier technology and protocols from Docker and the open source community. Although it is neither a protocol nor a specification, the Docker client's command-line interface (CLI) is the de facto standard for working with containers in the terminal.

Podman inherited the CLI from Docker, which simplifies migrating from Docker to Podman. Some Linux distributions even ship a podman-docker package, which creates an alias from docker to podman.

Podman also ships with a Docker-compatible REST API. Whether you have a Python program using the docker-py bindings or use docker-compose, Podman has you covered. Migrating from Docker to Podman usually boils down to pointing the DOCKER_HOST environment variable to the Podman socket. I recommend checking out Using Podman and Docker Compose and Podman Compose or Docker Compose: Which should you use in Podman? for more on running docker-compose with Podman.
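Because the API is Docker-compatible, any client that honors DOCKER_HOST can be pointed at Podman. The Python sketch below, using only the standard library, derives the conventional Podman socket path on Linux (`$XDG_RUNTIME_DIR/podman/podman.sock` for rootless, `/run/podman/podman.sock` for rootful) and calls the Docker-compatible `/_ping` endpoint over that Unix socket. It assumes the socket is active, for example after `systemctl --user start podman.socket`; the helper names are illustrative and not part of any library.

```python
# Sketch: build a DOCKER_HOST value for Podman's API socket and ping it.
# Assumes Linux and an active podman.socket unit; helper names are hypothetical.
import http.client
import os
import socket


def podman_socket_path(rootless: bool = True, env=None) -> str:
    """Return the conventional Podman API socket path on Linux."""
    env = os.environ if env is None else env
    if rootless:
        # Rootless sockets live in the per-user runtime directory.
        runtime_dir = env.get("XDG_RUNTIME_DIR") or f"/run/user/{os.getuid()}"
        return os.path.join(runtime_dir, "podman", "podman.sock")
    return "/run/podman/podman.sock"


def docker_host(path: str) -> str:
    """Format a socket path as a DOCKER_HOST URL."""
    return f"unix://{path}"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def ping(socket_path: str) -> str:
    """GET /_ping on the Docker-compatible API and return the response body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/_ping")
        return conn.getresponse().read().decode()
    finally:
        conn.close()


if __name__ == "__main__":
    path = podman_socket_path(rootless=os.getuid() != 0)
    print("export DOCKER_HOST=" + docker_host(path))
    if os.path.exists(path):
        print("ping:", ping(path))
```

Once DOCKER_HOST is exported with that value, tools such as docker-compose or the docker-py bindings talk to Podman instead of a Docker daemon.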

Fact 3: Podman is cross platform

While containers really are a Linux technology, you can also run Podman on other operating systems. Although I'd love everybody to be running Linux, many developers' machines still run macOS or Windows.

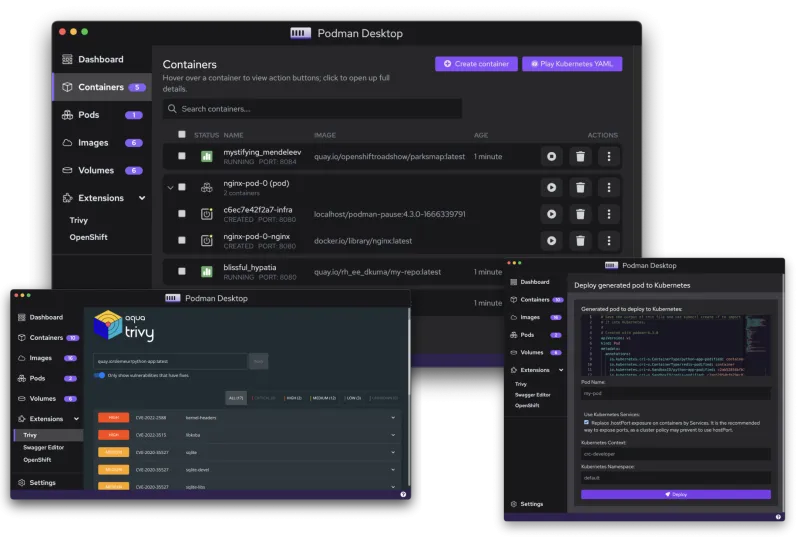

The easiest way to run Podman on a Mac or a Windows machine is by installing Podman Desktop. Download the installer, follow the instructions, and you are ready to go! Note that Podman Desktop is 100% open source and has no license fees attached. It also ships with a number of cool features, such as running Kubernetes YAML and an elaborate extension mechanism. Although I feel at home in a Linux terminal, I really love Podman Desktop's modern look and feel.

Fact 4: Podman is configurable

Podman can run in practically any kind of computing environment, from traditional servers to small Internet of Things (IoT) devices. Running in such different places and on such different devices implies very different requirements and settings. That is why Podman is highly configurable and adaptable.

Several configuration files allow setting the defaults for how Podman and its containers run and behave. The most important configuration files are:

- storage.conf controls storage-related options and attributes, such as the storage driver (for example, overlay) and where containers and images are stored on the system.

- containers.conf is the most feature-rich configuration file. It is mostly used to control Podman's behavior (for example, logging drivers) and default container settings (such as environment variables).

- registries.conf is a complex yet powerful configuration file to control and manage container registries. For instance, it allows specifying mirrors for registries. If you are curious, take a look at my article How to manage Linux container registries.

Fact 5: Podman runs well at the edge

Podman is well known for its tight integration with systemd. Running containerized workloads under systemd is a powerful method for reliable, rock-solid deployments. The systemd integration also lays the foundation for more advanced Podman features, such as automatic updates and rollbacks or running Kubernetes workloads in systemd. It allows systemd to manage service dependencies, monitor the lifecycle and service state, and optionally restart services in case of failure.

Such robust, self-healing, and self-updating deployments are crucial for edge deployments, where distributed and partially disconnected nodes must operate independently at times. Podman v4.3 pushes things even further with custom on-failure actions for health checks. Feel free to read more about this feature in Podman at the edge: Keeping services alive with custom healthcheck actions, which I wrote with my colleagues Preethi and Matt.
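One way to combine these pieces in a single deployment is a Quadlet `.container` file, which systemd turns into a service. (Quadlet ships with Podman 4.4 and later, so it postdates the v4.3 feature mentioned above.) The Python sketch below renders such a file: `AutoUpdate=registry` opts the container into `podman auto-update`, `HealthOnFailure=kill` kills the container when its health check fails, and `Restart=always` lets systemd bring it back up. The image name, description, and health command are hypothetical placeholders.

```python
# Sketch: render a Quadlet .container unit (Podman 4.4+) that combines
# auto-updates, a health check with an on-failure action, and systemd restarts.
# Image, description, and health command are hypothetical placeholders.
import configparser
import io

ON_FAILURE_ACTIONS = {"none", "kill", "restart", "stop"}  # health-on-failure values


def render_quadlet(image: str, health_cmd: str, on_failure: str = "kill") -> str:
    """Return the text of a Quadlet .container unit."""
    if on_failure not in ON_FAILURE_ACTIONS:
        raise ValueError(f"unsupported on-failure action: {on_failure}")
    unit = configparser.ConfigParser(interpolation=None)
    unit.optionxform = str  # keep systemd's CamelCase keys as written
    unit["Unit"] = {"Description": "Example edge service"}
    unit["Container"] = {
        "Image": image,  # must be fully qualified for registry auto-updates
        "AutoUpdate": "registry",  # let `podman auto-update` pull newer images
        "HealthCmd": health_cmd,
        "HealthInterval": "30s",
        "HealthOnFailure": on_failure,  # action when the check turns unhealthy
    }
    # After "kill", systemd sees the service exit and restarts it.
    unit["Service"] = {"Restart": "always"}
    unit["Install"] = {"WantedBy": "default.target"}
    buf = io.StringIO()
    unit.write(buf, space_around_delimiters=False)  # emit Key=value lines
    return buf.getvalue()


if __name__ == "__main__":
    print(render_quadlet("registry.example.com/edge/app:latest",
                         "curl -fs http://localhost:8080/health"))
```

Saving the output as, say, ~/.config/containers/systemd/app.container and running `systemctl --user daemon-reload` generates an `app.service` user unit. Choosing `kill` rather than `restart` leaves the restart decision to systemd, which keeps tracking the service state.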

Conclusion

I often compare Podman to a Swiss Army knife. It has a large repertoire of features, fits easily into my pocket, and can also do heavy lifting if needed. What I find most fascinating is that Podman runs practically everywhere: on large servers, developer workstations, and laptops, but also on very small edge computing devices. If you want to join the community and learn more, please visit the Podman and Podman Desktop websites.