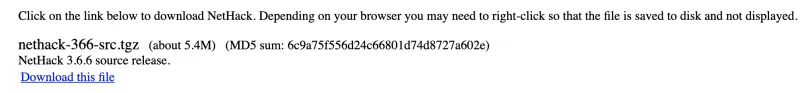

Chances are you've seen references to hashes or checksums when downloading software from the Internet. Often, a checksum is published near the download link, labeled MD5, SHA, or something similar. The download page for NetHack, one of my favorite old games from the 1990s, is one example.

Many people don't know exactly what this information means or how to work with it. In this article, I discuss the purpose of hashing, along with how to use it.


Goals of cryptography

In this first section, I want you to unlearn something. Specifically, I want you to break the association in your head between the word encryption and the word confidential. Many of us conceive of these two words as being synonymous when that is actually not the case. Cryptography, which includes encryption, can provide confidentiality, but it can also satisfy other goals.

Cryptography actually has three goals:

- Confidentiality - to keep the file content from being read by unauthorized users

- Authenticity - to prove where a file originated

- Integrity - to prove that a file has not changed unexpectedly

It is that third concept, integrity, that we are interested in here. In this context, integrity means proving that data has not changed unexpectedly. Proving integrity is useful in many scenarios:

- Internet downloads such as Linux distributions, software, or data files

- Network file transfers via NFS, SSH, or other protocols

- Verifying software installations

- Comparing a stored value, such as a password, with a value entered by a user

- Backups that compare two files to see whether they've changed

What is hashing?

Cryptography uses hashing to confirm that a file is unchanged. The simple explanation is that the same hashing method is applied to a file at each end of an Internet download. The web administrator hashes the file on the web server and publishes the hash result. A user downloads the file and applies the same hash method. The hash results, or checksums, are then compared. If the checksum of the downloaded file matches that of the original file, then the two files are, for all practical purposes, identical, and there have been no unexpected changes due to file corruption, man-in-the-middle attacks, etc.
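The download check described above can be sketched as a small shell function. This is a minimal illustration, not a complete download tool: the function name verify_sha256, the file name release.tar.gz, and its three-byte content are made up for the example, and the "published" value is simply the well-known SHA256 digest of the string abc.

```shell
# verify_sha256 FILE EXPECTED_HEX
# Succeeds only if the SHA256 digest of FILE equals EXPECTED_HEX.
# (Hypothetical helper name, used only for this illustration.)
verify_sha256() {
    local file=$1 expected=$2 actual
    actual=$(sha256sum "$file" | cut -d' ' -f1)
    [ "$actual" = "$expected" ]
}

workdir=$(mktemp -d)

# Stand-in for a downloaded file; a real download would be much larger.
printf 'abc' > "$workdir/release.tar.gz"

# Stand-in for the checksum the web administrator published.
published=ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad

if verify_sha256 "$workdir/release.tar.gz" "$published"; then
    echo "checksum matches"
else
    echo "checksum MISMATCH" >&2
fi
```

The comparison is a plain string match, so it assumes the published value uses lowercase hexadecimal, which is what sha256sum prints.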

Hashing is a one-way process. The hashed result cannot be reversed to expose the original data. The checksum is a fixed-size string of output, regardless of how large the input is. Technically, that means that hashing is not encryption, because encryption is intended to be reversed (decrypted).
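To see the fixed output size for yourself, you can hash inputs of very different lengths and count the characters in each result. The sketch below assumes the GNU md5sum command and uses /dev/zero as a convenient source of filler bytes; the variable names are only illustrative.

```shell
# Hash a 1-byte input and a 100,000-byte input, keeping only the digest.
short_hash=$(printf 'a' | md5sum | cut -d' ' -f1)
long_hash=$(head -c 100000 /dev/zero | md5sum | cut -d' ' -f1)

# ${#var} expands to the number of characters in the variable.
echo "1-byte input:      $short_hash (${#short_hash} hex characters)"
echo "100000-byte input: $long_hash (${#long_hash} hex characters)"
```

Both digests are 32 hexadecimal characters long, even though one input is 100,000 times larger than the other.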

What kind of hash cryptography might you use with Linux?

Message Digest and Secure Hash Algorithm

In Linux, you're likely to interact with one of two hashing methods:

- MD5

- SHA256

These cryptography tools are built into most Linux distributions, as well as macOS (where some of the commands have different names, such as md5). Windows does not ship the md5sum or sha256sum commands, but it provides its own equivalents, such as the certutil -hashfile command and the PowerShell Get-FileHash cmdlet, and third-party utilities are also available. I think it's great that security tools such as these are part of Linux and macOS.

Message Digest versus Secure Hash Algorithm

What's the difference between the message digest and secure hash algorithms? The difference is in the mathematics involved, but the two accomplish similar goals. Sysadmins might prefer one over the other, but for most purposes, they function similarly. They are not, however, interchangeable. A hash generated with MD5 on one end of the connection will not be useful if SHA256 is used on the other end. The same hash method must be used on both sides.

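SHA256 generates a bigger hash (256 bits, compared with 128 bits for MD5), and may take more time and computing power to complete. It is considered to be a more secure approach. MD5 is still adequate for detecting accidental corruption, such as a damaged file download, but practical collision attacks against it exist, so it should not be relied on to detect deliberate tampering. Prefer SHA256 when security matters.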
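The size difference is easy to observe by hashing the same file with both tools. This is a small sketch: the file name sample.txt and its content (the three characters abc) are arbitrary choices for the example. Each hexadecimal character encodes 4 bits, so the bit length is the character count multiplied by 4.

```shell
workdir=$(mktemp -d)
printf 'abc' > "$workdir/sample.txt"

# Keep only the digest (the first space-separated field of the output).
md5_hex=$(md5sum "$workdir/sample.txt" | cut -d' ' -f1)
sha256_hex=$(sha256sum "$workdir/sample.txt" | cut -d' ' -f1)

echo "MD5:    $md5_hex ($(( ${#md5_hex} * 4 )) bits)"
echo "SHA256: $sha256_hex ($(( ${#sha256_hex} * 4 )) bits)"

rm -r "$workdir"
```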

Where do you find hashing in Linux?

Linux uses hashes in many places and situations. Checksums can be generated manually by the user. You'll see exactly how to do that later in the article. In addition, hash capabilities are included with /etc/shadow, rsync, and other utilities.

For example, the passwords stored in the /etc/shadow file are actually hashes. When you sign in to a Linux system, the authentication process compares the stored hash value against a hashed version of the password you typed in. If the two hashes are identical, then the original password and what you typed in are identical. In other words, you entered the correct password. This is determined, however, without the stored password ever being decrypted, because it was never encrypted, only hashed. Check the beginning of the second field for your user account in /etc/shadow (reading this file requires root privileges). If the field starts with $1$, your password is hashed with MD5. If it starts with $5$, your password is hashed with SHA256. If it starts with $6$, SHA512 is being used. SHA512 is used on my Fedora 33 virtual machine.
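The prefix check can also be scripted. The following sketch works on a made-up line in /etc/shadow format rather than on the real file, so it does not need root privileges. The function name shadow_hash_type, the user alice, and the salt and hash placeholders are all invented for illustration, and only the three prefixes mentioned above are recognized.

```shell
# shadow_hash_type LINE
# Print the hashing method indicated by the prefix of the second
# colon-separated field of a shadow-format line.
shadow_hash_type() {
    local field
    field=$(printf '%s\n' "$1" | cut -d: -f2)
    case "$field" in
        '$1$'*) echo "MD5" ;;
        '$5$'*) echo "SHA256" ;;
        '$6$'*) echo "SHA512" ;;
        *)      echo "other or no password hash" ;;
    esac
}

# Made-up entry: the salt and hash below are placeholders, not real values.
sample='alice:$6$examplesalt$examplehash:18600:0:99999:7:::'
shadow_hash_type "$sample"
```

For the sample entry, the function prints SHA512. To inspect a real account, you could pass it the output of a command such as sudo grep "^$USER:" /etc/shadow.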

How to manually generate checksums

Using the hash utilities is simple. I will walk you through an easy scenario that you can reproduce on a lab computer or whatever Linux system you have available. The purpose of this scenario is to determine whether a file has changed.

First, open your favorite text editor and create a file named original.txt with a line of text that reads: Original information.

[damon@localhost ~]$ vim original.txt

[damon@localhost ~]$ cat original.txt

Original information.

[damon@localhost ~]$

Next, run the file through a hash algorithm. I'll use MD5 for now. The command is md5sum. Here is an example:

[damon@localhost ~]$ md5sum original.txt

80bffb4ca7cc62662d951326714a71be original.txt

[damon@localhost ~]$

Notice the resulting checksum value. This value is long enough that it's difficult to work with by hand. Let's store that value for future use by redirecting it into a file:

[damon@localhost ~]$ md5sum original.txt > hashes.txt

[damon@localhost ~]$ cat hashes.txt

80bffb4ca7cc62662d951326714a71be original.txt

[damon@localhost ~]$

At this point, you have an original file and its stored checksum. Copy that file to the /tmp directory with the name duplicate.txt by using the following command (be sure to copy, not move):

[damon@localhost ~]$ cp original.txt /tmp/duplicate.txt

[damon@localhost ~]$

Run the following command to create a checksum of the copied file:

[damon@localhost ~]$ md5sum /tmp/duplicate.txt

80bffb4ca7cc62662d951326714a71be /tmp/duplicate.txt

[damon@localhost ~]$

Next, append the hash result to the hashes.txt file and then compare the two. Be very careful to use the >> append redirect operator here, because > will overwrite the hash value of the original.txt file.

Run the following command:

[damon@localhost ~]$ md5sum /tmp/duplicate.txt >> hashes.txt

[damon@localhost ~]$ cat hashes.txt

80bffb4ca7cc62662d951326714a71be original.txt

80bffb4ca7cc62662d951326714a71be /tmp/duplicate.txt

[damon@localhost ~]$

The two hash results are identical, so the file did not change during the copy process.

Next, simulate a change. Type the following command to change the /tmp/duplicate.txt file contents, and then rerun the md5sum command with the >> append operator:

[damon@localhost ~]$ hostname >> /tmp/duplicate.txt

[damon@localhost ~]$ md5sum /tmp/duplicate.txt >> hashes.txt

[damon@localhost ~]$

You know that the duplicate.txt file is no longer identical to the original.txt file, but let's prove that:

[damon@localhost ~]$ cat hashes.txt

80bffb4ca7cc62662d951326714a71be original.txt

80bffb4ca7cc62662d951326714a71be /tmp/duplicate.txt

1f59bbdc4e80240e0159f09ecfe3954d /tmp/duplicate.txt

[damon@localhost ~]$

The two checksum values are not identical, and therefore the two files from which the checksums were generated are not identical.

In the above example, you manually compared the hash values by displaying them with cat. You can use the --check option to have md5sum do the comparison for you. Both methods are shown below:

[damon@localhost ~]$ cat hashes.txt

80bffb4ca7cc62662d951326714a71be original.txt

80bffb4ca7cc62662d951326714a71be /tmp/duplicate.txt

1f59bbdc4e80240e0159f09ecfe3954d /tmp/duplicate.txt

[damon@localhost ~]$ md5sum --check hashes.txt

original.txt: OK

/tmp/duplicate.txt: FAILED

/tmp/duplicate.txt: OK

md5sum: WARNING: 1 computed checksum did NOT match

[damon@localhost ~]$

You can repeat the above steps, substituting sha256sum for md5sum, to see how the process works with the SHA algorithm. The sha256sum command also includes a --check option that compares the hashes and reports whether each file matches.
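As a rough sketch of that exercise, the script below repeats the scenario with sha256sum in a temporary directory. It differs slightly from the walkthrough: both checksums are written to hashes.txt in one command, and the change is simulated with echo rather than hostname. It also records the exit status of --check, which is what a script would test instead of reading the OK and FAILED lines.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

echo "Original information." > original.txt
cp original.txt duplicate.txt

# Record SHA256 checksums for both files in one checksum file.
sha256sum original.txt duplicate.txt > hashes.txt

# First check: nothing has changed, so both entries verify.
status_before=0
sha256sum --check hashes.txt || status_before=$?

# Simulate a change, then check again against the stored checksums.
echo "unexpected change" >> duplicate.txt
status_after=0
sha256sum --check hashes.txt || status_after=$?

echo "exit status before change: $status_before"
echo "exit status after change:  $status_after"
```

The exit status is zero when every file matches and non-zero when at least one does not. Because hashes.txt stores relative file names, the check has to run from the same directory.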

Note: If you transfer files between Linux, macOS, and Windows, you can still use hashing to verify the files' integrity. To generate a hash value on macOS, run the md5 command (or shasum -a 256 for SHA256). On Windows, you may be able to use the PowerShell cmdlet Get-FileHash, depending on the version of PowerShell you have installed, or the built-in certutil -hashfile command. Alternatively, you can download a third-party program. Personally, I use md5checker. Be sure to understand the licensing for these utilities.


Wrap up

Hashing confirms that data has not unexpectedly changed during a file transfer, download, or other event. This concept is known as file integrity. Hashing does not tell you what changed, just that something changed. Once hashing tells you two files are different, you can use commands such as diff to discover what differences exist.
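The two-step approach, where hashing detects a change and diff then locates it, might look like the following sketch. The file names mirror the earlier walkthrough, but the files are created in a temporary directory and the extra line is an arbitrary stand-in for a real change.

```shell
workdir=$(mktemp -d)
printf 'Original information.\n' > "$workdir/original.txt"
printf 'Original information.\nextra line\n' > "$workdir/duplicate.txt"

# Hash each file from standard input so that only the digest is compared.
orig_sum=$(md5sum < "$workdir/original.txt" | cut -d' ' -f1)
dup_sum=$(md5sum < "$workdir/duplicate.txt" | cut -d' ' -f1)

if [ "$orig_sum" != "$dup_sum" ]; then
    echo "Checksums differ; showing the differences:"
    # diff exits with status 1 when the files differ, which is expected here.
    diff_output=$(diff "$workdir/original.txt" "$workdir/duplicate.txt") || true
    printf '%s\n' "$diff_output"
else
    echo "Checksums match; the files are identical."
fi
```

The checksum comparison answers whether anything changed, and the diff output shows that the second file gained one extra line.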

About the author

Damon Garn owns Cogspinner Coaction, LLC, a technical writing, editing, and IT project company based in Colorado Springs, CO. Damon authored many CompTIA Official Instructor and Student Guides (Linux+, Cloud+, Cloud Essentials+, Server+) and developed a broad library of interactive, scored labs. He regularly contributes to Enable Sysadmin, SearchNetworking, and CompTIA article repositories. Damon has 20 years of experience as a technical trainer covering Linux, Windows Server, and security content. He is a former sysadmin for US Figure Skating. He lives in Colorado Springs with his family and is a writer, musician, and amateur genealogist.
