As an architect, you must design systems that resist intrusion and eavesdropping. To help users, networks, devices, and services communicate privately across your system, you can draw on the standards that the U.S. National Institute of Standards and Technology (NIST) publishes under the Federal Information Security Modernization Act of 2014 (FISMA). FISMA is a federal law that directs NIST to develop these standards and guidelines, which give you a target to work toward when designing your organization's infrastructure. The controls that protect communications are grouped into the System and Communications Protection (SC) family.

NIST SP 800-53, Security and Privacy Controls for Information Systems and Organizations, is particularly relevant. It is designed to help federal agencies protect their information and information systems, and it is widely used as a reference for implementing FISMA requirements. It provides a catalog of security and privacy controls for U.S. federal information systems, except national security systems, which follow separate guidance from the Committee on National Security Systems (CNSS).

NIST SP 800-53 includes control SC-8, Transmission Confidentiality and Integrity. It's critical that you establish a transmission confidentiality and integrity plan for your organization. Your plan must cover encryption in protected environments, including how you establish a publicly trusted public key infrastructure (PKI).

Wildcard certificates, which are public-key certificates that can be used across a domain's subdomains, have long been discouraged by the Internet Engineering Task Force (IETF), according to RFC 6125, Section 7.2. The IETF encourages using multi-domain certificates or designing applications that present the proper certificate based on the Server Name Indication (SNI) field, which carries the hostname the client is trying to reach. Because of this deprecation, wildcard certificates are best reserved for non-production environments.
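To make the difference in scope concrete, the following sketch creates two throwaway self-signed certificates, a wildcard certificate for *.example.com and a multi-domain certificate that lists only www.example.com and api.example.com, and asks OpenSSL which hostnames each one is valid for. It assumes bash and OpenSSL 1.1.1 or later (for `-addext`); the hostnames and the `make_cert` and `covers` helpers are illustrative only.

```bash
#!/usr/bin/env bash
# Sketch: compare the hostnames a wildcard certificate and a multi-domain
# (SAN) certificate are valid for. Names are illustrative; needs OpenSSL 1.1.1+.
set -euo pipefail
dir=$(mktemp -d)

# make_cert NAME CN SANS: write a throwaway self-signed cert to $dir/NAME.crt
make_cert() {
  openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=$2" \
    -addext "subjectAltName=$3" \
    -keyout "$dir/$1.key" -out "$dir/$1.crt" 2>/dev/null
}

# covers NAME HOST: succeed if OpenSSL's hostname check accepts HOST
covers() {
  local out
  out=$(openssl x509 -noout -checkhost "$2" -in "$dir/$1.crt")
  [[ $out == *"does match"* ]]
}

make_cert wildcard "*.example.com" "DNS:*.example.com"
make_cert multi "www.example.com" "DNS:www.example.com,DNS:api.example.com"

for host in www.example.com api.example.com admin.example.com; do
  for cert in wildcard multi; do
    if covers "$cert" "$host"; then
      echo "$cert: valid for $host"
    else
      echo "$cert: NOT valid for $host"
    fi
  done
done
```

The wildcard certificate also vouches for admin.example.com, a host nobody listed, while the multi-domain certificate is valid only for the names it enumerates. A server holding several narrowly scoped certificates can pick the right one for each connection from the SNI value the client sends.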

This article addresses three ways to achieve encryption in protected environments using Red Hat OpenShift in situations where using wildcard certificates is considered unsafe.

What are secure routes and TLS termination?

A route is an OpenShift concept that works with the Kubernetes Ingress resource. It exposes a service by giving it an externally reachable hostname. OpenShift's documentation on configuring routes states, "A route allows you to host your application at a public URL. It can either be secure or unsecured, depending on the network security configuration of your application."

The Ingress Controller consumes a defined route and the endpoints identified by its service to provide named connectivity. When you create a Kubernetes Ingress object instead, OpenShift automatically translates it into a route, so routing through an Ingress object is ultimately backed by routes. Session affinity is enabled by default, and the router uses cookies to persist sessions. (Read Kubernetes 101 for OpenShift developers for more insight on these concepts.)

Secure routes in OpenShift Container Platform let you use several types of transport layer security (TLS) termination to serve certificates to the client. Three types of secure routes are available and described below: edge, reencryption, and pass-through. (The examples below are based on the OpenShift documentation.)

1. Edge routes

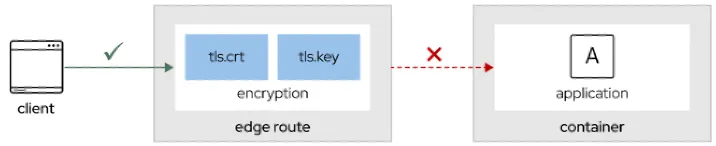

With an edge route, the Ingress Controller (the router) terminates TLS encryption before forwarding traffic to the destination pod. The route specifies the TLS certificate and key that the Ingress Controller uses for the route. If you do not explicitly provide a certificate, the Ingress Controller's default certificate is used.

With edge routes, TLS termination occurs at the router. You configure this with the oc create route edge command in the OpenShift command-line interface (CLI).

Create an edge route with a custom certificate

Use the following prerequisites and procedure to configure an edge route.

Prerequisites

- A certificate/key pair in Privacy Enhanced Mail (PEM)-encoded files, with the certificate valid for the route host

- A separate certificate authority (CA) certificate in a PEM-encoded file that completes the certificate chain

- The name of the service to be exposed
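Before moving on to the procedure, you might confirm that your PEM files actually meet these prerequisites. The following bash sketch defines a hypothetical `check_route_cert` helper (not an OpenShift tool) built from standard OpenSSL commands: it compares the public keys in the certificate and key, checks that the certificate is valid for the route host, and verifies that the CA file completes the chain. The demo at the bottom generates a throwaway CA and leaf certificate so the script runs on its own; with real files you would call the helper directly, for example `check_route_cert tls.crt tls.key ca.crt www.example.com`.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check PEM files before passing them to `oc create route`.
# check_route_cert is a hypothetical helper, not part of oc.
set -euo pipefail

# check_route_cert CERT KEY CA HOST
check_route_cert() {
  local cert=$1 key=$2 ca=$3 host=$4 out
  # 1. The private key must belong to the certificate (same public key).
  if [[ "$(openssl x509 -noout -pubkey -in "$cert")" != "$(openssl pkey -pubout -in "$key")" ]]; then
    echo "key does not match $cert" >&2
    return 1
  fi
  # 2. The certificate must be valid for the route host.
  out=$(openssl x509 -noout -checkhost "$host" -in "$cert")
  if [[ $out != *"does match"* ]]; then
    echo "$cert is not valid for $host" >&2
    return 1
  fi
  # 3. The CA file must complete the chain; -partial_chain also accepts an
  #    intermediate (not only a root) as the last link.
  if ! openssl verify -partial_chain -CAfile "$ca" "$cert" >/dev/null 2>&1; then
    echo "$cert does not chain to $ca" >&2
    return 1
  fi
}

# Demo only: throwaway CA and a leaf certificate for www.example.com.
dir=$(mktemp -d)
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=Demo CA" \
  -keyout "$dir/ca.key" -out "$dir/ca.crt" 2>/dev/null
openssl req -newkey rsa:2048 -nodes -subj "/CN=www.example.com" \
  -keyout "$dir/tls.key" -out "$dir/tls.csr" 2>/dev/null
printf 'subjectAltName=DNS:www.example.com\n' > "$dir/san.ext"
openssl x509 -req -in "$dir/tls.csr" -CA "$dir/ca.crt" -CAkey "$dir/ca.key" \
  -CAcreateserial -days 1 -extfile "$dir/san.ext" -out "$dir/tls.crt" 2>/dev/null

check_route_cert "$dir/tls.crt" "$dir/tls.key" "$dir/ca.crt" www.example.com && echo "files look usable"
```

Comparing public keys rather than RSA moduli keeps the key check independent of the key type.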

Procedure

This procedure creates a route resource with a custom certificate and edge TLS termination. The following assumes that the certificate/key pair is in the tls.crt and tls.key files in the current working directory. You can specify a CA certificate to complete the certificate chain if needed. Substitute the actual path names for tls.crt, tls.key, and (optionally) ca.crt. Substitute the name of the service to be exposed for frontend. Substitute the appropriate hostname for www.example.com.

Create a route resource:

```bash
$ oc create route edge --service=frontend --cert=tls.crt --key=tls.key \
    --ca-cert=ca.crt --hostname=www.example.com
```

The resulting route resource should look similar to the following:

```yaml
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: frontend
spec:
  host: www.example.com
  to:
    kind: Service
    name: frontend
  tls:
    termination: edge
    key: |-
      -----BEGIN PRIVATE KEY-----
      [...]
      -----END PRIVATE KEY-----
    certificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
    caCertificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
```

2. Reencryption routes

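You can use the oc create route command to configure a secure route using reencrypt TLS termination with a custom certificate in OpenShift Container Platform. To do this, use the reencrypt subcommand (oc create route reencrypt) and provide the paths to your custom certificate and key using the --cert and --key flags. With reencryption, the router terminates the client's TLS connection and then opens a new TLS connection to the pod, so traffic is encrypted on both hops.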

Create a reencrypt route with a custom certificate

Use the following prerequisites and procedure to configure a reencrypt route.

Prerequisites

- Certificate/key pair in PEM-encoded files, with the certificate valid for the route host

- Separate CA certificate in a PEM-encoded file that completes the certificate chain

- Separate destination CA certificate in a PEM-encoded file

- Name of the service to be exposed

Procedure

This procedure creates a route resource with a custom certificate and reencrypt TLS termination. The following assumes that the certificate/key pair is in the tls.crt and tls.key files in the current working directory. You must specify a destination CA certificate so that the Ingress Controller can trust the service's certificate. You can also specify a CA certificate to complete the certificate chain if needed. Substitute the actual path names for tls.crt, tls.key, destca.crt, and (optionally) ca.crt. Substitute the name of the service to be exposed for frontend. Substitute the appropriate hostname for www.example.com.
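The destination CA certificate is what lets the router decide whether to trust the certificate the pod serves on the router-to-pod hop. As a rough, offline approximation of that trust decision (not the router's actual implementation), the sketch below builds a throwaway destination CA, a serving certificate for a hypothetical in-cluster name (frontend.myproject.svc), and an unrelated CA, then checks each chain with openssl verify. The `trusts_backend` helper is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: approximate the trust check a reencrypt route needs on the
# router-to-pod hop. All names and files are throwaway/hypothetical.
set -euo pipefail
dir=$(mktemp -d)

# trusts_backend DEST_CA SERVING_CERT: succeed if the pod's certificate
# chains to the destination CA (the file given to --dest-ca-cert).
trusts_backend() {
  openssl verify -CAfile "$1" "$2" >/dev/null 2>&1
}

# Demo only: a destination CA and a serving certificate it issues.
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=Demo destination CA" \
  -keyout "$dir/destca.key" -out "$dir/destca.crt" 2>/dev/null
openssl req -newkey rsa:2048 -nodes -subj "/CN=frontend.myproject.svc" \
  -keyout "$dir/service.key" -out "$dir/service.csr" 2>/dev/null
printf 'subjectAltName=DNS:frontend.myproject.svc\n' > "$dir/san.ext"
openssl x509 -req -in "$dir/service.csr" -CA "$dir/destca.crt" \
  -CAkey "$dir/destca.key" -CAcreateserial -days 1 \
  -extfile "$dir/san.ext" -out "$dir/service.crt" 2>/dev/null

# An unrelated CA, standing in for a wrong --dest-ca-cert file.
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=Unrelated CA" \
  -keyout "$dir/other.key" -out "$dir/other.crt" 2>/dev/null

for ca in destca other; do
  if trusts_backend "$dir/$ca.crt" "$dir/service.crt"; then
    echo "$ca.crt: backend certificate trusted"
  else
    echo "$ca.crt: backend certificate rejected"
  fi
done
```

With real files, the same check would take your destca.crt and the certificate your service actually presents.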

Create a route resource:

```bash
$ oc create route reencrypt --service=frontend --cert=tls.crt --key=tls.key \
    --dest-ca-cert=destca.crt --ca-cert=ca.crt --hostname=www.example.com
```

The resulting route resource should look similar to the following:

```yaml
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: frontend
spec:
  host: www.example.com
  to:
    kind: Service
    name: frontend
  tls:
    termination: reencrypt
    key: |-
      -----BEGIN PRIVATE KEY-----
      [...]
      -----END PRIVATE KEY-----
    certificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
    caCertificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
    destinationCACertificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
```

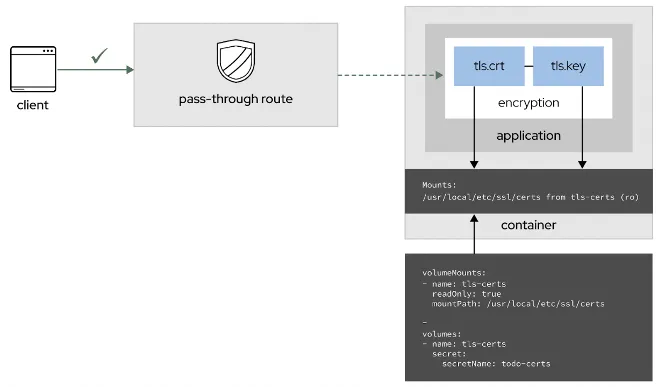

3. Pass-through route

Finally, you can create a secure route using pass-through termination, which sends encrypted traffic straight to the destination without the router providing TLS termination. With this method, no key or certificate is required on the route. Use the oc create route passthrough command to configure the route. The destination pod serves certificates for the traffic at the endpoint. As with reencryption, the connection's full path is encrypted. This is currently the only method that can support requiring client certificates, also known as two-way authentication.

Create a pass-through route

Use the following prerequisites and procedure to configure a pass-through route.

Prerequisites

- Name of the service to be exposed

Procedure

Create a route resource:

```bash
$ oc create route passthrough route-passthrough-secured --service=frontend \
    --port=8080
```

The resulting route resource should look similar to the following:

```yaml
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: route-passthrough-secured
spec:
  host: www.example.com
  port:
    targetPort: 8080
  tls:
    termination: passthrough
    insecureEdgeTerminationPolicy: None
  to:
    kind: Service
    name: frontend
```

Details:

- The object name is route-passthrough-secured and is limited to 63 characters.

- The termination field is set to passthrough. This is the only required tls field.

- insecureEdgeTerminationPolicy is optional. The only valid values are None, Redirect, or empty (disabled).
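The rules in this list are simple enough to check before you apply a manifest. The following sketch is a hypothetical `validate_passthrough_route` bash function (not an oc feature) that encodes the three rules above: the 63-character name limit, the passthrough termination value, and the allowed insecureEdgeTerminationPolicy values.

```bash
#!/usr/bin/env bash
# Sketch: pre-flight checks for the passthrough route fields listed above.
# validate_passthrough_route is a hypothetical helper, not part of oc.
set -euo pipefail

# validate_passthrough_route NAME TERMINATION [INSECURE_POLICY]
validate_passthrough_route() {
  local name=$1 termination=$2 policy=${3:-}
  # The object name is limited to 63 characters.
  if (( ${#name} > 63 )); then
    echo "name is ${#name} characters; the limit is 63" >&2
    return 1
  fi
  # termination is the only required tls field and must be "passthrough".
  if [[ $termination != passthrough ]]; then
    echo "termination must be passthrough, got '$termination'" >&2
    return 1
  fi
  # insecureEdgeTerminationPolicy is optional: None, Redirect, or empty.
  case $policy in
    ""|None|Redirect) ;;
    *)
      echo "invalid insecureEdgeTerminationPolicy '$policy'" >&2
      return 1
      ;;
  esac
}

validate_passthrough_route route-passthrough-secured passthrough None && echo "route fields OK"
```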

Recommended practices

There are four methods for ensuring secure end-to-end TLS:

- Using a reencrypt route with a route-specific or wildcard certificate

- Using a pass-through route with named certificates and certificate management

- Using a Service Mesh ingress gateway and mutual TLS authentication (mTLS)

- Using Keycloak (RH-SSO) and TLS

As illustrated above, the edge secure route method encrypts the connection from the client to the reverse proxy (the router), but traffic from the reverse proxy to the pod remains unencrypted. For this reason, edge termination is often considered not to meet requirements for complete end-to-end encryption.
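One way to apply this in practice is to look for routes whose router-to-pod hop is not encrypted. The following sketch reads one "namespace/name termination" pair per line, for example the output of the oc get jsonpath query in its header comment, and prints edge routes and routes without TLS. The `hop_encrypted` and `audit_routes` function names are hypothetical; the classification follows this article, where reencrypt and pass-through keep the full path encrypted and edge does not.

```bash
#!/usr/bin/env bash
# Sketch: flag routes that do not keep TLS all the way to the pod.
# Expected input, one route per line: "<namespace>/<name> <termination>", e.g.
#   oc get routes -A -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name} {.spec.tls.termination}{"\n"}{end}'
# Routes without spec.tls should show an empty termination.
set -euo pipefail

# hop_encrypted TERMINATION: succeed if traffic stays encrypted up to the pod
hop_encrypted() {
  case $1 in
    reencrypt|passthrough) return 0 ;;  # TLS reaches the pod
    *) return 1 ;;                      # edge (plain HTTP to the pod) or no TLS
  esac
}

# audit_routes: read route lines on stdin; print those that fail the check
audit_routes() {
  local route termination
  while read -r route termination; do
    [[ -n $route ]] || continue
    hop_encrypted "$termination" || echo "$route ${termination:-none}"
  done
}

printf 'shop/frontend edge\nshop/api reencrypt\nshop/db passthrough\nshop/legacy\n' | audit_routes
```

Reading from standard input keeps the classification testable without a cluster; against a live cluster you would pipe the oc output into audit_routes instead of the printf sample.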

Wrap up

My next three articles in this series will explain wildcard certificates, why using EV Multi-Domain certificates is a better choice, and how to use Cert-Manager in OpenShift to manage certificates.

Those articles explore options for architecting and configuring end-to-end encryption for applications hosted on the OpenShift platform. While the general consensus is to discourage the use of wildcard certificates and to favor certain architectures (for example, service mesh with mTLS or pass-through secure routes with named certificates), I hope to start a conversation that helps organizations find the right solution within their own technology landscape and requirements.

About the author

Cheslav Versky got his start in systems engineering by hacking an early programmable calculator with one hundred steps of volatile memory and 15 save registers. He later earned a degree in pure math, served a stint at a pioneering genomics startup, and spent over 20 years designing and implementing high-availability and data protection strategies for many global brands, such as Apple, Visa, and Chevron.

Cheslav is currently an Associate Principal Solution Architect and Team Lead for the Navy/USMC at Red Hat, where he concentrates on helping the Navy better coordinate and operate information systems critical for tactical operations while improving overall efficiency by leveraging the open source way of collaboration to innovate faster.
