As a Solutions Architect, I enjoy creating and adding custom configurations to my Red Hat Enterprise Virtualization (RHEV) environment using a feature called hooks. A hook is a custom script that executes at a specific point during a RHEV event. You can attach scripts to many events. To see the full list of RHEV hooks, list the contents of “/usr/libexec/vdsm/hooks” on a RHEV hypervisor and you will see the directories listed below.

| RHEV Hooks | |
| --- | --- |
| after_device_create | after_vm_set_ticket |
| after_device_destroy | after_vm_start |
| after_device_migrate_destination | before_device_create |
| after_device_migrate_source | before_device_destroy |
| after_disk_hotplug | before_device_migrate_destination |
| after_disk_hotunplug | before_device_migrate_source |
| after_get_all_vm_stats | before_disk_hotplug |
| after_get_caps | before_disk_hotunplug |
| after_get_stats | before_get_all_vm_stats |
| after_get_vm_stats | before_get_caps |
| after_hostdev_list_by_caps | before_get_stats |
| after_ifcfg_write | before_get_vm_stats |
| after_memory_hotplug | before_ifcfg_write |
| after_network_setup | before_memory_hotplug |
| after_network_setup_fail | before_network_setup |
| after_nic_hotplug | before_nic_hotplug |
| after_nic_hotplug_fail | before_nic_hotunplug |
| after_nic_hotunplug | before_set_num_of_cpus |
| after_nic_hotunplug_fail | before_update_device |
| after_set_num_of_cpus | before_vdsm_start |
| after_update_device | before_vm_cont |
| after_update_device_fail | before_vm_dehibernate |
| after_vdsm_stop | before_vm_destroy |
| after_vm_cont | before_vm_hibernate |
| after_vm_dehibernate | before_vm_migrate_destination |
| after_vm_destroy | before_vm_migrate_source |
| after_vm_hibernate | before_vm_pause |
| after_vm_migrate_destination | before_vm_set_ticket |
| after_vm_migrate_source | before_vm_start |
| after_vm_pause | |
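To see which of these event directories actually contain scripts on a given host, something like the following sketch may help. The function name `list_hooks` is my own invention for illustration. The default path is the one mentioned above, and the optional argument lets you point it at a different tree.

```bash
#!/bin/bash
# Sketch: print "event/script" for every executable file under a hooks tree.
# list_hooks is a hypothetical helper name, not part of VDSM.
list_hooks() {
    local hooks_root="${1:-/usr/libexec/vdsm/hooks}"
    local event_dir script
    for event_dir in "$hooks_root"/*/; do
        # An unmatched glob stays literal, so skip anything that is not a directory
        [ -d "$event_dir" ] || continue
        for script in "$event_dir"*; do
            # Report only regular files that have an execute bit set
            [ -f "$script" ] && [ -x "$script" ] || continue
            printf '%s/%s\n' "$(basename "$event_dir")" "$(basename "$script")"
        done
    done
}
```

Running `list_hooks` with no argument on a hypervisor should print one line per installed hook script, grouped by event directory.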

To illustrate the process of using hooks, I am going to provide storage to my virtual cluster by enabling CONNECTED_MODE on the hypervisors. This customized configuration improves the performance of the Infiniband-connected iSCSI storage server. Why do I need a hook for this? RHEV supports iSCSI and Infiniband without customized scripts, but at the moment Red Hat Enterprise Virtualization Manager (RHEV-M) only supports DATAGRAM_MODE for Infiniband, with a maximum MTU of 2044. If you need a larger MTU, the Infiniband iSCSI network has to run in CONNECTED_MODE for best performance.
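The relationship between mode and MTU can be written down as a tiny lookup. This is only a sketch using the two figures quoted in this post (2044 for datagram, 65520 for connected). The function names `max_ipoib_mtu` and `mtu_fits_mode` are hypothetical, and the real limits on your hardware may differ.

```bash
#!/bin/bash
# Sketch: map an IPoIB mode to the maximum MTU quoted in this post.
# Both function names are hypothetical helpers, not system commands.
max_ipoib_mtu() {
    case "$1" in
        datagram)  echo 2044 ;;
        connected) echo 65520 ;;
        *) echo "unknown IPoIB mode: $1" >&2; return 1 ;;
    esac
}

# mtu_fits_mode MODE MTU: succeeds when MTU does not exceed the limit for MODE
mtu_fits_mode() {
    local limit
    limit=$(max_ipoib_mtu "$1") || return 1
    [ "$2" -le "$limit" ]
}
```

For example, `mtu_fits_mode datagram 65520` fails, which is exactly the situation this post sets out to fix.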

Configuring RHEV Networking to use the Infiniband Device for iSCSI Storage

I will assume that you have an Infiniband switch, cables, and cards already installed in your hypervisors, and that you have an iSCSI storage server connected to the Infiniband network using IPoIB. You have also created the iSCSI logical network in RHEV-M’s Networks tab. To complete the configuration, follow these steps:

- In Maintenance mode, install the following packages on the hypervisor and add a module alias for ib0:

- [root@rhev2 ~]# yum -y install rdma ibutils libibverbs-utils infiniband-diags

- [root@rhev2 ~]# echo "alias ib0 ib_ipoib" > /etc/modprobe.d/ipoib.conf

- Reboot.

- Check the status of your Infiniband device with the “ibstat” and “ibstatus” commands. The device should be physically connected, and the ibstat output should look like the following:

[root@rhev2 ~]# ibstat

CA 'mthca0'

CA type: MT25204

Number of ports: 1

Firmware version: 1.1.0

Hardware version: a0

Node GUID: 0x0005ad00000c5a30

System image GUID: 0x0005ad00000c5a33

Port 1:

State: Active

Physical state: LinkUp

Rate: 20

Base lid: 7

LMC: 0

SM lid: 1

Capability mask: 0x02590a68

Port GUID: 0x0005ad00000c5a31

Link layer: InfiniBand


- In RHEV-M, click on Hosts, then open the Network Interfaces screen. Here you can finish the configuration.

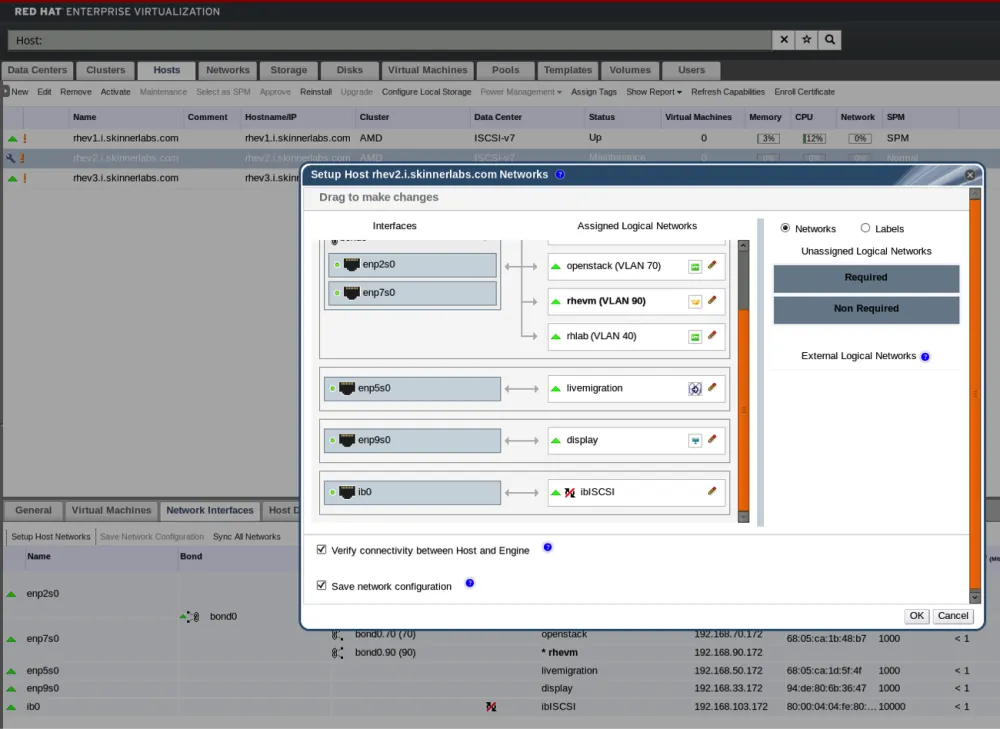

- Follow the steps in the Setup Host Networks screen, assigning your ib0 device an IP address and your predefined iSCSI logical network.

- Look at the /etc/sysconfig/network-scripts/ifcfg-ib0 file on your RHEV hypervisor to confirm that VDSM has created the correct Infiniband device configuration. You can confirm which mode your Infiniband card is running in by reading the /sys/class/net/ib0/mode file:

[root@rhev2 ~]# cat /sys/class/net/ib0/mode

The output should say datagram.
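If you have several hosts or devices to check, the same lookup can be wrapped in a small function. This is a minimal sketch. The name `ipoib_mode` is hypothetical, and the optional second argument (an alternative sysfs directory) exists only so the function can be tried against a fake directory tree.

```bash
#!/bin/bash
# Sketch: print the IPoIB mode of a device by reading its sysfs "mode" file.
# ipoib_mode is a hypothetical helper; usage: ipoib_mode DEVICE [SYSFS_NET_DIR]
ipoib_mode() {
    local dev="$1" sysfs_net="${2:-/sys/class/net}"
    local mode_file="$sysfs_net/$dev/mode"
    if [ ! -r "$mode_file" ]; then
        # Missing file: the device does not exist or is not an IPoIB interface
        echo "cannot read $mode_file" >&2
        return 1
    fi
    cat "$mode_file"
}
```

On the hypervisor in this walkthrough, `ipoib_mode ib0` would be expected to print datagram at this stage.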

This is a working configuration and everything runs as expected, but we want to optimize it. To fine-tune the configuration, edit the iSCSI logical network in RHEV-M’s Networks tab and set a custom MTU of 65520.

Perfect, but there is a problem. Since we told RHEV-M to change the MTU for that network, it attempted to do so by adding “MTU=65520” to the /etc/sysconfig/network-scripts/ifcfg-ib0 file. However, we are still in DATAGRAM_MODE, which only supports an MTU of up to 2044, so our actual MTU is still 2044.

You can confirm this by running the following command on your hypervisor:

[root@rhev2 ~]# ip addr | grep ib0

7: ib0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 2044 qdisc pfifo_fast state UP qlen 256

inet 192.168.103.173/24 brd 192.168.103.255 scope global ib0

RHEV-M will also show an error on our host, as seen in Image II below. It complains because the hypervisor’s ib0 device has an MTU of 2044 while the iSCSI logical network in the data center has been configured with an MTU of 65520.
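The same mismatch can be detected from the command line by comparing the MTU requested in the ifcfg file with the MTU the device actually has. The sketch below assumes the file contains a plain `MTU=` line, as described above. The helper names `configured_mtu` and `mtu_mismatch` are hypothetical.

```bash
#!/bin/bash
# Sketch: compare the MTU requested in an ifcfg file with the live MTU.
# Both helpers are hypothetical names used for illustration.

# configured_mtu IFCFG_FILE: print the value of the last MTU= line, if any
configured_mtu() {
    sed -n 's/^MTU=//p' "$1" | tail -n 1 | tr -d '"'
}

# mtu_mismatch IFCFG_FILE ACTUAL_MTU: succeeds when an MTU is configured
# and it differs from the actual value
mtu_mismatch() {
    local want
    want=$(configured_mtu "$1")
    [ -n "$want" ] && [ "$want" != "$2" ]
}
```

On the hypervisor you could feed it the live value, for example `mtu_mismatch /etc/sysconfig/network-scripts/ifcfg-ib0 "$(cat /sys/class/net/ib0/mtu)" && echo "MTU out of sync"`.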

Even if we try to use RHEV-M to fix the error, there are currently no Infiniband mode options in the Network Interfaces → Setup Host Networks drag-and-drop wizard, as seen in Image III below.

Image III

The fix is straightforward and a great use case for RHEV hooks. We need to convince VDSM to add two simple options to our /etc/sysconfig/network-scripts/ifcfg-ib0 file.

"TYPE=Infiniband"

"CONNECTED_MODE=yes"

If you look at the network-related events in /usr/libexec/vdsm/hooks you will currently find three.

after_network_setup

after_network_setup_fail

before_network_setup

We need to decide where to install our hook script. Since our hook script modifies the /etc/sysconfig/network-scripts/ifcfg-ib0 file, we cannot use the before_network_setup event, because VDSM would overwrite our changes when it sets up the network. The after_network_setup_fail event is better suited to failure recovery or notification scripts. That leaves the after_network_setup event.

Below is a simple bash script that updates /etc/sysconfig/network-scripts/ifcfg-ib0 with the settings that allow the device to support CONNECTED_MODE. It has some basic error checking and, as you can see, is hard-coded to the ib0 device only. Hook scripts need to exit with either 1 for an error or 0 for success. Once we update the file, we bounce the device for the new options to take effect. This is done only once, when the hook script runs and finds that the device is not yet in connected mode.

#!/bin/bash

# SCRIPT Name: 01-ib-connected-mode.sh

# DESCRIPTION: VDSM hook to force Infiniband mode from default datagram to connected mode

# REQUIREMENTS: Configure MTU in Network tab of RHEV-M with Custom MTU - should be 65520 for CONNECTED_MODE

# LOCATION: /usr/libexec/vdsm/hooks/after_network_setup

# Only act when ib0 is not already in connected mode

if ! grep -Fxq connected /sys/class/net/ib0/mode

then

    echo "TYPE=Infiniband" >> /etc/sysconfig/network-scripts/ifcfg-ib0

    echo "CONNECTED_MODE=yes" >> /etc/sysconfig/network-scripts/ifcfg-ib0

    # Bounce the device so the new options take effect

    /usr/sbin/ifdown ib0

    /usr/sbin/ifup ib0

    # Use exit, not return: the hook runs as a standalone script

    if grep -Fxq connected /sys/class/net/ib0/mode

    then

        exit 0

    else

        exit 1

    fi

else

    exit 0

fi
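The script above appends its two options with `>>`, so a run that fails to reach connected mode will append them again the next time. One possible variation, shown here only as a sketch and not as part of the original hook, is a helper that rewrites the file so each key appears exactly once. The name `ensure_option` is hypothetical.

```bash
#!/bin/bash
# Sketch: set KEY=VALUE in a config file so the key appears exactly once.
# ensure_option is a hypothetical helper; usage: ensure_option FILE KEY VALUE
ensure_option() {
    local file="$1" key="$2" value="$3" tmp
    tmp=$(mktemp) || return 1
    # Keep every line except existing assignments of KEY
    grep -v "^${key}=" "$file" > "$tmp"
    # Append the wanted assignment
    echo "${key}=${value}" >> "$tmp"
    # Write back through the original file so its permissions are preserved
    cat "$tmp" > "$file" && rm -f "$tmp"
}
```

In the hook, the two `echo` lines could then become `ensure_option /etc/sysconfig/network-scripts/ifcfg-ib0 TYPE Infiniband` and `ensure_option /etc/sysconfig/network-scripts/ifcfg-ib0 CONNECTED_MODE yes`.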

Then follow these steps:

- Copy the hook into the following folder on your hypervisor while in maintenance mode: /usr/libexec/vdsm/hooks/after_network_setup

MAKE SURE THE SCRIPT IS EXECUTABLE!

[root@rhev2 ~]# chmod +x /usr/libexec/vdsm/hooks/after_network_setup/01-ib-connected-mode.sh

- Reboot the server to make sure everything starts up as expected.

- Once your hypervisor host has been activated, you should get the following output from these two commands on your RHEV hypervisor:

[root@rhev2 ~]# cat /sys/class/net/ib0/mode

connected

and

[root@rhev2 ~]# ip addr | grep ib0

7: ib0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65520 qdisc pfifo_fast state UP qlen 256

inet 192.168.103.173/24 brd 192.168.103.255 scope global ib0
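The copy and chmod steps from the list above can also be done in one go with the standard `install` command, which copies a file and sets its mode at the same time. The wrapper below is a sketch. The name `install_hook` is hypothetical, and the optional third argument is there only so it can be tried against a scratch directory.

```bash
#!/bin/bash
# Sketch: copy a hook script into an event directory and make it executable.
# install_hook is a hypothetical helper; usage: install_hook SCRIPT EVENT [HOOKS_ROOT]
install_hook() {
    local script="$1" event="$2" hooks_root="${3:-/usr/libexec/vdsm/hooks}"
    local dest_dir="$hooks_root/$event"
    if [ ! -d "$dest_dir" ]; then
        # Refuse to invent event directories
        echo "no such hook event directory: $dest_dir" >&2
        return 1
    fi
    # install copies the file and applies mode 0755 in a single step
    install -m 0755 "$script" "$dest_dir/" || return 1
    # Confirm the installed copy really is executable
    [ -x "$dest_dir/$(basename "$script")" ]
}
```

For the hook in this post, that would be `install_hook 01-ib-connected-mode.sh after_network_setup`, run as root on the hypervisor while it is in maintenance mode.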

If you look at the RHEV-M network settings, the iSCSI device has successfully synced, as shown below in Image IV.

Image IV

Image V below shows which Host Hooks have been applied to your hypervisor. To see the list, select the host hypervisor and click on the Host Hooks tab.

Image V

The three 50_vmfex hooks support Cisco VM-FEX hardware. They were introduced in RHEV 3.6 and only execute when VM-FEX hardware is present, so they can be ignored or removed if you use other hardware.

A lot is possible with hooks, which let you easily extend features and functionality within RHEV. Note: hooks are not officially supported, but users can get help in the thriving community forums. Stay tuned for my upcoming blog posts, “Building a RHEL6 or RHEL7 iSCSI Server” and “Optimizing a RHEL7 iSCSI Server With DM-Cache”. Please follow the links below for more information:

- Download RHEV

- RHEV Documentation

- RHEL Infiniband modes can be found in section 9.6.2 of this document
