In the past, building a fault-tolerant, secure multi-master MySQL service was cumbersome. It required several steps and dependent packages. Replication configuration, data synchronization, and multiple configuration files added to the complexity. Building a solution on a hardened OS like Red Hat Enterprise Linux (RHEL) 8 and using a professionally packaged multi-master MySQL distribution from Percona makes it easy. This guide shows you how.

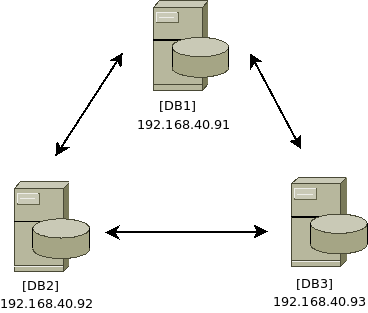

Architectural diagram:

[ You might also enjoy: Keepalived and high availability: Advanced topics ]

Install the cluster software

Run the following commands on all three servers (DB1, DB2, and DB3):

# yum -y install https://repo.percona.com/yum/percona-release-latest.noarch.rpm

# percona-release enable-only pxc-80 release

# percona-release enable tools release

# yum repolist

Updating Subscription Management repositories.

repo id repo name

prel-release-noarch Percona Release release/noarch YUM repository

pxc-80-release-x86_64 Percona XtraDB Cluster 8.0 release/x86_64 YUM repository

rhel-8-for-x86_64-appstream-rpms Red Hat Enterprise Linux 8 for x86_64 - AppStream (RPMs)

rhel-8-for-x86_64-baseos-rpms Red Hat Enterprise Linux 8 for x86_64 - BaseOS (RPMs)

satellite-tools-6.8-for-rhel-8-x86_64-rpms Red Hat Satellite Tools 6.8 for RHEL 8 x86_64 (RPMs)

tools-release-x86_64 Percona Tools release/x86_64 YUM repositoryBecause the RHEL 8 repositories include a version of MySQL, you must disable access to them for the Percona installation to complete successfully.

# yum -y module disable mysql

# yum -y install percona-xtradb-clusterConfigure the cluster

On the DB1 server, start the database service to access it via the MySQL client in order to update the default password and allow any host to remotely connect for management:

# systemctl start mysql

# grep 'temporary password' /var/log/mysqld.log

# mysql -u root -p

Change default root password with ALTER command:

# mysql> ALTER USER 'root'@'localhost' IDENTIFIED BY 'redhatPERCONA';Enable login from any host with UPDATE command:

# mysql> UPDATE mysql.user SET host='%' WHERE user='root';Exit MySQL administration:

# mysql> exitOn the DB1 server, stop the database service:

# systemctl stop mysqlOn the DB2 and DB3 servers, start/stop the MySQL service to create the initial file structures:

# systemctl start mysql

# systemctl stop mysqlOn all database servers in the cluster (DB1, DB2, and DB3) ensure the configuration file /etc/my.cnf contains the following unique fields:

wsrep_cluster_address=gcomm://

wsrep_cluster_name=

wsrep_node_name=Add each database server’s IP address. This is how the cluster communicates and to whom:

wsrep_cluster_address=gcomm://192.168.40.91,192.168.40.92,192.168.40.93Add a unique cluster name. This needs to be the same on all servers in the cluster. This will identify the cluster from other clusters:

wsrep_cluster_name=pxc-clusterAdd a unique node name and its IP address. The cluster must have a way to identify each node:

wsrep_node_name=pxc-cluster-node-db1

wsrep_node_address=192.168.40.91Run this command on each database server to make sure the settings you made are correct:

[DB1]

# grep -e address -e cluster /etc/my.cnf | grep -v \#

wsrep_cluster_address=gcomm://192.168.40.91,192.168.40.92,192.168.40.93

wsrep_node_address=192.168.40.91

wsrep_cluster_name=pxc-cluster

wsrep_node_name=pxc-cluster-node-db1

[DB2]

# grep -e address -e cluster /etc/my.cnf | grep -v \#

wsrep_cluster_address=gcomm://192.168.40.91,192.168.40.92,192.168.40.93

wsrep_node_address=192.168.40.92

wsrep_cluster_name=pxc-cluster

wsrep_node_name=pxc-cluster-node-db2

[DB3]

# grep -e address -e cluster /etc/my.cnf | grep -v \#

wsrep_cluster_address=gcomm://192.168.40.91,192.168.40.92,192.168.40.93

wsrep_node_address=192.168.40.93

wsrep_cluster_name=pxc-cluster

wsrep_node_name=pxc-cluster-node-db3Start the cluster

Once the configuration looks good, the next step is to bootstrap the DB1 server. This is done with a special service called mysql@bootstrap.service:

# systemctl start mysql@bootstrap.serviceLogin to check the status with the following commands:

# mysql -u root -p

# mysql> show status like 'wsrep%';Look for wsrep_cluster_size, which should be 1, since the only server in the cluster right now is DB1.

The bootstrap service generates the TLS security certificates used for secure cluster communications. Copy these files to the /var/lib/mysql on the other two database servers: server-key.pem,ca.pem, and server-cert.pem

# cd /var/lib/mysql

# scp server-key.pem server-cert.pem ca.pem db2:/var/lib/mysql/

# scp server-key.pem server-cert.pem ca.pem db3:/var/lib/mysql/

Configure the firewall for secure cluster operations

The Percona cluster requires the following four ports to be open to function properly: 3306, 4444, 4567, and 4568. Run these firewall commands on all three servers (DB1, DB2, and DB3) to configure them.

Ensure that the firewalld service is running:

# systemctl enable firewalld

# systemctl start firewalldThe default zone is public, so unless you have changed it, you can add the required four ports this way:

# firewall-cmd --add-port 3306/tcp --zone=public --permanent

# firewall-cmd --add-port 4444/tcp --zone=public --permanent

# firewall-cmd --add-port 4567/tcp --zone=public --permanent

# firewall-cmd --add-port 4568/tcp --zone=public --permanentOr, if you prefer, add multiple ports all at once from a single command:

# firewall-cmd --add-port={3306/tcp,4444/tcp,4567/tcp,4568/tcp} --zone=public --permanent

Reload the firewalld service with the following command and then list the ports to confirm the service is configured as expected:

# firewall-cmd --reload

# firewall-cmd --list-ports

3306/tcp 4444/tcp 4567/tcp 4568/tcpYou can find more information on the RHEL 8 firewall here.

Grow the cluster

Now it is time to add more servers to the existing cluster of one that is currently running after being bootstrapped. The goal is to add both DB2 and DB3 to the cluster running on DB1.

One at a time, start the MySQL service on each server, waiting for it to finish before moving to the next server.

On DB2, type:

# systemctl start mysqlDo the same on DB3:

# systemctl start mysqlOn DB1, tail the mysql.log file to watch for new servers joining the cluster. This will let you know if things are working as expected or if errors are occurring during the cluster join phase:

# tail -f /var/log/mysqld.log | grep db3

2021-04-08T17:48:25.892746Z 0 [Note] [MY-000000] [Galera] STATE EXCHANGE: got state msg: 9ea100f1-9892-11eb-af

0e-66802999478c from 0 (pxc-cluster-node-db3)

0: 9e07f773-9892-11eb-a4b8-7bfcd02aca9e, pxc-cluster-node-db3

2021-04-08T17:48:26.405209Z 0 [Note] [MY-000000] [Galera] Member 0.0 (pxc-cluster-node-db3) requested state tr

ansfer from '*any*'. Selected 1.0 (pxc-cluster-node-db1)(SYNCED) as donor.

2021-04-08T17:48:41.023324Z 0 [Note] [MY-000000] [Galera] 1.0 (pxc-cluster-node-db1): State transfer to 0.0 (p

xc-cluster-node-db3) complete.

2021-04-08T17:48:46.677727Z 0 [Note] [MY-000000] [Galera] 0.0 (pxc-cluster-node-db3): State transfer from 1.0

(pxc-cluster-node-db1) complete.

2021-04-08T17:48:46.678022Z 0 [Note] [MY-000000] [Galera] Member 0.0 (pxc-cluster-node-db3) synced with group.In the log file output above, you can see that DB3 joined the cluster, requested state transfer, and successfully synced its data from DB1. Another way to verify the cluster size is to log into any server that is a cluster member and run these commands:

# mysql -u root -p

# mysql> show status like 'wsrep%';Look for wsrep_cluster_size, which should be 3, since all three servers (DB1, DB2, and DB3) have joined the cluster now.

Look for wsrep_incoming_addresses, which should show all three server IP addresses. Again, this is another way to confirm all servers are in the cluster and communicating correctly.

Testing the clusters

Now that you have a three-node cluster up and running, you need to test the active-active-active capabilities.

On server DB1, create a new database with the following command:

# mysql -u root -p

# mysql> create database myACTIVEdb;On server DB2 or DB3, run the following commands to check if the newly created database shows up:

# mysql -u root -p

# mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| myACTIVEdb |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)The new database should show up almost immediately. Regardless of which server writes are performed, the data is replicated to the other database servers. Congratulations, you now have a three-node, active-active-active MySQL Percona server running on RHEL 8!

Cluster maintenance

From time to time, one of the database servers might need maintenance that requires a reboot. You should always shut down the MySQL service properly before rebooting:

# systemctl stop mysqlThis command can take some time, as it prepares the cluster to be in a disrupted state. Let it shut down properly to sync and update the cluster status on all servers.

Reboot the server under maintenance, and then rejoin the cluster with the following command:

# systemctl start mysqlThe health of the cluster is dependent on keeping quorum. Remember always to keep one more than half the size of the cluster up and running. For a cluster of three, a quorum would be two. For a cluster of five, a quorum would be three. Growing the cluster from three to five is easy; just repeat the steps outlined above. It is advisable to have an odd number of servers in the cluster, making quorum easier to manage.

If the entire cluster is off and needs to be restarted, remember to select one server to initiate the cluster. That is done with the bootstrap process. For example, on DB1:

# systemctl start mysql@bootstrap.serviceOn the other two servers, join them once the bootstrap process has completed, with the following command:

# systemctl start mysqlThe cluster is now up and operational.

SELinux

RHEL 8 ships with SELinux in Enforcing mode. Red Hat, Percona, and MySQL have done a good job with SELinux working out of the box in Enforcing mode. No changes need to be made. You can find more details on Percona's website if changes to certain Percona configuration parameters need to be modified.

[ Get this free ebook: Managing your Kubernetes clusters for dummies. ]

Wrap up

RHEL 8 provides a platform for database workloads that is both supportable and offers great performance. Percona has done a nice job of packaging up an easy-to-deploy multi-master implementation of MySQL server with security details in mind.

About the author

More like this

More than meets the eye: Behind the scenes of Red Hat Enterprise Linux 10 (Part 6)

More than meets the eye: Behind the scenes of Red Hat Enterprise Linux 10 (Part 5)

OS Wars_part 1 | Command Line Heroes

OS Wars_part 2: Rise of Linux | Command Line Heroes

Browse by channel

Automation

The latest on IT automation for tech, teams, and environments

Artificial intelligence

Updates on the platforms that free customers to run AI workloads anywhere

Open hybrid cloud

Explore how we build a more flexible future with hybrid cloud

Security

The latest on how we reduce risks across environments and technologies

Edge computing

Updates on the platforms that simplify operations at the edge

Infrastructure

The latest on the world’s leading enterprise Linux platform

Applications

Inside our solutions to the toughest application challenges

Virtualization

The future of enterprise virtualization for your workloads on-premise or across clouds