What is post-quantum cryptography?

A new type of computer, the quantum computer, is being developed that, once it is large enough, could break many of our existing cryptographic algorithms. As a result, we need to develop new algorithms that are secure against those computers but still run on our existing computers. This is called “post-quantum cryptography”.

What is a quantum computer?

In 1981, Richard Feynman proposed a new way to model quantum interactions in complex systems. The problem with modeling these interactions is that we need to represent linked particles as a set of probabilities, one for every possible combination of the particles’ states. Each particle we add multiplies the number of combinations, so these arrays grow exponentially. For any sufficiently large system, existing computers can no longer meet the storage and time requirements.
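To make that growth concrete, here is a minimal Python sketch. It assumes each particle is a two-level system (like a qubit) and that each amplitude is stored as a 16-byte complex double. Combining particles takes a tensor (Kronecker) product, so the length of the state array doubles with every particle added. The helper names are illustrative, not from any library.

```python
def kron(a: list[complex], b: list[complex]) -> list[complex]:
    """Tensor (Kronecker) product of two state vectors."""
    return [x * y for x in a for y in b]


def state_vector_bytes(n_particles: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector of n two-level particles.

    Assumption: one 16-byte complex double per amplitude.
    """
    return (2 ** n_particles) * bytes_per_amplitude


# Build up a state one particle at a time, each particle starting in state |0>.
state = [1 + 0j]
for n in range(1, 6):
    state = kron(state, [1 + 0j, 0j])
    print(f"{n} particles -> {len(state)} amplitudes")

# Extrapolate the memory a full simulation would need for larger systems.
for n in (30, 50, 100):
    print(f"{n} particles -> {state_vector_bytes(n):.3e} bytes")
```

At 30 particles the array already needs 16 GiB; at 50 it needs about 16 PiB, far beyond the memory of existing computers.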

Feynman’s suggestion is simple: Build a computer using entangled quantum objects to model the physical object we are interested in. Such a computer could also efficiently handle other tasks, provided we could figure out how to take advantage of changing entangled quantum states.

What is a qubit?

The idea behind a quantum computer is to replace our classical bits with “qubits”. A classical bit is either 0 or 1, while a qubit has some probability of being measured as 0 or as 1; its state is usually represented by a unit vector in three-dimensional space (the Bloch sphere). The power of a quantum computer comes not from a single qubit, but from many qubits entangled with each other. If you can devise an algorithm in which these qubits interfere with each other so that wrong answers cancel out and the solution to your problem is reinforced, then measuring the qubits yields that solution with high probability.
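A single qubit can be sketched in a few lines of Python. This is a minimal illustration assuming the standard textbook model: the state is α|0⟩ + β|1⟩ with complex amplitudes satisfying |α|² + |β|² = 1, the measurement probabilities are |α|² and |β|², and the Bloch-sphere vector is (2·Re(α*β), 2·Im(α*β), |α|² − |β|²). The Hadamard gate is included to show interference; the function names are hypothetical, and the sketch covers one qubit only, not entanglement.

```python
import math


def probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Chance of measuring 0 and 1 for the state alpha|0> + beta|1>."""
    return abs(alpha) ** 2, abs(beta) ** 2


def bloch_vector(alpha: complex, beta: complex) -> tuple[float, float, float]:
    """Map a normalized qubit state to its unit vector on the Bloch sphere."""
    cross = alpha.conjugate() * beta
    return (2 * cross.real, 2 * cross.imag, abs(alpha) ** 2 - abs(beta) ** 2)


def hadamard(alpha: complex, beta: complex) -> tuple[complex, complex]:
    """Hadamard gate: mixes |0> and |1> so that amplitudes can interfere."""
    s = 1 / math.sqrt(2)
    return s * (alpha + beta), s * (alpha - beta)


zero = (1 + 0j, 0j)              # the classical-looking state |0>
plus = hadamard(*zero)           # an equal superposition of 0 and 1
print(probabilities(*plus))      # roughly (0.5, 0.5)
print(bloch_vector(*plus))       # roughly (1.0, 0.0, 0.0)

# Applying the gate again makes the two paths to |1> cancel: interference.
back = hadamard(*plus)
print(probabilities(*back))      # roughly (1.0, 0.0)
```

The second Hadamard returns the qubit to |0⟩ with certainty even though the intermediate state was a 50/50 mix; quantum algorithms arrange this kind of cancellation on a much larger scale.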

What do quantum computers have to do with cryptography?

When Feynman proposed quantum computers, such a machine was beyond anyone’s ability to build, but researchers nonetheless studied not only how to build such a computer but also how it could be used.

In 1994, Peter Shor identified an algorithm that could use a quantum computer to break the RSA and Diffie-Hellman cryptographic systems. Shor’s algorithm was later extended to break ECC (Elliptic Curve Cryptography) as well. These algorithms are the building blocks for nearly all of the public key exchange and digital signature algorithms we use today. From that time on, we knew our primary public key systems were secure only until someone built a sufficiently large, working quantum computer. (There are quantum computers today that you can rent time on, but they are still too small to be a risk to our existing algorithms.)
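Most of Shor’s factoring algorithm is classical number theory; the quantum computer’s only job is to find the order (period) r of a number a modulo N, the smallest r with aʳ ≡ 1 (mod N). The Python sketch below follows that classical skeleton but replaces the quantum step with a brute-force search, which takes time exponential in the size of N. That search is exactly the step a large quantum computer would make fast. The function names are hypothetical, and the sketch assumes N is an odd composite that is not a prime power.

```python
import math
import random


def find_order(a: int, n: int) -> int:
    """Stand-in for the quantum step: smallest r > 0 with a**r % n == 1.

    Assumes gcd(a, n) == 1. Classically this loop is exponentially slow
    for large n; Shor's algorithm finds r efficiently on a quantum computer.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_factor(n: int, attempts: int = 20, seed: int = 0):
    """Classical skeleton of Shor's reduction from factoring to order finding."""
    rng = random.Random(seed)
    for _ in range(attempts):
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:
            return g, n // g          # a lucky guess already shares a factor
        r = find_order(a, n)
        if r % 2 == 1:
            continue                  # odd order: try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue                  # a**(r/2) == -1 mod n: try another a
        p = math.gcd(y - 1, n)        # y*y == 1 mod n, so y - 1 shares a factor
        if 1 < p < n:
            return p, n // p
    return None


print(shor_factor(15))
print(shor_factor(21))
```

For RSA-2048, N has 617 decimal digits, so the brute-force `find_order` loop could never finish on a classical computer; everything else in the sketch would still run quickly.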

With our classical algorithms potentially broken, we will need new algorithms based on problems that quantum computers can’t easily solve, which is where post-quantum cryptography comes in. These algorithms run on existing computers and are based on problems that are difficult for both classical and quantum computers to solve.

Why should you care about post-quantum cryptography?

Cryptography is pervasive in our modern world. When you enter your credit card number on the web, that communication is protected by an encrypted channel that depends on both digital signing (to make sure you are giving your credit card number to the correct vendor) and public key exchange (to agree on a set of keys the client and server use to encrypt your communication). If a sufficiently large quantum computer were built, none of the security guarantees you normally get from Transport Layer Security (TLS) could be relied on. Some forms of disk encryption also use public keys to provide recovery in case your users forget their passwords.
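The key-agreement half of that channel can be sketched with classic finite-field Diffie-Hellman and tiny textbook numbers. This is a toy under stated assumptions: the parameters P = 23 and G = 5 are far too small to be secure (real deployments use groups of 2048 bits or more, or elliptic curves such as X25519), and the function names are hypothetical rather than any TLS library’s API. The brute-force discrete logarithm at the end stands in for what Shor’s algorithm does efficiently at real key sizes.

```python
# Toy public parameters: far too small for real use (assumption for illustration).
P = 23  # prime modulus
G = 5   # generator of the multiplicative group mod P


def public_key(private: int) -> int:
    """Public value sent over the network: G**private mod P."""
    return pow(G, private, P)


def shared_secret(their_public: int, my_private: int) -> int:
    """Both sides compute G**(a*b) mod P without ever sending it."""
    return pow(their_public, my_private, P)


def brute_force_discrete_log(target: int) -> int:
    """Find x with G**x % P == target by trying every exponent.

    Infeasible classically at real sizes; Shor's algorithm makes it fast.
    """
    for x in range(P - 1):
        if pow(G, x, P) == target:
            return x
    raise ValueError("target is not a power of G")


client_private, server_private = 4, 3          # normally chosen at random
client_public = public_key(client_private)     # sent in the clear
server_public = public_key(server_private)     # sent in the clear

client_key = shared_secret(server_public, client_private)
server_key = shared_secret(client_public, server_private)
assert client_key == server_key  # both sides agree on the same key

# An eavesdropper who can solve discrete logs recovers the same key.
recovered = brute_force_discrete_log(client_public)
print(shared_secret(server_public, recovered) == client_key)  # True
```

This is also why recorded traffic is at risk: the eavesdropper needs only the public values that crossed the network.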

For the average person, one of the most important consequences of all this is the need to identify which of the systems they use could be vulnerable. This is especially important for corporate IT.

When do you need to care?

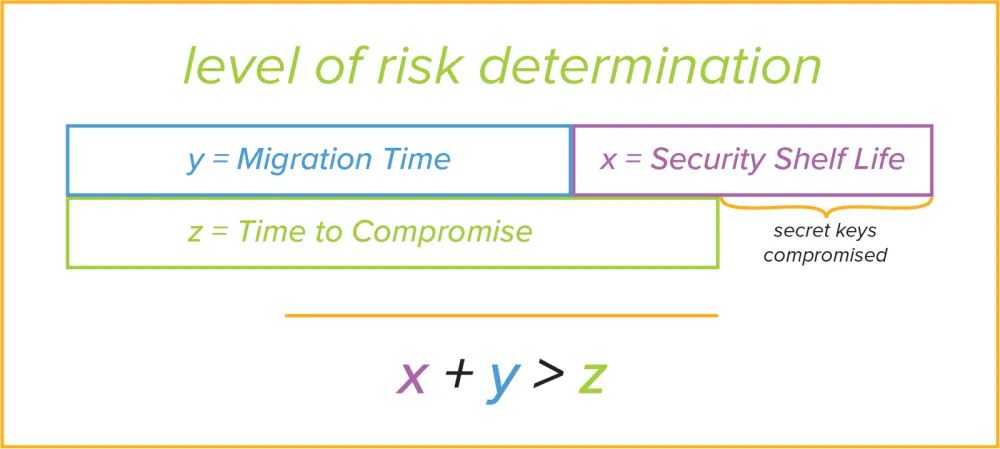

Nearly every talk and article on post-quantum cryptography answers this question with Mosca’s Theorem:

If the sum of the time you need the secret to be kept (x) and the time to migrate to the new algorithm (y) is greater than the time left before we have a quantum computer that can break our public key algorithm (z), that is, if x + y > z, then your data will be compromised before its usefulness expires. The problem is that there is uncertainty in many of these numbers.
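Mosca’s condition x + y > z is simple enough to encode directly. The Python sketch below is a minimal illustration; the class name is hypothetical, and every number in the usage loop is a made-up assumption rather than a forecast. A single value of z also hides the uncertainty described here, so in practice you might evaluate several values of z.

```python
from dataclasses import dataclass


@dataclass
class MoscaEstimate:
    shelf_life_years: float   # x: how long the data must stay secret
    migration_years: float    # y: how long moving to new algorithms takes
    years_to_quantum: float   # z: time until a capable quantum computer exists

    def at_risk(self) -> bool:
        """Mosca's inequality: data is exposed if x + y > z."""
        return self.shelf_life_years + self.migration_years > self.years_to_quantum

    def margin_years(self) -> float:
        """Negative means the data is still sensitive when z arrives."""
        return self.years_to_quantum - (self.shelf_life_years + self.migration_years)


# Hypothetical numbers: the same migration plan applied to two kinds of data.
for label, x in (("credit card", 3), ("medical record", 25)):
    estimate = MoscaEstimate(shelf_life_years=x, migration_years=5, years_to_quantum=15)
    print(label, estimate.at_risk(), estimate.margin_years())
```

With these assumed values, the short-lived credit card data has seven years to spare, while the medical record is exposed by fifteen years.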

The time you need to keep the secret (x) is usually known based on the application. For your credit card on the internet, for example, this would be maybe two or three years depending on your card’s expiration date. For medical data, on the other hand, it could be decades.

This is further complicated by the fact that some entities (government and private) have begun recording TLS sessions, so even though a TLS session is short-lived, the data in that connection may be stored and decrypted in the future. For example, if you are doing aid work in an authoritarian country and working with people who could be arrested for working with you, you probably don’t want to trust a VPN or a TLS connection to carry their names or other identifying information. This time value is under your control, but you need to evaluate how long you need to keep certain data secret and from what kinds of entities.

The time to deploy (y) can be lengthy, starting with standards and then moving on to actual deployment; in some instances it could take decades. The cryptographic community has been working on new standards proposals for years, and we only now expect them to be standardized. The only control you have here is how quickly you deploy new algorithms and protocols on your systems once they are available.

The greatest unknown at this point, however, is the time before we have quantum computers that can break our existing algorithms (z). In 2015, Michael Mosca, a quantum computing researcher at the University of Waterloo, estimated that there was a 1/7 chance that 2048-bit RSA would be vulnerable by 2026 and a 50% chance it would be vulnerable by 2031. He updated that estimate in 2017 to a 1/6 chance that 2048-bit RSA will be compromised by 2027. Research into quantum computers has accelerated: companies like IBM and Google have been building small, experimental quantum computers to help solve some of the intractable problems they and their customers have.

The upshot is this: There are some things you need to care about right now, but others you don’t have to worry about until we have post-quantum algorithms deployed.

Why aren’t post-quantum algorithms deployed already?

The cryptographic community has known about these issues for a while. The good news is that there are several new algorithms that can replace our existing key exchange and signature algorithms. The bad news is that all the well-studied algorithms have a major barrier to deployment: their keys, their encrypted data or signatures, or both, are very large (in some cases, megabits). The community has spent the last decade researching some of the more promising new algorithms that don’t have massive keys and data blobs. In future posts I’ll examine these families in detail, but for now we’ll just note a few facts.

In 2016, the National Institute of Standards and Technology (NIST) started the Post-Quantum Cryptography Standardization process to collect and evaluate potential algorithms. It received 82 submissions, 69 of which were considered complete at the end of 2017. These were evaluated during 2018, and many of them were broken in that time frame; at the beginning of 2019, 26 of the original 69 algorithms were selected for a second round of refinement and evaluation.

In 2020, NIST selected seven finalists and eight alternates. Even among these 15 algorithms, three have now been broken. The number of broken algorithms shows the wisdom of proceeding at a careful pace.

We expect NIST to make a final selection in 2022 and to start the standardization process once the selection is made. When that process is complete, protocols like TLS can pick them up and vendors can start to deploy them.

What should I do?

You should first identify any use of the potentially broken algorithms you have now and determine whether they protect long-term data. This means you need to examine your uses of:

RSA, DSA, ECC, DH – the actual vulnerable algorithms

TLS, SSH, S/MIME, PGP, IPsec – protocols that depend on these vulnerable algorithms

VPNs, Kerberos – protocols that may depend on these vulnerable algorithms

Browsers, encrypted messaging, disk encryption, authentication schemes – applications that (potentially) use these protocols and vulnerable algorithms
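As a starting point for that inventory, the Python sketch below flags algorithm names that belong to the vulnerable families listed above. It assumes you have already collected algorithm identifiers from your systems, for example TLS cipher suite names or OpenSSH algorithm names; the token table, function name, and inventory are hypothetical and deliberately incomplete. Each name is split on common separators and every piece is matched against known family tokens. Symmetric ciphers such as AES come back clean because Shor’s algorithm does not apply to them.

```python
import re

# Hypothetical, incomplete token table: name fragment -> vulnerable family.
# Every family here relies on factoring or discrete logarithms.
VULNERABLE_TOKENS = {
    "rsa": "RSA",
    "dsa": "DSA", "dss": "DSA",
    "dh": "DH", "dhe": "DH", "diffie": "DH",
    "ecdsa": "ECC", "ecdh": "ECC", "ecdhe": "ECC",
    "ed25519": "ECC", "ed448": "ECC",
    "curve25519": "ECC", "curve448": "ECC",
    "x25519": "ECC", "x448": "ECC",
    "nistp256": "ECC", "nistp384": "ECC", "nistp521": "ECC",
}


def vulnerable_families(algorithm_name: str) -> set[str]:
    """Return the quantum-vulnerable families named in an algorithm string."""
    tokens = re.split(r"[-_@.]", algorithm_name.lower())
    return {VULNERABLE_TOKENS[t] for t in tokens if t in VULNERABLE_TOKENS}


# Hypothetical inventory gathered from TLS and SSH configurations.
inventory = {
    "web frontend (TLS)": ["ECDHE-RSA-AES256-GCM-SHA384"],
    "bastion host (SSH)": ["curve25519-sha256", "ssh-ed25519", "aes256-gcm@openssh.com"],
    "legacy VPN": ["DHE-RSA-AES128-SHA"],
}
for system, algorithms in inventory.items():
    found = set().union(*(vulnerable_families(a) for a in algorithms))
    print(f"{system}: {sorted(found) or 'no vulnerable algorithms found'}")
```

A name that matches nothing is not proof of safety; it may simply use a spelling the table does not know, so unmatched entries deserve a manual look.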

You want to:

Make sure users of these systems aren’t relying on them to protect long-term data.

Create a plan to replace the vulnerable algorithms with post-quantum algorithms as they become available. Prioritize those systems that store or transfer your most sensitive data. This will probably mean updating your old operating systems, and maybe even your old hardware.

Identify systems not in your control (third-party websites, etc.) and make a plan on how you can mitigate your exposure to their systems.

Unfortunately, these new algorithms are not yet available, and won’t be until standards can be updated, but you can still get control over your potential exposures by at least knowing where they are. You can find more information in this Q&A with Matt Scholl from NIST.

What’s next?

In future blog posts I’ll explain how the new post-quantum algorithms work and what to watch for when implementing and deploying them.

About the author

Relyea has worked in crypto security on the Network Security Services (NSS) code used in Mozilla browsers since 1996. He joined Red Hat in 2006. He is also the co-chair of the OASIS PKCS #11 Technical Committee.
