Overview

A sandbox is a tightly controlled environment in which an application runs. Sandboxed environments impose permanent restrictions on the resources an application can use, and are often used to isolate and execute untested or untrusted programs without risking harm to the host machine or operating system. Sandboxed containers add a new runtime to container platforms: each pod runs inside a lightweight virtual machine, and the pod's containers start inside that virtual machine, keeping your program isolated from the rest of the system.

Sandboxed containers are typically used in addition to the security features found within Linux containers.

Why are sandboxed containers important?

Sandboxed containers are ideal for workloads that require extremely stringent application-level isolation and security, such as privileged workloads running untrusted or untested code, and that still need a Kubernetes-native experience. By using a sandboxed container, you can further protect your application from remote execution, memory leaks, or unprivileged access by isolating:

- developer environments and privileges scoping

- legacy containerized workloads

- third-party workloads

- shared resources (CI/CD jobs, CNFs, etc.), to deliver safe multi-tenancy

How does Red Hat OpenShift work with sandboxed containers?

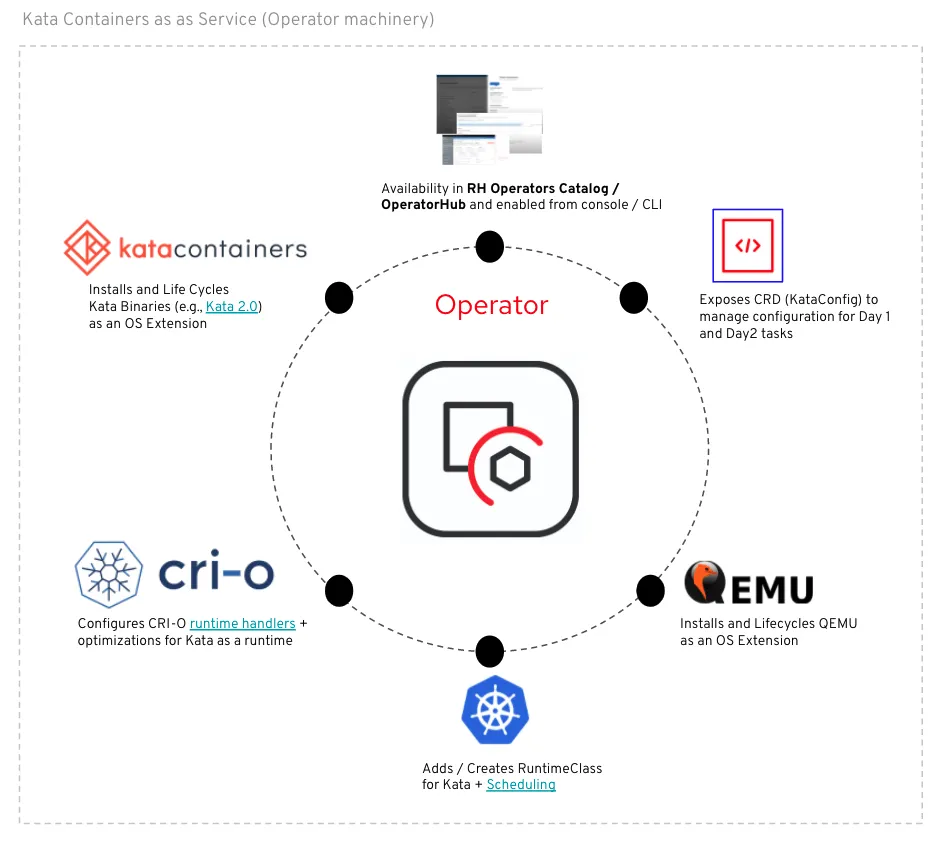

Red Hat OpenShift sandboxed containers, based on the Kata Containers open source project, provides an additional layer of isolation for applications with stringent security requirements. It does so through an Open Container Initiative (OCI)-compliant container runtime that uses lightweight virtual machines to run your workloads in their own isolated kernel. Red Hat OpenShift achieves this through its certified Operator framework, which manages, deploys, and updates the Red Hat OpenShift sandboxed containers Operator.

The Red Hat OpenShift sandboxed containers Operator delivers and continuously updates everything required to make Kata Containers usable as an optional runtime on the cluster. That includes, but is not limited to:

- installing the Kata Containers RPMs, as well as QEMU, as Red Hat CoreOS extensions on the node.

- configuring the Kata Containers runtime at the runtime level, using CRI-O runtime handlers, and at the cluster level, by adding and configuring a dedicated RuntimeClass for Kata Containers.

- providing a declarative configuration to customize the installation, such as selecting which nodes to deploy Kata Containers on.

- checking the health of the overall deployment and reporting problems during the install.
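
To make the RuntimeClass step more concrete, here is a minimal Python sketch of how a dedicated RuntimeClass ties a cluster-level name to a CRI-O runtime handler, and how a pod opts in to the sandboxed runtime through `runtimeClassName`. The RuntimeClass name and handler `kata`, the pod name, and the image URL are assumptions chosen for illustration, not values taken from this article; the Operator creates the actual RuntimeClass on a real cluster, so check yours for the exact name.

```python
import json


def runtime_class(name: str = "kata", handler: str = "kata") -> dict:
    """Build a Kubernetes RuntimeClass manifest.

    The RuntimeClass maps a cluster-level name to a runtime handler
    configured in the container runtime (CRI-O here). Both default
    values are assumptions for this sketch.
    """
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def sandboxed_pod(pod_name: str, image: str, runtime_class_name: str = "kata") -> dict:
    """Build a Pod manifest that requests the sandboxed runtime.

    Setting spec.runtimeClassName is the only change compared with
    a regular pod; everything else stays Kubernetes-native.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": pod_name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical workload name and image, used only for illustration.
    pod = sandboxed_pod("untrusted-job", "registry.example.com/untrusted-job:latest")
    # JSON manifests are accepted wherever YAML manifests are.
    print(json.dumps(pod, indent=2))
```

The printed JSON could be saved to a file and applied like any other manifest. Pods that omit `runtimeClassName` keep using the cluster's default runtime, which is why the sandboxed runtime remains optional.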

Red Hat OpenShift sandboxed containers are now generally available.
