Microsoft SQL Server is one of the most widely used enterprise databases today, and it’s available in several editions, including Express, Developer, Web, Standard, and Enterprise, each differing in capabilities and cost. In this post, I'll explain how to create a SQL Server 2019 instance that runs in a Red Hat OpenShift Container Platform pod and consumes data services from Red Hat OpenShift Container Storage. Then I'll show how to use the HammerDB benchmark to test the performance of the database and the persistent storage. I'll also talk about sample failure scenarios and how to monitor them and see how all this is connected to Sherlock Holmes. Yes—Really!

The foundation

As many organizations started to move from running databases on bare metal/VMs to running containers, Microsoft created SQL Server container images and introduced production support for those images on enterprise Linux platforms. As with any other database in the OpenShift/Kubernetes domain, SQL Server needs persistent storage to hold its data, and Container Storage provides persistent storage on OpenShift.

Together, the technologies underlying Container Storage—Rook, Ceph, and NooBaa—offer a resllient, redundant, and performant storage that SQL Server can use as a backend.

OpenShift/Container Storage on Azure

This post concentrates on Azure as the cloud infrastructure for the test run. Installing OpenShift on Azure via installer-provisioned infrastructure (IPI) is uncomplicated, which means the install-config.yaml specifies the type of instances to deploy in Azure.

Following is my setup:

- Masters x 3 using Standard_D4s_v3.

- Infra nodes x 3 using Standard_L16s_v2: These nodes run OpenShift infrastructure components and Container Storage. This particular instance type has 2 NVMe direct-attached storage devices attached to each VM, and these devices are used for the OSDs.

- Worker nodes x 3 using Standard_D16s_v3: These nodes are where the SQL Server pods run.

SQL Server deployment

In OpenShift, deploying SQL Server is pretty straightforward. Before creating the deployment, you should create two objects. The first should be a Persistent Volume Claim (PVC) based on the ocs-storagecluster-ceph-rbd storage class. The second should be a secret to hold the SQL Server server admin password.

Following is an example using a simple deployment yaml. Note: I deliberately omitted a few options from the YAML file to shorten it for the purposes of this post.

--- apiVersion: apps/v1 kind: Deployment metadata: name: sqlserver spec: strategy: ... template: metadata: labels: app: sqlserver spec: containers: - name: sqlserver image: mcr.microsoft.com/mssql/rhel/server:2019-latest imagePullPolicy: "Always" ports: - containerPort: 1433 volumeMounts: - mountPath: /var/opt/mssql name: sqlserverdb env: - name: MSSQL_PID value: "Developer" - name: ACCEPT_EULA value: "Y" - name: MSSQL_SA_PASSWORD valueFrom: secretKeyRef: name: sqlserver key: SA_PASSWORD resources: ... volumes: - name: sqlserverdb persistentVolumeClaim: claimName: my_ocs_pvc

As you can see, the deployment YAML is no different than for other database deployments in OpenShift. Just make sure that, for testing purposes, you use the “Developer” edition and also create an SA_PASSWORD that’s strong enough and follows these rules.

Once you run the deployment, your SQL Server 2019 pod will be up and running. To access the database, we must create a service that connects to the database on the designated port (1433).

HammerDB

HammerDB is a common database benchmark created initially for use with Oracle, SQL Server, and Db2 and, later, for MySQL, PostgreSQL, Redshift, and even some NoSQL databases such as Redis. (You can find the full list here). While HammerDB is not an official TCP-C or TPC-H implementation, it does base its benchmark on these two tests for OLTP (TPC-C) and analytics (TPC-H) because they’ve proven to be well-suited to testing environments in which your databases run.

For this testing, I used the TPC-C based benchmark. While HammerDB has many options, variables, and flags, for this project I focused on the most basic options. HammerDB also allows you to run from a GUI or CLI, but I used only the latter.

As a next step, you need to create another pod running one of the supported HammerDB operating systems and then install HammerDB on it. (Note: This procedure is outside the scope of this post.) This pod will be used to run HammerDB.

Once you have a database running, you create data to be used with the test. HammerDB uses a Tcl interpreter to run all scripts. Following is an example of a simple Tcl script for building the database schema and populating it with data. (Note: vu stands for “virtual user.”)

#!/bin/tclsh dbset db mssqls dbset bm TPC-C diset connection mssqls_tcp true diset connection mssqls_pass <whatever password you set in your secret> diset connection mssqls_server <the DB service cluster-ip> diset connection mssqls_linux_server <the DB service cluster-ip> diset connection mssqls_port 31433 <the port the service uses> diset connection mssqls_authentication sql diset tpcc mssqls_count_ware <number of warehouses> diset tpcc mssqls_num_vu <number of users to create the warehouses> print dict buildschema vwait forever # end of script

We run this script, the bare minimum, from the “hammerdbcli” client. The most important factor here is the number of warehouses you want to create. Think of a warehouse as an online store that sells various products. The more we create, the more storage we consume and also the more users who can use the store.

Once the schema is created and populated (the database that will be created is named “tpcc”), we can run our first test, again using Tcl. The gist of the script is similar to the previous one (i.e., type of database, IP address, password) with some changes:

#!/bin/tcl # # connection info here… # diset tpcc mssqls_driver timed diset tpcc mssqls_rampup <rampup in minutes> diset tpcc mssqls_duration <run duration> vuset logtotemp 1 loadscript puts "Run starts..." vuset vu <how many users will run> vucreate vurun # end of script

Again, these are the minimal commands to use to run the test. The output will be long and contingent on what you choose to show, but the most important part is in the form of:

... Vuser 1:TEST RESULT : System achieved 5678 SQL Server TPM at 2345 NOPM ...

The information that’s returned includes the transactions per minute (TPM) we achieved (post ramp-up time) and the number of new orders per minute (NOPM). We need both numbers because TPM is not really something we can compare between different database software (e.g., Oracle to SQL Server on the same platform/hardware) because databases are designed and operate differently. In contrast, the number of new orders is relatively easy to compare.

What about Sherlock…?

So how is all this related to the detective Sherlock Holmes? If you’re in the business of testing storage performance, you realize the best way to do so is with a real-life workload. This is the motivation behind the Sherlock project. Sherlock provides an easy-to-deploy database for a Kubernetes/OpenShift cluster and then runs different database workloads that stress not only the storage but the cluster in general.

With Sherlock, the previous steps we looked at to deploy a SQL server pod and then run HammerDB on it are straightforward. All we need to do is calculate how many databases we want per node (based on our worker nodes resources), and Sherlock will then create the databases for us, making sure they’re spread evenly across the cluster, and run workloads against each database in parallel across all nodes, calculating averages per single database and collecting operating system statistics we can view later. Sherlock currently supports MySQL, PostgreSQL and SQL Server, using sysbench, pgbench, and HammerDB.

The idea behind Sherlock is to allow those with less experience creating and running databases in Kubernetes/OpenShift to do so easily from their laptops. You can view all the options, examples, and how-to information in the Sherlock project github page. In this post, I concentrate on running some Container Storage failures scenarios using Sherlock.

Container Storage failure scenarios

First, let's look at our infrastructure. Recall that our worker nodes consist of Azure’s Standard_L16s_v2 instances. Each instance has 16 CPUs and 128GB of memory, so we set up Sherlock to create 4 databases per worker node, limiting each SQL Server pod to 2 cores and 16GB of memory. Each database is using a 50GB PVC. We do all this in the file sherlock.config and, using the “create_databases” script, all databases, we create PVCs and services.

This is what my “sqlserver” project pod list looked like after running the script:

Once all the databases are up and running, to run HammerDB on all 12 databases in parallel is as simple as running the “run_database_workload-parallel” script.

Here’s a few of the variables we used in the Sherlock configuration file (sherlock.config) for our runs:

HAMMERDB_WAREHOUSES=50 HAMMERDB_RUN_VU=50 HAMMERDB_RAMPUP=60 HAMMERDB_RAISE_ERROR=false WORKLOAD_RUNTIME=600 BENCHMARK_POD_MEM_LIMIT=3Gi STATS=true $ ./run_database_workload-parallel -b hammerdb -j run -c sherlock.config -n myrun

Some of these variables are self-explanatory. For example, the ramp-up and runtime variables are in seconds, and basically impact how long the tests will run. The raise error variable is important because it signals HammerDB to continue running the workload in case there is a short period where IOs are slowed or “paused” or errored. We’ve also instructed Sherlock to run the performance statistics collection pod (“stats” pod) on each of the nodes.

The script will start and run a HammerDB job per each of the databases we deployed and will wait for the jobs to finish. Because we also instructed Sherlock to collect performance data, we have a job per nodes per type of node (worker that runs the databases or infrastructure that runs Container Storage):

Once all jobs are done, all the pod logs and the configuration we used for this run are created under a directory named after the run name we provided (the “-n myrun” in the script).

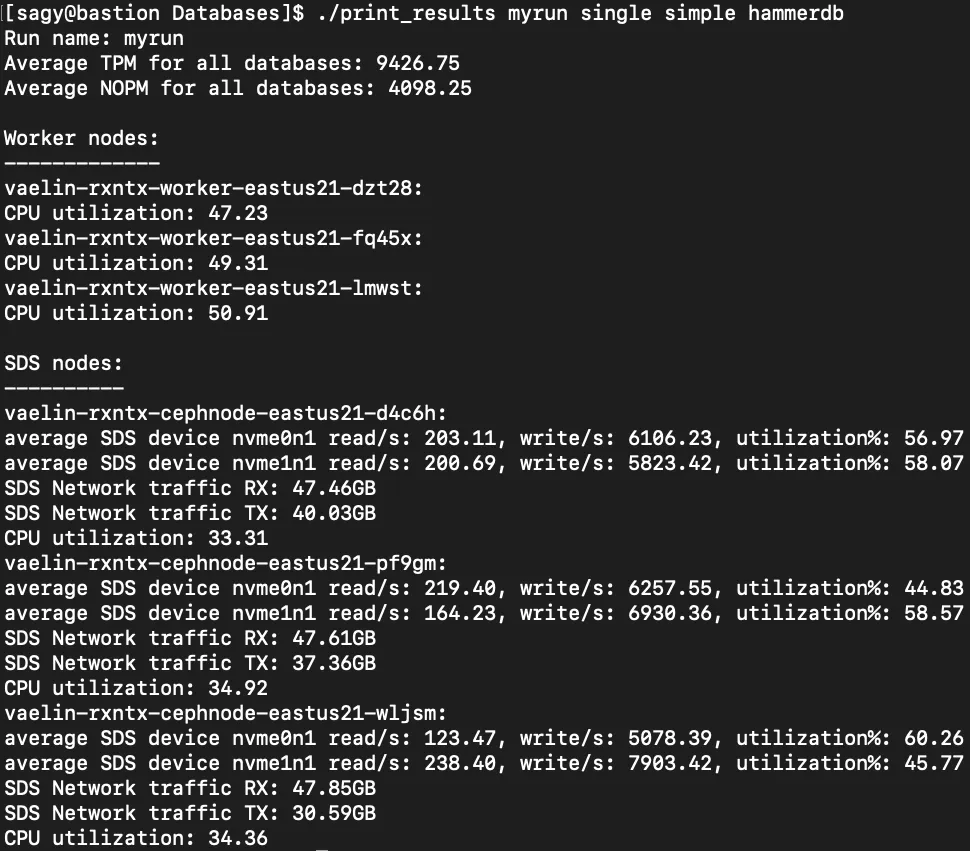

We can use the “print_results” script to show us the results, like TPM/NOPM and whatever metrics were collected on the nodes.

Fault and resiliency

Now that we know how to easily deploy many databases and run HammerDB on them using Sherlock, let's get to some resiliency testing. The Sherlock project provides a script called “run_loops” that basically calls the “run_database_workload-parallel” script in a loop (with a rest between each run) and collects all the runs information under one directory. What I did was to modify the loop script to also inject faults in the nodes that run Container Storage.

In my own tests I’ve used two types of fault injection:

- Deleting (“killing”) an OSD pod.

- Rebooting an infra node that contains two OSD pods.

We measure the impact in our HammerDB tests two ways:

- We first run the same test with no faults and compare TPM/NOPM numbers average numbers between all “good” runs and all “bad” runs.

- We make sure that whatever VUs that started the run finished it successfully.

Deleting an OSD pod

OSDs are the building blocks of the Ceph data plane. In Container Storage, an OSD pod basically corresponds to a storage device that the Ceph cluster consumes. The Ceph cluster consumes and aggregates all the OSDs into a logical storage layer that the application can use. The easy way to think of it is that OSD = a storage device.

In our configuration, each of the Standard_L16s_v2 instances have two storage devices, meaning each instance runs two OSD pods. I ran the tests in a loop 10 times. Each run was 10 minutes (with 1 minute of warm-up), and each run performed a “oc delete pod rook-ceph-osd-XYZ” randomly on one of the 6 OSD pods available, each time during a different minute during the HammerDB.

With Rook monitoring the Ceph components and also using Kubernetes deployments for the OSD pods, deleting an OSD pod causes Kubernetes to bring up another OSD right away to support the same NVMe device the “deleted” OSD pod used before. This happens so fast in my tests that the Ceph cluster didn't declare the OSD that we killed “down.”

In my tests on Azure, it took an average of 3.15 seconds for Rook to create a new OSD pod instead of the one that was deleted.

The fault injection of deleting an OSD pod, that “blip” of moment when the OSD pod was deleted and a new one had to be created, did not impact either the TPM/NOPM numbers or the number of VUs who finished the test.

Restarting a node/instance running Container Storage

In this scenario, I rebooted one of the infrastructure nodes running Container Storage in the Azure cloud portal during each of the HammerDB runs. If you recall, each of these nodes held two direct-attached storage devices, meaning 2 OSD pods were running on each node. Therefore, removing one node removes 2 of the 6 OSDs on the Ceph cluster on which Container Storage is built, essentially removing ⅓ of the storage.

While Ceph is built for massively large mesh clusters and is typically used with hundreds or thousands of storage devices, the Ceph cluster remains intact and continues to provide IOs even in this small-scale configuration.

One thing that does happen when we reboot one of the infra/Container Storage nodes is that Ceph marks these two OSDs as down and promotes different “up” OSDs as the primary location of chunks that were previously on the two “downed” OSDs. This is communicated to the Ceph clients (the csi-rbdplugin pods). After a short period (the time it takes Container Storage to reboot and for OpenShift to acknowledge that the node is again part of the OpenShift cluster), the node is up again, and the two OSDs and their direct-attached devices are again connected to the Ceph cluster.

Ceph will also start the process of rebuilding whatever chunks were modified during this reboot to ensure we get back to 100% resiliency (3 copies of data) as quickly as possible. This also means that in these tests I had to monitor that Ceph finished this rebuild process and only then started the next run of HammerDB, thereby effectively comparing “apples to apples.”

Even in this type of fault injection, all VUs in each HammerDB run finished the run successfully. I saw a very small dip in performance, which can be easily attributed to the phase where the two OSDs have gone missing and the phase in which the rebuilt process started, which can be IO intensive. This test was run in a more manual fashion, six times. In each test, I rebooted a different Container Storage node so that throughout all tests each Container Storage node was rebooted twice and each reboot was done during a different time into the run.

Summary

In this post, I showed how easy it is to deploy SQL Server on OpenShift using Container Storage as the persistent storage layer. I used the Azure Cloud as the infrastructure and as such leveraged the Azure direct-attached block device instance type.

I also explained a little about HammerDB and also how easy it is to deploy databases and test the performance of storage on Kubernetes/OpenShift using the Sherlock project. Introducing fault injections while running performance tests shows zero impact for an OSD pod restart (delete pod) and negligible impact when we reboot one of the nodes running Container Storage.

Über den Autor

Sagy Volkov is a former performance engineer in ScaleIO, he initiated the performance engineering group and the ScaleIO enterprise advocates group, and architected the ScaleIO storage appliance reporting to the CTO/founder of ScaleIO. He is now with Red Hat as a storage performance instigator concentrating on application performance (mainly database and CI/CD pipelines) and application resiliency on Rook/Ceph.

He has spoke previously in Cloud Native Storage day (CNS), DevConf 2020, EMC World and the Red Hat booth in KubeCon.

Ähnliche Einträge

Refactoring isn’t just technical—it’s an economic hedge

Migrate your VMs faster with the migration toolkit for virtualization 2.11

Understanding AI Security Frameworks | Compiler

Data Security And AI | Compiler

Nach Thema durchsuchen

Automatisierung

Das Neueste zum Thema IT-Automatisierung für Technologien, Teams und Umgebungen

Künstliche Intelligenz

Erfahren Sie das Neueste von den Plattformen, die es Kunden ermöglichen, KI-Workloads beliebig auszuführen

Open Hybrid Cloud

Erfahren Sie, wie wir eine flexiblere Zukunft mit Hybrid Clouds schaffen.

Sicherheit

Erfahren Sie, wie wir Risiken in verschiedenen Umgebungen und Technologien reduzieren

Edge Computing

Erfahren Sie das Neueste von den Plattformen, die die Operations am Edge vereinfachen

Infrastruktur

Erfahren Sie das Neueste von der weltweit führenden Linux-Plattform für Unternehmen

Anwendungen

Entdecken Sie unsere Lösungen für komplexe Herausforderungen bei Anwendungen

Virtualisierung

Erfahren Sie das Neueste über die Virtualisierung von Workloads in Cloud- oder On-Premise-Umgebungen